justice system

The US prison system continues to fail, so why does it still exist?

The ‘reasonable person’ represents someone who is both common and good.

The idea behind the law was simple: make it more difficult for online sex traffickers to find victims.

A new look at existing data by LSU researchers refutes the Trump administration’s claims.

An elephant at the Bronx Zoo has become a cause célèbre for animal rights activists.

What do we want to do with convicted criminals? Penology has several philosophies waiting to answer that question.

The Labour Economics study suggests two potential reasons for the increase: corruption and increased capacity.

Researchers are using technology to visualize the complex concepts of racism, as well as its political and social consequences.

The programming giant exits the space due to ethical concerns.

In classical liberal philosophy, individual pursuit of happiness is made possible by a framework of law.

An algorithm produced every possible melody. Now its creators want to destroy songwriter copyrights.

A computer coder and a lawyer decide they have a right to speak for all the songwriters that ever lived, those who are alive today, and all those yet to be born.

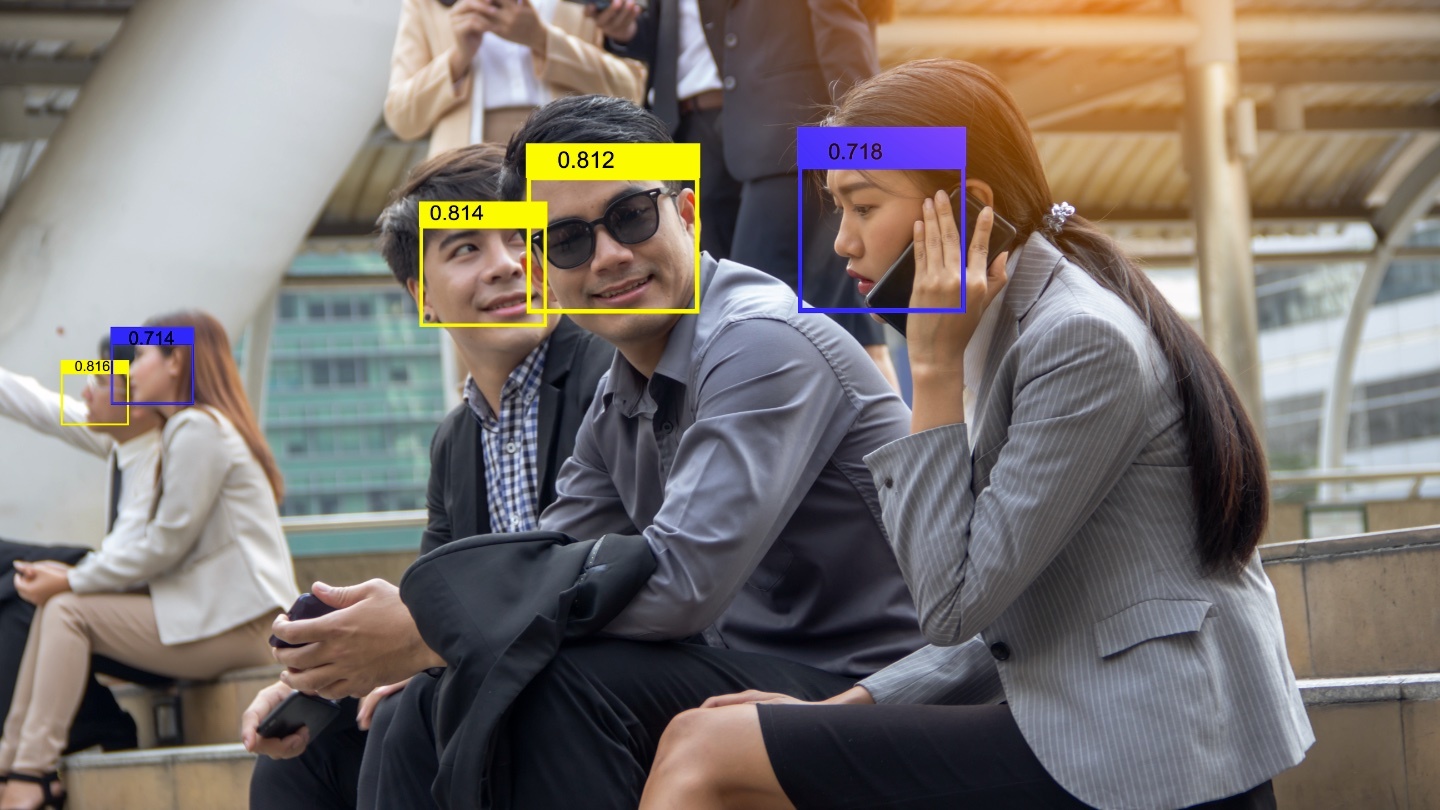

Can AI make better predictions about future crimes?

Laws can’t stand by themselves. Professor James Stoner explains why.

When it comes to foreign intervention, we often overlook the practices that creep into life back home.

Does the President get to decide when to ignore the law?

A punishment is handed down for performing shocking research on human embryos.

David Bienenstock has made it his mission to keep the history of cannabis alive.

“For decades, a national anti-cruelty law was a dream for animal protectionists. Today, it is a reality.”

A federal court ruled that the state of Kentucky was wrong to deny a man’s request for a personalized license plate reading “IM GOD.” Here’s why that’s a win against atheist discrimination.

Wide Angle Motion Imagery (WAMI) is a surveillance game-changer. And it’s here.

We tend to promote foreigners by broadcasting their economic and scholarly value, instead of their intrinsic humanity.

Gun safety laws have a historical precedent in the 1939 court case U.S. v. Miller.

Researchers discover government agencies use facial recognition software on photos from local DMVs.

Studies on stress and memory have often given conflicting results.

The city council voted in favor of the ban by a margin of 8 to 1.

“Prohibitionist strategy is unsustainable,” reads the policy plan.

An investigation finds the cause of failed NASA launches and $700 million in losses.

The U.S. has a talent shortage and the formerly incarcerated have paid their debt to society. Let’s solve two problems with one idea.

Healthy Housing Foundation has purchased four properties in Los Angeles, with more planned.