privacy

In the name of fighting horrific crimes, Apple threatens to open Pandora’s box.

Companies can identify you from your music preferences, as well as influence and profit from your behavior.

And is anyone protecting children’s data?

The attack on the Capitol forces us to confront an existential question about privacy.

Neuroscientists and ethicists want to ensure that neurotechnologies remain benevolent.

Here’s why you may want to opt out of Amazon’s new shared network.

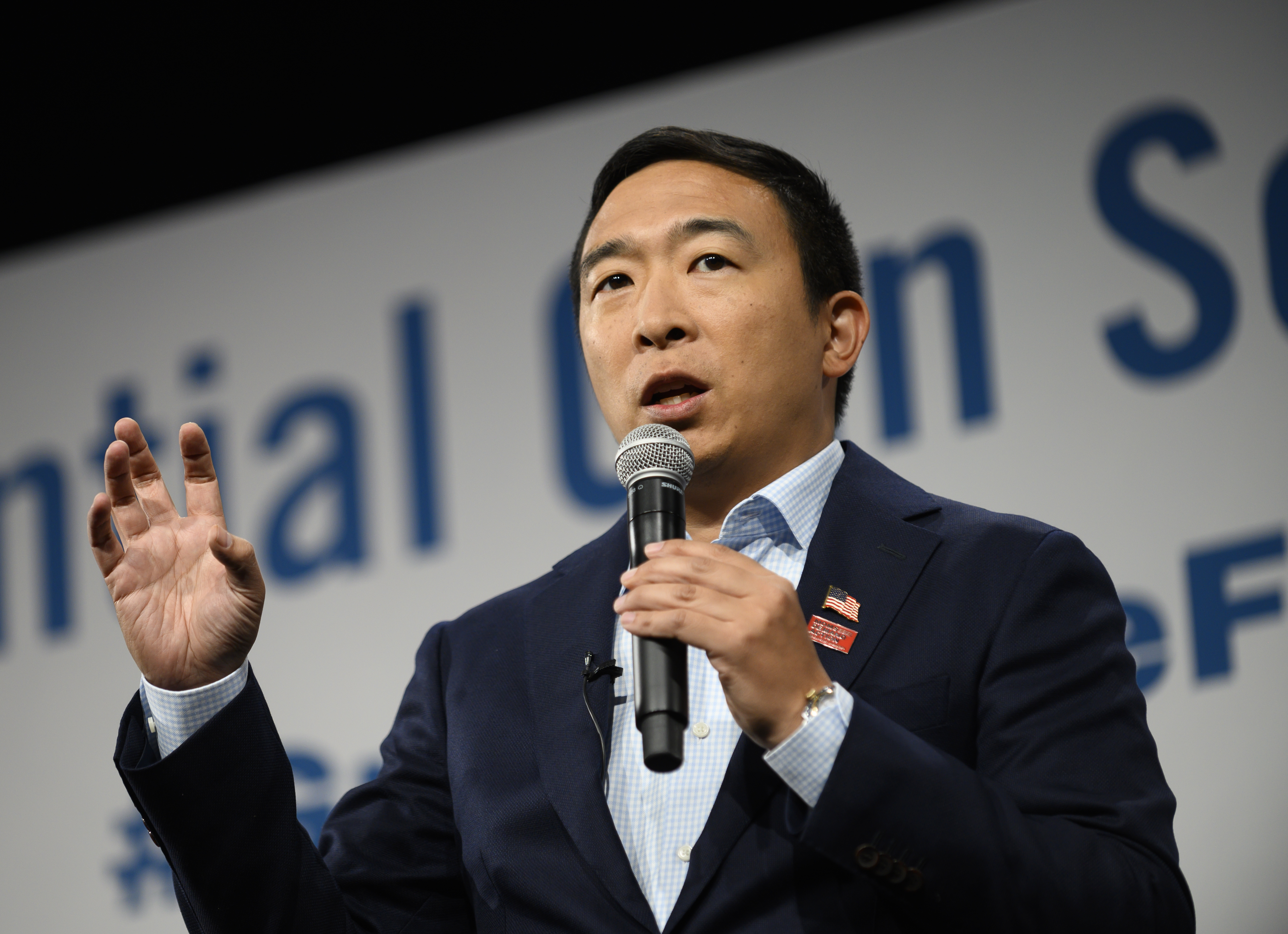

“Our data should be ours no matter what platforms and apps we use,” Yang said.

The system is basically facial recognition technology, but for cars.

A report from the New York Times raises questions over how the teletherapy startup Talkspace handles user data.

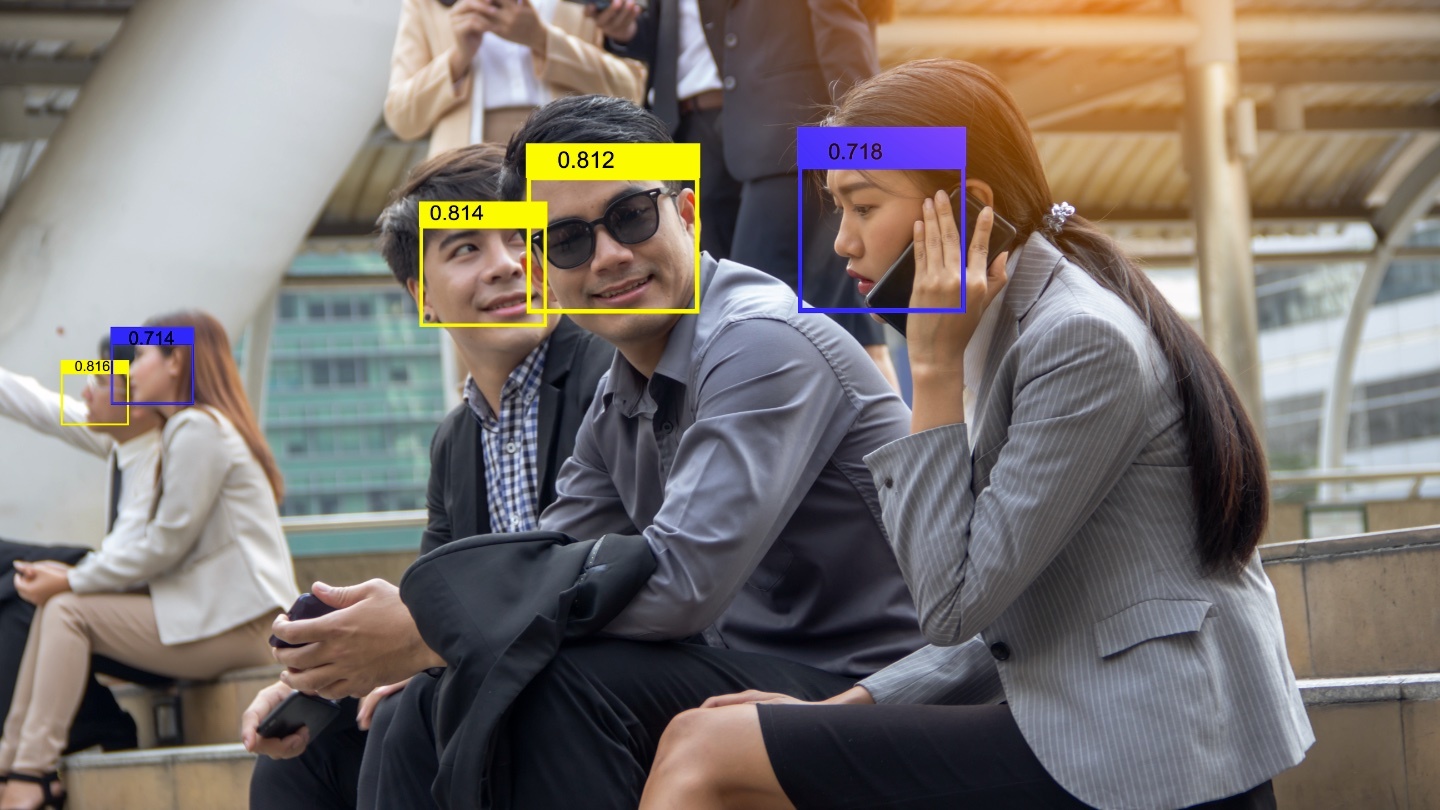

A new study explores how wearing a face mask affects the error rates of popular facial recognition algorithms.

Innovative use of blockchain tech, data trusts, algorithm assessments, and cultural shifts abound.

The programming giant exits the space due to ethical concerns.

Got any embarrassing old posts collecting dust on your profile? Facebook wants to help you delete them.

The program aims to notify people after they’ve come in close contact with someone who tested positive.

The system can even be designed to send alerts to employees when they’ve come too close to a coworker.

Video meetings on the popular platform don’t seem to offer end-to-end encryption as advertised.

If its claims are true, Clearview AI has quietly blown right past privacy norms to become the nightmare many have been fearing.

What happens when a major social media platform’s business model abuses user trust?

The Response Act calls on schools to increase monitoring of students’ online activity.

“At this point our data is more valuable than oil,” Yang said. “If anyone benefits from our data it should be us.”

Before we release new technology into the ether, we need to put safeguards in place so that bad actors can’t misuse it.

Frank W. Abagnale says scammers don’t discriminate — here’s what you can do to protect yourself.

But some say the settlement is a slap on the wrist.

Wide Angle Motion Imagery (WAMI) is a surveillance game-changer. And it’s here.

These tests report on more than just your risk for cilantro aversion and your ice-cream flavor preference.

The fine Facebook just paid was huge, but many in tech say it wasn’t nearly enough to protect your data.

Researchers discover government agencies use facial recognition software on photos from local DMVs.

The company must also appoint an independent privacy committee to its board of directors.