Is there a 5th fundamental force of nature?

- Back in the late 1800s, only two forces, electromagnetism and gravity, were thought to describe all of the interactions that occurred in the Universe.

- Over the 20th century, new phenomena resulted in the discovery of two more fundamental forces: the strong and weak nuclear forces, revealed by precise high-energy experiments.

- Now, in the 21st century, more precise experiments than ever before are occurring, and each anomaly holds the tantalizing possibility of revealing a new fundamental force. Will we ever find a 5th?

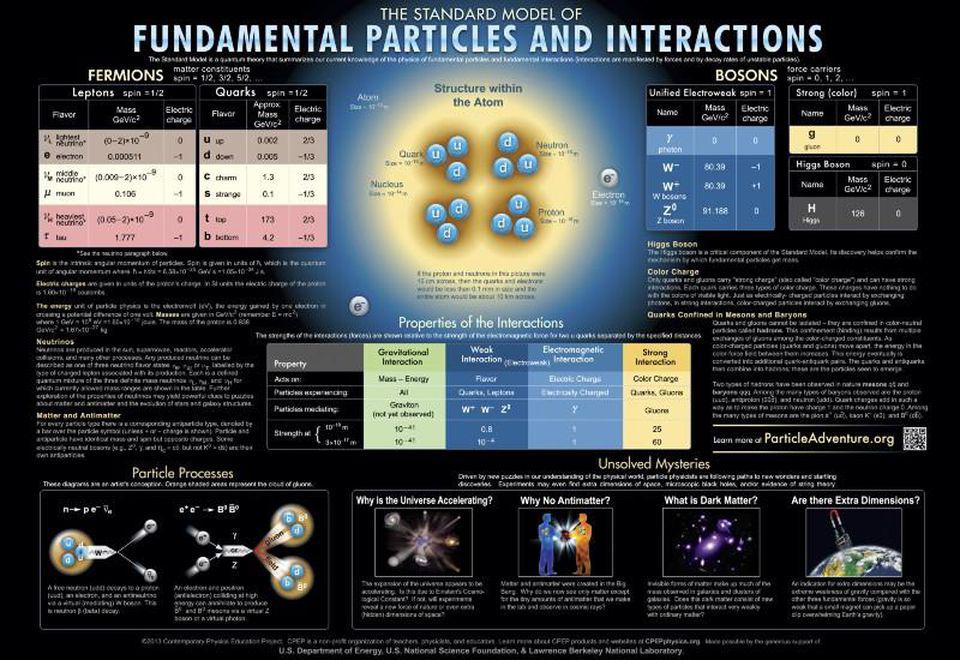

Despite all we’ve learned about the nature of the Universe — from a fundamental, elementary level to the largest cosmic scales fathomable — we’re absolutely certain that there are still many great discoveries yet to be made. Our current best theories are spectacular: quantum field theories that describe the electromagnetic interaction as well as the strong and weak nuclear forces on one hand, and General Relativity describing the effects of gravity on the other hand. Wherever they’ve been challenged, from subatomic up to cosmic scales, they’ve always emerged victorious. And yet, they simply cannot represent all that there is.

There are many puzzles that hint at this. We cannot explain why there’s more matter than antimatter in the Universe with current physics. Nor do we understand what dark matter’s nature is, whether dark energy is anything other than a cosmological constant, or precisely how cosmic inflation occurred to set up the conditions for the hot Big Bang. And, at a fundamental level, we do not know whether all of the known forces unify under some overarching umbrella in some way.

We have clues that there’s more to the Universe than what we presently know, but is a new fundamental force among them? Believe it or not, we have two completely different approaches to try and uncover the answer to that.

Approach #1: Brute force

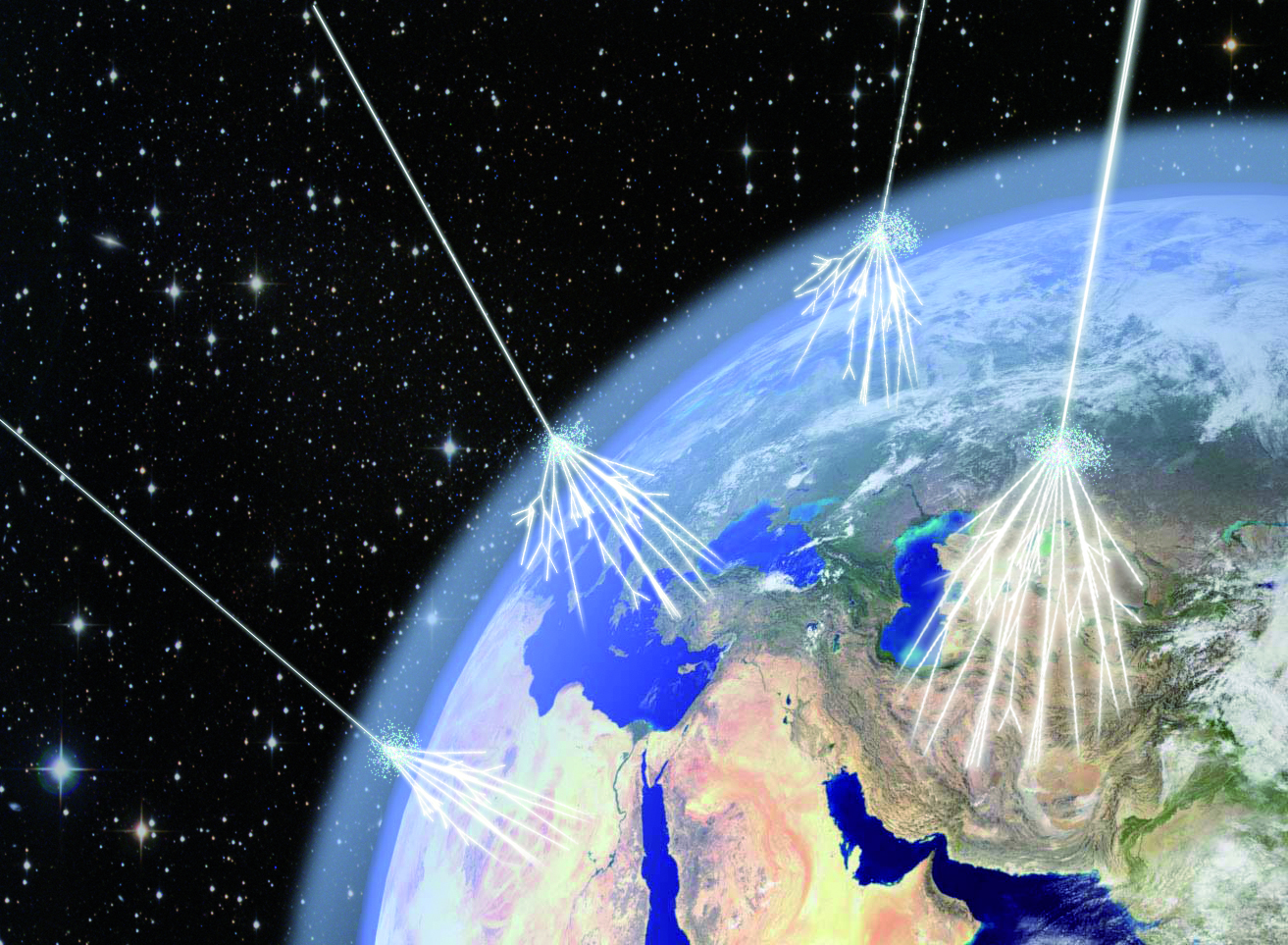

If you want to uncover something hitherto unknown in the Universe, one approach is to simply probe it in a more extreme way than you ever have before. Plans to:

- build a telescope to see farther back in time or at higher resolution than ever before,

- build a particle accelerator capable of colliding particles at greater energies than ever before,

- or devise an apparatus to cool matter down closer to absolute zero than ever before,

are all examples of taking this “brute force” style of approach. Probe the Universe under more extreme conditions than you’ve ever probed it before, and it just might reveal something shocking, surprising, and, most importantly, compelling to look at.

This is an option we should always be exploring when it comes to the Universe, as our current limits on all of these are only set by the combined limits of our technology at the time we chose to make the last great investment on these fronts. With improved technologies and the ability to invest anew in these (and similar) approaches, we can continuously push the extreme limits of human knowledge at all of the frontiers that matter. In the sciences, we talk about pushing past our previous limits in terms of opening up new “discovery space,” and sometimes — like when we cracked open the atomic nucleus in the 20th century — that’s precisely where new fundamental discoveries will emerge from.

Approach #2: High-precision

Alternatively, you can recognize that our current theories make very precise predictions, and that if we can experimentally make measurements to those same high precisions, we can see if there are any departures from predictions that are borne out by experiments and observations. This can come about in a variety of ways, including:

- from examining ever-greater numbers of particles, collisions, or events,

- from controlling the conditions of your experimental apparatus to greater precision,

- from increasing the purity of your samples,

and so on. Basically, each time you endeavor to increase the signal-to-noise ratio of what you’re trying to measure, whether through statistics, improved experimental procedures, or by eliminating known sources of error, you can increase the precision at which you can probe the Universe.
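As a rough illustration of the statistics route, the uncertainty on an averaged measurement shrinks as one over the square root of the number of independent events. The sketch below assumes independent measurements that all share the same per-event scatter; the function name and the numbers are hypothetical, chosen only for illustration.

```python
import math


def standard_error(per_event_sigma: float, n_events: int) -> float:
    """Uncertainty on the mean of n independent, equally noisy measurements."""
    if n_events <= 0:
        raise ValueError("n_events must be positive")
    return per_event_sigma / math.sqrt(n_events)


# Hypothetical per-event scatter of 1.0 (arbitrary units), for illustration only.
for n in (100, 10_000, 1_000_000):
    print(f"{n:>9} events -> uncertainty on the mean: {standard_error(1.0, n):.4f}")
```

Under these assumptions, each extra decimal place of precision costs a hundredfold increase in the number of events, which is part of why high-precision experiments run for years.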

It’s these high-precision approaches which, in many ways, are the most promising for revealing a new force: if you see an effect (even in the 10th or 12th decimal place) that disagrees with your theoretical predictions, it could be a hint that there’s a new force or interaction at play. We haven’t robustly discovered one beyond the known four just yet, but there are many high-precision areas of investigation where this remains a possibility.

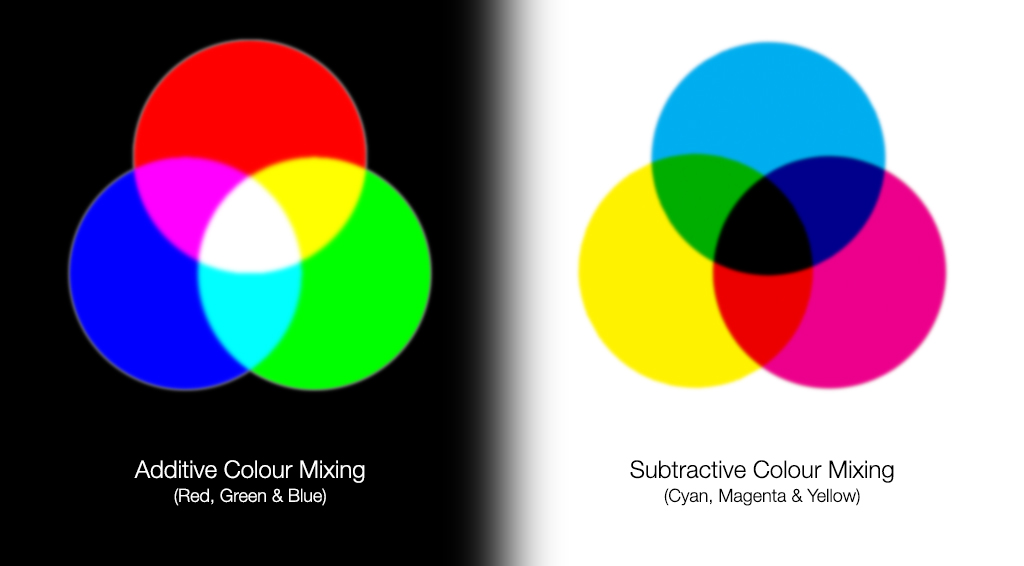

The key is to look for what we call “anomalies,” or places where theory and experiment disagree. In 2015, a nuclear physics experiment gave results that seemed to conflict with the very specific predictions for what should happen when an unstable beryllium-8 nucleus is created in an excited state. In theory, beryllium-8 normally decays into two helium-4 nuclei. In an excited state, it should decay into a photon and two helium-4 nuclei. And, above a certain photon energy, there should be a chance that instead of a photon and two helium-4 nuclei, you’d get an electron-positron pair and two helium-4 nuclei.

The experiment measured, for events that produced an electron-positron pair, the angle that those two particles made relative to one another. The 2015 experiment, led by Attila Krasznahorkay, found that there was a slight but meaningful excess of events where the electron and positron went off at large angles relative to one another: about 140 degrees and greater. This has become known as the Atomki anomaly, and many have suggested that a new particle, and a new, fundamental interaction (or fifth force) could be the key explanation behind these findings.

Not only are there multiple possible explanations behind this result, but the simplest is perhaps the most sobering: that there’s been an error somewhere along the way. In principle, it could mean:

- an error in the theoretical calculations that were done,

- a measurement error at any point along the way,

- or an experimental error related to the setup of the experiment and the way it was conducted.

In this particular case, the group in question has previously produced three results each claiming the discovery of a fifth force and hints of a novel particle, but none of them panned out. Miscalibrated equipment was a culprit in those cases, with an inconsistently efficient spectrometer to blame for some of the earlier results.

There is a strong suspicion, based on the published method of calibration used for this set of experiments, that an incorrect calibration of the experiment at intermediate angles, from about 100-125 degrees, is what’s behind the supposed excess at large angles. Although many are still chasing after this anomaly in pursuit of a fifth force, a superior experiment known as PADME should settle the issue once and for all.

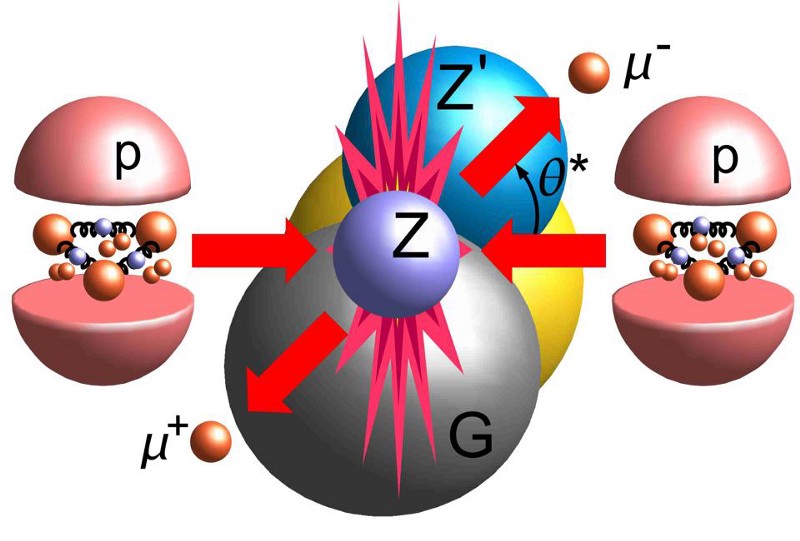

One extremely interesting anomaly that has appeared in physics in recent years comes from the Muon g – 2 (pronounced “gee minus 2”) experiment, which has, to much (well-deserved) fanfare, just released its latest results, confirming earlier experimental results that seem to disagree with theoretical predictions. In physics, the quantity g that we measure is the g-factor, often loosely called the gyromagnetic ratio: a dimensionless number that sets the strength of a spinning particle’s magnetic moment relative to its spin, charge, and mass.

- The naive prediction for g, from plain old regular quantum mechanics, is that g would equal 2 for both the electron and its more massive sibling particle: the muon.

- The more complex prediction involves using quantum electrodynamics: the quantum field theory that describes the electromagnetic interaction. Instead of the prediction that g would equal 2, the prediction comes closer to g equaling 2 + α/π, where α is the fine structure constant (about 1/137.036) and π is the familiar 3.14159…, the ratio of a circle’s circumference to its diameter.

- But the full prediction would involve not only quantum electrodynamics, but all of the quantum forces and interactions in our Universe, including the ones that involve nuclear particles like quarks and gluons. That prediction, quite explicitly, is slightly different from the plain old QED prediction, and can be made to about 12 significant figures.

Wherever you have very precise predictions and the capability of gleaning very precise experimental results, it’s an experiment you simply have to do: it’s a chance to test nature to the greatest precision of all time in a way it’s never been probed before. If there’s an anomaly (i.e., a mismatch between theoretical predictions and experimental results), it just might be a hint of new physics, and one form of new physics that might occur is the discovery of a novel fundamental force.

Experimentally, we know now, from Fermilab’s latest results, that the measured g – 2 for the muon has been determined to be 0.00233184110 ± 0.00000000047. That’s a very, very precise answer, and clearly (albeit slightly) different from the 0.00232281945 that you’d get from the simple, first-order QED contribution.
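The first-order figure can be checked with a few lines of arithmetic. The sketch below assumes the rounded value of the fine structure constant quoted earlier (1/137.036) and the measured value and uncertainty given above; the variable and function names are illustrative only.

```python
import math

# Fine structure constant, using the rounded value quoted in the text.
ALPHA = 1 / 137.036

# Measured g - 2 for the muon and its uncertainty, as quoted in the text.
MEASURED_G_MINUS_2 = 0.00233184110
MEASURED_UNCERTAINTY = 0.00000000047


def first_order_qed_g_minus_2(alpha: float = ALPHA) -> float:
    """Leading QED contribution to g - 2: the alpha/pi term in g = 2 + alpha/pi."""
    return alpha / math.pi


first_order = first_order_qed_g_minus_2()
gap = MEASURED_G_MINUS_2 - first_order

print(f"first-order QED g - 2:      {first_order:.11f}")
print(f"measured minus first-order: {gap:.3e}")
print(f"gap / experimental uncertainty: {gap / MEASURED_UNCERTAINTY:.0f}")
```

The gap computed here is not itself the anomaly: it is what the higher-order QED, weak, and strong contributions are expected to make up, which is why the full theoretical prediction matters so much.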

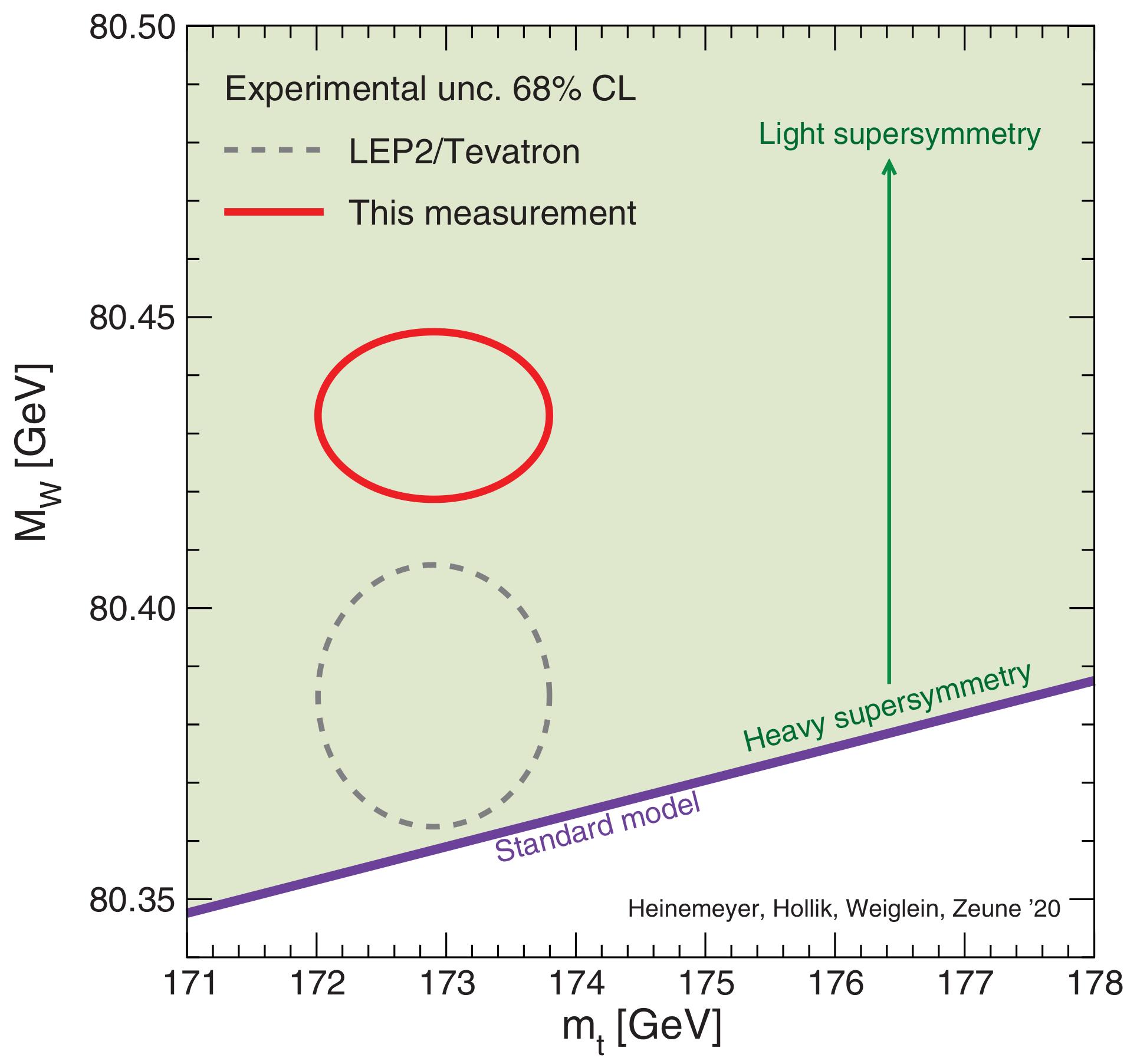

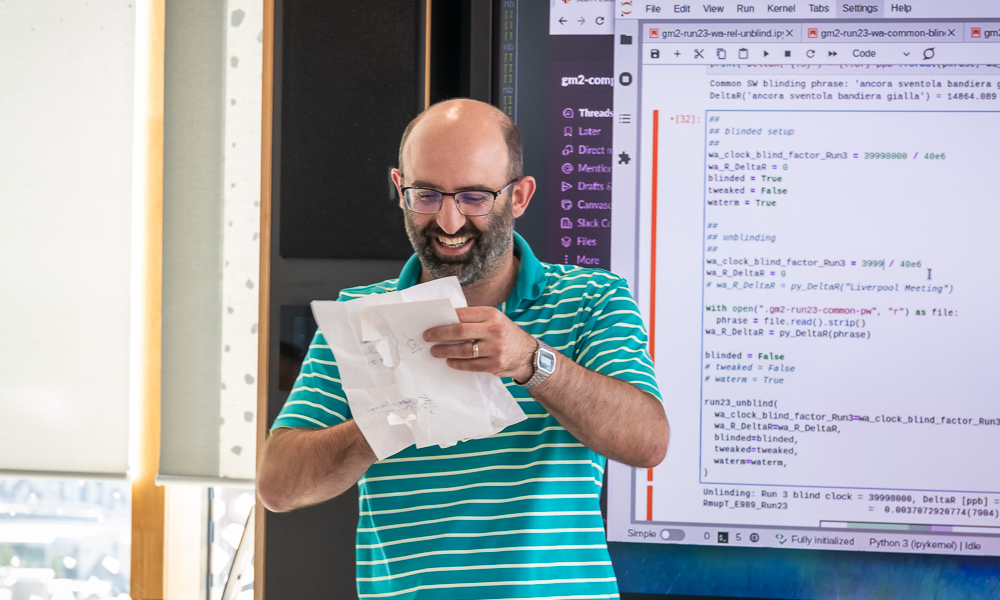

Claims have been made that this deviates from the Standard Model’s predictions at that vaunted 5-sigma significance, indicating that there’s only a 1-in-3.5 million chance of this being a statistical fluke: significant enough to warrant claims of a discovery. When Fermilab scientists unveiled the experimental results, we saw that their analysis really had been conducted “blind” all along. However, in this instance, the theoretical uncertainties are now known to be much larger than they were previously estimated to be, throwing the significance of this “discovery” into doubt.
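The 1-in-3.5 million figure follows from the tail of a normal distribution. As a minimal sketch, assuming purely Gaussian fluctuations and counting only deviations in one direction (the function name is illustrative):

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuation exceeds n_sigma in one direction."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


p = one_sided_p_value(5.0)
print(f"5 sigma -> p = {p:.3e}, or about 1 in {1 / p:,.0f}")
```

This converts only a statistical significance into a probability; it says nothing about systematic errors or theoretical uncertainties, which is exactly where the trouble lies here.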

The issue is as follows.

- It’s very straightforward to calculate the contributions to the muon’s predicted gyromagnetic ratio from electromagnetic effects; the uncertainties there are only about 1 part per billion.

- Similarly, the effects of the weak nuclear interaction can also be well quantified, and the uncertainties there are also small: about 10 parts per billion.

- (The experimental uncertainties, for comparison, are about 190 parts per billion, if you combine all of the data released from the g – 2 experiment, including the data taken earlier at Brookhaven, before the experiment moved to Fermilab.)

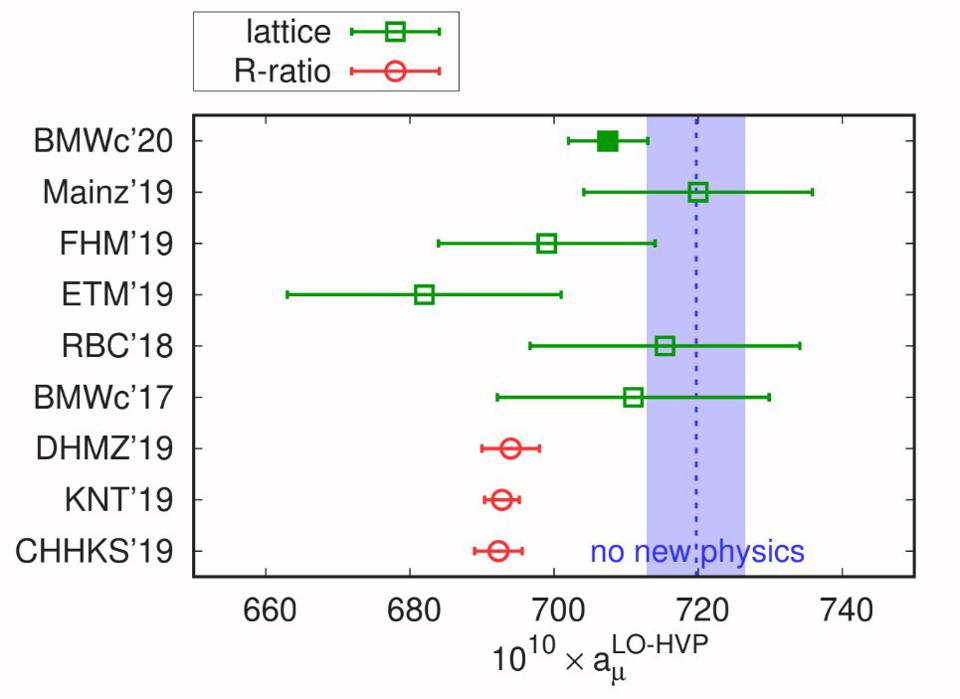

But when it comes to the effects of the strong nuclear force — the contributions of quarks, gluons, and all composite interactions (such as from mesons and baryons) — this is not easy to calculate. In fact, the way we calculate the effects of the weak and electromagnetic interactions, which is to calculate the contributions from progressively more and more complex interaction diagrams, will not work for the strong interactions. All we can do is either plug in inputs from other experiments to estimate their effects (the so-called R-ratio method) or attempt to do the non-perturbative QCD calculations on a supercomputer: the lattice QCD method.

Although both the R-ratio proponents and the lattice QCD proponents claim very small errors in their work, the various predicted results cover a wide range of values: about 370 parts per billion between the various estimates, with some overlapping with Fermilab’s experimental data and others, particularly from the older R-ratio methods, disagreeing with Fermilab’s data at greater than the 5-sigma threshold.
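To see why the size of the theoretical uncertainty matters so much, consider a simple sketch in which the significance of a discrepancy is its size divided by the experimental and theoretical uncertainties added in quadrature. This assumes independent Gaussian uncertainties. The 190 parts-per-billion experimental figure comes from the text, but the discrepancy and the theory uncertainties below are hypothetical values chosen for illustration, not measured ones, and treating the 370 parts-per-billion spread as an uncertainty is itself an assumption.

```python
import math

EXPERIMENTAL_UNCERTAINTY_PPB = 190.0  # combined experimental figure quoted in the text


def significance_sigma(
    discrepancy_ppb: float,
    theory_uncertainty_ppb: float,
    experimental_uncertainty_ppb: float = EXPERIMENTAL_UNCERTAINTY_PPB,
) -> float:
    """Discrepancy in units of the combined (quadrature-summed) uncertainty."""
    total = math.hypot(experimental_uncertainty_ppb, theory_uncertainty_ppb)
    return discrepancy_ppb / total


# Hypothetical discrepancy between theory and experiment, for illustration only.
HYPOTHETICAL_DISCREPANCY_PPB = 1000.0

# Hypothetical theory uncertainties; 370 borrows the spread quoted in the text.
for theory_ppb in (0.0, 100.0, 370.0):
    sigma = significance_sigma(HYPOTHETICAL_DISCREPANCY_PPB, theory_ppb)
    print(f"theory uncertainty {theory_ppb:5.0f} ppb -> {sigma:.1f} sigma")
```

In this toy setup, the same discrepancy sits above the 5-sigma threshold when the theory uncertainty is negligible and falls well below it once the theory uncertainty is comparable to the spread between methods.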

This does not mean there’s evidence for a fifth force.

What it does mean is that doing this experiment is vitally important, and that checking your theoretical predictions via multiple independent methods is one of the only ways to be sure you’re getting the right answer. In this case, experiment needs to lead the way, and it will be the theorists now who are forced to play catch-up. It may yet turn out that there’s evidence for a fifth fundamental force in this data somewhere, but it will take a significant advance in theoretical precision in order for us to get there and know for sure. However, this only underscores how important an achievement the experimental results of the Muon g – 2 collaboration have been so far.

There may yet be a fifth fundamental force out there, and it could be lurking anyplace where the data has surprised us in some fashion or other. However, we have to be very careful not to jump to (almost certainly) invalid conclusions based on preliminary data.

- Many thought the XENON collaboration had detected something anomalous in their XENON1T experiment, but a subsequent superior iteration of that experiment showed the experimental anomaly went away.

- Many thought the Atomki anomaly would lead to the discovery of a new particle and a fifth fundamental force, but the inability to replicate its results and the lack of such a predicted particle in other experiments have thoroughly darkened those prospects.

- Many still hold out hope that dark energy will prove to be something other than a cosmological constant, which means it may yet be a fifth fundamental force of nature, but all observations show no deviation from the boring old cosmological constant predicted by Einstein over 100 years ago.

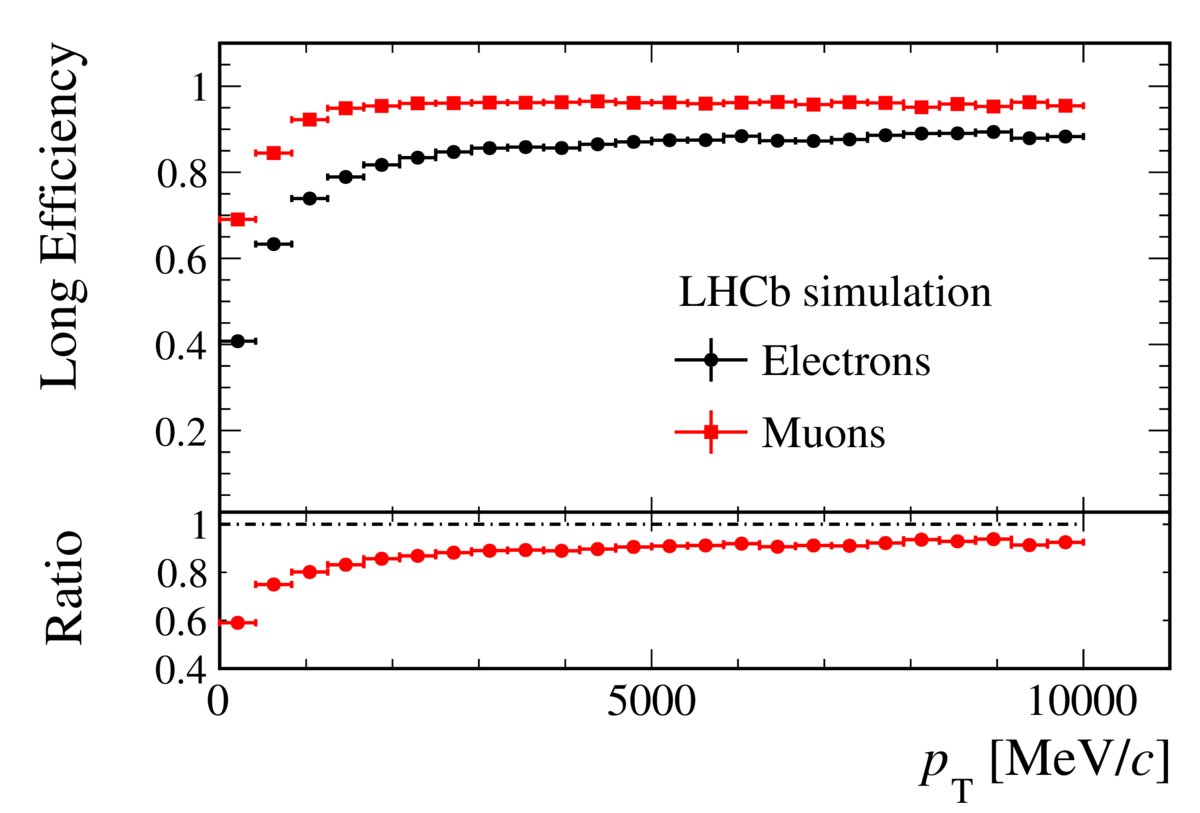

But you must remember that any such claim must hold up to scrutiny. Many hoped that the DAMA/LIBRA collaboration’s evidence for dark matter would pan out, but it turned out to be shoddy methodology that led to dubious results. Many hoped that lepton universality would be violated, but the LHCb collaboration, perhaps to their own surprise, wound up vindicating the Standard Model.

When it comes to a fifth fundamental force, it’s still possible, and if it shows up anywhere, it will likely be a high-precision anomaly that first reveals it. But it’s vital that we get the science right, otherwise we’ll be crying “wolf” at our own peril: simply because we cried out before whatever we were attempting to see actually came into focus.