This Is Why The ‘X17’ Particle And A New, Fifth Force Probably Don’t Exist

If it holds up, it would revolutionize physics and be a slam-dunk Nobel Prize. Here’s why that’s unlikely to be the case.

Every so often, an experiment comes along in physics that gives a result that’s inconsistent with the Universe as we currently understand it. Sometimes, it’s nothing more than an error inherent to the specific design or execution of the particular experiment itself. At other times, it’s an analysis error, where the way the experimental results are interpreted is at fault. At still other times, the experiment is right but there’s a mistake in the theoretical predictions, assumptions, or approximations that went into extracting the predictions that the experiment failed to match.

Way, way down the list of scientific possibilities is the notion that we’ve actually discovered something fundamentally new to the Universe. If you were to read the latest hype surrounding a potential discovery of a new, fifth force and a new particle — the X17 — you might think we’re on the cusp of a scientific revolution.

But that assumption is almost certainly wrong, and there’s a ton of science to back it up. Here’s what you need to know.

Experimental physics is a hard game to play, with many possible pitfalls that need to be understood. Physicists have become very tentative about announcing discoveries over the years, owing to an extraordinary number of finds that were announced, publicized, and then needed to be walked back.

This isn’t restricted to historical examples (like the infamous “oops-Leon” particle, a spurious statistical fluctuation that was misidentified as the then-predicted and now-discovered-elsewhere upsilon particle), but includes modern examples (from the 2010s) such as:

- the faster-than-light neutrino result from the OPERA experiment, which was discovered to be due to a faulty piece of equipment,

- the BICEP2 collaboration’s claimed detection of gravitational waves from inflation, which stemmed from incorrect assumptions about the Planck satellite’s data and galactic foreground dust,

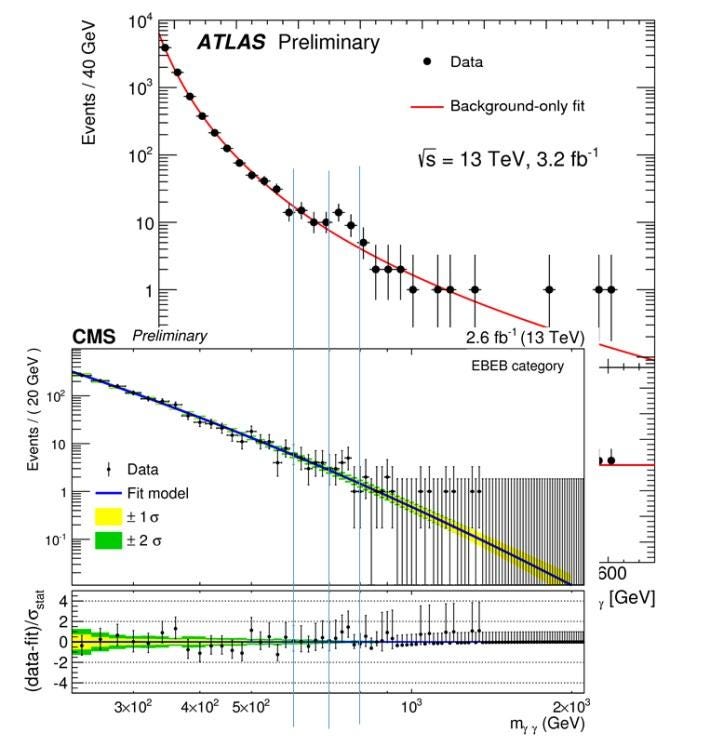

- or new particles corresponding to a bump in the diphoton channel at the LHC, which was simply a statistical fluctuation that disappeared with more data.

You cannot be afraid to make a mistake in science, but you must be aware that mistakes are common and can come from unexpected sources. As responsible scientists, our job is not to sensationalize our most wishful thinking about what might be true, but to subject it to the most careful, skeptical scrutiny we can muster. Only with that mindset can we responsibly take a look at the experimental evidence in question.

If we want to give these new results a proper analysis, we need to make sure we’re asking the right questions. How was the experiment set up? What was the raw data? How was the analysis of the data performed? Was it verified independently? Is this data consistent with all the other data we’ve taken? What are the plausible theoretical interpretations, and how confident are we they’re correct? And finally, if it all holds up, how can we verify whether this really is a new particle with a new force?

The experiment behind these claims goes back many years, and despite its colorful history (which includes numerous announcements of spurious, unconfirmed detections), it’s a very straightforward nuclear physics experiment.

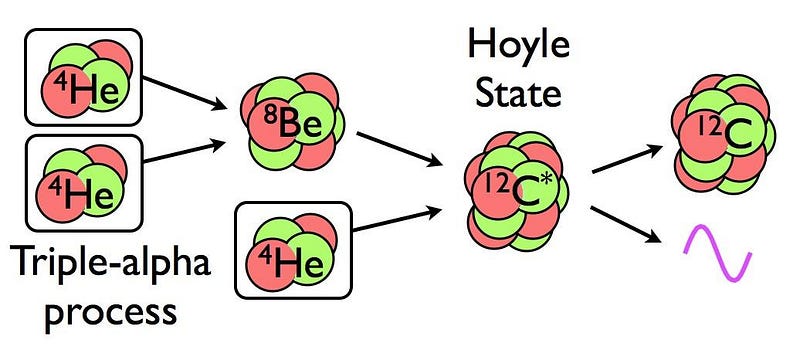

When you think about atomic nuclei, you probably think about the periodic table of the elements and the (stable) isotopes associated with each one. But in order to build the elements as we know them, we need to take into account the unstable, temporary states that may only exist for short periods of time. For example, the way carbon is formed in the Universe is via the triple-alpha process, where two helium nuclei (with 2 protons and 2 neutrons apiece) fuse into beryllium-8, which lives for only a tiny fraction of a second before decaying. If you can get a third helium nucleus in there quickly enough, before the beryllium-8 decays back into two helium nuclei, you can produce carbon-12 in an excited state, which will then decay back into normal carbon-12 after emitting a gamma-ray.

Although this occurs easily in stars in the red giant phase, it’s a difficult interaction to test in the laboratory, because it requires controlling nuclei in an unstable state at high energies. One of the things we can do, however, is produce beryllium-8 rather easily. We don’t do it by combining two helium-4 nuclei, but rather by combining lithium-7 (with 3 protons and 4 neutrons) with a proton, producing beryllium-8 in an excited state.

In theory, that beryllium-8 should then decay into two helium-4 nuclei, but since we made it in an excited state, it needs to emit a gamma-ray photon before decaying. If we make that beryllium-8 at rest, that photon should have a predictable energy distribution. In order to conserve both energy and momentum, there should be a probability distribution for how much kinetic energy your photon has relative to the initial beryllium-8 nucleus at rest.

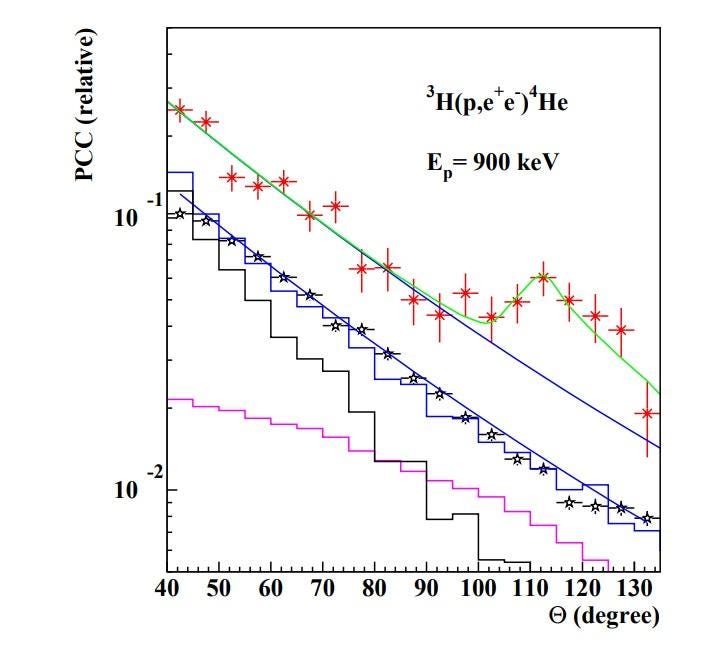

However, above a certain energy, you might not get a photon at all. Because of Einstein’s E = mc², you might get a particle-antiparticle pair instead, of an electron and its antimatter counterpart, a positron. Depending on the energy and momentum of the photon, you’ll fully expect to get a specific distribution of angles that the electron and positron make with one another: lots of events with small angles between them, and then less frequent events as you increase your angle, down to a minimum frequency as you approach 180°.

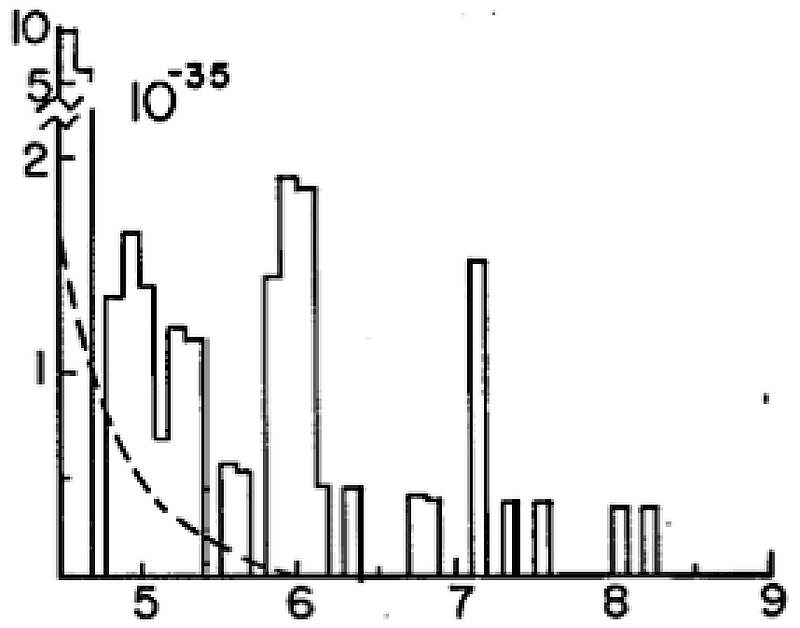

Back in 2015, a Hungarian team led by Attila Krasznahorkay made this measurement, and found something very surprising: their results didn’t match the standard nuclear physics predictions. Instead, once you got up to angles of around 140°, you found a slight but meaningful excess of events. This became known as the Atomki anomaly, and with a significance of 6.8-sigma, it appears to be much more than a statistical fluctuation, with the team putting forth the extraordinary explanation that it may be due to a new, light particle whose effects had never been detected before.
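To put a figure like 6.8-sigma in perspective, here is a minimal sketch that converts a significance into the chance of a pure statistical fluctuation. It assumes the common one-sided Gaussian convention, which may not be exactly how the team computed its number, and the function name is invented for illustration.

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Chance that Gaussian noise alone fluctuates upward by at least n_sigma.

    Assumption: one-sided Gaussian tail, the usual particle-physics convention.
    """
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    # 3 sigma is often called "evidence", 5 sigma "discovery";
    # 6.8 sigma is the figure quoted for the beryllium-8 excess.
    for n_sigma in (3.0, 5.0, 6.8):
        print(f"{n_sigma:.1f} sigma -> p ~ {one_sided_p_value(n_sigma):.1e}")
```

A tiny probability here only rules out random chance. It says nothing about a miscalibrated detector or a flawed background model, which can produce an excess of any size.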

But one experiment at one place with one unexpected result does not equate to a new scientific breakthrough. At best, this is merely a hint of new physics, with multiple possible explanations if true. (While at worst, it’s a complete mistake.)

However, the reason for all the recent attention is that the same team did a new experiment, where they created a helium-4 nucleus in a very excited state, one that would again decay by emitting a gamma-ray photon. At high enough energies, the gamma-rays would produce electron/positron pairs once again, and above a certain energy threshold, they would look for a change in the opening angle between them. What they found was that another anomalous increase appeared, at a different (lower) angle, but at similar energies to the anomalies seen in the first experiment. This time, the statistical significance claimed is 7.2-sigma, also appearing to be much larger than a statistical fluctuation. In addition, it appears to be consistent with one particular explanation: that of a new particle, a new interaction, and a new fundamental force.
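To see why a single new particle could show up at different angles in the two experiments, here is a rough kinematic sketch. It assumes the hypothetical particle carries essentially all of the nuclear transition energy, ignores the electron mass and the recoil of the nucleus, and uses the fact that the smallest opening angle of a two-body decay occurs when the electron and positron share the energy equally, giving sin(θ/2) = m/E. The 17 MeV mass is simply what the name “X17” implies; 18.15 MeV is the beryllium-8 transition energy; the 20.5 MeV helium-4 value is an illustrative assumption, not a number taken from the team’s analysis.

```python
import math


def min_opening_angle_deg(mass_mev: float, energy_mev: float) -> float:
    """Smallest e+e- opening angle for a particle of given mass and total energy.

    Assumes massless decay products and a symmetric energy split,
    for which sin(theta / 2) = mass / energy.
    """
    if energy_mev <= mass_mev:
        raise ValueError("total energy must exceed the particle's rest energy")
    return math.degrees(2.0 * math.asin(mass_mev / energy_mev))


# Illustrative inputs (see the assumptions stated above).
HYPOTHETICAL_MASS_MEV = 17.0
BERYLLIUM_TRANSITION_MEV = 18.15
HELIUM_TRANSITION_MEV = 20.5  # assumed value, for illustration only

if __name__ == "__main__":
    for label, energy in (("beryllium-8", BERYLLIUM_TRANSITION_MEV),
                          ("helium-4", HELIUM_TRANSITION_MEV)):
        angle = min_opening_angle_deg(HYPOTHETICAL_MASS_MEV, energy)
        print(f"{label}: excess expected near {angle:.0f} degrees")
```

Under these assumptions the beryllium-8 case lands close to 140°, and the more energetic helium-4 case lands at a noticeably smaller angle. A lower angle in the second experiment is therefore what a single particle would predict, though it does not by itself show that the excess is real.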

Let’s go deeper, now, into what’s actually occurring in the experiment, to see if we can uncover the weak points: the places where we’re likely to find an error, if one exists. First, although the anomaly has now appeared in a second experiment, the two experiments were performed at the same facility with the same equipment and the same researchers, using the same techniques. In physics, we need independent confirmation, and this confirmation is the opposite of independent.

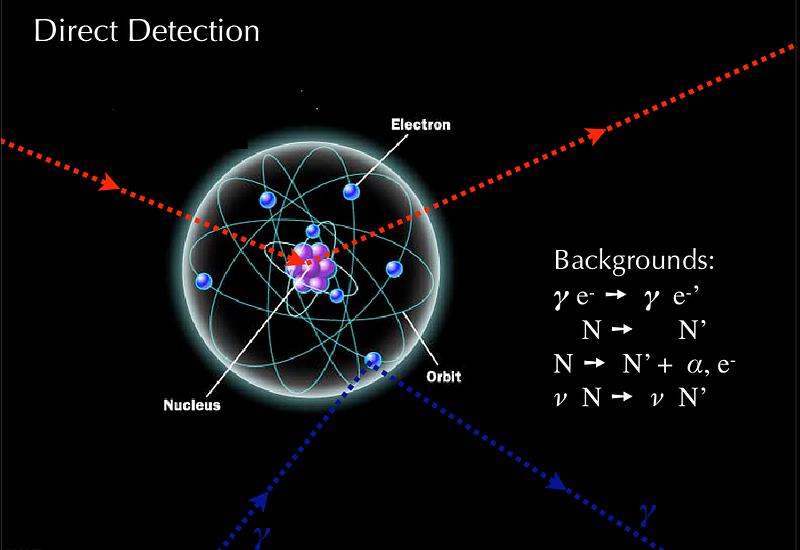

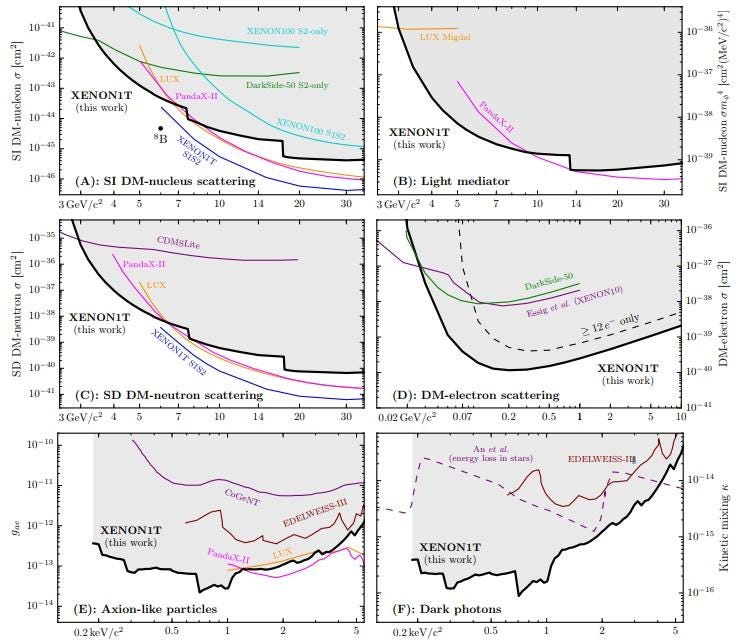

Second, there are independent experiments out there that should have created or seen this particle, if it exists. Searches for dark matter should see evidence for it; they don’t. Lepton colliders producing electron-positron collisions at these relevant energies should have seen evidence for this particle; they have not. And in the same vein as the proverbial boy who cried wolf, this is at least the fourth new particle announced by this team, including a 2001-era (9 MeV) anomaly, a 2005-era (multiple-particle) anomaly, and a 2008-era (12 MeV) anomaly, all of which have been discredited.

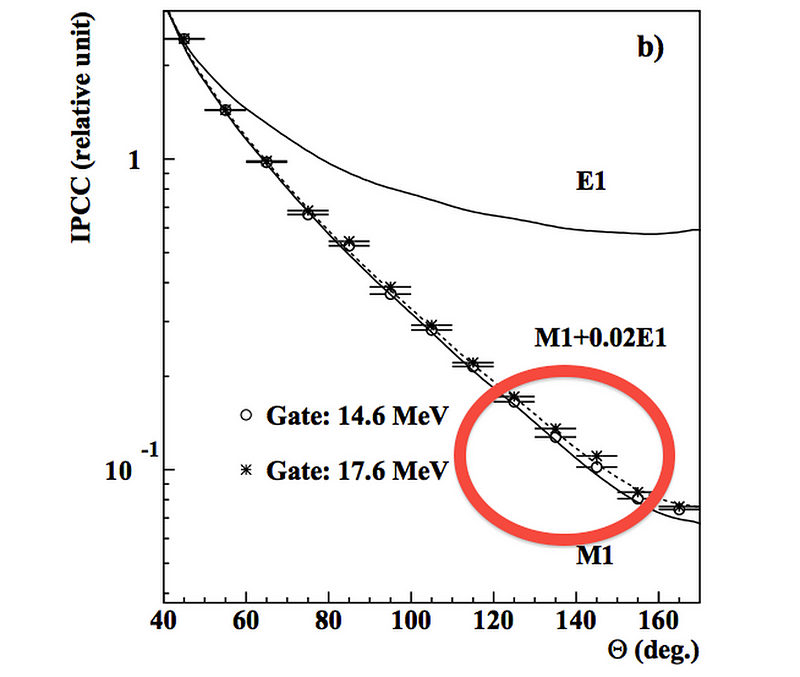

But the most suspicious evidence against it comes from the data itself. Take a look at the above graph, where you can see the calibration (low-energy) data in blue. Do you notice that the curve (solid line) fits the data (black points) extremely well overall? Except, that is, between about 100° and 125°? In that range, the data is a poor fit to what’s taken as “a good calibration,” as there should be more events than what was observed. If you only considered the data between 100° and 125°, you would never use this calibration; it’s unacceptable.

Then, they rescale that calibration to apply to the higher-energy data (the raised blue line), and lo and behold, it’s a great calibration until you reach about 100°, at which point you start seeing a signal excess. In other words, the claimed excess begins in the same angular range where the calibration was already a poor fit to the low-energy data. This is what we loosely call “sketchy,” and part of why we demand confirmation that’s truly independent.

In physics, it’s important to follow whatever clues nature gives you, as today’s anomaly can often lead to tomorrow’s discovery. It may be possible that a new particle, interaction, or unexpected phenomenon is at play, causing these bizarre and unexpected results. But it’s far more plausible that an error with the experiment itself — and it could be as mundane as a problematic, efficiency-inconsistent spectrometer, which is an essential part of the experiment and was the culprit in the last round of faulty results — is what’s ultimately responsible.

Until we directly detect a new particle, remain skeptical. Until these early results are successfully replicated by a completely independent team using a completely independent setup, remain highly skeptical. As particle physicist Don Lincoln notes, the history of physics is littered with fantastic claims that fell apart under closer scrutiny. Until that scrutiny arrives, bet on the X17 as an error, not as a slam-dunk Nobel Prize.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.