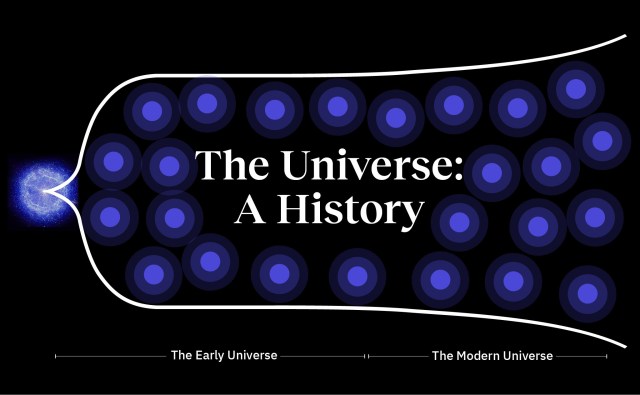

What was it like when the first stars began to shine?

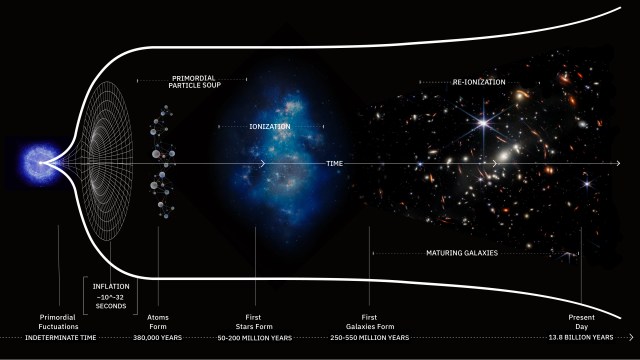

- It took less than a second to form protons and neutrons, minutes to form atomic nuclei, hundreds of thousands of years to make neutral atoms, but tens of millions or even a hundred million years to make stars.

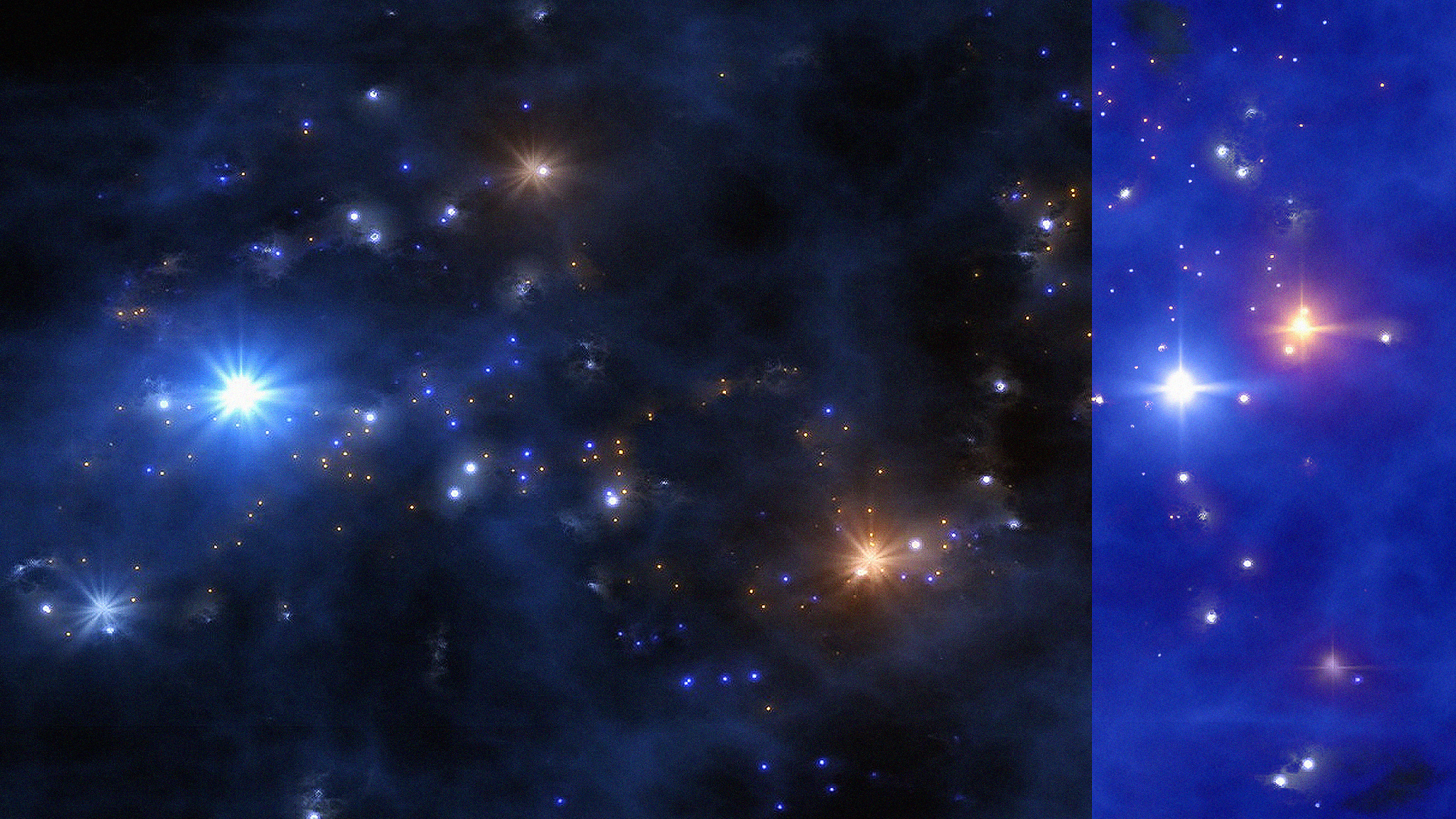

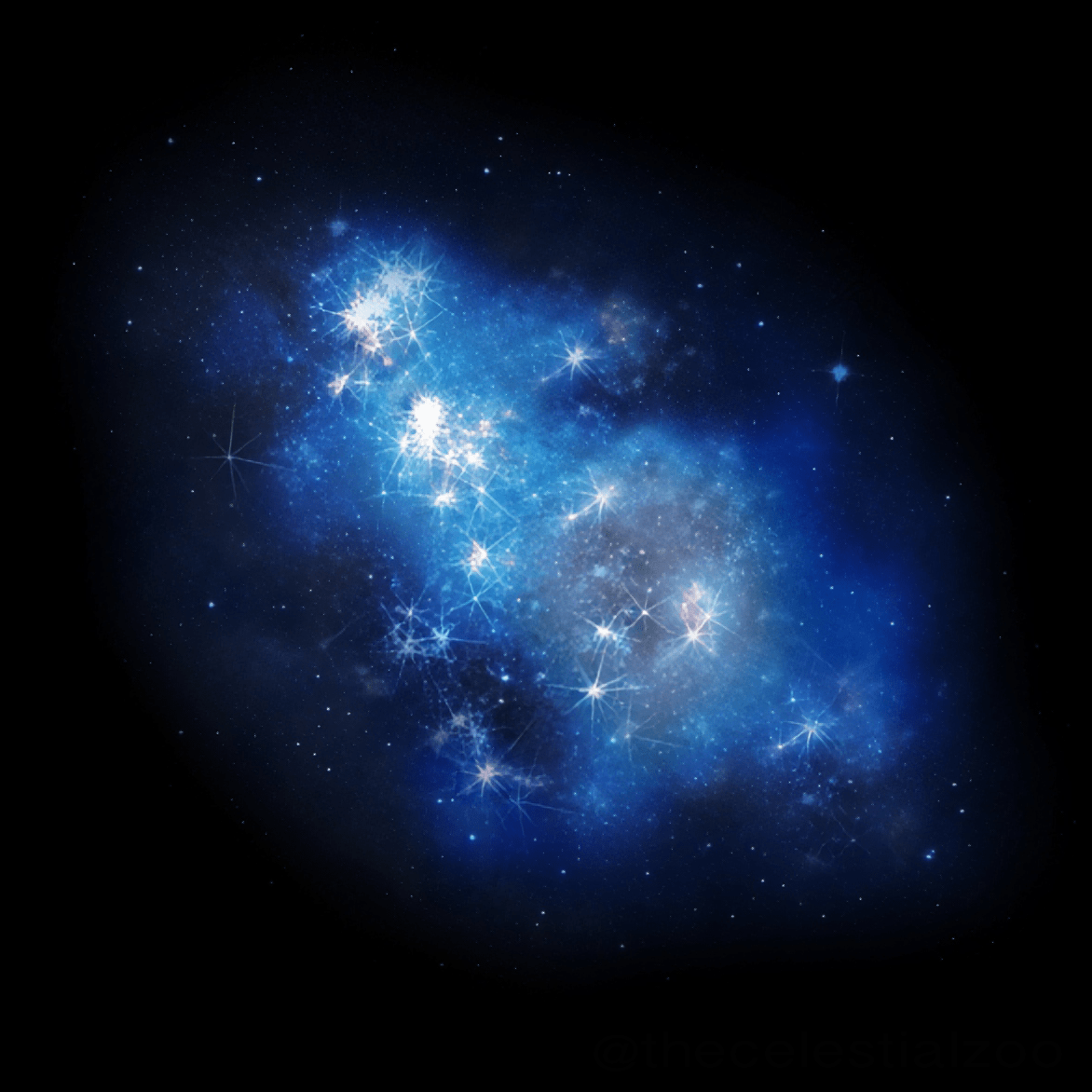

- These first stars in the Universe, unlike all of the stars formed today, were made exclusively of hydrogen and helium, and were far and away more massive and shorter-lived than nearly all of today’s stars.

- As the first stars began to shine, they also began transforming the Universe in a series of profound ways. Here’s what it was like back then at times we have yet to directly probe.

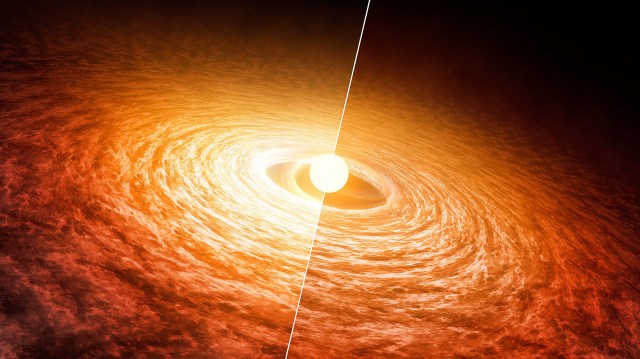

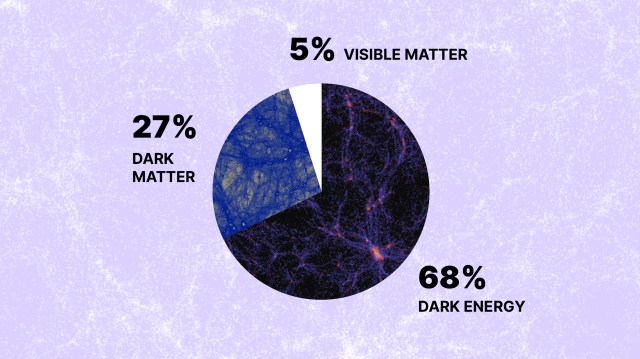

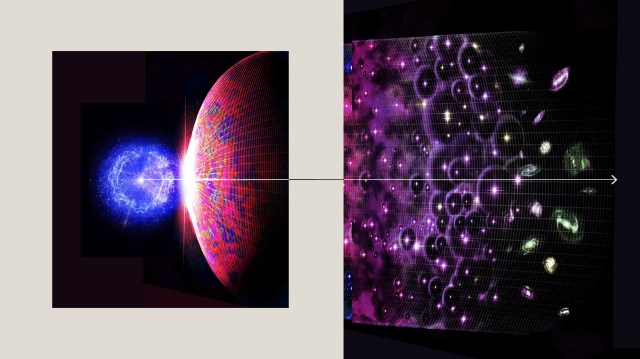

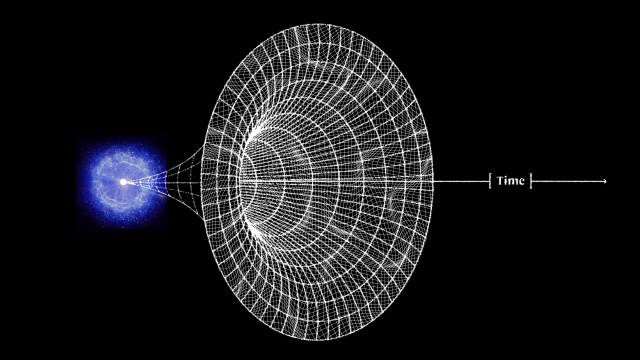

For perhaps as long as the first 100 million years after the start of the hot Big Bang, the Universe was devoid of stars. The matter in the Universe required just half-a-million years to finish forming neutral atoms, but stars would take much longer to form for a variety of reasons. For one, gravitation on cosmic scales is a slow process, made even more difficult by the high energies of the radiation the Universe was born with. For another, the initial gravitational imperfections were small: just 1-part-in-30,000, on average. And for yet another, gravity only propagates at the speed of light, meaning that when the Universe is very young, there’s only a very small distance range over which other masses can “feel” the gravitational force from any particular initial mass.

As the Universe cooled, gravitation began to pull matter together into clumps and eventually clusters, growing faster and faster as more matter was attracted together. Eventually, we reached the point where dense gas clouds could collapse, forming objects that were dense and massive enough to ignite nuclear fusion in their cores. When those first hydrogen-into-helium chain reactions began taking place, we could finally claim that the first stars had been born: a process taking at least 50 million years and maybe as much as 100 million years or more for even the very first ones to ignite. Here’s what the Universe was like back then.

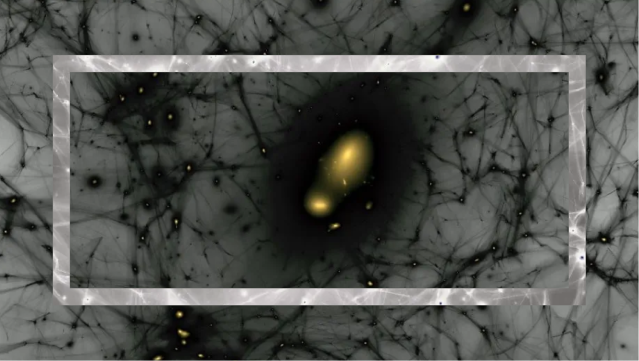

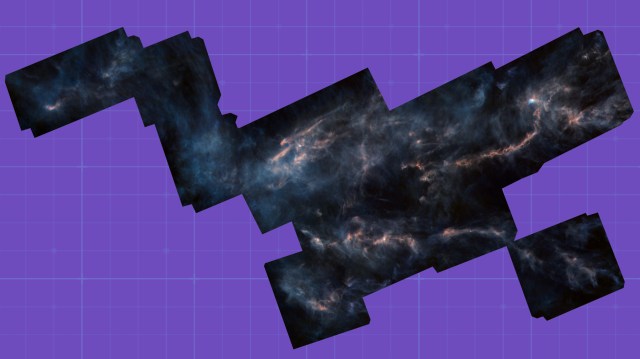

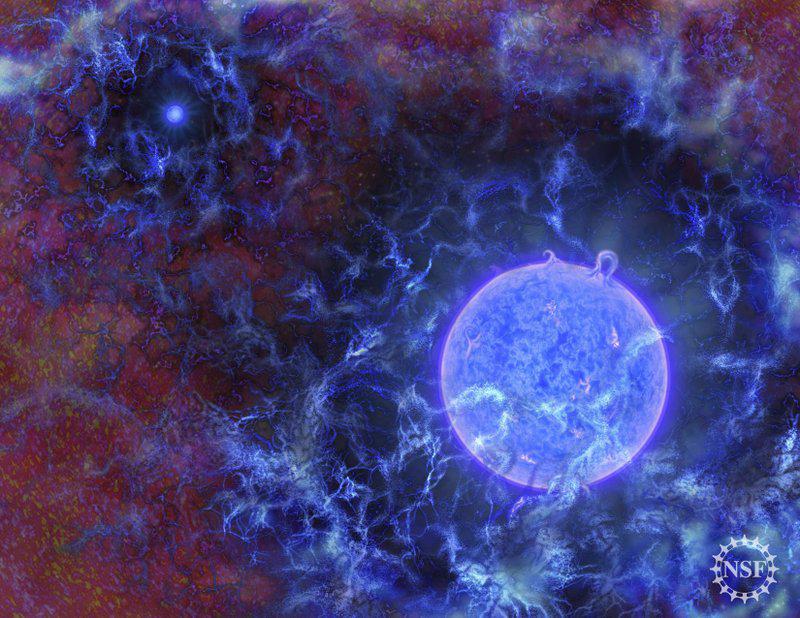

By the time 50-to-100 million years have passed since the onset of the hot Big Bang, the Universe is no longer completely uniform, but has begun to form a structure resembling a great cosmic web, all under the cosmic influence of gravity. The initially overdense regions have grown and grown, attracting more and more matter to them over time. Meanwhile, the regions that began with a lower density of matter than average have been less able to hold onto it, giving it up to the denser regions that surround them.

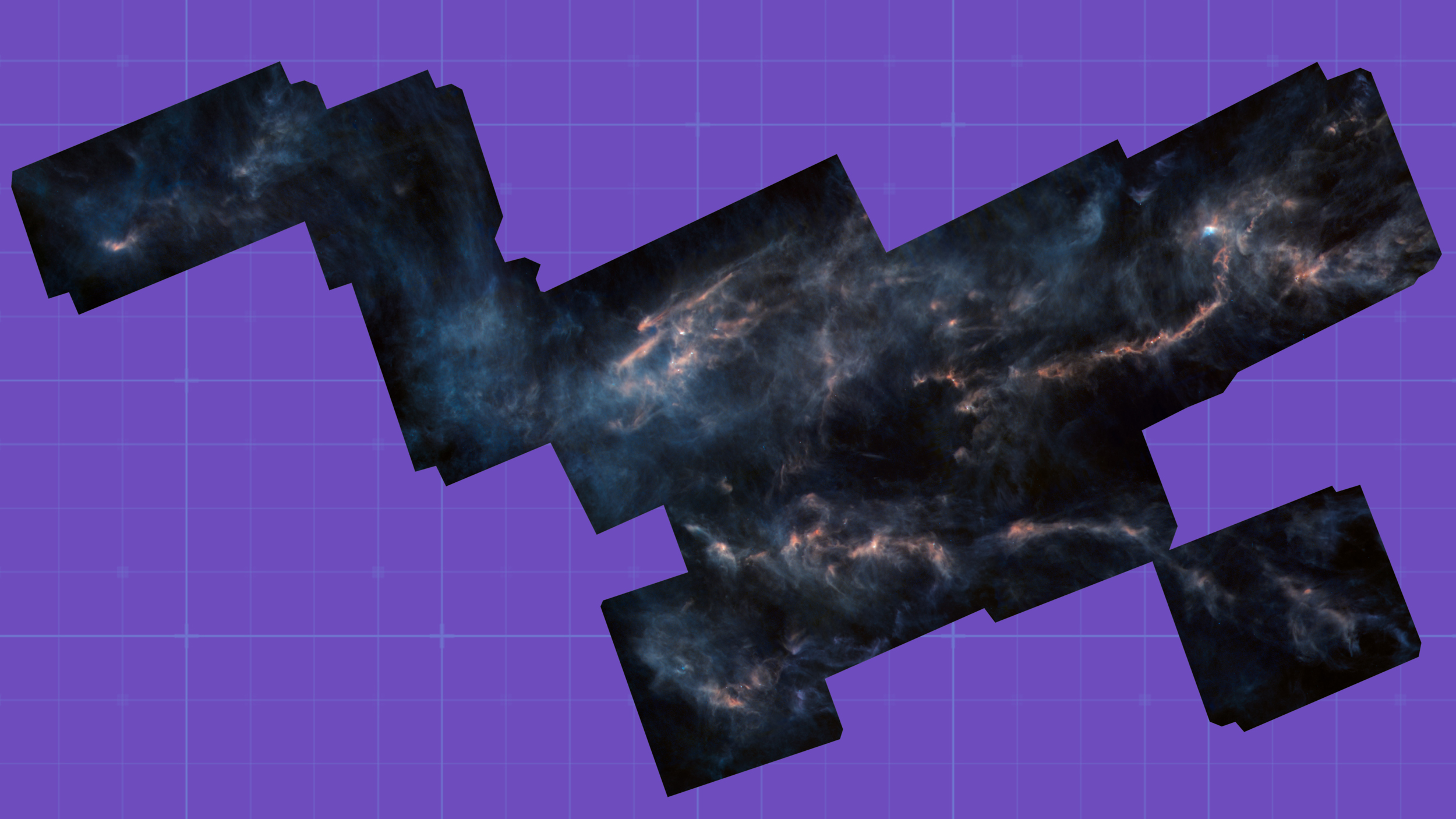

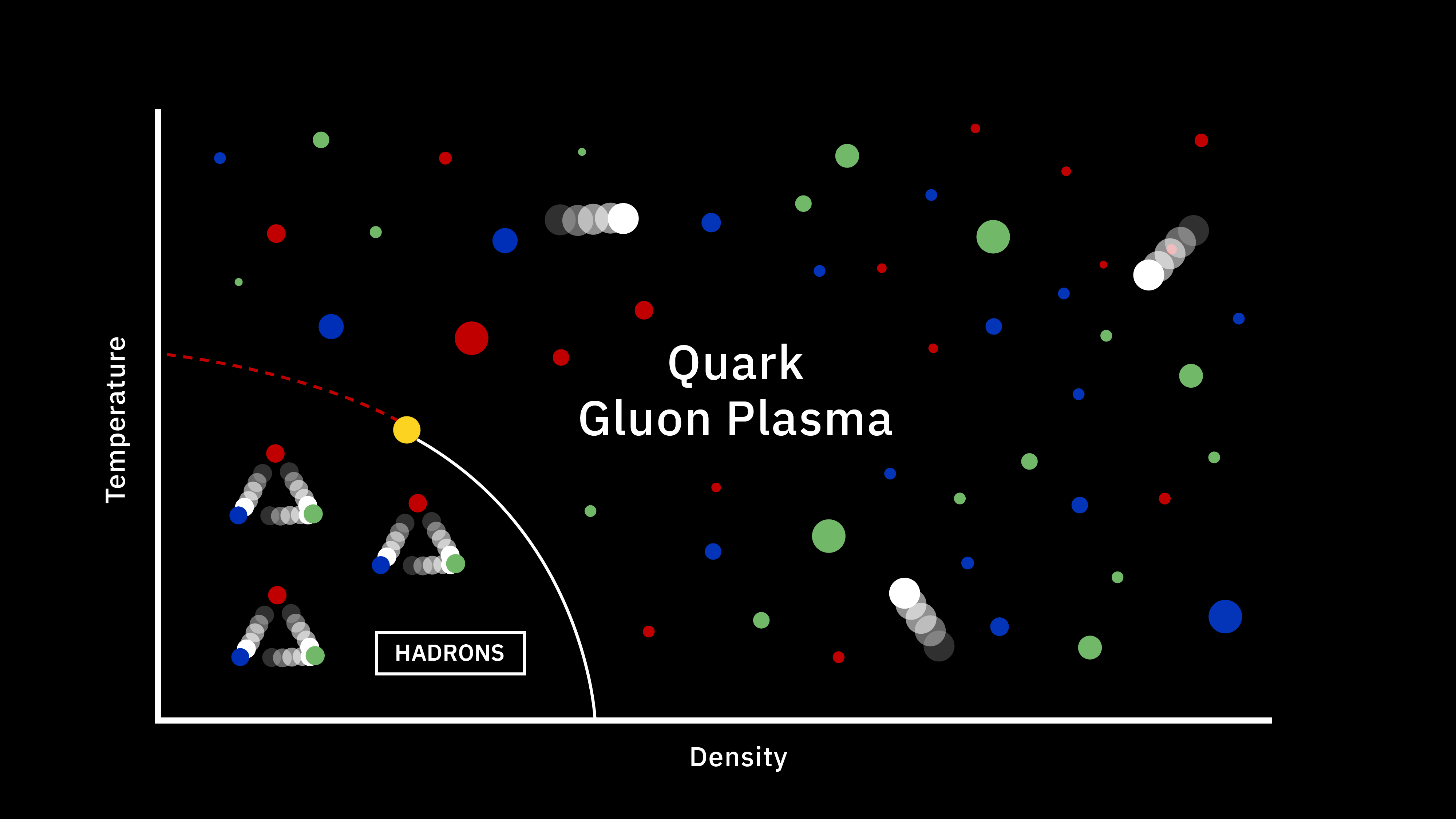

In this sense, gravity is what we know as a “runaway” force, where the (matter) rich regions get richer, and the initially matter-poor regions get poorer over time. As overdense regions grow, they draw more and more matter into them, dominated by neutral atoms and streams of gas. These very dense regions accumulate more and more mass, but there’s a problem: as they gravitationally collapse, the atoms collide and generate heat. That heat must be radiated away for stars to form, but hydrogen and helium are terrible at radiating heat away. As a result, the streams of gas grow more and more massive, increasing the mass, temperature, and pressures within them in the densest locations.

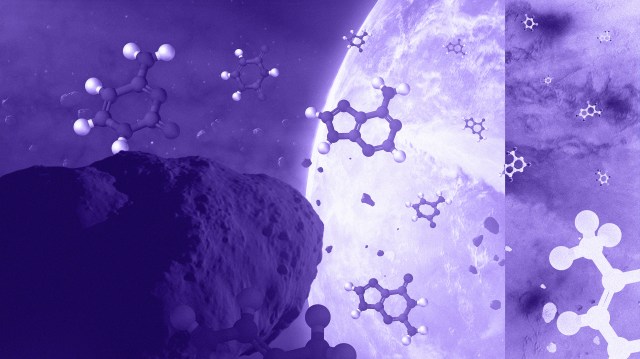

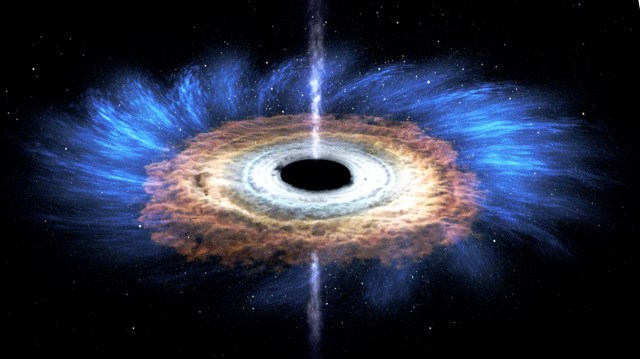

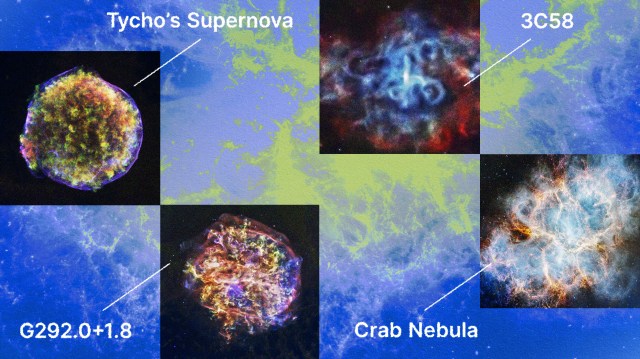

Driven by the first molecule in the Universe, an ion known as helium hydride, cooling does indeed take place, but only slowly. The very densest regions take one of two paths: they can either directly collapse to form black holes, potentially with tens of thousands or even a hundred thousand solar masses at the outset, or those dense regions can fragment to begin forming stars. The initial mass of these massive clumps has to be enormous: hundreds or even thousands of times the mass that star-forming regions typically have today. When cooling is inefficient, gas remains diffuse, and cannot collapse to form stars unless tremendous amounts of mass are gathered together in one place.

The slightly less initially dense regions will get to those same places eventually, but tens-to-hundreds of millions of years later, as gravitational growth depends both on the initial size of your overdense seeds and on the mass distribution of the surrounding regions. Regions that begin with only a modest overdensity will take perhaps half-a-billion years or more to form stars for the first time, while regions of merely average density might not begin forming stars until much later: until as many as a couple of billion years have passed.

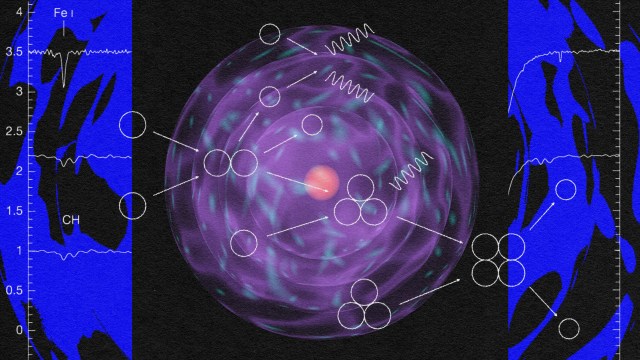

The very first stars, when they ignite, do so deep inside molecular clouds. They’re made almost exclusively of hydrogen and helium; with the exception of the approximately 1-part-in-a-billion of the Universe that’s lithium, there are no heavier elements at all. As gravitational collapse occurs, the energy gets trapped inside this gas, causing the temperature deep inside the still-forming proto-star to rise. It’s only when, under high-density conditions, the temperature crosses a critical threshold of around 4 million K that nuclear fusion of hydrogen into helium, occurring in a chain reaction, begins. When that occurs, things start to get interesting.

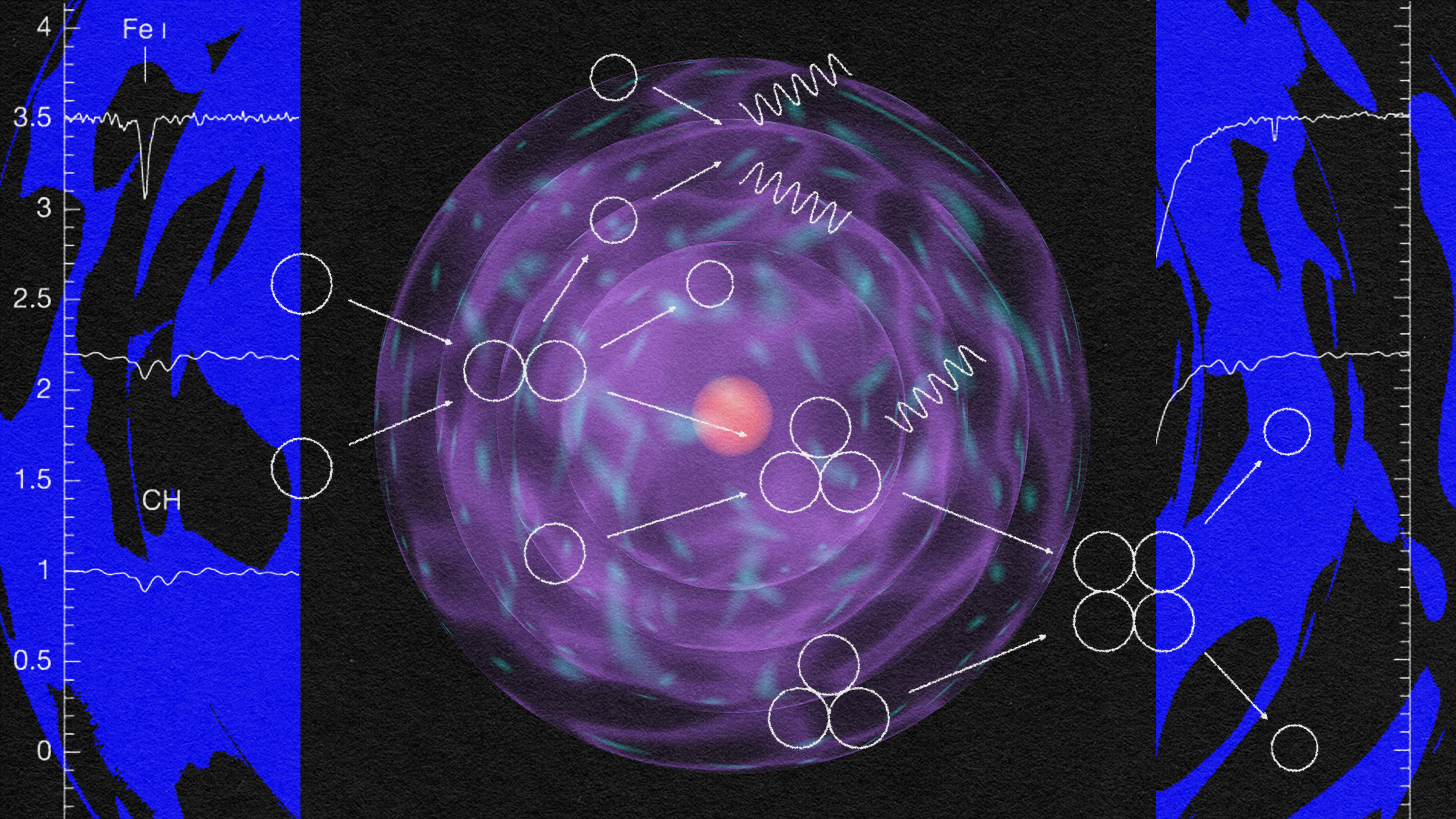

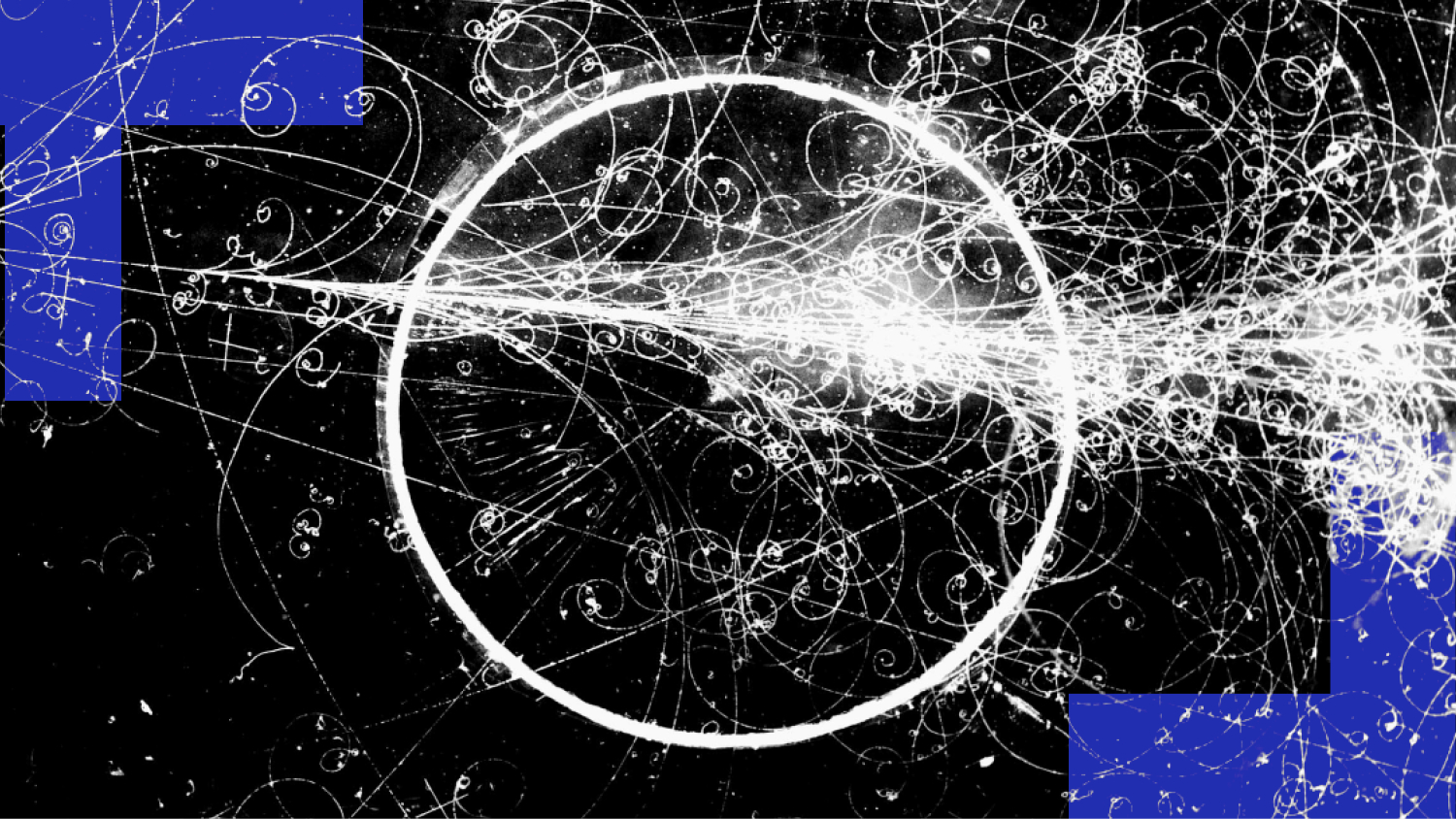

For one, the great cosmic race that will take place in all future star-forming regions begins in earnest for the first time in the Universe. As fusion begins in the first proto-star’s core, the gravitational collapse that continues to grow the mass of the star is suddenly counteracted by the radiation pressure emanating from the inside. At a subatomic level, protons are fusing in a chain reaction to form deuterium, then either tritium or helium-3, and then helium-4, emitting energy at every step. As the temperature rises in the core, the energy emitted increases, eventually fighting back against the infalling of mass due to gravity.

These earliest stars, much like modern stars, grow quickly due to gravitation. But unlike modern stars, they don’t have heavy elements in them, so they cannot cool as quickly; it’s more difficult to radiate energy away without heavy elements. Molecular hydrogen (H2) and the helium hydride ion are left as the most efficient “cooling” mechanisms, but both are far less efficient than atoms and molecules containing elements that become common later on, such as oxygen and carbon, which will swiftly (but have not yet) become the 3rd and 4th most common elements in the Universe. Because you need to cool in order to collapse, this means it’s only the largest, most massive clumps that will lead to stars.

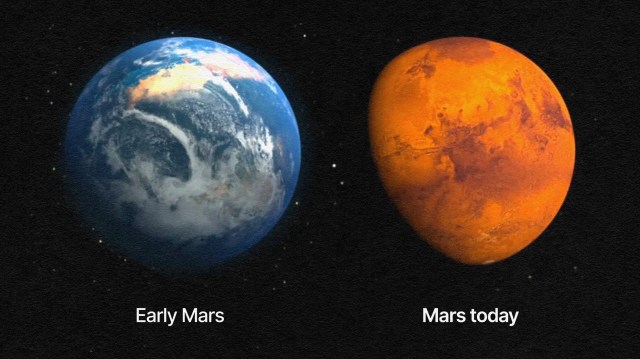

Because of how massive these clumps need to grow in order for stars to form, the first stars that form in the young Universe wind up, on average, about 10 times as massive as our Sun. The most massive stars today cap out at around 200-300 solar masses, but the most massive early stars could have reached many hundreds or even several thousands of solar masses. For comparison, the average star that forms today, 13.8 billion years after the Big Bang, has only about 40% the mass of our Sun, or 1/25th of the average for the first stars.

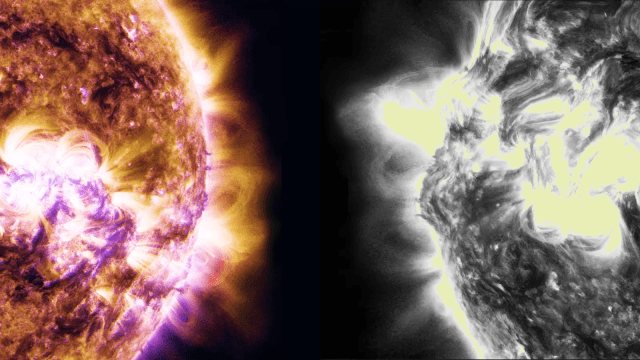

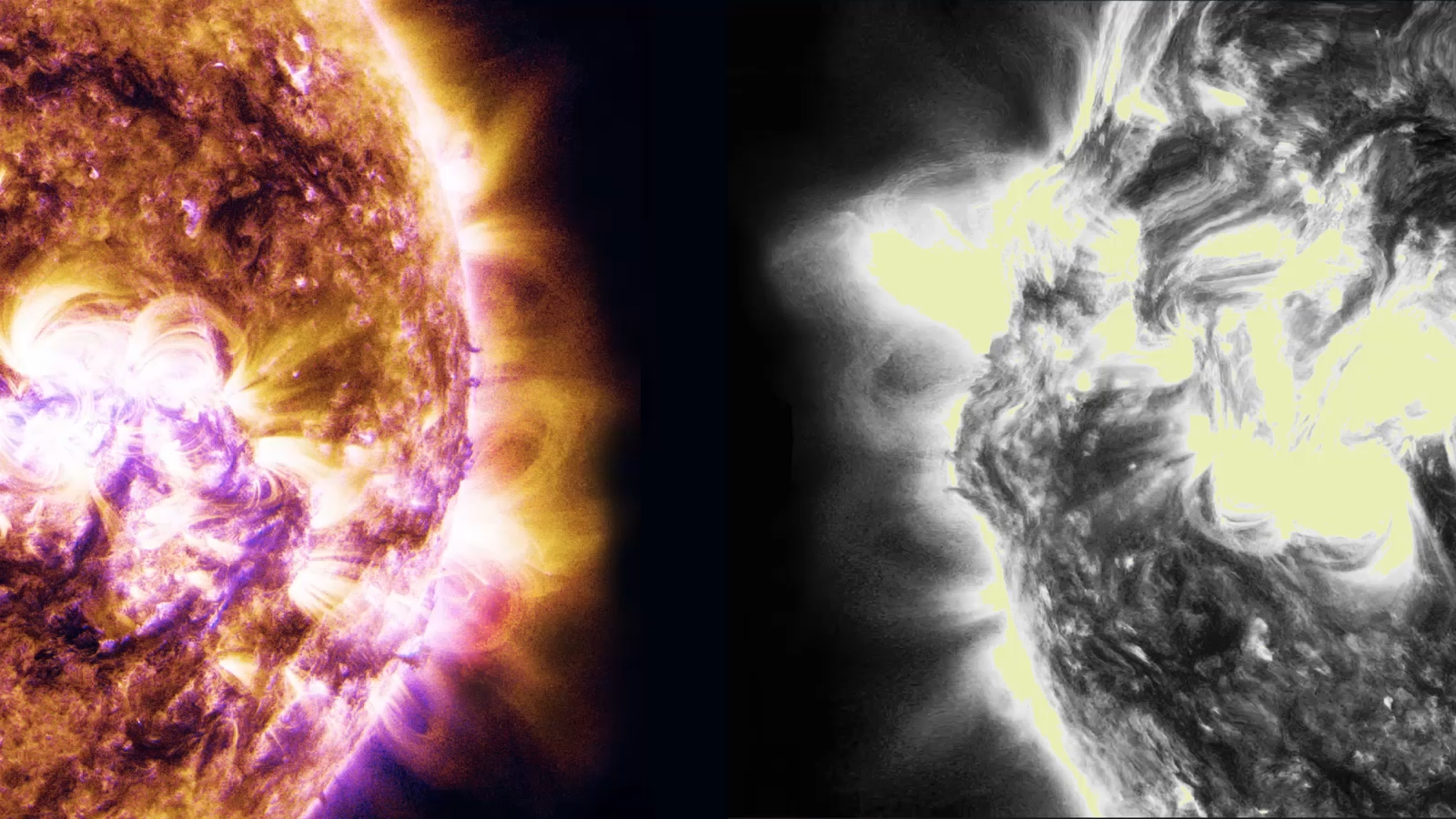

The radiation emitted by these very massive stars peaks at different wavelengths than our Sun’s does. While our Sun emits mostly visible light, these more massive, early stars emit predominantly ultraviolet light: higher energy photons than we typically have today. Ultraviolet photons don’t just give humans sunburns; they have enough energy to knock electrons clean off of the atoms they encounter: they ionize matter.
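To make the contrast between visible and ultraviolet output concrete, here is a minimal sketch that treats each star as an ideal blackbody and applies Wien's displacement law. The blackbody approximation, the function names, and especially the 100,000 K surface temperature for an early massive star are illustrative assumptions, not figures taken from the discussion above.

```python
# Rough sketch: where does a star's spectrum peak, and can photons at that
# peak ionize hydrogen? Stars are treated as ideal blackbodies (an assumption).

WIEN_B_M_K = 2.897771955e-3       # Wien displacement constant, in metre-kelvin
HC_EV_NM = 1239.841984            # Planck constant times speed of light, in eV*nm
HYDROGEN_IONIZATION_EV = 13.598   # energy needed to ionize ground-state hydrogen

SUN_SURFACE_K = 5772.0            # nominal effective temperature of the Sun
EARLY_STAR_SURFACE_K = 100_000.0  # ASSUMED, illustrative value for a first-generation star


def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength (nm) at which a blackbody of this temperature emits most strongly."""
    return WIEN_B_M_K / temperature_k * 1e9


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy (eV) of a single photon with the given wavelength."""
    return HC_EV_NM / wavelength_nm


if __name__ == "__main__":
    for label, temp in [("Sun", SUN_SURFACE_K),
                        ("early massive star (assumed)", EARLY_STAR_SURFACE_K)]:
        peak = peak_wavelength_nm(temp)
        energy = photon_energy_ev(peak)
        can_ionize = energy >= HYDROGEN_IONIZATION_EV
        print(f"{label}: peak ~{peak:.0f} nm, ~{energy:.1f} eV per photon, "
              f"ionizes hydrogen: {can_ionize}")
```

Under these assumptions the Sun's peak lands at roughly 500 nm, in the visible band, while the hotter star's peak falls deep in the ultraviolet, where a single photon carries more than the energy needed to strip the electron from a hydrogen atom.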

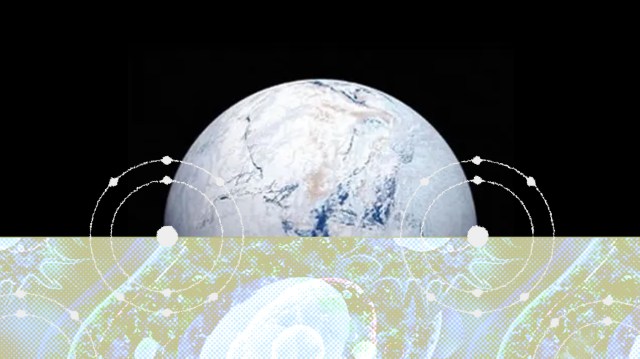

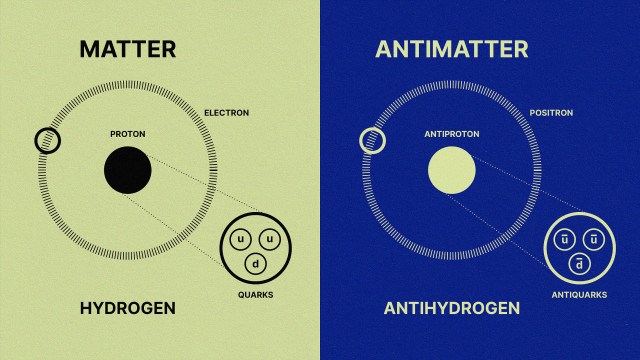

Since most of the Universe is made out of neutral atoms, with these first stars showing up in these clumpy clouds of gas, the first thing the light does is smash into the neutral atoms surrounding them. And the first thing those atoms do is ionize: breaking apart into nuclei and free electrons, populating the Universe with these entities for the first time since before the initial formation of neutral atoms just 380,000 years after the Big Bang. This process is known as “reionization,” since it’s the second time in the Universe’s history (after the initial plasma phase of the hot Big Bang) that atoms became ionized.

However, because it takes so long for most of the Universe to form stars, there aren’t enough ultraviolet photons to ionize most of the matter just yet. For hundreds of millions of years, neutral atoms will dominate over the reionized ones, and what’s more, some of the ionized electrons will fall back onto ionized atomic nuclei, re-neutralizing these reionized atoms once again. The starlight from the very first stars cannot propagate very far; it gets absorbed by the intervening neutral atoms almost everywhere. Some of these neutral atoms will scatter that light, while others will become ionized once again.

The ionization and the intense radiation pressure from the first stars force star formation to cease shortly after it begins; most of the gas clouds that give rise to stars will have those dense clumps of matter blown apart, evaporated away by this radiation. The matter that does remain around the star collapses into a protoplanetary disk, just like it does today, but because there are not yet any heavy elements, only diffuse, giant planets can form. The very first stars couldn’t have hung onto small, rocky planets at all, as the radiation pressure would destroy them entirely.

The radiation doesn’t just destroy aspiring planets; it destroys atoms as well, by kicking electrons energetically off of the nuclei and sending them into the interstellar medium. But even that leads to another interesting part of the story: a part that creates light whose signatures will someday be observable.

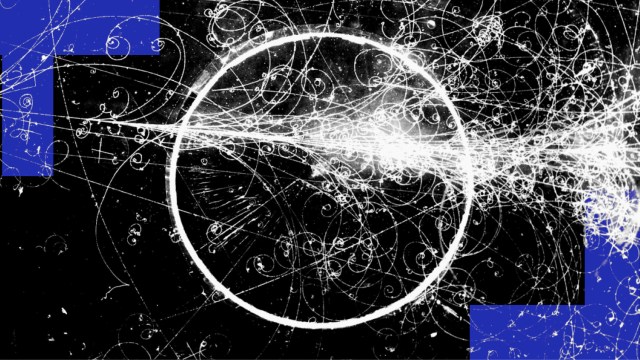

Whenever an atom becomes ionized, there’s a chance it will run into a free electron that was kicked off of another atom, leading to a new neutral atom. When neutral atoms form, their electrons cascade down in energy levels, emitting photons of different wavelengths as they do. The last transition in that cascade, from the second-lowest energy level down to the lowest, produces the strongest line: the Lyman-alpha line, which contains the most energy. Some of the first light in the Universe that’s observable is this ultraviolet Lyman-alpha line, allowing astronomers to look for this signature wherever light exists.

The second-strongest line is the one that transitions from the third-lowest to the second-lowest energy level: the Balmer-alpha line. This line is interesting to us because it’s red in terms of the color we see, rendering it visible to the human eye.
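The wavelengths of both lines follow from the Rydberg formula for hydrogen, which relates the emitted wavelength to the two energy levels involved in the transition. The sketch below is an illustration only: the function name `hydrogen_line_nm` is hypothetical, and the result is a vacuum wavelength that ignores fine structure.

```python
# Rough sketch: hydrogen emission-line wavelengths from the Rydberg formula,
#   1 / wavelength = R_H * (1 / n_lower**2 - 1 / n_upper**2)

RYDBERG_H_PER_M = 1.0967758e7  # Rydberg constant for hydrogen, in inverse metres


def hydrogen_line_nm(n_lower: int, n_upper: int) -> float:
    """Vacuum wavelength (nm) emitted when an electron drops from n_upper to n_lower."""
    if not 1 <= n_lower < n_upper:
        raise ValueError("need 1 <= n_lower < n_upper")
    inverse_wavelength_per_m = RYDBERG_H_PER_M * (1 / n_lower**2 - 1 / n_upper**2)
    return 1e9 / inverse_wavelength_per_m


if __name__ == "__main__":
    lyman_alpha = hydrogen_line_nm(1, 2)   # second-lowest level -> lowest level
    balmer_alpha = hydrogen_line_nm(2, 3)  # third-lowest level -> second-lowest level
    print(f"Lyman-alpha:  {lyman_alpha:.1f} nm (ultraviolet)")
    print(f"Balmer-alpha: {balmer_alpha:.1f} nm (red, visible)")
```

The Lyman-alpha result comes out near 122 nm, well into the ultraviolet, while Balmer-alpha lands near 656 nm, at the red end of the range human eyes can detect.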

If a human were somehow magically transported to this early time, they’d see the diffuse glow of starlight, as seen through the fog of neutral atoms. But wherever the atoms become ionized in the environs surrounding these young star clusters, there would be a pinkish glow coming from them: a mix of the white light from the stars and the red glow from the Balmer-alpha line. This signal is so strong that it’s visible even today, in environments like the Orion Nebula in the Milky Way.

After the Big Bang, the Universe was dark for millions upon millions of years; once the glow of the Big Bang faded away, there was nothing that human eyes could see. But when the first wave of star formation happens, growing in a cosmic crescendo across the visible Universe, starlight struggles to get out. The fog of neutral atoms permeating all of space absorbs most of it, but gets ionized in the process. Some of this reionized matter will become neutral again, emitting light when it does, including the 21-cm line over timescales of ~10 million years.

But it takes far more than the very first stars to truly turn on the lights in the Universe. For that, we need more than just the first stars; we need them to live, burn through their fuel, die, and give rise to so much more. The first stars aren’t the end; they simply mark the dawn of a new chapter in the cosmic story that gives rise to us.