prosthetics

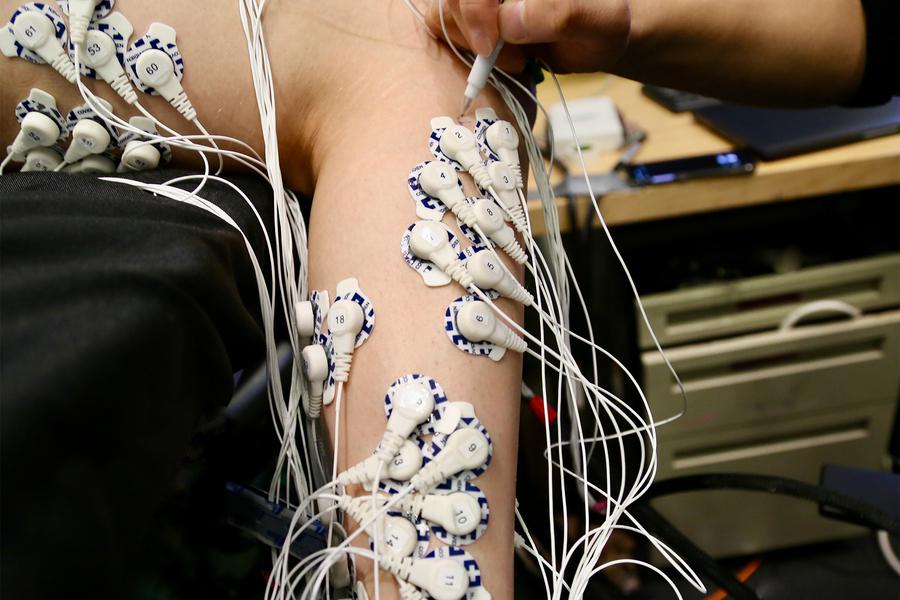

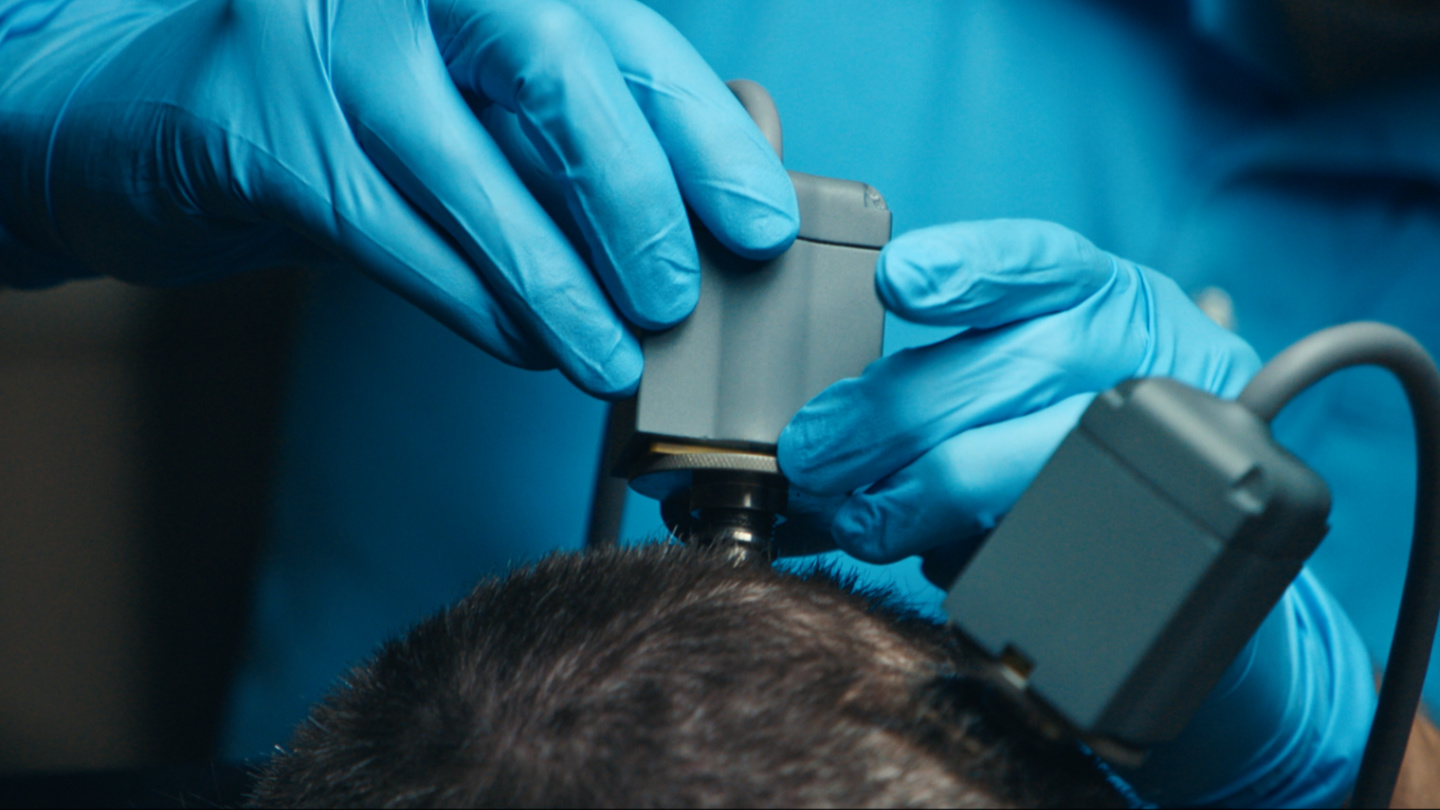

Reconnecting muscle pairs allows for better sensory feedback from the limb.

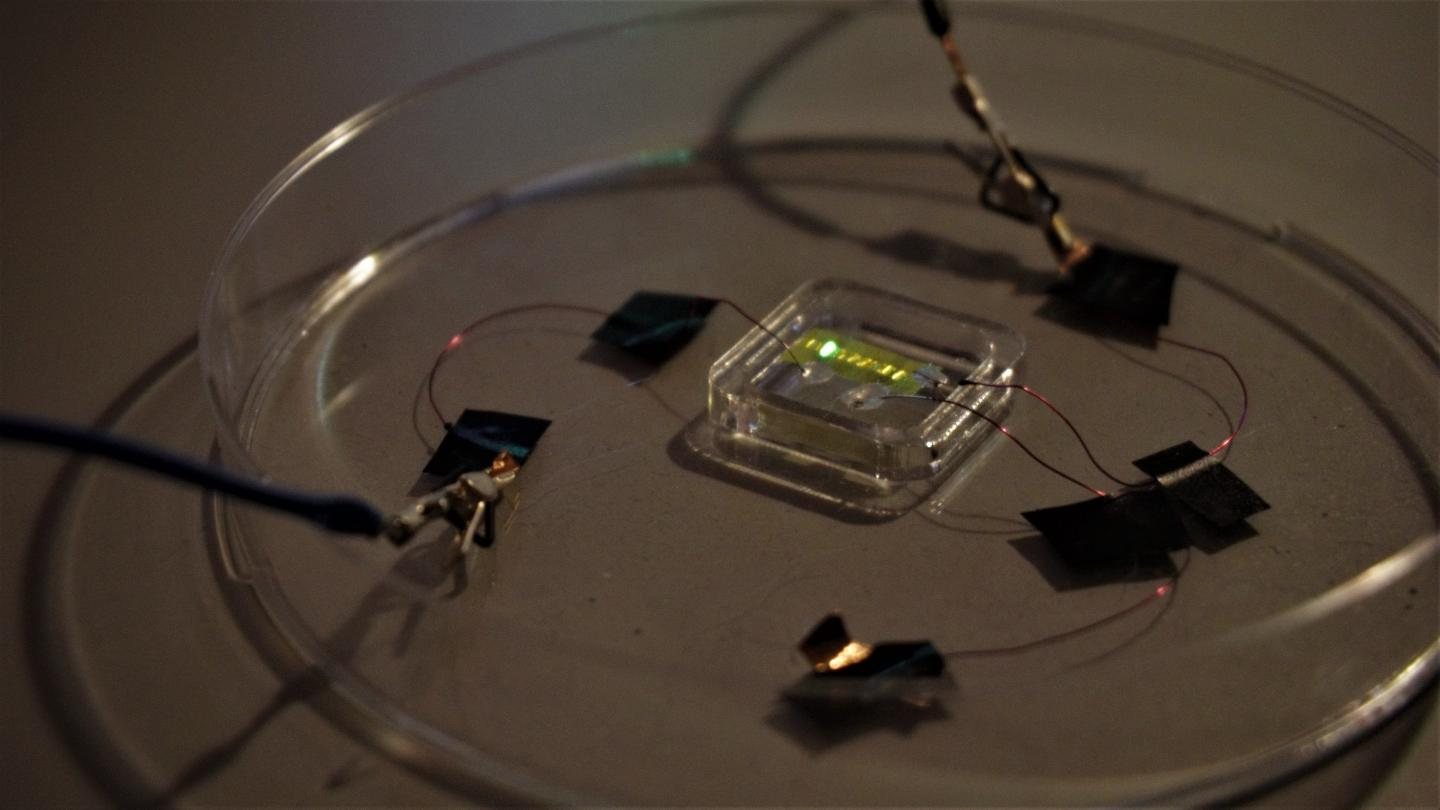

Light-emitting tattoos could indicate dehydration in athletes or health conditions in hospital patients.

An accident left this musician with one arm. Now he is helping create future tech for others with disabilities.

Duke University researchers might have solved a half-century-old problem.

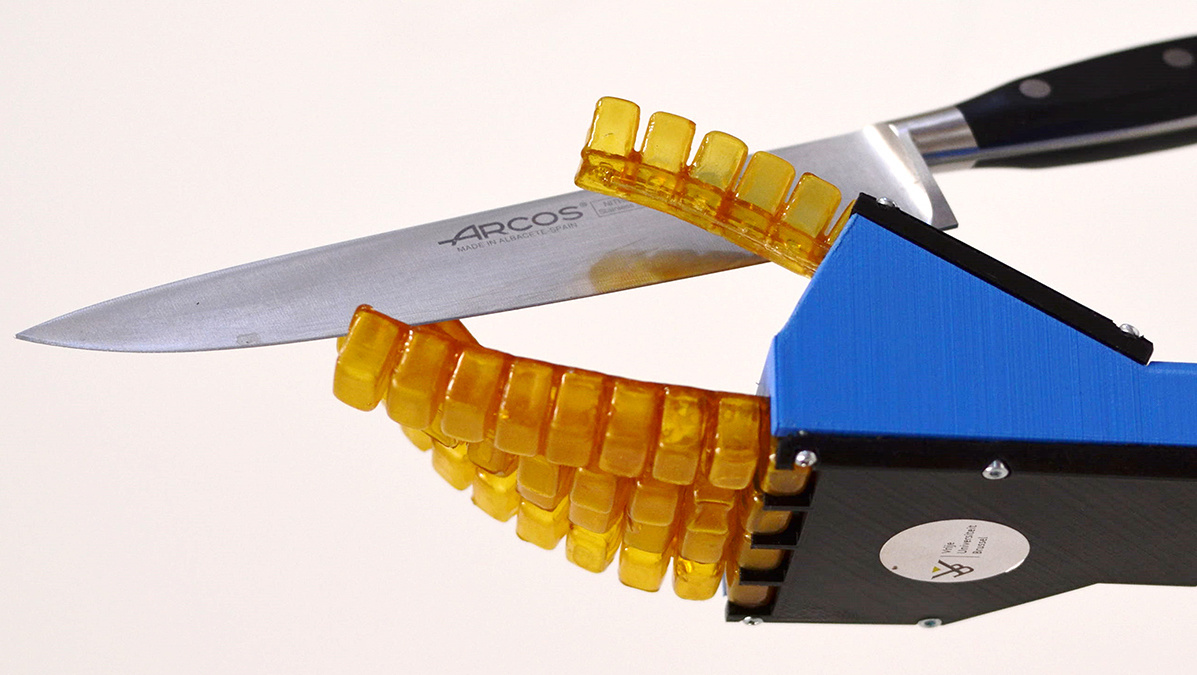

The old idea of running with springs on your feet gets a high-tech makeover.

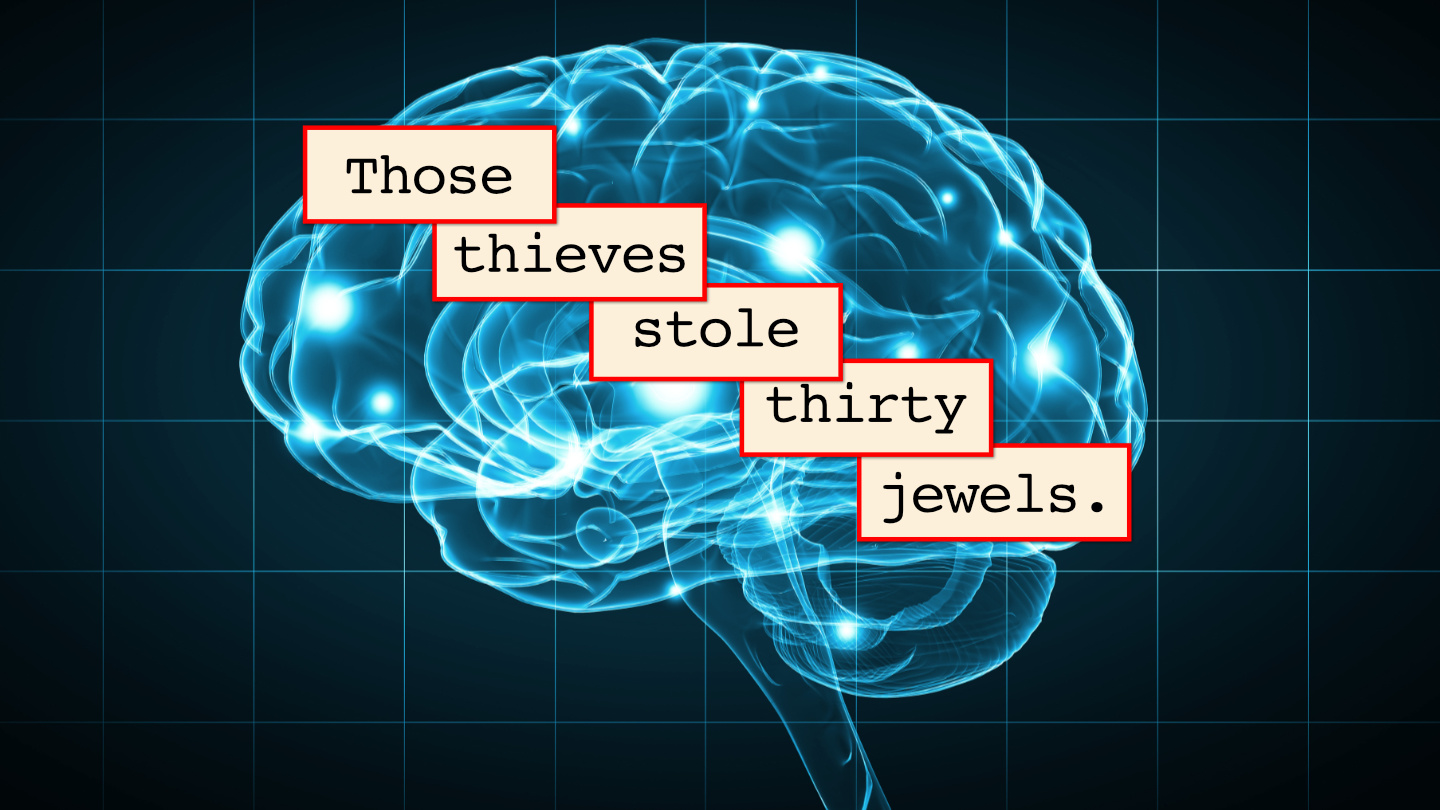

Researchers at UCSF have trained an algorithm to parse meaning from neural activity.

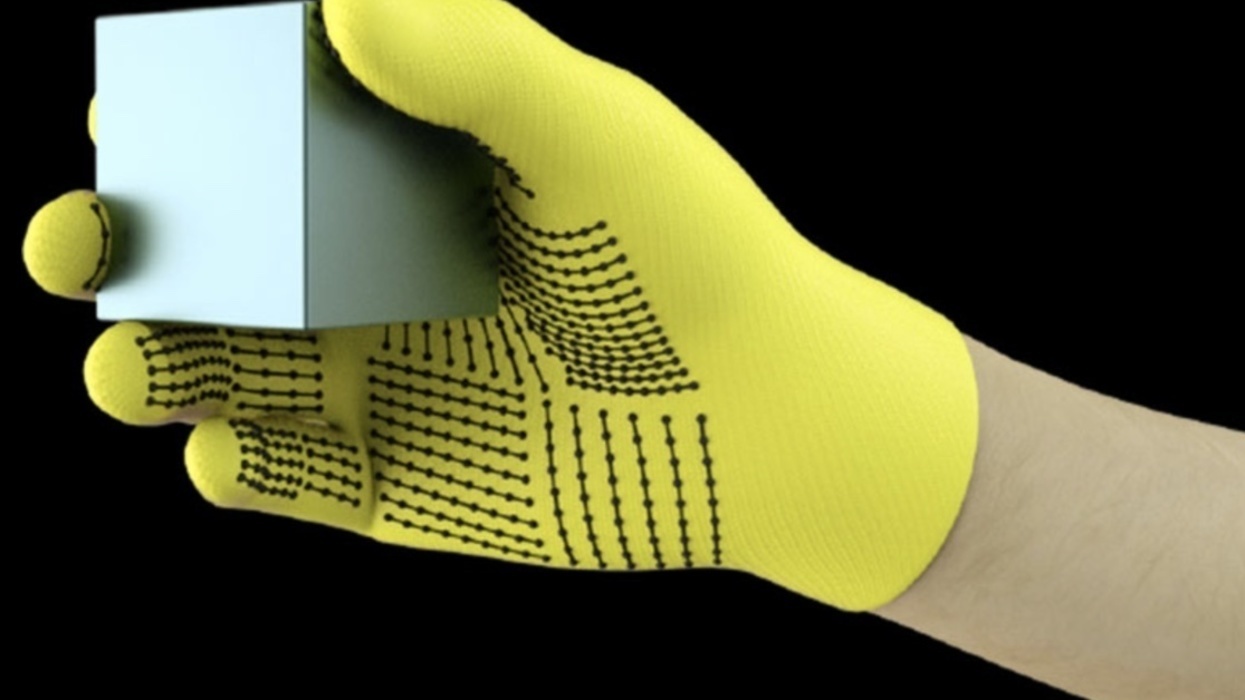

Our clever human hands may soon be outdone.

Neuroscience is working to conquer some of the human body's cruelest conditions: paralysis, brain disease, and schizophrenia.

A balanced discussion of the realities, the mythologies, and the concerns surrounding cutting-edge brain research.

What gives us color now may give rise to our cyborg future.

The researchers created a special polymer that lets robots repair themselves.