How safe is it to “stand on the shoulders of giants”?

- Progress depends on ideas created by people before us. If we did not “stand on the shoulders of giants,” as Newton put it, we could never reach higher.

- Moving forward relies on assuming certain things are true. At what point, then, does an assumption become epistemologically unjustified?

- Perhaps, as philosopher Michael Huemer argues, there are times when we ought to trust others’ testimony more than our own critical thinking skills.

When we open a book, we open the past. In reading, we enter the mind of someone from long ago, and often long dead. Literacy is what has allowed the transmission of knowledge through the ages. When I read about physics in a textbook, I am adding centuries of knowledge, experiment, and genius to my understanding. When I read philosophy, I am walking in the path of someone very wise, and very intelligent, who has asked the same questions I have. Books facilitate progress. They are the “saved games” of history that allow each generation to pick up and carry on.

But the philosophical question is this: How far should we challenge received ideas?

Shoulders of giants

Although he was by no means the first to use the expression, Isaac Newton wrote of his own brilliance that, “If I have seen further [than others] it is by standing on the shoulders of giants.” His point is that every technological, scientific, or medical discovery is only the latest brick in a huge edifice. The academics and inventors of today are pushing a ball already in motion. This, of course, makes sense. If we had to rediscover and corroborate every fact ever established, we would never have time to do anything new. Moving forward relies on assuming certain things are true.

At what point, then, does an assumption become epistemologically unjustified? When do we not simply stand on the shoulders of giants, but scrutinize what those giants have done? Most people, academics and laypeople alike, tend to adopt a kind of epistemic falsification. Essentially, this means that once we establish theories or hypotheses by experiment, induction, and observation, we accept them so long as they are not proven wrong. Established knowledge, then, is simply a litany of the best ideas left standing. So, as long as previous generations’ science (our giants) has not been shown to be false, we are reasonably justified in believing it. Put another way, if there are no good grounds to question received wisdom, then we can accept it.

The social foundation of knowledge

If you reflect for a moment on the huge encyclopedia of knowledge in your head, you’ll find that the overwhelming majority of things in there are based entirely on trust. It is trust in someone else, whether historical and distant or recent and close to you. You have not privately fact-checked most of what you know. Few reading this will have looked upon an atom, yet you assume atoms exist. You have not met Montezuma, yet you accept that he once lived. You have not seen the dark side of the moon, yet you accept that it is there.

Each of us, in almost all aspects of life, depends on other people’s knowledge. The only way to get by is to accept that we can assimilate others’ knowledge on the weight of testimony alone. The American philosopher Robert Audi referred to this as the “social foundation of knowledge,” where my knowledge depends on your knowledge. Audi’s point is that we must accept testimonial knowledge as “basic,” in the same way we do our sense impressions or reflections. But where sense impressions or personal experience is a “generative” form of knowledge (it feels like we create it), testimonial knowledge is transmissive. We pass it around like a ball or a current. In short, for Audi, we have to accept that at least some knowledge can be transmitted across peoples, nations, and ages (although not quite as efficiently as in The Matrix).

Uncritical thinking skills

In 2005, the philosopher Michael Huemer developed this point to give us an interesting idea: sometimes we shouldn’t use our “critical thinking” skills.

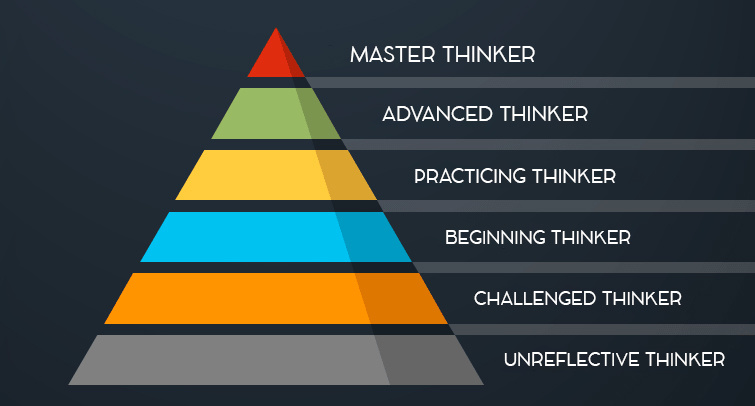

Suppose, for instance, a non-expert is trying to establish their opinion on a controversial issue. Huemer said they essentially have three strategies available to them:

- Credulity: you canvass the opinions of a number of experts and adopt the belief held by most of them.

- Skepticism: you form no opinion, and withhold judgement until the issue becomes clearer.

- Critical thinking: you gather the arguments and evidence that are available on the issue, from all sides, and assess them for yourself.

Huemer asks us a question: which of these strategies is the most reliable and most effective? His answer is credulity and skepticism.

If you use critical thinking, then there are two outcomes. Either you find the expert consensus is right, in which case you might as well have saved yourself years of study and gone with “credulity” anyway. Or, you find the majority of experts are wrong. But as Huemer writes, “It is reasonable that, in this case, the experts would nevertheless be right…any given expert would be no more likely than you are to be in error; even more clearly, the community of experts as a whole is far more likely to be correct.” Which is to say that, despite spending two hours on Reddit or reading a huge Twitter thread, you’re unlikely to be a pioneering, paradigm-shifting genius.

Huemer compares it to a sick person who visits the doctor. When you go to your physician, you accept what they say, not unquestioningly, but trustingly. What you don’t do is put in ten years of medical research to try and disprove their prescription of antibiotics. Similarly, “Critical Thinking, in [some] kinds of cases, may be unwise the same way that diagnosing one’s own illnesses is unwise.”

There are times, then, when we ought to rely more on credulity or skepticism. Critical thinking is simply too much, too hard, and too unrealistic for a lot of scenarios. We need to rely on experts. For most of our lives, we need others to do the work for us and to tell us the right of it. In short, we must trust that the giants beneath our feet are sturdy and true.

Jonny Thomson teaches philosophy in Oxford. He runs a popular account called Mini Philosophy and his first book is Mini Philosophy: A Small Book of Big Ideas.