Whose ethics should be programmed into the robots of tomorrow?

- Whether it’s driverless cars or personal robots, AI will have to make decisions that could significantly affect our lives.

- One way to instill ethics in AI is to have everyone vote on what robots should do in particular situations. But are values and morals really about what the majority want?

- When we make moral decisions, we each weigh up principles and certain values. It’s unlikely that we can recreate that in AI.

You are responsible for all of the things that you do. The good and the bad lie solely at your door. We each come swollen with opinions and weighed down by our values. It’s what makes the world such a vibrant and dynamic place. And it’s also why ethics is such a fascinating, conversation-worthy area of study. In any get-together, there are as many answers as there are people. In fact, it’s sometimes said that if you give two philosophers a problem, you get three answers.

Ethics is never a straightforward discipline. It’s disorderly and unruly, confused and confusing, with few answers and a great many questions. Yet, this is not how the world of technology and artificial intelligence works. If programming were a child, it’d be coloring neatly within the lines, using a finite selection of crayons, and with great efficiency. If ethics were a child, it would be scribbling nonsense on the wall with permanent marker, dancing about like some court jester.

So, in a world of machine learning, automated robots, and driverless cars, a world that demands answers, the philosophical question is: whose answers do we choose? Whose worldview should serve as the template for AI morality?

What would you do if…?

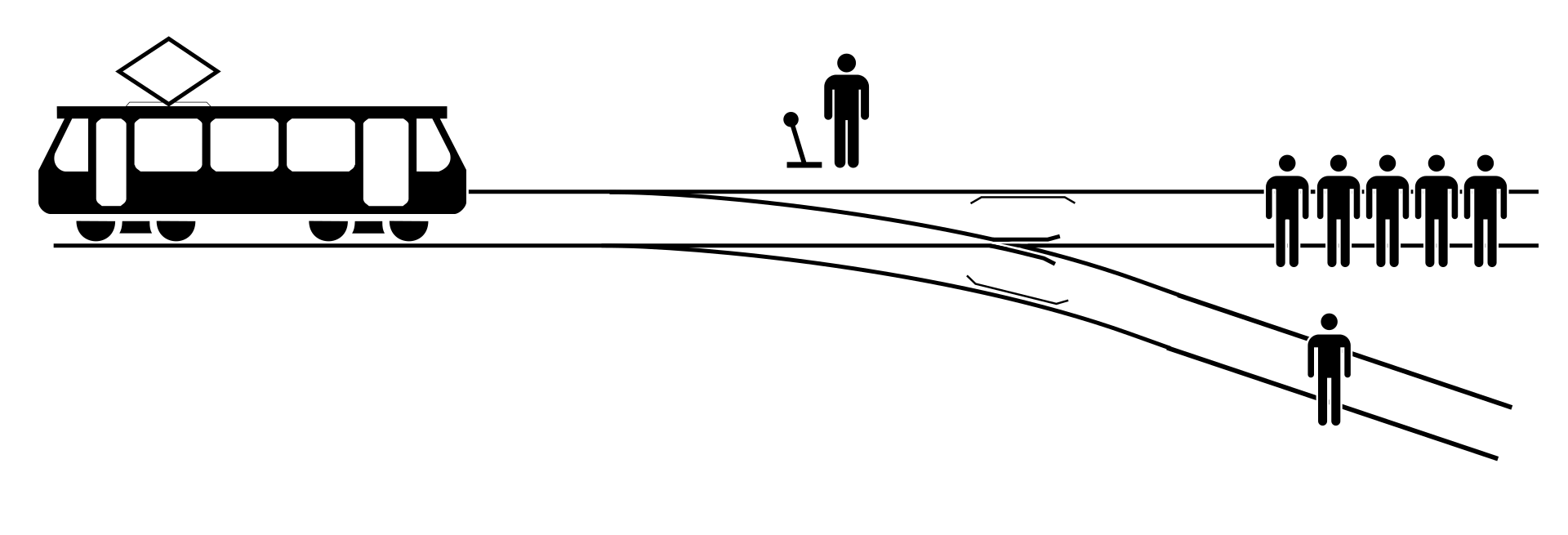

In 2018, an international team of philosophers, scientists, and data analysts gave us The Moral Machine Experiment. This was a collection of 40 million responses across 233 countries and territories about what people would do in this or that moral situation. The problems were modifications on the famous “trolley problem” — the thought experiment which asks whether it’s better to actively kill one person or passively let more than one person die. The team changed the scenario to include different ages, genders, professions, underlying health conditions, and so on.

What the answers revealed is that, unsurprisingly, most people favor humans over animals, many humans over fewer, and younger over older. What’s more, respondents tended to prefer saving women over men, doctors over athletes, and the fit over the unfit.

This is interesting enough. But what’s more interesting is what three of the study’s authors suggested afterward: that a democratization of ethical decisions would make good grounds for how to program the machines and robots of tomorrow.

The democratization of values for ethical AI

There are two issues with this.

First, how do we know that what we say we’ll do will match what we’ll actually do? In many cases, our intentions and actions are jarringly different; the millions of dollars wasted on unused gym memberships and the scattered ground of broken marriages are testament to that. In other situations, we simply don’t know what we would do. We can hypothesize about what we would have done in Nazi Germany, or with a gun to our head, or if our loved ones risked death. Still, we often don’t know what we’d do until we’re in that situation.

Second, it’s not clear that values and ethics are something that should be democratized. A lone, brave, good person is no less good for standing in a crowd of the bad. When the French political philosopher Alexis de Tocqueville toured America, he asked how it was that the US didn’t succumb to a “tyranny of the majority,” in which right and wrong are put to a vote. His conclusion was that the US had a foundation of Christian values that superseded all else. Religious or not, many of us today will probably agree that right and wrong are not determined by the majority.

The purpose of thought experiments

All of this poses wider questions about the purpose and limitations of thought experiments, or “what would you do?” scenarios, more generally. In a recent article, the philosopher Travis LaCroix asks what it is we are hoping to achieve by using ethical dilemmas. His argument is that thought experiments are deliberately designed to test our intuitions about a given situation. The answer we intuit then serves to bolster or critique some other, deeper philosophical analysis. As LaCroix puts it, “moral thought experiments serve to perturb the initial conditions of a moral situation until one’s intuitions vary. This can be philosophically useful insofar as we may be able to analyze which salient feature of the dilemma caused that variance.”

The point is that thought experiments are a tool in the service of other philosophical reasoning. They are not a basis for a moral position in and of themselves. To borrow terminology from science, thought experiments are the evidence that either corroborates or falsifies a certain hypothesis. They are not the hypothesis itself.

Let’s return to the example of driverless cars and the trolley problem. The questions posed in the likes of The Moral Machine Experiment (such as “would you kill an old person or let two children die?”) are designed to reveal an underlying, foundational ethical principle (such as “always protect human potential”). The issue with simply programming AI on the basis of individual answers to thought experiments is that those answers will be far too particular. That is not what ethics is about. An ethical choice requires values and general principles with which to weigh up the situations we encounter. We need rules to guide our decisions in new, everyday contexts.

Building ethical AI

There are a great many philosophical problems raised by emerging technology and AI. As machines become more and more integrated into our lives, they will inevitably face more and more ethical decisions. On any given day, you will make a great many ethical decisions, and a significant number of these will be your choice, based on your values. Do you give up a seat on a bus? Do you drink the last of the coffee in the office kitchen? Do you cut ahead of that person on the highway? Are you kind, honest, loyal, and loving?

There is no “perfect moral citizen” and there is no black and white when it comes to right and wrong. If there were, ethics would be a much less interesting area of study. So, how are we to square all this? Who should decide how our new machines behave? Perhaps we need not fear a “tyranny of the majority,” as de Tocqueville mused, but rather the tyranny of a tiny minority based in Silicon Valley or robotics factories. Are we happy to have their worldview and ethical values as the model for the brave new world ahead of us?

Jonny Thomson runs a popular Instagram account called Mini Philosophy (@philosophyminis). His first book is Mini Philosophy: A Small Book of Big Ideas.