Chapter 1: The hard problem of consciousness

SUSAN SCHNEIDER: Consciousness is the felt quality of experience. So when you see the rich hues of a sunset, or you smell the aroma of your morning coffee, you're having conscious experience. Whenever you're awake and even when you're dreaming, you are conscious. So consciousness is the most immediate aspect of your mental life. It's what makes life wonderful at times, and it's also what makes life so difficult and painful at other times.

No one fully understands why we're conscious. In neuroscience, there's a lot of disagreement about the actual neural basis of consciousness in the brain. In philosophy, there is something called the hard problem of consciousness, which is due to the philosopher David Chalmers. The hard problem of consciousness asks, why must we be conscious? Given that the brain is an information processing engine, why does it need to feel like anything to be us from the inside?

Chapter 2: Are we ready for machines that feel?

SUSAN SCHNEIDER: The hard problem of consciousness isn't quite the issue we want to get at when we're asking whether machines are conscious. The problem of AI consciousness simply asks: could the AIs that we humans develop one day, or even AIs that we can imagine in our mind's eye through thought experiments, be conscious beings? Could it feel like something to be them?

The problem of AI consciousness is different from the hard problem of consciousness. In the case of the hard problem, it's a given that we're conscious beings. We're assuming that we're conscious, and we're asking, why must it be the case? The problem of AI consciousness, in contrast, asks whether machines could be conscious at all.

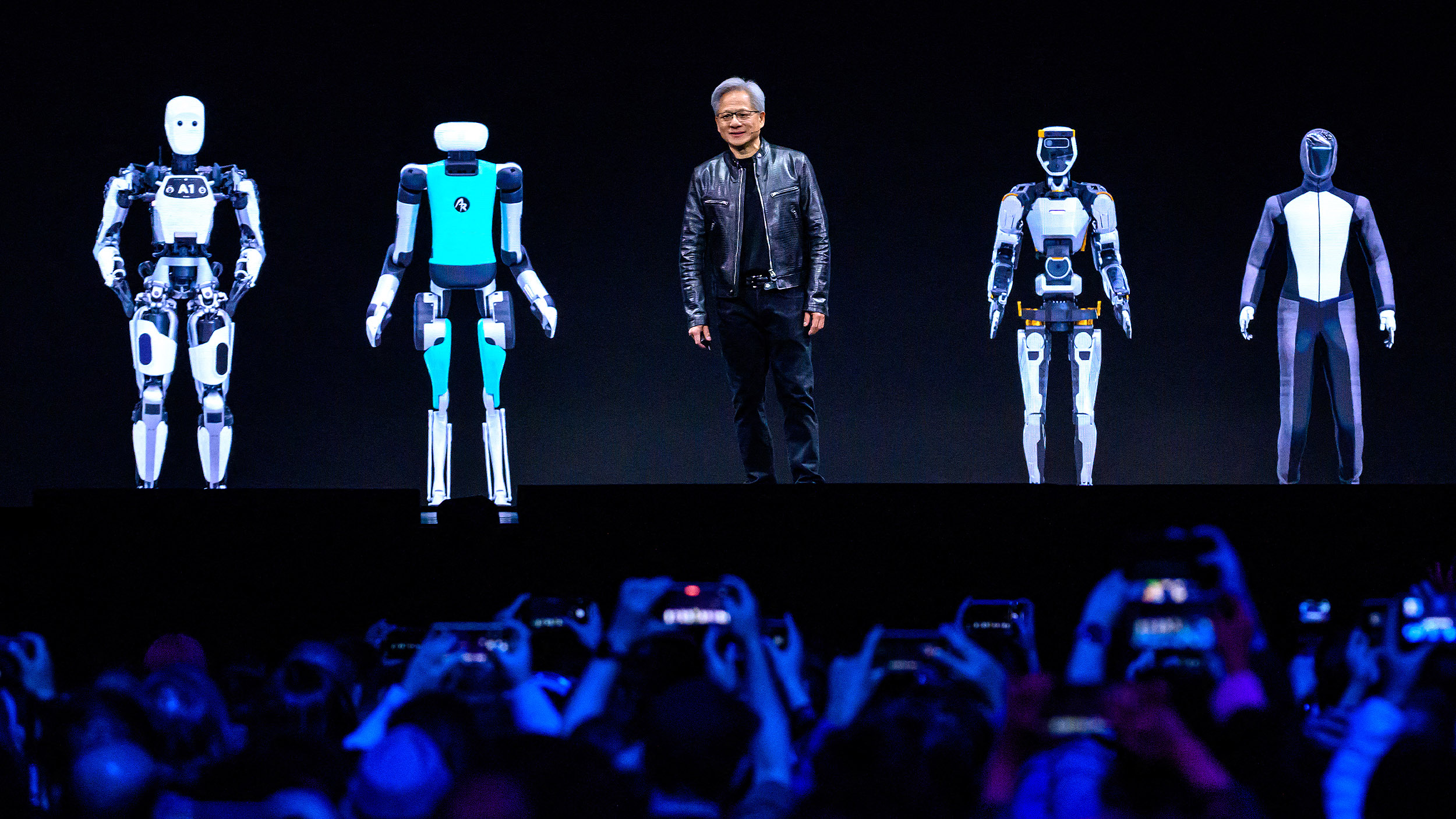

So why should we care about whether artificial intelligence is conscious? Well, given the rapid-fire developments in artificial intelligence, it wouldn't be surprising if within the next 30 to 80 years, we start developing very sophisticated general intelligences. They may not be precisely like humans. They may not be as smart as us. But they may be sentient beings. If they're conscious beings, we need ways of determining whether that's the case. It would be awful if, for example, we sent them to fight our wars, forced them to clean our houses, made them essentially a slave class. We don't want to make that mistake. We want to be sensitive to those issues. So we have to develop ways to determine whether artificial intelligence is conscious or not.

It's also extremely important because as we try to develop general intelligences, we want to understand the overall impact that consciousness has on an intelligent system. Would the spark of consciousness, for instance, make a machine safer and more empathetic? Or would it be adding something like volatility? Would we be, in effect, creating emotional teenagers that can't handle the tasks that we give them? So in order for us to understand whether machines are conscious, we have to be ready to hit the ground running and actually devise tests for conscious machines.

Chapter 3: Playing God: Are all machines created equal?

SUSAN SCHNEIDER: In my book, I talk about the possibility of consciousness engineering. So suppose we figure out ways to devise consciousness in machines. It may be the case that we want to deliberately make sure that certain machines are not conscious. For example, consider a machine that we would send to dismantle a nuclear reactor. We'd quite possibly be sending it to its death. Or consider a machine that we'd send to a war zone. Would we really want to send conscious machines into those circumstances? Would it be ethical?

You might say, well, maybe we can tweak their minds so they enjoy what they're doing or don't mind sacrifice. But that raises some really deep engineering issues that are actually ethical in nature, issues that go back to Brave New World, for example, where humans were genetically engineered and took a drug called soma so that they would want to live the lives they were given. So we have to really think about the right approach. It may be the case that we deliberately design machines for certain tasks that are not conscious.

On the other hand, should we actually be capable of making some machines conscious, it may be that humans want conscious AI companions. For example, suppose that humans want elder care androids, which are actually under development in Japan today. As you're browsing the android shop, thinking about the kind of android you want to take care of your elderly grandmother, you decide you want a sentient being who would love her. You feel that is what best does her justice. And in other cases, maybe humans actually want relationships with AIs. So there could be a demand for conscious AI companions.

Chapter 4: Superintelligence over sentience

SUSAN SCHNEIDER: In Artificial You, I actually offer a 'wait and see' approach to machine consciousness. I urge that we just don't know enough right now about the substrates that could be used to build microchips. We don't even know what microchips will be used in 10 years, let alone in 30 to 50 years. So we don't know enough about the substrate. We don't know enough about the architecture of the artificial general intelligences that could be built. We have to investigate all these avenues before we conclude that consciousness is an inevitable byproduct of any sophisticated artificial intelligence that we design.

Further, one concern I have is that consciousness could be outmoded by a sophisticated AI. So consider a superintelligent AI, an AI which, by definition, could outthink humans in every respect: social intelligence, scientific reasoning, and more. A superintelligence would have vast resources at its disposal. It could be a computronium built up from the resources of an entire planet, with a database that extends even beyond the reaches of the human World Wide Web.

So what would be novel to a superintelligence that would require slow conscious processing? The thing about conscious processing in humans is that it's particularly useful when it comes to slow, deliberative thinking. Consciousness in humans is associated with slow mental processing, with working memory and attention. And there are important limitations on the number of variables we can even hold in our minds at a given time. I mean, we're very bad at working memory. We can barely remember a phone number for five minutes before we write it down. That's how bad our working memory systems are.

So if we are using consciousness for these slow, deliberative elements of our mental processing, and a superintelligence, in contrast, is an expert system with a vast intellectual domain that encompasses the entire World Wide Web and is lightning fast in its processing, why would it need slow, deliberative focus? In short, a superintelligent system might outmode consciousness because consciousness is slow and inefficient. So the most intelligent systems may not be conscious.

Chapter 5: Enter: Post-biological existence

SUSAN SCHNEIDER: Given that a superintelligence may outmode consciousness, we have to think about the role that consciousness plays in the evolution of intelligent life. Right now, NASA and many astrobiologists project that there could be life throughout the universe, and they've identified exoplanets, planets that are hospitable, in principle, to intelligent life. That is extremely exciting. But the origin of life right now is a matter of intense debate in astrophysics. And it may be that all of these habitable planets that we've identified are actually uninhabited.

But on the assumption that there's lots of intelligent life out there, you have to consider that, should these life forms survive into technological maturity, they may well be creating their own artificial intelligences. And they eventually may upgrade their own brains so that they become cyborgs. They become post-biological beings. Eventually, they may even have their own singularities.

If that's the case, intelligence may go from being biological to post-biological. And as I stress in my project with NASA, these highly sophisticated post-biological beings may themselves outmode consciousness. Consciousness may be a blip, a momentary flowering of experience in the universe at a point in the history of life when there is an early technological civilization. But then, as these civilizations have their own singularities, consciousness may, sadly, leave those biological systems.

Chapter 6: The challenge: Maximizing conscious experience

SUSAN SCHNEIDER: That may sound grim, but I bring it up really as a challenge for humans. I believe that understanding how consciousness and intelligence interrelate could help us make better decisions about how we enhance our own brains. On my view, we should enhance our brains in a way that maximizes sentience, that allows conscious experience to flourish. And we certainly don't want to become expert systems whose experience has no felt quality. So the challenge for a technological civilization is to think not just technologically but philosophically, to think about how these enhancements impact our conscious experience.