Artificial You: AI and the Future of Your Mind

SUSAN SCHNEIDER: So the ACT test actually looks at the AI to see if it has the felt quality of experience. So we've noted that consciousness is that inner feel. So it actually probes the AI by asking questions that are designed to determine whether it feels like something to be the AI.

And I actually published the questions in my book, some of the questions. And they're questions that are actually philosophical in nature in some cases, or even that are inspired by religious traditions. So one example is you would ask the machine about whether it understands the idea of reincarnation. So even if you don't agree with reincarnation, you can vaguely understand the idea of your mind returning.

Similarly, you can understand the idea portrayed in the film Freaky Friday of swapping a body with somebody else. You can also understand the idea of the afterlife. Even if you disagree with these ideas, and you think that they're ultimately not well founded, the point here is that the reason that we can think of these things at all, the reason we can entertain these thought experiments is that we're sentient beings. It would be very difficult to understand what these thought experiments were getting at if we weren't conscious beings.

Similarly, think of a machine that is at the R&D stage. So it hasn't been spoon fed any information about human consciousness whatsoever. If at that point, we detect that it grasps these questions, it understands the idea of the mind existing separately from the body or the system or the computer, then there's reason to believe that it's a conscious being. Now this being said, the ACT test only applies in very circumscribed cases.

So first off, you can't pre-program answers into the machine. So it would be inappropriate to run the test on a system like, say, Hanson Robotics' Sophia, which has stock answers that she goes through when she's on TV shows. I've done TV shows with Sophia. I've noticed that she uses the same answers. They're programmed in. So that wouldn't do. Also, you can't have a deep learning system that has been spoon fed data about how to go about answering these sorts of questions. Also, the system has to have linguistic capacities. It has to have the ability to answer the questions.

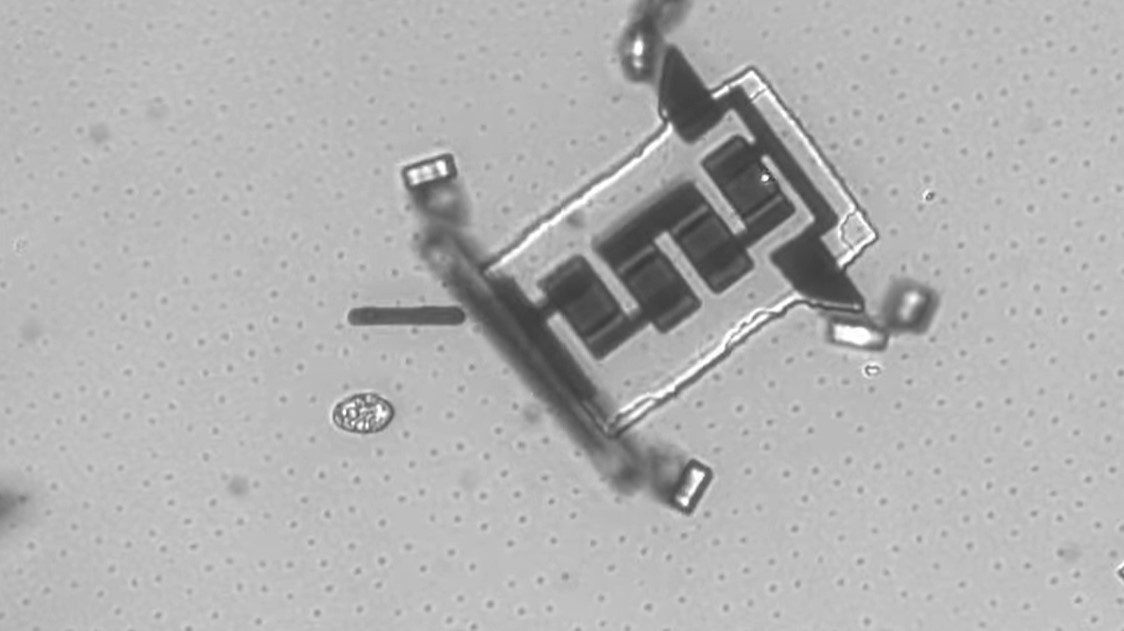

Another test for machine consciousness is the chip test. The chip test actually involves humans.

So imagine a situation where you have an opportunity to upgrade your mind, so you put a microchip in your head. Now suppose you are about to replace part of the brain that underlies conscious experience. If you did this, and you didn't feel any different, and if you were checked carefully by neuroscientists and there were no changes in the felt quality of your mental life, if you didn't turn into one of those cases that Oliver Sacks talks about, for example, in his books with strange deficits of consciousness, then we have reason to believe that microchips might be the right stuff for consciousness.

On the other hand, suppose the chips don't work. So you go back year after year to see if there have ever been new developments by the chip designers. And you try various chips. And after 10 years of trying, they throw their hands up and they tell you, you know, it doesn't look like we can devise a microchip of any sort. It doesn't have to be silicon. It could be carbon nanotubes, whatever it is that the chip designers are using. None of those chips successfully underlie conscious experience. We just do find deficits. If that's the case, we have reason to conclude that microchips may not be the right stuff. In that case, I consider that to be strong evidence that the machines that we build based on those substrates are not conscious.

On the other hand, to go back to a situation where the chips work, that indicates that we have to test machines made of that sort of substrate and that type of chip design very carefully. They, in fact, could be conscious beings. But it doesn't mean that they are definitely conscious. I stress that in the book. It could be the case that their architectural design does not feature conscious components. So a positive result on the chip test just indicates, in principle, machines could be conscious if they have the right architectural features. So for instance, humans have features like working memory and attention and brain stems that are very important to the neural basis of conscious experience. So if there is an analog in machines, and the machines are built with those sorts of microchips, it may be that they're conscious machines.