How do self-driving cars know their way around without a map?

Self-driving cars are coming down the pike, and there’s a lot of excitement and fear among the general public about them. Experts say you should be seeing them on the road here and there by 2020, and that they’ll be the majority of the vehicles out there by 2040. One argument in their favor: 90% of all traffic fatalities are due to human error, according to the US National Highway Traffic Safety Administration. But autonomous vehicles are not without controversy.

In March of this year, a woman in Arizona was struck and killed by one of Uber’s self-driving cars while she was crossing the street. Most experts say that this incident is an anomaly. Nidhi Kalra, a roboticist at the RAND Corporation, told Wired that the development of this technology is moving incredibly fast, particularly the software component. “With software updates,” she said, “there’s a new vehicle every week.”

This brings up an interesting question: how do self-driving cars navigate? An important thing to note is that there are many, many companies breaking into the market: Apple, Google, Tesla, Uber, Ford, GM, and more. They each have their own systems, although most work more or less the same way.

Big, big data

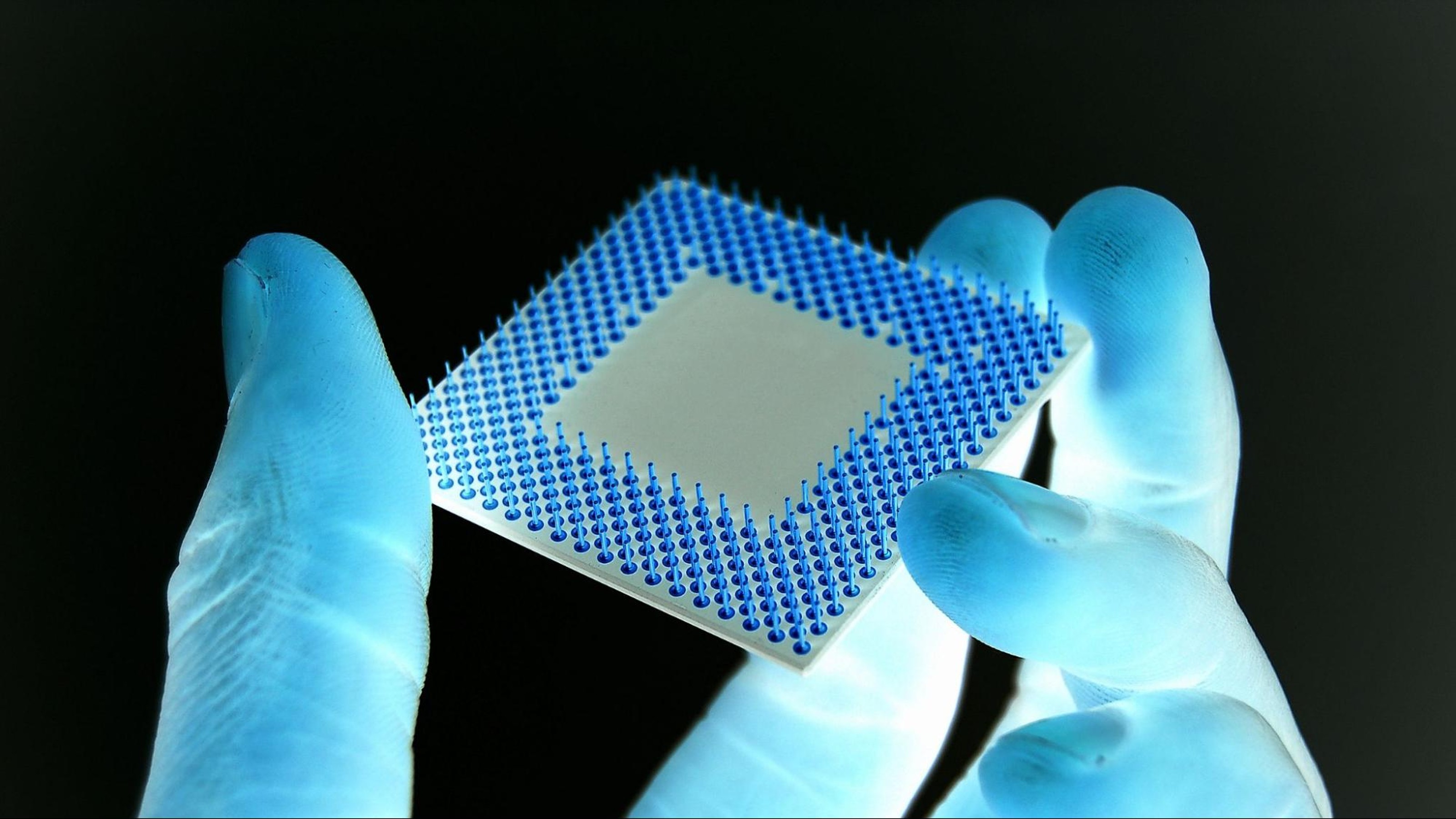

In a sense, advances in the self-driving vehicle industry are about dealing with huge amounts of data. The hardware in self-driving cars generates tons of it since it’s vital to know exactly where a vehicle is and what’s around it for safety.

Sensors in a vehicle may include:

- LiDAR (“light detection and ranging”), which bounces anywhere from 16 to 128 laser beams off approaching objects to assess their distance and hard/soft characteristics and to generate a point cloud of the environment.

- GPS, which pinpoints the car’s position in the physical world to within one inch, at least in theory.

- IMU (“inertial measurement unit”), which tracks a vehicle’s attitude, velocity, and position.

- Radar, which detects other objects and vehicles.

- Camera, which captures the environment visually. Analyzing everything a camera sees requires a powerful computer, so work is being done to reduce this workload by directing its attention only to the relevant objects in view.
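To make the LiDAR item above a little more concrete, here is a minimal sketch of how a laser return could be turned into a point in a point cloud. It assumes the textbook time-of-flight relationship (the pulse travels out and back, so distance is half the round trip). The function names and the sample returns are hypothetical, not taken from any particular sensor.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Distance to the reflecting surface; the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert a range plus beam angles into an (x, y, z) point around the sensor."""
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        range_m * math.sin(elevation),
    )


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation)
returns = [(2.0e-7, 0.0, 0.0), (1.0e-7, 90.0, 0.0), (3.0e-7, 45.0, -10.0)]

point_cloud = [
    to_cartesian(range_from_time_of_flight(t), az, el) for t, az, el in returns
]

for point in point_cloud:
    print(tuple(round(coordinate, 2) for coordinate in point))
```

A real unit fires many beams while spinning or scanning, so the same conversion would run on an enormous number of returns every second, which is part of why the data volumes get so large.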

The challenge is taking in all this information, blending it, and processing it fast enough to be able to make split-second decisions, like whether or not to duck into another lane when an accident seems imminent.
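As a rough illustration of what “blending” can mean, the sketch below combines two noisy estimates of the same quantity by trusting the less noisy one more. This is inverse-variance weighting, the one-dimensional core of a Kalman filter update. It is a simplified stand-in, not a description of any company’s actual fusion pipeline, and the numbers are made up.

```python
def fuse(estimate_a: float, variance_a: float,
         estimate_b: float, variance_b: float):
    """Blend two estimates of one quantity, weighting each by its confidence.

    A smaller variance means a more trusted sensor, so it gets more weight.
    """
    weight_a = variance_b / (variance_a + variance_b)
    fused = weight_a * estimate_a + (1.0 - weight_a) * estimate_b
    fused_variance = (variance_a * variance_b) / (variance_a + variance_b)
    return fused, fused_variance


# Hypothetical readings of the car's position along a road, in meters:
gps_position, gps_variance = 10.0, 4.0   # noisier source
imu_position, imu_variance = 12.0, 1.0   # dead-reckoned, more trusted here

position, variance = fuse(gps_position, gps_variance, imu_position, imu_variance)
print(position, variance)
```

The fused variance is always smaller than either input variance, which is the basic reason combining sensors beats relying on any single one.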

Because all of this equipment generates so much data, and because it’s so expensive (a full sensor rig can easily cost upward of $100k per vehicle), maps for self-driving cars depend on specially equipped mapping vehicles. The maps they produce, which are not maps as we know them but complicated datasets made up of coordinates, are ultimately loaded into consumer cars. Those cars navigate by continually using their own sensor arrays to compare the map to the actual surrounding environment and work out where the car can safely go.
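The idea of comparing a stored map to what the sensors currently see can be sketched as a small search problem. The toy example below assumes the car’s heading is already known, that landmarks are simple 2D points, and that a rough position guess (say, from GPS) is available. It then tries nearby positions and keeps the one where the observed landmarks line up best with the map. All names and values are hypothetical, and production systems use far more sophisticated matching.

```python
import math


def alignment_error(map_points, observed_points, position):
    """Sum of distances from each observed landmark, placed at the candidate
    position, to its nearest landmark in the map."""
    px, py = position
    total = 0.0
    for ox, oy in observed_points:
        wx, wy = ox + px, oy + py  # landmark in map coordinates
        total += min(math.hypot(wx - mx, wy - my) for mx, my in map_points)
    return total


def localize(map_points, observed_points, guess, search_radius=2.0, step=0.5):
    """Grid-search around a rough guess for the best-aligning position."""
    steps = int(round(search_radius / step))
    candidates = [
        (guess[0] + i * step, guess[1] + j * step)
        for i in range(-steps, steps + 1)
        for j in range(-steps, steps + 1)
    ]
    return min(
        candidates,
        key=lambda c: alignment_error(map_points, observed_points, c),
    )


# Landmarks stored in the map (map coordinates, meters)
map_points = [(10.0, 5.0), (12.0, 9.0), (15.0, 4.0)]
# The same landmarks as seen from the car (relative to the car)
observed_points = [(7.0, 3.5), (9.0, 7.5), (12.0, 2.5)]

print(localize(map_points, observed_points, guess=(2.0, 1.0)))
```

In this made-up scene the rough guess is off by about a meter, and the search recovers the position at which every observed landmark lands exactly on a mapped one.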

The mapping problem

Obviously, high-quality, accurate and up-to-date maps for these cars are a critical piece of the puzzle. But producing them is hard. Most companies developing maps for self-driving vehicles currently use a system that works fine for research and development but is probably prohibitively expensive and time-consuming for mass production.

The typical strategy

Each car must, of course, carry the full array of sensors. In addition, just managing all of that data requires a powerful, desktop-grade-or-better processor and lots of storage space on a hard drive, usually in the car’s trunk. How big? A map of San Francisco alone requires 4 terabytes.

The process for turning all that data into a map that a passenger car can use, called a “base map,” involves driving to a data center, carrying the drive inside (or shipping it), getting the data off it, processing the data, and returning the drive to the car. There are three big issues with this:

- The process takes so long that the critical need to keep base maps current is difficult if not impossible to meet.

- Cars can only drive within the areas for which they have base maps, so improvising a destination on the fly is impossible — new base maps are much too large to upload or download while on the road.

- The hardware and labor involved are too expensive to multiply by millions of cars.

Another idea

One company, Civil Maps, has developed what may be a more realistic solution to the mapping problem. The software in their mapping vehicles analyzes the driving environment within the car, extracting the relevant details via machine learning and generating what the company calls a “Fingerprint Base Map™” (FBM). This can reduce, for example, that 4 TB San Francisco map to 400 MB, a roughly 10,000-fold reduction. The “fingerprint” name fits, since the system uses technology similar to what Shazam uses for identifying songs. It is nonetheless precise, tracking the vehicle’s location to within 10 centimeters and in what’s called “six degrees of freedom”: the car’s position in three dimensions (location and altitude) plus its attitude relative to the road.
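The article does not describe how Civil Maps builds its fingerprints, so the following is only a toy analogy to the Shazam comparison. Audio fingerprinting reduces a recording to compact signatures of paired features and looks them up in an index. The sketch applies the same idea to hypothetical roadside landmarks, using the landmark types and the quantized distance between each pair as the signature. Every name here is an assumption made for illustration.

```python
import math
from collections import Counter
from itertools import combinations


def fingerprints(landmarks, bin_size_m=0.5):
    """Reduce landmarks (kind, x, y) to a set of compact pair signatures.

    Each signature is the two landmark kinds plus their quantized distance,
    so it does not depend on where the observer is standing.
    """
    prints = set()
    for (kind_a, xa, ya), (kind_b, xb, yb) in combinations(landmarks, 2):
        distance_bin = round(math.hypot(xa - xb, ya - yb) / bin_size_m)
        prints.add((tuple(sorted((kind_a, kind_b))), distance_bin))
    return prints


def build_index(tiles):
    """Map each signature to the set of map tiles that contain it."""
    index = {}
    for tile_id, landmarks in tiles.items():
        for signature in fingerprints(landmarks):
            index.setdefault(signature, set()).add(tile_id)
    return index


def best_matching_tile(index, observed_landmarks):
    """Vote for the tile sharing the most signatures with the observation."""
    votes = Counter()
    for signature in fingerprints(observed_landmarks):
        for tile_id in index.get(signature, ()):
            votes[tile_id] += 1
    return votes.most_common(1)[0][0] if votes else None


# Hypothetical map tiles, each a handful of labeled landmarks
tiles = {
    "tile_a": [("sign", 0, 0), ("pole", 3, 4), ("light", 10, 0)],
    "tile_b": [("sign", 0, 0), ("pole", 1, 1), ("light", 2, 7)],
}
index = build_index(tiles)

# The landmarks of tile_a as seen from a car parked at (5, 2)
observed = [("sign", -5, -2), ("pole", -2, 2), ("light", 5, -2)]
print(best_matching_tile(index, observed))
```

The point of the analogy is size: the index stores a few small signatures per location rather than the raw sensor data. With noisy measurements, distances near a bin boundary can fall into different bins, which is why voting across many signatures matters.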

The small size of the FBM means that an area’s base map can be downloaded as needed, even over current cellular networks, so drivers are freed to go wherever they want. (Civil Maps says they can easily fit a whole continent’s worth of maps into a car.) Current conditions are uploaded to the company’s cloud, and crowdsourcing produces a continually updated base map. The solution is also much less expensive: it requires less storage space and allows the use of a much cheaper onboard computer, in part because the fingerprints eliminate the need to analyze the camera’s entire feed, letting the system recognize and pay attention to just what matters.
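A quick back-of-the-envelope calculation shows why the size difference matters for downloading on the road. The sketch uses the 4 TB San Francisco figure quoted earlier and the 400 MB fingerprint map. The 20 Mbit/s cellular throughput is purely an assumption for illustration, since real-world speeds vary widely.

```python
TB = 10**12  # bytes, decimal units
MB = 10**6   # bytes, decimal units

ASSUMED_CELLULAR_MBPS = 20  # assumption: sustained megabits per second


def download_seconds(size_bytes: int, megabits_per_second: float) -> float:
    """Time to transfer size_bytes at the given sustained throughput."""
    return (size_bytes * 8) / (megabits_per_second * 1_000_000)


conventional_seconds = download_seconds(4 * TB, ASSUMED_CELLULAR_MBPS)
fingerprint_seconds = download_seconds(400 * MB, ASSUMED_CELLULAR_MBPS)

print(f"Conventional base map: {conventional_seconds / 86_400:.1f} days")
print(f"Fingerprint base map:  {fingerprint_seconds / 60:.1f} minutes")
```

Under that assumed throughput, the conventional map would take roughly 18.5 days to download, while the fingerprint map would take under three minutes.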

Hitting the road

A major roadblock the industry is struggling with now is getting a handle on the special maps that self-driving cars need, so that vehicles neither have to carry around a supercomputer nor crash into things. Smart-enough AI within a car to avoid accidents is obviously another key piece of the puzzle.

That’s self-driving cars as they stand now. With such rapid advancements being reported all the time though, one wonders what capabilities future iterations might possess.