New hypothesis argues the universe simulates itself into existence

This article has been retracted.

Credit: Quantum Gravity Institute

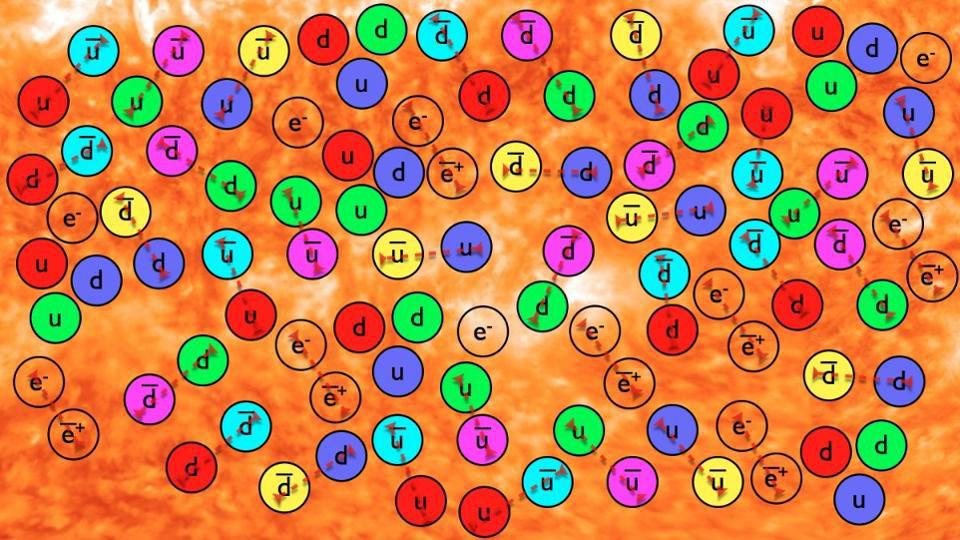

Tetrahedrons representing the quasicrystalline spin network (QSN), the fundamental substructure of spacetime, according to emergence theory.

The content of this article has been removed because it does not meet Big Think’s editorial standards.
