Ask Ethan: What could an array of space telescopes find?

- Our view of the Universe changed as never before when we started putting telescopes in space, revealing galaxies, quasars, and objects from the Universe’s deepest recesses.

- Yet even our modern space telescopes, spanning the electromagnetic spectrum from gamma-rays and X-rays through the ultraviolet, optical, infrared, and microwave, have their limits.

- If we had an array of space telescopes distributed all throughout the Solar System, how much more could we see and know? The answer may surprise you.

Out there in the deep, dark recesses of space are mysteries just waiting to be discovered. While the advances we’ve made in telescopes, optics, instrumentation, and photon efficiency have brought us unprecedented views of what’s out there, arguably our largest advances have come from going to space. Viewing the Universe from Earth’s surface is like looking out at the sky from the bottom of a swimming pool; the atmosphere itself distorts or completely obscures our views, depending on what wavelength we’re measuring. But from space, there’s no atmospheric interference at all, enabling us to see details that would be completely inaccessible otherwise.

Although Hubble and JWST are the two best-known examples, they’re simply one-off observatories. If we had an array of them, instead, how much more could we know? That’s the question of Nathan Trepal, who writes in to ask:

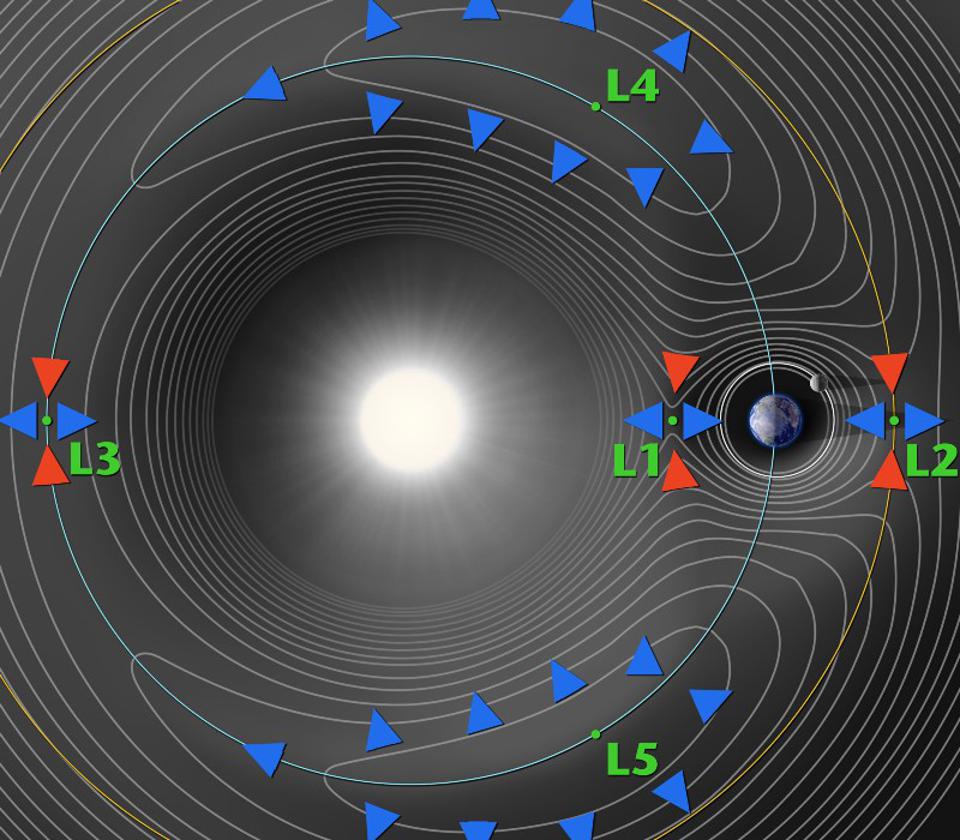

“[W]hat could be seen with an array of telescopes across the solar system? Some scenarios I was thinking would be, a telescope at the L3, L4, and L5 Lagrange points for each of the planets from Earth out to Neptune… What could be seen? Or how big would each telescope need to be to see a rocky exoplanet 1AU from a star like our sun?”

It’s not simply a dream, but a well-motivated scientific option to consider. Here’s what we could learn.

The limits of a monolithic telescope

Whenever you look at the Universe in any wavelength of light, you’re collecting photons and transmitting them into an instrument that can efficiently make use of them to reveal the shape, structure, and properties of the objects that both emit and absorb that light. There are a few properties that are universal to astronomical endeavors such as these, including:

- resolution/resolving power,

- sensitivity/faintness/light-gathering power,

- and wavelength range/temperature.

While the specifications of your instruments determine things like spectral resolution (i.e., how narrow your energy “bins” are), photon efficiency (what percent of your collected photons are converted to useful data), field-of-view (i.e., how much of the sky you can view at once), and noise floor (any inefficiencies produce noise in the instrument, which the collected signal must rise above in order to detect and characterize an object), the properties of resolution, sensitivity, and wavelength range are inherent to the telescope itself.

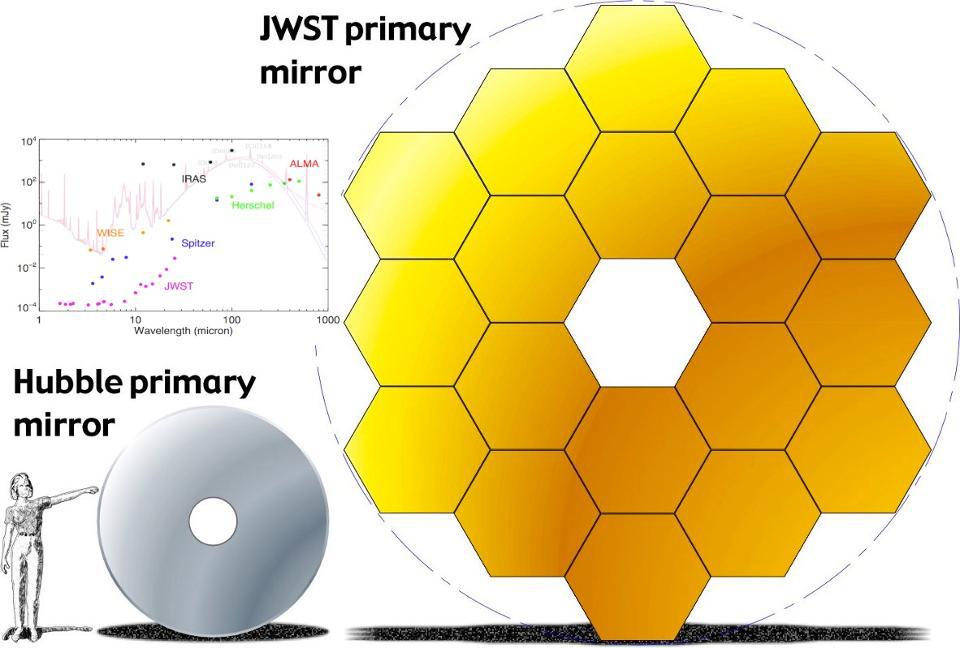

Your telescope’s resolution, or how “small” of an angular size on the sky it’s capable of resolving, is determined by how many wavelengths of the particular light you’re looking at fit across your telescope’s primary mirror. This is why observatories optimized for very short wavelengths, like X-rays or gamma-rays, can be very small and still see objects at very high resolution, and why JWST’s near-infrared (NIRCam) instrument can see objects at higher resolution than its mid-infrared (MIRI) instrument.
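To put numbers on this, here is a minimal Python sketch of the standard Rayleigh diffraction limit, θ ≈ 1.22 λ/D. The two wavelengths are illustrative assumptions rather than instrument specifications: 2 μm stands in for a near-infrared observation and 20 μm for a mid-infrared one.

```python
import math

RAD_TO_ARCSEC = 180 / math.pi * 3600  # radians -> arcseconds

def rayleigh_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Smallest resolvable angle (Rayleigh criterion) for a circular aperture."""
    return 1.22 * wavelength_m / aperture_m * RAD_TO_ARCSEC

JWST_DIAMETER = 6.5  # meters

# Illustrative wavelengths (assumed): near-infrared vs. mid-infrared
for label, wavelength in [("near-IR, 2 um", 2e-6), ("mid-IR, 20 um", 20e-6)]:
    theta = rayleigh_limit_arcsec(wavelength, JWST_DIAMETER)
    print(f"{label}: {theta:.3f} arcsec")
```

With the same mirror, a wavelength ten times longer gives a resolution ten times coarser. That is the NIRCam-versus-MIRI difference in miniature.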

Your telescope’s sensitivity, or how faint of an object it can see, is determined by how much cumulative light you gather. Observing with a telescope that’s twice the diameter of a previous one gives you four times the light-gathering power (and double the resolution), but observing for twice as long only collects twice as many photons, which only improves your signal-to-noise ratio by about 41%. It’s why “bigger is better” is so true when it comes to aperture in astronomy.
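The arithmetic behind these numbers can be checked with a short sketch. It assumes a purely photon-limited (shot-noise-limited) observation, where signal-to-noise grows as the square root of the number of photons collected. Background and detector noise are ignored here.

```python
import math

def light_gathering_ratio(d_new: float, d_old: float) -> float:
    """Collecting area scales with the square of the aperture diameter."""
    return (d_new / d_old) ** 2

def snr_gain(photon_ratio: float) -> float:
    """In the shot-noise limit, SNR scales as the square root of the photon count."""
    return math.sqrt(photon_ratio)

# Doubling the diameter: 4x the photons in the same exposure time
print(light_gathering_ratio(2.0, 1.0), snr_gain(light_gathering_ratio(2.0, 1.0)))
# Doubling the exposure time: 2x the photons, only ~1.41x the SNR
print(2.0, round(snr_gain(2.0), 3))
# JWST (6.5 m) versus Hubble (2.4 m), by diameter alone
print(round(light_gathering_ratio(6.5, 2.4), 2))
```

The last line gives roughly 7.3. That is why a mirror about 2.7 times wider collects over seven times as much light.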

And finally, if you want to observe longer wavelengths, you need a cooler telescope. Infrared light is what the cells in our body perceive as heat, so if you want to see farther into the infrared portion of the spectrum, you have to cool your telescope down below the temperature threshold that produces infrared radiation in that range. This is why the Hubble Space Telescope is covered in a reflective coating, but the JWST, with a 5-layer sunshield, a location 1.5 million km from the Earth, and an on-board cooler for its mid-infrared instrument, can observe at wavelengths about 15 times longer than Hubble’s limits.
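One way to see the link between temperature and wavelength is Wien’s displacement law, λ_peak = b/T, with b ≈ 2.898 × 10⁻³ m·K. The sketch below is rough. Wien’s law only gives where thermal emission *peaks*, and in practice a telescope must be considerably colder than this peak temperature to keep its own glow from swamping faint signals at that wavelength. The 28 μm figure is used as a rough stand-in for the long-wavelength end of JWST’s coverage.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K

def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometers) where a blackbody at this temperature emits most strongly."""
    return WIEN_B / temperature_k * 1e6

def temperature_for_peak_k(wavelength_um: float) -> float:
    """Blackbody temperature (kelvin) whose emission peaks at the given wavelength."""
    return WIEN_B / (wavelength_um * 1e-6)

print(f"{peak_wavelength_um(300):.1f} um")   # a room-temperature telescope
print(f"{temperature_for_peak_k(28):.0f} K")  # emission peaking near 28 um
```

A room-temperature structure glows most strongly near 10 μm, right in the mid-infrared. That is why a warm telescope cannot work there.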

The limits of Earth-based telescope arrays

Building a single telescope, whether you’re on Earth or in space, becomes more difficult the larger you want to go. The largest optical/infrared telescopes on Earth are in the 8-12 meter class, with new telescopes of 25-39 meters currently under construction or in the planning phase. In space, the JWST is the largest optical/infrared telescope of all time, with a segmented primary mirror 6.5 meters in diameter: some 270% the diameter of Hubble’s monolithic 2.4 meter mirror. Building a telescope’s primary mirror to arbitrarily large sizes is not only a technical challenge; it’s prohibitively expensive in many cases.

That’s why, on Earth, one of the tools we leverage is to build telescope arrays instead. In optical/infrared wavelengths, observatories like the twin Keck telescopes atop Mauna Kea or the Large Binocular Telescope Observatory in Arizona use the technique of long-baseline interferometry to go beyond the limits of a single telescope. If you network multiple telescopes together into an array, you don’t simply get multiple independent images to average out. Instead, you get a single image with the light-gathering power of all of the telescopes’ collecting areas added together, but with a resolution set by the number of wavelengths that fit across the distance between the telescopes, rather than across each telescope’s primary mirror.

The Large Binocular Telescope Observatory, for instance, is two 8 meter diameter telescopes that are mounted together on a single telescope mount, behaving as though it has a resolution of a ~23 meter telescope. As a result, it can resolve features that no single 8 meter telescope can on its own, including the above image of erupting volcanos on Jupiter’s moon Io, as seen while it experiences an eclipse from one of Jupiter’s other Galilean moons.
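A rough way to quantify that gain is to apply the same 1.22 λ/D rule to one 8 meter mirror and to the ~23 meter effective size quoted above. This is a simplification, because an interferometer’s response depends on its exact geometry. The 5 μm observing wavelength is an assumption chosen for illustration.

```python
import math

RAD_TO_MAS = 180 / math.pi * 3600 * 1000  # radians -> milliarcseconds

def diffraction_limit_mas(wavelength_m: float, size_m: float) -> float:
    """Rayleigh-style resolving limit, theta ~ 1.22 * lambda / size, in milliarcseconds."""
    return 1.22 * wavelength_m / size_m * RAD_TO_MAS

WAVELENGTH = 5e-6  # assumed infrared observing wavelength (5 micrometers)

single_mirror = diffraction_limit_mas(WAVELENGTH, 8.0)  # one 8 m mirror alone
combined = diffraction_limit_mas(WAVELENGTH, 23.0)      # ~23 m effective size
print(f"one 8 m mirror: {single_mirror:.0f} mas")
print(f"~23 m equivalent: {combined:.0f} mas")
print(f"improvement: {single_mirror / combined:.1f}x")
```

The improvement factor is simply the ratio of effective size to mirror size, about 2.9 here, whatever wavelength you pick.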

The key to unlocking this power is that you must combine the observations from the different telescopes so that the light you’re observing with each telescope corresponds to light that was emitted from the source at the exact same instant. This means you need to account for:

- the varying distances between the source and each of the telescopes in your array,

- the different light-travel-times that correspond to those three-dimensional distances,

- and any delays arising from either intervening matter or curved space along the light-path of travel,

to ensure that you’re observing that particular object at the same instant across all of your observatories.
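The largest of these corrections is usually the purely geometric one. For a source so distant that its wavefronts arrive as flat planes, the arrival-time difference between two telescopes is the baseline projected onto the source direction, divided by the speed of light. The sketch below makes three assumptions. The geometry is hypothetical, Earth’s rotation is ignored, and the intervening-matter and curved-space delays from the list above are left out. Real correlators model those separately.

```python
import math
from typing import Sequence

C = 299_792_458.0  # speed of light, m/s

def geometric_delay_s(baseline_m: Sequence[float], source_dir: Sequence[float]) -> float:
    """Arrival-time difference of a plane wavefront at two telescopes.

    baseline_m: vector from telescope A to telescope B, in meters.
    source_dir: vector pointing toward the source (normalized below).
    A positive result means the wavefront reaches B that many seconds before A.
    """
    norm = math.sqrt(sum(s * s for s in source_dir))
    unit = [s / norm for s in source_dir]
    # Projecting the baseline onto the source direction gives the extra path to A
    projection = sum(b * u for b, u in zip(baseline_m, unit))
    return projection / C

# Hypothetical example: Earth-diameter baseline, source 60 degrees from the baseline axis
earth_baseline = (1.2742e7, 0.0, 0.0)
source = (math.cos(math.radians(60)), math.sin(math.radians(60)), 0.0)
delay = geometric_delay_s(earth_baseline, source)
print(f"delay: {delay * 1e3:.2f} ms")
```

For this geometry the delay is roughly 21 milliseconds. As the Earth turns, the source direction changes relative to the baseline, so this delay has to be recomputed continuously throughout an observation.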

If you can do this, you can perform what’s known as aperture synthesis, which gives you images that have the light-gathering power of the telescopes’ collecting area combined, but the resolution of the distance between the telescopes.

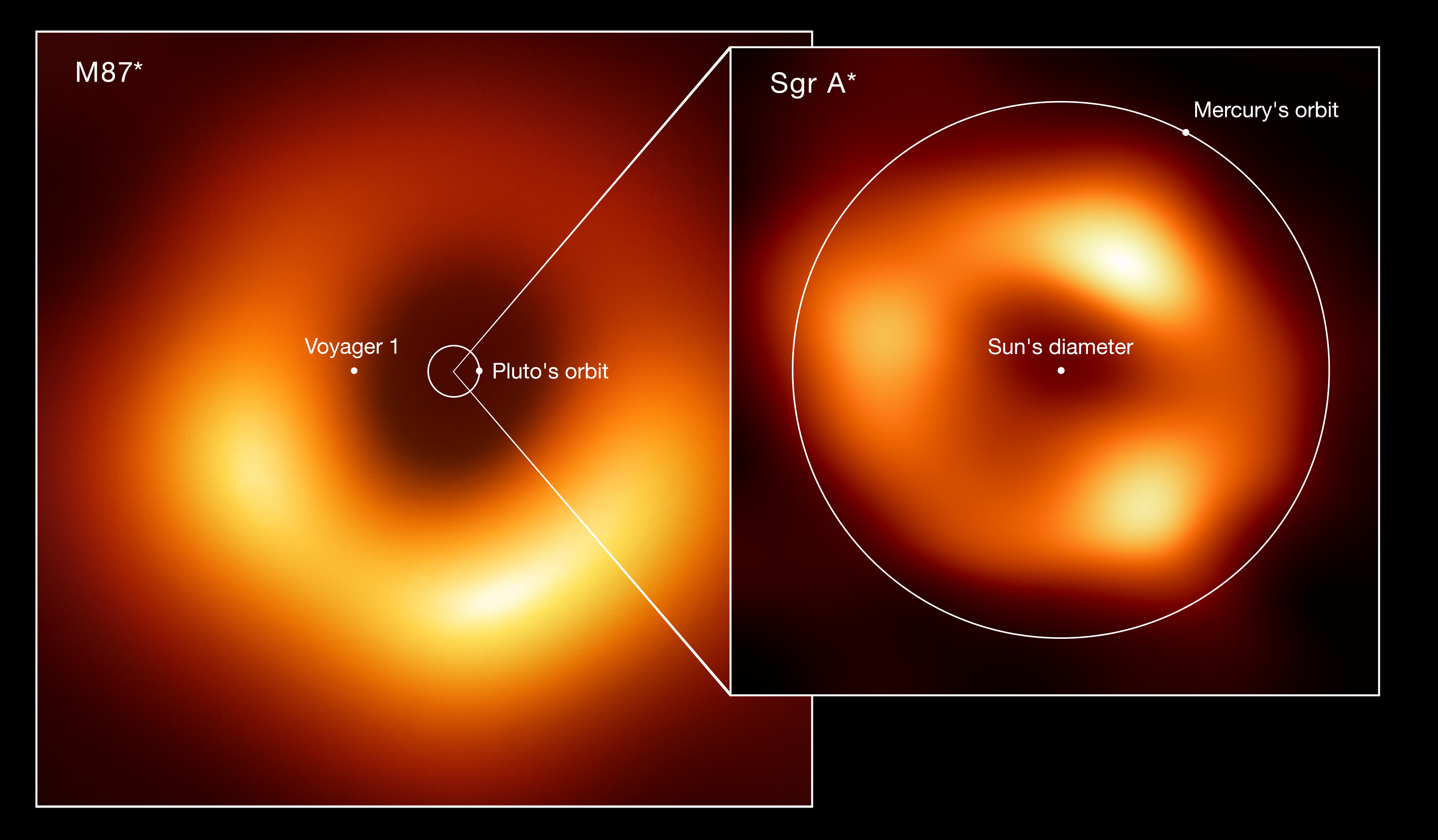

This was leveraged most successfully by the Event Horizon Telescope, which imaged a number of radio sources — including the black holes at the centers of the Milky Way and Messier 87 galaxies — with the equivalent resolution of a telescope the size of planet Earth. Some of the keys to making this happen were:

- atomic clocks at each telescope’s location, enabling us to keep time to the attosecond (10^-18 s) level,

- observing the source, across all telescopes, at the exact same frequency/wavelength,

- correcting properly for any sources of noise that differ across telescopes,

- and extracting the real interference effects of the light arriving at the different telescopes while ignoring the errors/noise that arise in the data.

These are the basics for performing Very-Long-Baseline Interferometry (VLBI), pioneered by Roger Jennison way back in 1958. Because radio waves are so long and the speed of light is finite, attosecond timing precision is more than sufficient to reconstruct these ultra-high-resolution images, even across a baseline the size of the Earth. If we can upgrade from atomic to nuclear clocks, a timing improvement of a few orders of magnitude could allow this technique to be applied not only to radio waves, but also to light with wavelengths ~100 or even ~1000 times shorter.
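Why do shorter wavelengths demand better clocks? A useful rule of thumb is that any timing error must be small compared with one oscillation period of the light, λ/c, or the signals from different telescopes lose coherence. The sketch below assumes a hypothetical 1 femtosecond clock error. It compares 1.3 mm, the wavelength of the Event Horizon Telescope’s black hole images, with light 1000 times shorter and with visible light. Real requirements also depend on integration time and calibration.

```python
C = 299_792_458.0  # speed of light, m/s

def wave_period_s(wavelength_m: float) -> float:
    """Duration of one full oscillation of light at this wavelength."""
    return wavelength_m / C

def phase_error_deg(timing_error_s: float, wavelength_m: float) -> float:
    """Phase slip (degrees) caused by a clock error, relative to one wave cycle."""
    return 360.0 * timing_error_s / wave_period_s(wavelength_m)

TIMING_ERROR = 1e-15  # assumed 1 femtosecond clock error, for illustration

# 1.3 mm (EHT), then 1000x shorter (near-infrared), then visible light
for wavelength in (1.3e-3, 1.3e-6, 500e-9):
    print(f"{wavelength:.1e} m: period {wave_period_s(wavelength):.2e} s, "
          f"phase error {phase_error_deg(TIMING_ERROR, wavelength):.3g} deg")
```

The same clock error that is negligible at millimeter wavelengths becomes a large fraction of a cycle in the infrared, and more than half a cycle for visible light.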

What we’d gain from an array in space

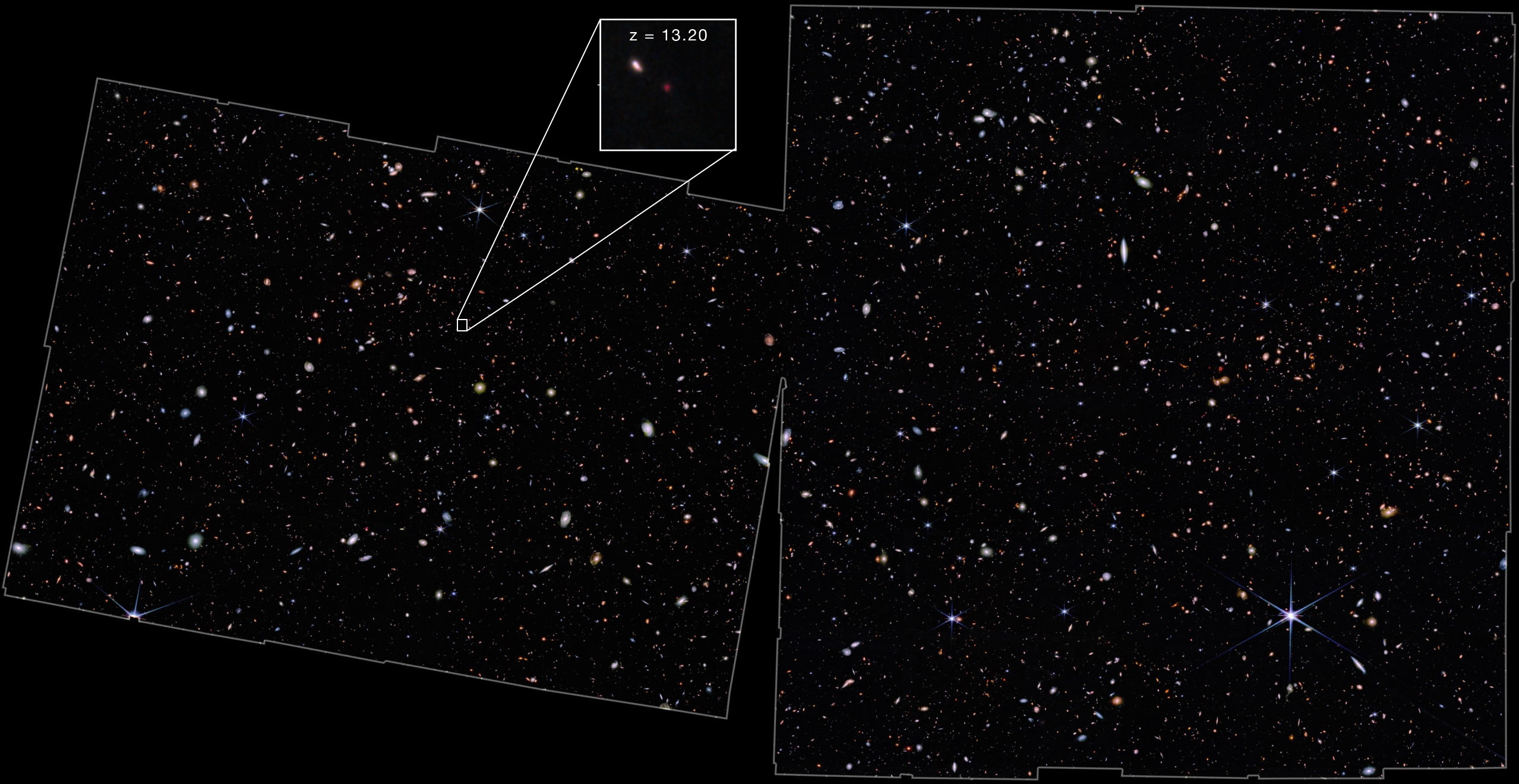

If you’re talking about an array of telescopes that can be phase-locked together, so that they can be aperture-synthesized to behave as a single telescope across the baseline distances and arrival-time differences being considered, that’s the ultimate dream. The Earth has a diameter of about 12,700 kilometers, and the Event Horizon Telescope can use that baseline to resolve about 3-4 black holes in the Universe. If you were to put an array of telescopes throughout:

- Earth’s orbit, with a span of 300 million kilometers, you could measure the event horizons of tens of thousands of supermassive black holes.

- Jupiter’s orbit, with a span of 1.5 billion kilometers, you could measure the event horizons of black holes, like Cygnus X-1, even within our own galaxy.

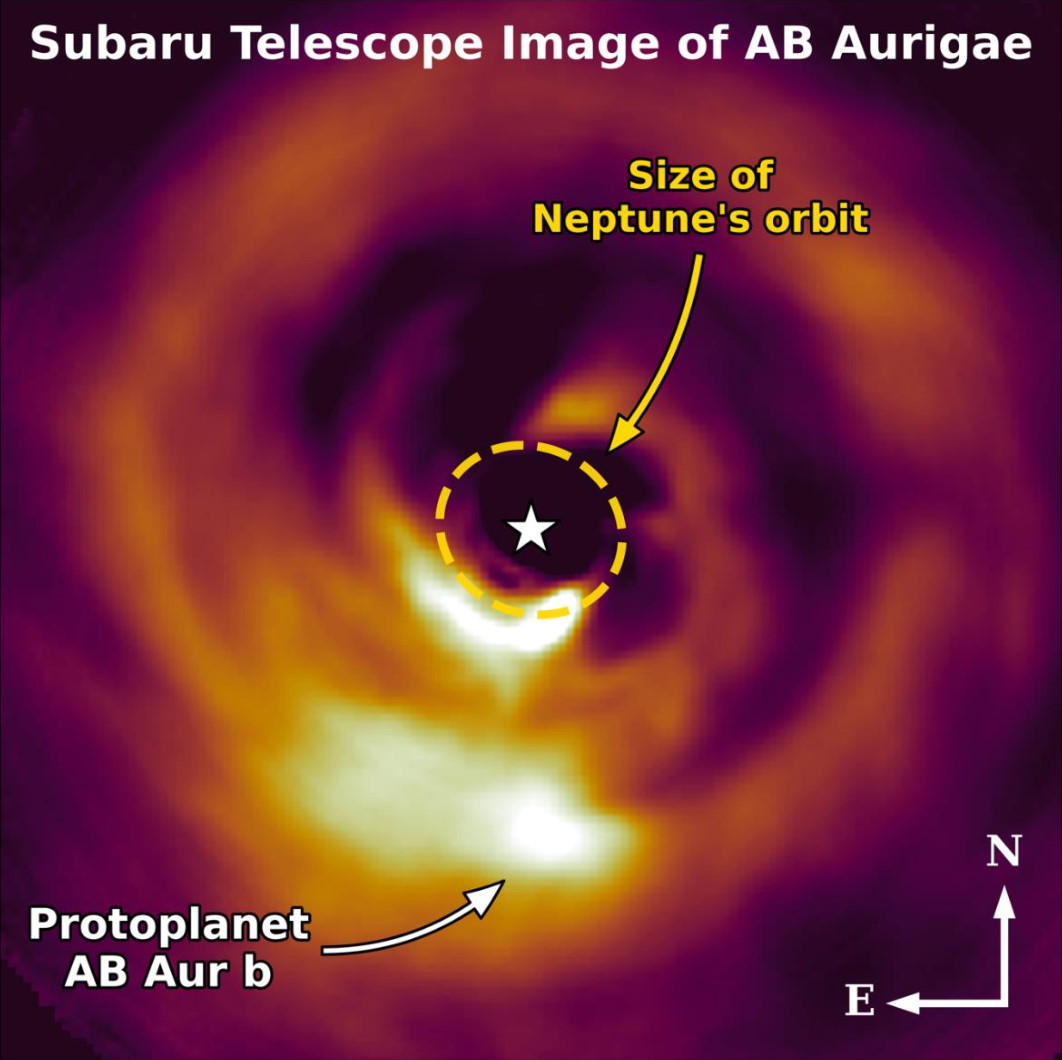

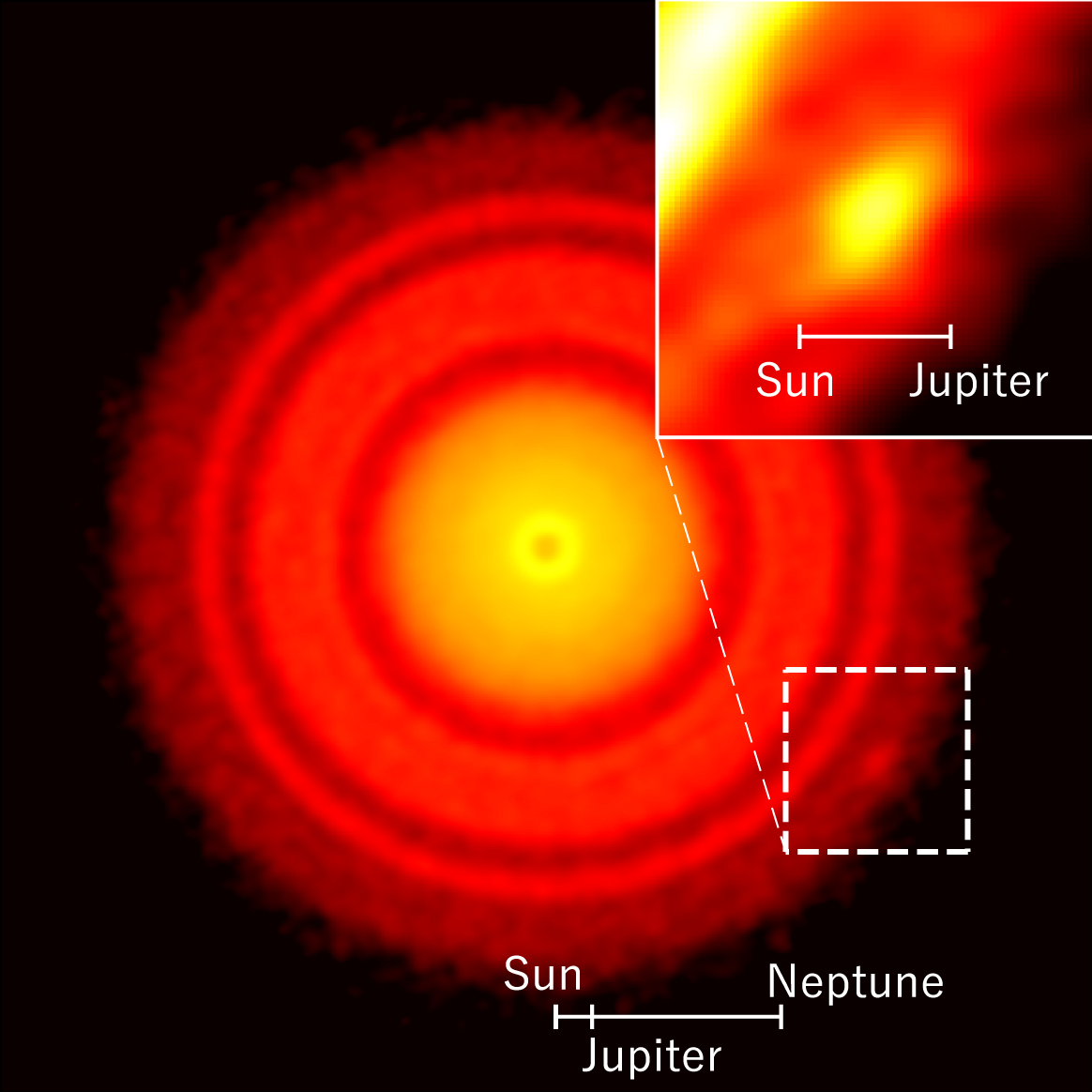

- Neptune’s orbit, with a span of 9 billion kilometers, you could resolve Earth-sized planets forming within protoplanetary disks around newborn stars.

You’re talking about increasing the resolution of observatories like ALMA and the Event Horizon Telescope by a factor of tens of thousands for an array spanning Earth’s orbit, and by a factor of nearly a million for an array spanning Neptune’s orbit.
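The size of these gains can be checked with a short sketch. It uses the crude resolution estimate θ ~ λ/B, taking B as each array span from the list above and λ as 1.3 mm, the Event Horizon Telescope’s observing wavelength. It also assumes a mean Earth diameter of 12,742 km. Everything here is order-of-magnitude only.

```python
import math

RAD_TO_UAS = 180 / math.pi * 3600 * 1e6  # radians -> microarcseconds
WAVELENGTH = 1.3e-3                      # meters; the EHT's observing wavelength

# Approximate maximum baselines (array spans), in meters
spans_m = {
    "Earth diameter": 1.2742e7,
    "Earth's orbit": 3.0e11,
    "Jupiter's orbit": 1.5e12,
    "Neptune's orbit": 9.0e12,
}

earth = spans_m["Earth diameter"]
for name, span in spans_m.items():
    theta_uas = WAVELENGTH / span * RAD_TO_UAS  # resolution ~ lambda / baseline
    print(f"{name}: {theta_uas:.2e} microarcsec, {span / earth:,.0f}x the EHT baseline")
```

The Earth-diameter row lands near 20 microarcseconds, consistent with the scale of the EHT’s images. The ratios, roughly 24,000 for Earth’s orbit and roughly 700,000 for Neptune’s, are the resolution gains over an Earth-sized array at the same wavelength.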

This won’t improve your light-gathering power, however. You could still only see objects bright enough to be detected with the combined light-collecting area of the telescopes in the array. You’d only be able to see active black holes, for example, not the majority of them that are quiet at the moment. The level of detail would be extraordinary, but the faintest objects you could see would still be limited by the summed collecting area of the individual telescopes.

However, there’s something worth considering that is often overlooked. JWST is such a superior observatory because of all of the novel types of data it can bring in. Bigger is better, colder is better, in space is better, etc.

But most JWST proposals, like most Hubble Space Telescope proposals, get rejected; there are simply too many people with good ideas applying for observing time on too few high-quality observatories. If we had more of them, they wouldn’t all have to observe the same objects together all the time; they could simply observe whatever people wanted them to look at, obtaining all sorts of high-quality data. Bigger is better, sure, but more is better, too. And with more telescopes, we could observe so much more and learn so much more about all sorts of aspects of the Universe. It’s part of why NASA doesn’t only do large flagship missions, but demands a balanced portfolio of explorer-class, mid-sized, and large/flagship missions.

What we’d hope to gain, but the technology isn’t there (yet)

Unfortunately, we can’t really hope to perform the kind of aperture synthesis we’d like to over large distances for wavelengths shorter than a few millimeters. For ultraviolet, visible, and infrared light, we need extremely precise, unchanging surfaces and distances, stable to within just a few nanometers. For arrays of observatories orbiting in space, the best precision that’s presently technologically feasible falls short of that by a factor of many thousands.

That means that we can only get Event Horizon Telescope-like resolutions in the radio, millimeter, and many sub-millimeter wavelengths. To get down to micron-level precisions, which is where the near-infrared and mid-infrared lie, or even into the hundreds-of-nanometers range, which is where the visible light wavelengths are, we’d have to vastly increase the level of precision timing we can achieve.

There is the possibility for this, however, if we can advance far enough. Right now, the best timekeeping method we have is through atomic clocks, which rely on electron transitions within atoms, and keep time to about 1 second in every 30 billion years.

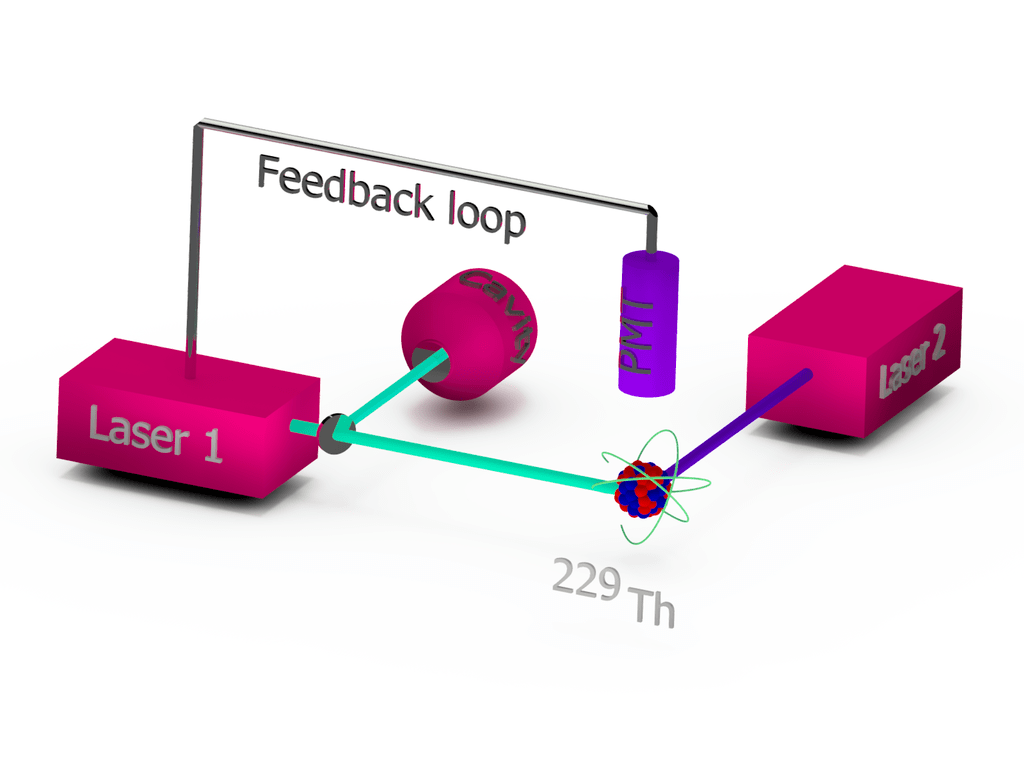

However, if we can instead rely on nuclear transitions within the atomic nucleus, because we’re talking about transitions that are thousands of times more precise and light-crossing distances that are 100,000 times smaller than for an atom, we could hope to someday develop nuclear clocks that are accurate to better than 1 second every 1 trillion years. The best progress toward this has been made using an excited state of the thorium-229 nucleus, where the hyperfine-structure shift has already been observed.
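The clock figures above can be turned into a fractional error, which is what actually limits how well two distant clocks stay in step. The short sketch below assumes a 365.25-day year and takes the quoted drift rates at face value.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, in seconds

def fractional_uncertainty(years_to_drift_one_second: float) -> float:
    """Fractional timing error of a clock that drifts by 1 s over the given span."""
    return 1.0 / (years_to_drift_one_second * SECONDS_PER_YEAR)

atomic = fractional_uncertainty(30e9)   # ~1 s per 30 billion years
nuclear = fractional_uncertainty(1e12)  # ~1 s per 1 trillion years (hoped for)
print(f"atomic:  {atomic:.1e}")
print(f"nuclear: {nuclear:.1e}")
print(f"improvement: at least {atomic / nuclear:.0f}x")
```

The atomic figure works out to about 10⁻¹⁸, a drift of roughly one attosecond per second of elapsed time. The nuclear figure is quoted as “better than” one second per trillion years, so the factor printed here is a floor, not an estimate.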

Developing the technology needed for optical or infrared very-long-baseline interferometry, or for extending today’s radio interferometry to even greater distances, would bring a remarkable set of advances along with it. Financial transactions could occur with ~picosecond accuracy. We could achieve global positioning accuracies to sub-millimeter precision. We could measure changes in Earth’s gravitational field caused by water-table levels shifting by less than a centimeter. And, perhaps most excitingly, rare forms of dark matter or time-varying fundamental constants could potentially be discovered.
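Clock-based gravity measurements work because clocks deeper in a gravitational potential tick slightly slower. A rough sketch uses the weak-field approximation Δf/f ≈ gΔh/c² to relate clock precision to an equivalent height difference. It assumes a uniform surface gravity of 9.81 m/s², whereas real geodesy works with the full, non-uniform gravitational potential.

```python
G_SURFACE = 9.81      # m/s^2, assumed uniform surface gravity
C = 299_792_458.0     # speed of light, m/s

def fractional_shift(height_change_m: float) -> float:
    """Weak-field gravitational frequency shift between clocks separated in height."""
    return G_SURFACE * height_change_m / C**2

def resolvable_height_m(clock_fractional_uncertainty: float) -> float:
    """Smallest height difference a clock comparison at this precision could sense."""
    return clock_fractional_uncertainty * C**2 / G_SURFACE

print(f"1 cm height change -> {fractional_shift(0.01):.2e} fractional shift")
print(f"1e-18 clock -> {resolvable_height_m(1e-18) * 100:.1f} cm")
```

A clock good to about one part in 10¹⁸ is therefore sensitive to centimeter-scale differences in gravitational potential. Clocks better than that push the sensitivity below a centimeter.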

There’s a lot to be done if we want to directly image an Earth-sized exoplanet with very-long baseline, optical/infrared interferometry, but there is a technological path toward getting there. If we dare to go down it, the rewards will extend far beyond what, in hindsight, seems a rather meager goal that we’ve set for ourselves.

Send in your Ask Ethan questions to startswithabang at gmail dot com!