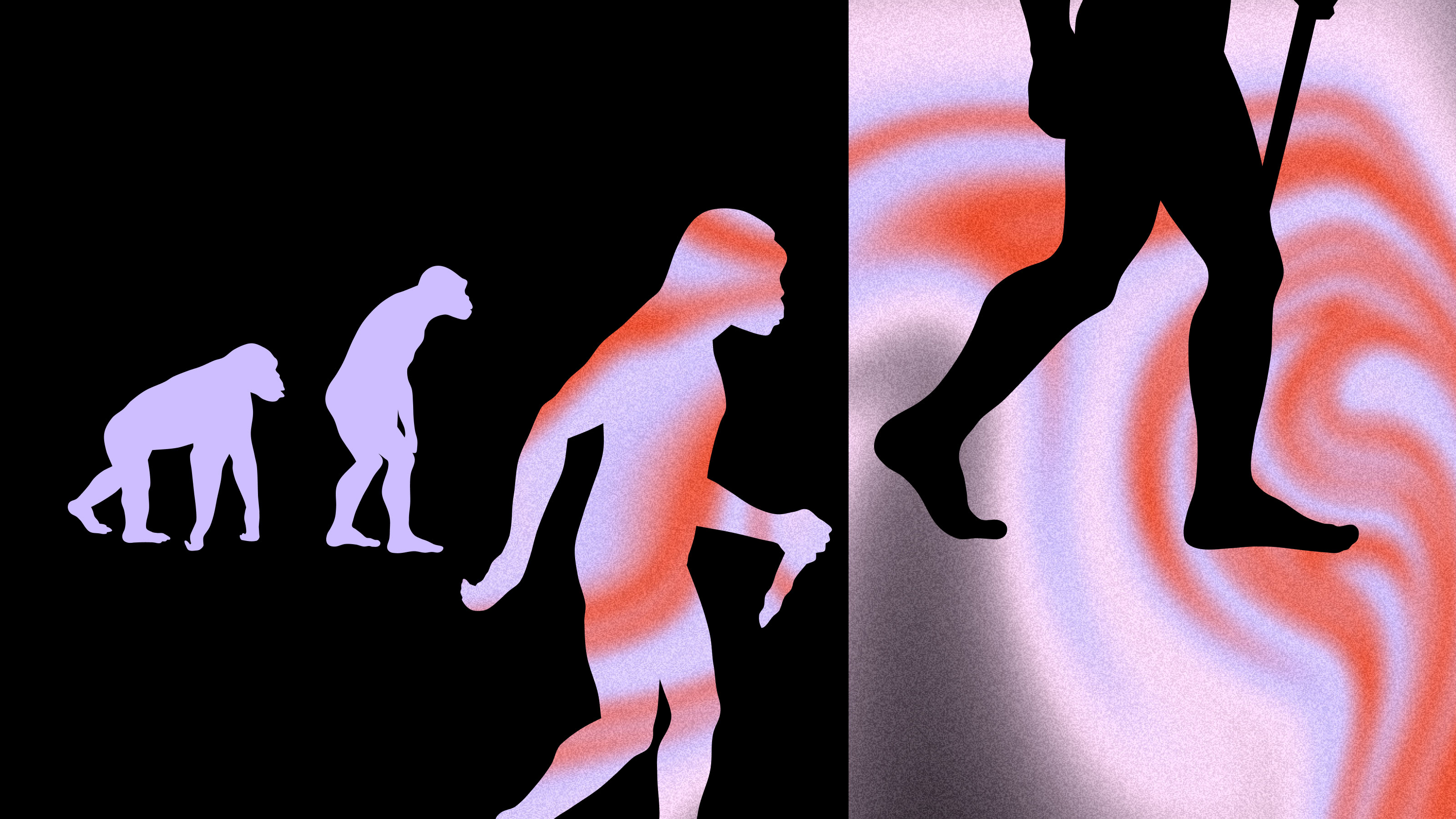

- The brain’s job is not to pass a math test, get a promotion at work, or win a Nobel Prize. Its only job is to make sure we survive today and live to see tomorrow. That system worked well for us when humanity’s main concerns were hunting for food and fighting for survival. In the modern day, however, it serves us less well when we have to reason through complex problems like genetically modified foods and climate change.

- We have to accept this about our minds and better understand how our brains work so that we can avoid these pitfalls in the future. In the coming years, better thinking tools, including modern technology, will help us identify more weaknesses in our rationality and thought patterns. Once we flag those weaknesses, we can learn workarounds that make us more rational decision-makers.

- So, how do we start thinking more rationally in the 21st century? Experts suggest that one way is to think from an outsider’s perspective. To adapt our fight-or-flight brains to the modern day, we have to step outside our emotions, reframe our thoughts, and see things from a new perspective.

DAVID ROPEIK: The brain is only the organ with which we think we think. Its job is not to win Nobel Prizes or to pass math tests. Its job is to get us to tomorrow. It's a survival machine, and it plays a lot of tricks with the facts in order to get us to tomorrow. That worked pretty well when the risks were lions and tigers and bears and the dark, oh my. It's not as good now, when we need to reason and use the facts more to deal with the complicated risks we face in a modern age. Climate change, genetically modified food, and unsustainable living on the planet take a lot more thinking. We'd better accept that and understand it so that we can apply that understanding to avoid the pitfalls of our subjective way of perceiving the world.

DANIEL DENNETT: It's quite a robust thinking system that we've got between our ears, but what's going to happen, and has been happening for several millennia now, is we're gonna develop more and better thinking tools, and we're going to identify more weaknesses in our rationality. A weakness identified is at least something that can be avoided to some degree. We can learn workarounds. We can recognize that we're suckers for certain sorts of bad ideas and, alerted to that, we can flag them when they come up.

DAN ARIELY: There's one way to be rational. There are many ways to be irrational. We could be irrational by getting confused, not taking actions, being myopic, vindictive, emotional, you name it. There are lots of ways to be wrong, and because of that, there's not one way to fix it, but one interesting way to try to inject some rationality is to think from an outsider's perspective. Here's what happens: when you think about your own life, you're trapped within your own perspective, you're trapped within your own emotions and feelings and so on, but if you give advice to somebody else, all of a sudden you're not trapped within that emotional mishmash of complexity, and you can give advice that is more forward-looking and not so specific to the emotions. One idea is to basically ask people for advice. So if you're falling in love with some person, good advice is to go to your mother and say, "Mother, what do you think about the long-term compatibility of that person?" You're infatuated, right? When you're infatuated, you're not able to see things three months down the road. You're saying, "I'm infatuated. I'll stay infatuated forever; this will never go away." Your mother, being an outsider, is not infatuated, and she could probably look at things like long-term compatibility and so on.

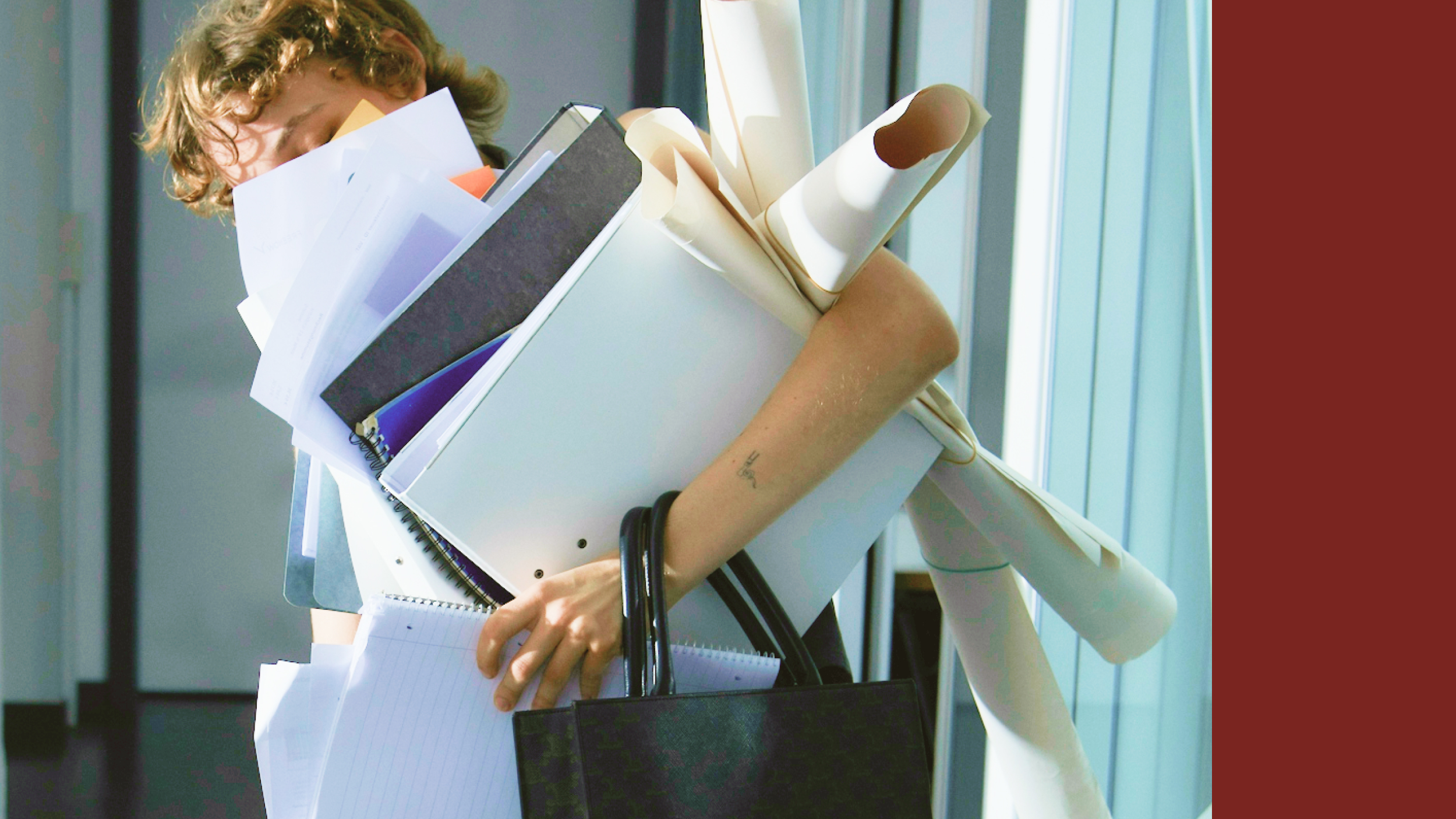

JULIA GALEF: We stick with a business plan or a career or a relationship long after it's become quite clear that it's not doing anything for us or that it's actively destructive or self-destructive. We have an irrational commitment to whatever we have been doing for a while, 'cause we don't like the idea of our past investment having gone to waste or because it's become part of our identity.

ROPEIK: Our brain is hardwired, and the chemistry of the brain guarantees that we feel first and think second. That's true initially, when we encounter information, but on an ongoing basis, between the facts and the feelings in our brain, the feelings carry more weight. They feel wonderful, but they might be wrong. Recognizing that they might be wrong, here's what you can do: take more time. If the brain jumps to conclusions out of emotion first, just assume that your first decision might not be the most informed one. Don't leap to conclusions. Take more time: half an hour, an hour, a day, two. Think about it, cogitate on it, get more information. Get more information, and not just from sources who already tell you what you know and believe, because that's gonna reinforce what you know, which will feel great but may not add to your knowledge. Taking more time and getting more information allows the facts side of this dual system to play more of a role.

GALEF: I'd like to introduce you to a particularly powerful paradigm for thinking, called Bayes' rule. Bayes' rule is provably the best way to think about evidence. Many people, certainly including myself, have this default way of approaching the world, in which we have our pre-existing beliefs, and we go through the world, and we pretty much stick to our beliefs, unless we encounter evidence that's so overwhelmingly inconsistent with our beliefs about the world that it forces us to change our mind and, you know, adopt a new theory of how the world works, and sometimes even then, we don't do it. The implicit question that I'm asking myself, that people ask themselves as they go through the world, is, "When I see new evidence, can this be explained with my theory?" And if yes, then we stop there. But after you've got some familiarity with Bayes' rule, instead of stopping after asking yourself, "Can this evidence be explained with my own pet theory?", you also ask, "Would it be explained better with some other theory, or maybe just as well with some other theory? Is this actually evidence for my theory?" Bayes' rule is essentially a formalization of the best way to reason about evidence, the best way to change your mind or update your confidence in your beliefs when you encounter new information or have new experiences.
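As a rough illustration (not part of the original talk), Galef's question "Would it be explained just as well with some other theory?" amounts to comparing P(E | H), the probability of the evidence E if your theory H is true, with P(E | not H), its probability if H is false. The minimal Python sketch below assumes only two rival hypotheses, H and not-H; the function name `bayes_update` and the example numbers are hypothetical.

```python
def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Posterior P(H | E) by Bayes' rule, assuming only two hypotheses: H and not-H.

    prior           -- P(H), confidence in the theory before seeing the evidence
    p_e_given_h     -- P(E | H), how well the theory explains the evidence
    p_e_given_not_h -- P(E | not H), how well the alternative explains it
    """
    # Total probability of the evidence, summed over both hypotheses.
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    if p_e == 0:
        raise ValueError("evidence has zero probability under both hypotheses")
    # Bayes' rule: P(H | E) = P(E | H) * P(H) / P(E)
    return p_e_given_h * prior / p_e


# Evidence your theory explains well, but the alternative explains equally well:
print(bayes_update(0.5, 0.8, 0.8))  # 0.5, so no reason to update

# Evidence your theory explains much better than the alternative:
print(bayes_update(0.5, 0.8, 0.2))  # about 0.8, so confidence rises
```

The first case captures Galef's point: evidence that fits your theory is not evidence *for* it unless your theory explains it better than the alternatives do.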

DENNETT: I think that process of self-knowledge and self-purification will continue to develop. So it's not so much, although it might include, the development of actual software technology to help us think. That's part of it, but also just the self-knowledge that alerts us to foibles, blind spots in our own thinking.

PAUL BLOOM: My fellow psychologists, philosophers, and neuroscientists often argue that we're prisoners of the emotions, that we're fundamentally and profoundly irrational, and that reason plays very little role in our everyday lives. I honestly don't doubt that that's right in the short term, but I think in the long run, over time, reason and rationality tend to win out. I look at the world we're in now, and for all of its many flaws and problems, I see signs of moral accomplishment all over the place. We have a broader moral circle. There are many explanations for these changes, but I think one key component has been the exercise of reason, and I'm optimistic we'll continue this in the future.