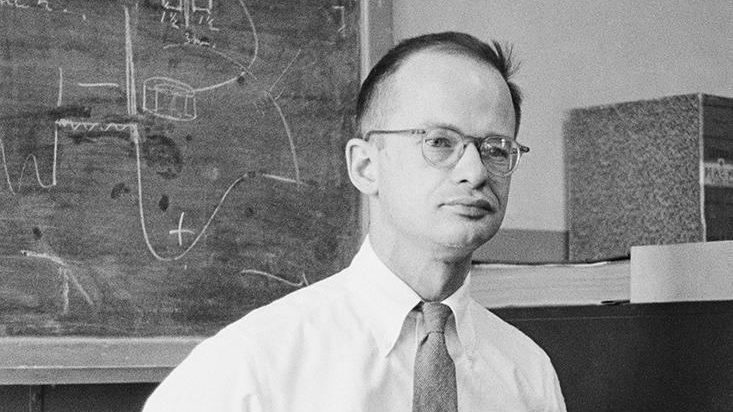

For years, the cloud was expected to become the dominant model for applications, overtaking traditional data centers, but Jim Whitehurst, CEO of Red Hat, explains that this just isn’t so. Instead, the cloud has become one part of a larger system that comprises private data centers as well as public and private clouds. Much of this evolution comes down to how applications are developed and run: public clouds make it easy to build, scale, and tear down applications, but private data centers are cheaper to run once an application’s resource needs are predictable. As a result, we’re seeing a convergence, with applications built to live across all of these environments. “It’s a continuum,” he says, “and people are in different places on that continuum even with different applications in the same portfolio of applications in the same enterprise IT shop.” Whitehurst has written his first book, titled *The Open Organization: Igniting Passion and Performance*.

Jim Whitehurst: Cloud computing is developing in a way I think very few of us expected. You know, when the concepts of cloud computing first developed, we saw them as very different from the traditional data center. So it’s like Amazon and Google and Rackspace, you know, building these remote data centers where you could buy elastic compute, et cetera, et cetera. And then you have the traditional data center. And so there’s been an ongoing dialogue for the last several years: Well, when are people going to move to the cloud? Is that going to be the predominant model? What I think we’re actually finding now is that those models are converging. There’s going to be no question of whether this is a cloud application or something that’s in a traditional data center. And there are a lot of exciting things happening in how applications are being built and deployed around containers and elasticity on premises. And you talk about a lot of those things, but fundamentally what we’re seeing is a hybrid model developing. The largest companies that I talk to, so very, very large companies, will typically say, "I like public cloud for development and test, or for applications where I don’t know what their initial demand is going to be, so I can let them burst out for a while, and they may get small again and I may want to tear them down." And they like public cloud for that because it’s easy to pull up new compute, network, and storage power; it’s easy to scale; it’s easy to pull it down.

But what I also hear from those same large enterprises is: "Wow, once that application’s up and running and I have a sense of how many compute resources it’s going to require, and whether that’s going to vary much, it’s much cheaper to run it in a more traditional data center." So I think the big problem people are struggling with now, with containers and other technologies, is: how do I build an application portfolio that may run in the cloud but may also run in a very traditional data center? And how do you build a structure for that? They have to build applications that can run across those models, but also monitor and manage them and decide when it’s more appropriate to run in a public cloud and when it’s more appropriate to run in a private cloud or on traditional infrastructure. So on the whole bifurcation of cloud versus not, what we’re finding is that it’s all one thing. It’s a continuum, and people are in different places on that continuum even with different applications in the same portfolio of applications in the same enterprise IT shop.