What Separates A Good Scientific Theory From A Bad One?

There are many arguments over what makes a theory beautiful, elegant, or compelling. But in the face of data, predictive power is everything.

When you look at any phenomenon in the Universe, one of the major goals of scientific investigation is to understand its cause. If we see something occur, we want to know what made it happen. Quantitatively, we want to understand which processes were at play, and how they produced an effect of exactly the magnitude we observed. And finally, we want to know what to expect from systems we have not yet observed, and to make predictions about the behavior we're likely to see in novel situations we may encounter in the future. Ideas are a dime a dozen, coming from professional physicists, philosophers, and amateur enthusiasts alike, but most of them make lousy scientific theories. The reason? They simply assume too much and predict too little. There's a science to how this all works.

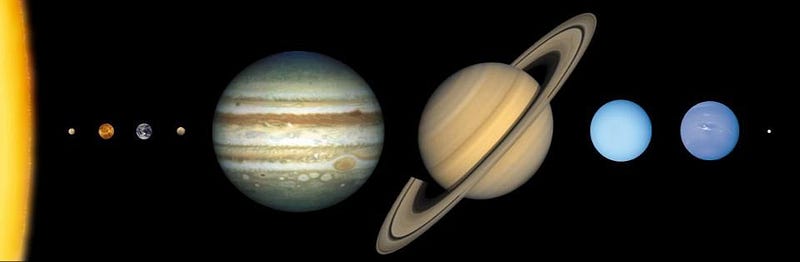

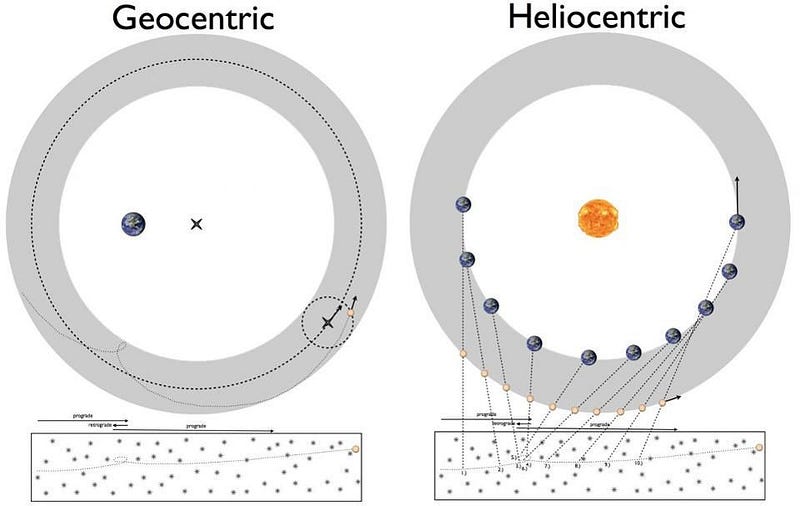

Consider our Solar System. The planets move through the skies, tracing the complex paths that we see from our location on Earth. Throughout history, many explanations have been put forth for their behavior, depending on how you look at the problem. While Ptolemy and Copernicus are perhaps most famous for putting forth the two major models, geocentric and heliocentric, these are only models, not theories. Why's that? Because for every planet you introduce, there's no rule or law governing how it orbits. These models simply state that if you supply the correct parameters (like equants, deferents, and epicycles for Ptolemy), you can describe a planet's motion through the skies.

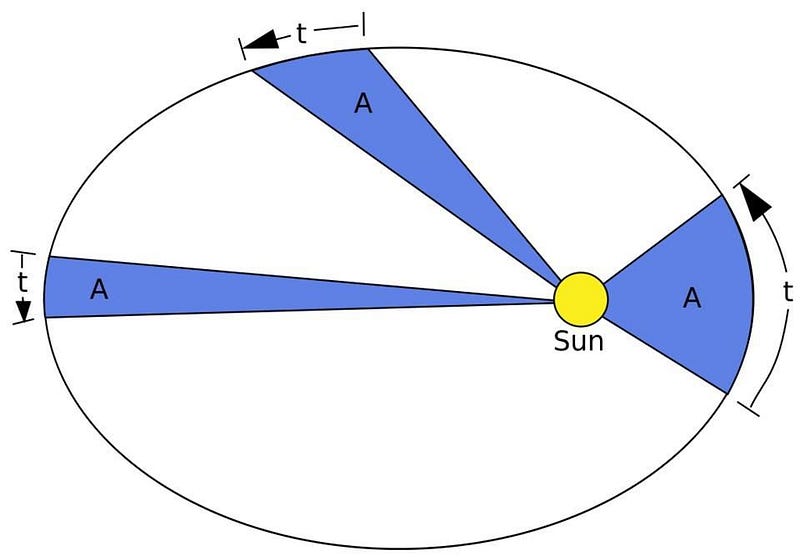

A descriptive model is an important step, but it isn't a full-fledged scientific theory. Principles are a great starting point, but they won't get you all the way to the end. For that, you have to go a step further: you need a rule, a law, and/or a quantitative set of equations that give you the ability to make predictions about things you have not yet measured. Kepler's laws were the first leap in that direction. They didn't simply describe the shapes and paths of the orbits accurately (ellipses, with the Sun at one focus); they began to describe them quantitatively. Kepler's second law relates a planet's orbital speed to its distance from the Sun (a line from the Sun to the planet sweeps out equal areas in equal times), while the third law relates the orbital period to the semimajor axis (the square of the period is proportional to the cube of the semimajor axis). For the first time, a behavior could not only be described through a set of parameters, but predicted.
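To make the predictive power of the third law concrete: if periods are measured in Earth years and distances in astronomical units (AU), Kepler's third law for bodies orbiting the Sun reduces to T² = a³. The minimal Python sketch below assumes that unit choice and neglects the planets' masses relative to the Sun's; the semimajor axes are approximate textbook values, not figures from this article.

```python
def kepler_period_years(semimajor_axis_au: float) -> float:
    """Orbital period in Earth years for a body orbiting the Sun.

    Assumes Kepler's third law in the form T^2 = a^3, with T in years and
    a in AU, which holds when the orbiting body's mass is negligible
    compared with the Sun's.
    """
    return semimajor_axis_au ** 1.5


# Approximate semimajor axes in AU (illustrative values).
for name, a in [("Earth", 1.0), ("Mars", 1.524), ("Jupiter", 5.203)]:
    print(f"{name}: a = {a} AU -> T = {kepler_period_years(a):.2f} yr")
```

Given only a distance, the law predicts that Mars should take about 1.88 years to orbit and Jupiter about 11.9 years, close to their observed periods.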

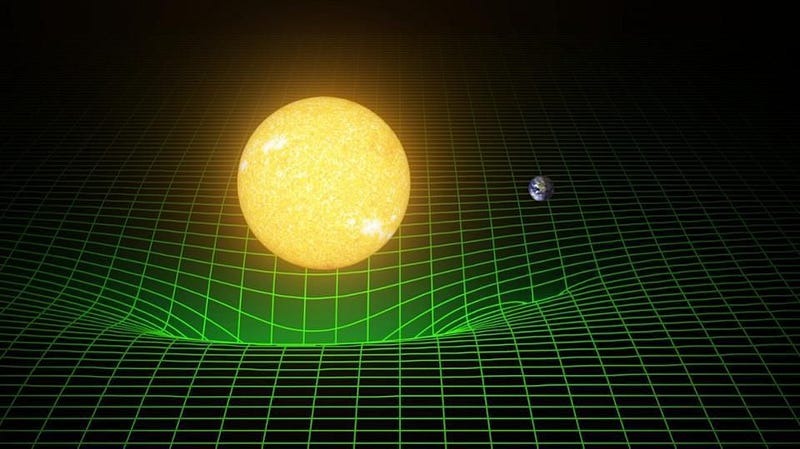

Newton's law of universal gravitation went even further, allowing all of Kepler's laws to be derived from just a single equation: the law of gravity. Suddenly, if you knew the masses, positions, and velocities of the objects you had, you could predict how their motions would change arbitrarily far into the future. It represented a huge leap forward: you could give someone who understood the theory just a few parameters, like masses, positions, and velocities, and they could calculate anything you wanted to know about the future behavior of any mass in the Universe. It was, in short, a good scientific theory.
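As one example of deriving Kepler's laws from Newton's, take a circular orbit: setting the gravitational force GMm/r² equal to the required centripetal force m(2π/T)²r gives T = 2π√(r³/GM), and the same form holds for ellipses with r replaced by the semimajor axis a. The sketch below assumes the standard values of the Sun's gravitational parameter GM and of the astronomical unit, and neglects Earth's mass relative to the Sun's.

```python
import math

G_M_SUN = 1.32712440018e20  # m^3 s^-2, the Sun's gravitational parameter GM
AU = 1.495978707e11         # m, astronomical unit
SECONDS_PER_DAY = 86400


def orbital_period_seconds(semimajor_axis_m: float, gm_central: float) -> float:
    """Period from Newton's form of Kepler's third law, T = 2*pi*sqrt(a^3 / GM).

    Neglects the orbiting body's mass relative to the central mass.
    """
    return 2 * math.pi * math.sqrt(semimajor_axis_m**3 / gm_central)


year_days = orbital_period_seconds(AU, G_M_SUN) / SECONDS_PER_DAY
print(f"Predicted orbital period at 1 AU: {year_days:.2f} days")
```

With no orbit-specific tuning, one equation and two numbers recover a year of about 365.26 days.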

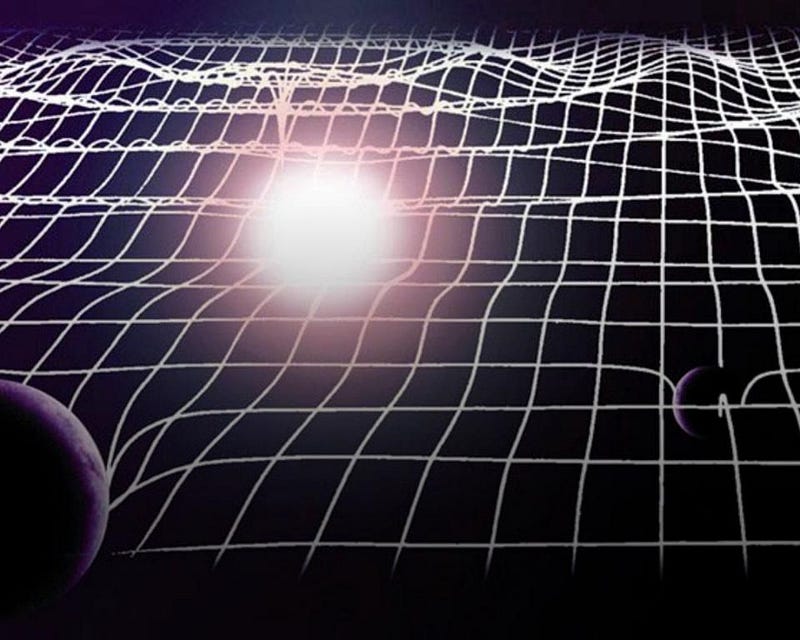

Einstein's General Relativity went one better: given the same set of information you'd give someone using Newton's gravity, it reproduces all the successes of the prior theory, and it also makes a suite of predictions that are observably different. These include:

- the precession of the orbits of Mercury and the other inner planets,

- the time delay of light traveling through a gravitational field,

- the gravitational redshifting of light,

- the bending of starlight due to intervening foreground masses,

- frame-dragging effects,

- and the existence and properties of gravitational waves,

to name just a few. In every single regime where it’s been put to the test, Einstein’s General Relativity has been demonstrated to be successful.
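The first item on that list shows how sharp these predictions are. At leading order, General Relativity adds a perihelion advance per orbit of Δφ = 6πGM / (c²a(1 − e²)), where a is the semimajor axis and e the eccentricity, with no new adjustable parameters. The sketch below assumes approximate orbital elements for Mercury; it reproduces the roughly 43 arcseconds per century that Newtonian gravity could not account for.

```python
import math

G_M_SUN = 1.32712440018e20          # m^3 s^-2, the Sun's gravitational parameter GM
C = 299_792_458.0                   # m/s, speed of light
ARCSEC_PER_RAD = 180 / math.pi * 3600


def gr_perihelion_shift_per_orbit(a_m: float, e: float, gm_central: float = G_M_SUN) -> float:
    """Extra perihelion advance per orbit (radians) from General Relativity.

    Leading-order weak-field result: 6*pi*GM / (c^2 * a * (1 - e^2)).
    """
    return 6 * math.pi * gm_central / (C**2 * a_m * (1 - e**2))


# Approximate orbital elements of Mercury (illustrative values).
a_mercury = 5.791e10    # semimajor axis, m
e_mercury = 0.2056      # eccentricity
period_days = 87.97     # orbital period, days

orbits_per_century = 36525 / period_days  # 100 Julian years of 365.25 days
shift_arcsec = (gr_perihelion_shift_per_orbit(a_mercury, e_mercury)
                * orbits_per_century * ARCSEC_PER_RAD)
print(f"GR perihelion advance of Mercury: {shift_arcsec:.1f} arcsec per century")
```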

The key feature of what made the later theories not only successful, but more successful than their predecessors, can be boiled down to this:

By adding the fewest new free parameters, the greatest number of hitherto unexplained phenomena can be explained and accurately predicted.

This is why Einstein's General Relativity is so successful, and why so many of our greatest theories are accepted the way they are. The great power of a scientific theory is in its ability to make quantitative predictions that can be verified or refuted by experiment or observation.

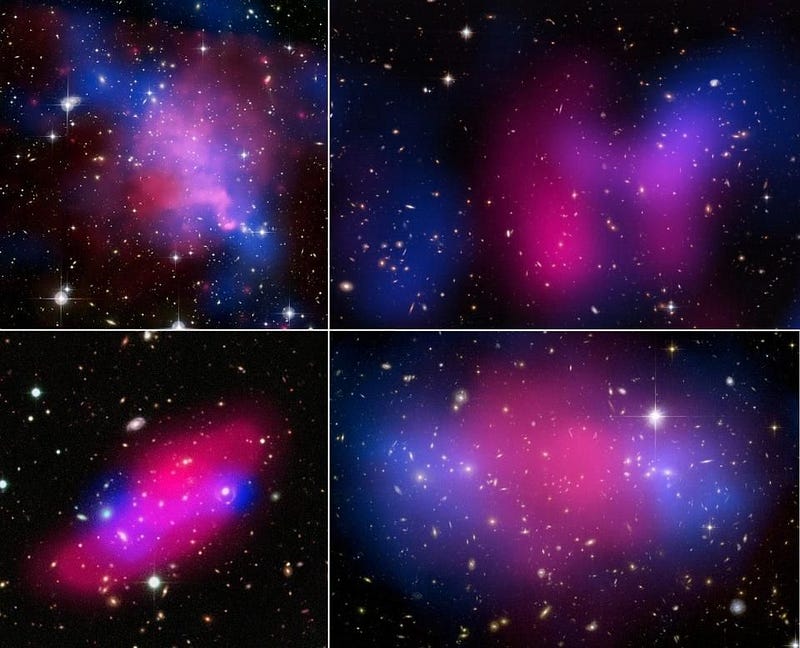

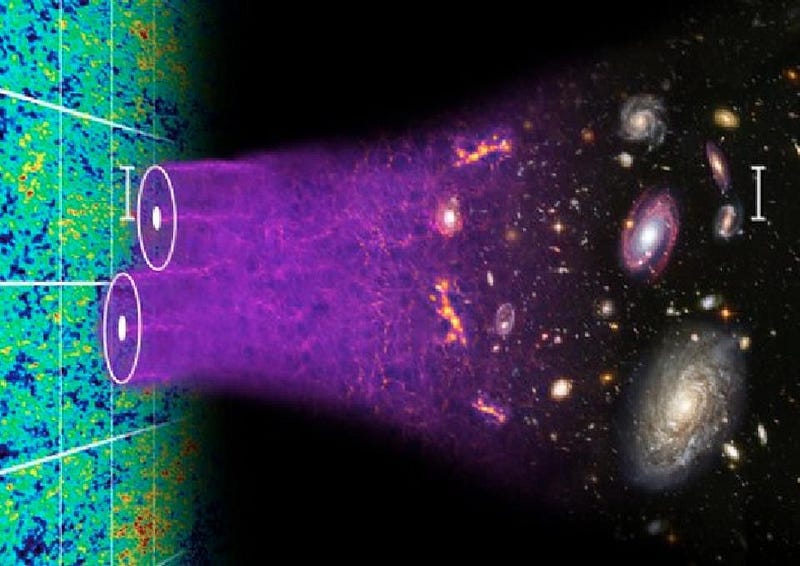

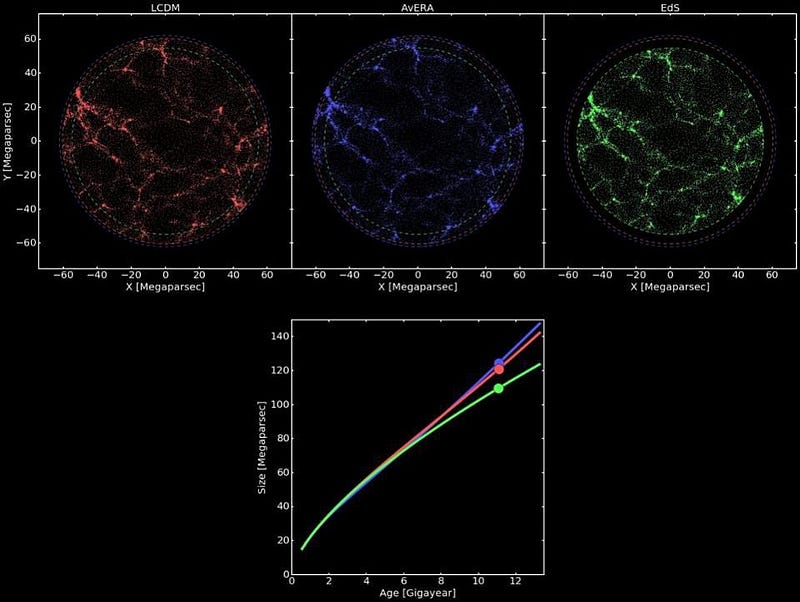

It’s why an idea like dark matter is so powerful. By adding just a single new species of particle — something that’s cold, collisionless, and transparent to light and normal matter — you can explain everything from rotating galaxies to the cosmic web, the fluctuations in the microwave background, galaxy correlations, colliding galaxy clusters, and much, much more. It’s why ideas with a huge number of free parameters that must be tuned to get the right results are less satisfying and less predictively powerful. If we can model dark energy, for instance, with just one constant, why would we invent multi-field models with many parameters that are no more successful?

There are all sorts of scientific-sounding ideas, like cosmopsychism (recently promoted by Aeon), that start with a grand idea but require a whole slew of new physics (and new free parameters) to explain very little. In general, the number of new free parameters your idea introduces should be far smaller than the number of new things it purports to explain. Most people who invoke Occam's razor fail to apply it using this near-universal criterion.

The next time you encounter a bold new idea, ask yourself how many new free parameters are in there, compared to the leading theory it's seeking to replace or extend: call that number X. Then ask yourself how many hitherto unexplained phenomena it claims to explain: call that number Y. If Y is significantly greater than X, you might have something worth investigating. You might be dealing with a good idea. But if not, you're almost certainly dealing with a bad scientific idea at best, or an unscientific idea at worst. The great power of science is in its ability to predict and explain what we see in the Universe. The key is to do it as simply as possible, but without oversimplifying.
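The X-versus-Y check can be written down as a rough screen. This is only a sketch: the criterion above says Y should be "significantly greater" than X without giving a number, so the `margin` threshold here is a hypothetical choice, and counting "phenomena" is itself a judgment call.

```python
def worth_investigating(new_free_parameters: int,
                        newly_explained: int,
                        margin: float = 3.0) -> bool:
    """Rough screen comparing what an idea adds with what it explains.

    new_free_parameters: X, new free parameters relative to the leading theory.
    newly_explained: Y, hitherto unexplained phenomena the idea claims to explain.
    margin: hypothetical threshold for "significantly greater"; not from the source.
    """
    if new_free_parameters < 0 or newly_explained < 0:
        raise ValueError("counts must be non-negative")
    return newly_explained > margin * new_free_parameters


# Illustrative counts only: one new particle species versus the five phenomena
# named in the dark matter paragraph above.
print(worth_investigating(1, 5))
# A model with many tuned parameters that explains little.
print(worth_investigating(5, 2))
```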

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.