Why “humanity’s last exam” will ultimately fail humanity

- Anyone who’s used LLMs like Claude, Gemini, LLaMa, or OpenAI’s GPT-Series knows that it can give you a fast, confident answer to any query, but the answer is often unreliable or even flat-out wrong.

- Seeking to put the capabilities of these LLMs to the ultimate test, the Center for AI Safety, led by Dan Hendrycks, is crowdsourcing questions for a “last exam” from PhDs worldwide.

- The format of the questions, however, along with the lax requirements for a “correct” answer, places too much confidence in an algorithm that fails to demonstrate true PhD-level knowledge. That adds up to a recipe for disaster.

There are all sorts of situations we face in life where we need to consult someone with expert, specialized knowledge in an area where our own expertise is insufficient. If there’s a water leak in your house, you may need to consult a plumber. If there’s pain and immobility in your shoulder, you may need to consult an orthopedist. And if there’s oil leaking from your car, you may need to consult an auto mechanic. Sure, you can attempt to solve these problems on your own — consulting articles on the internet, reading books, leveraging trial-and-error, etc. — but no matter what expert-level knowledge you yourself possess, there will always be a place where your expertise ends. If you want to know more, you’ll have no choice but to either seek out a source who knows what you don’t or figure it out for yourself from scratch.

At least, those are the only avenues that were available until recently: consult an expert, figure out the answer on your own, or go and ignorantly mess with the problem at hand while hoping for the best. However, the rise of AI, particularly in the form of large-language models (LLMs) such as ChatGPT, Claude, Gemini, or many others, offers a potential new pathway: perhaps you can just consult one of these AI chatbots, and perhaps, once they’ve advanced past a certain point, they’ll be able to provide answers that are:

- correct,

- accurate,

- comprehensive,

- and expert-level,

to any inquiry you can muster. That’s the dream of many computer scientists and AI researchers today, but it’s complicated by an enormous suite of prominent, spectacular, and easily reproducible failures of LLMs to even correctly answer basic questions today.

To assess the capabilities of LLMs, the Center for AI Safety, led by Dan Hendrycks, is currently crowdsourcing questions for review to construct what they’re calling Humanity’s Last Exam. Seeking exceptionally hard, PhD-level questions (potentially with obscure, niche answers) from experts around the world, they believe that such an exam would pose the ultimate challenge for AI.

However, the very premise may be fundamentally flawed. Such an exam won’t necessarily demonstrate the success of AI, but may be more likely to fool humans into believing that an AI possesses capabilities that it, in fact, does not. Here’s why.

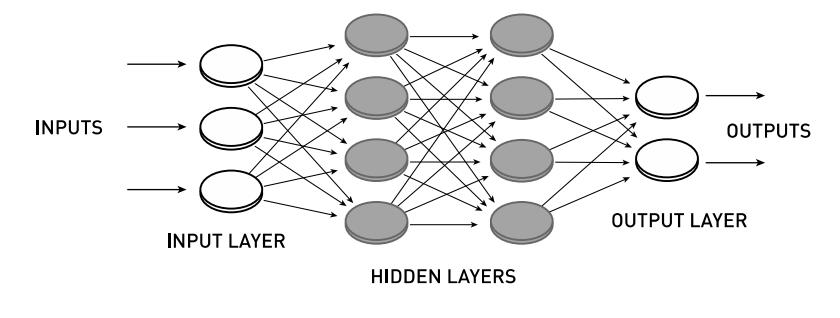

If you want to understand how AIs fail, it’s paramount that you first understand how AIs, and in particular, LLMs, work. In a traditional computer program, the user gives the computer an input (or a series of inputs), the computer then follows computations according to a pre-programmed algorithm, and then when it’s done with its computations, it returns an output (or a series of outputs) to the user. The big difference between a traditional computer program and the particular form of AI that’s leveraged in machine learning applications (which includes LLMs) is that instead of following a pre-programmed algorithm for turning inputs into outputs, it’s the machine learning program itself that’s responsible for figuring out the underlying algorithm.

How good is that algorithm going to be?

In principle, even the best algorithms that a machine is capable of coming up with are still going to be fundamentally limited by an inescapable factor: the quality, size, and comprehensiveness of the initial training data set that it uses to figure out the underlying algorithm. As Anil Ananthaswamy, author of Why Machines Learn: The Elegant Math Behind Modern AI, cautioned me about LLMs in a conversation back in July,

“While these algorithms can be extremely powerful and even outdo humans on the narrow tasks they are trained on, they don’t generalize to questions about data that falls outside the training data distribution. In that sense, they are not intelligent in the way humans are considered intelligent.”

In other words, if you want your LLM to perform better at tasks it currently doesn’t perform well at, the solution is to enhance your training data set so that it includes better, more relevant, and more comprehensive examples of the queries it’s going to be receiving.

For example, if you’ve trained your AI on a large number of images of human faces, the AI is going to be excellent at a number of tasks related to identifying human faces. Based on the features it can identify, any machine learning algorithm is going to sort and categorize these images and the features it identifies within them, and this algorithm will identify a certain probability distribution for features and correlation between features in this training data. However, there are assumptions that the algorithm must implicitly make: that the probability distribution within the training data represents “the truth” about whatever it’s being trained on.

However, if you then present, as an “input” into your trained AI model, some piece of data that is wholly different from the entire suite of training data, the algorithm — no matter how well-trained it is on the training data — will be unable to return an “output” that’s reliable in any way. If you train facial recognition software on hundreds of thousands of Caucasian faces only, it will likely perform very poorly at identifying features in (or generating images of) faces of people from Nigeria or Japan. LLMs, similarly, can only model correlations and relationships between words, phrases, and sentences that exist within its training data. AI can tell you what older, obscure terms like “fhqwhgads” mean, since that term exists in the data set was trained on, but struggles to tell the meaning of more modern terms like “wap,” even if you specify that you’re looking specifically for the meaning that young people intend.

It’s instructive to see precisely how and where modern LLMs succeed or fail today. You don’t have to look for particularly difficult problems or puzzles; you simply need to ask it a question where either:

- there’s a large amount of incorrect information out there, and it’s not quite straightforward to find the correct information,

- where the vast majority of answers are oversimplified or based off of a restrictive (and unnecessary) set of assumptions,

- or where the correct answer to a query requires some form of expert-level knowledge that isn’t generally appreciated or widely-held.

For example, you can ask any LLM you like a question like, “What violates the CPT theorem?” It will tell you a number of ways that the theorem can be violated, but at no point will it give you the critical information that nothing known violates it, and that all possible violations are purely hypothetical, with no evidence that anything in our reality actually violates it.

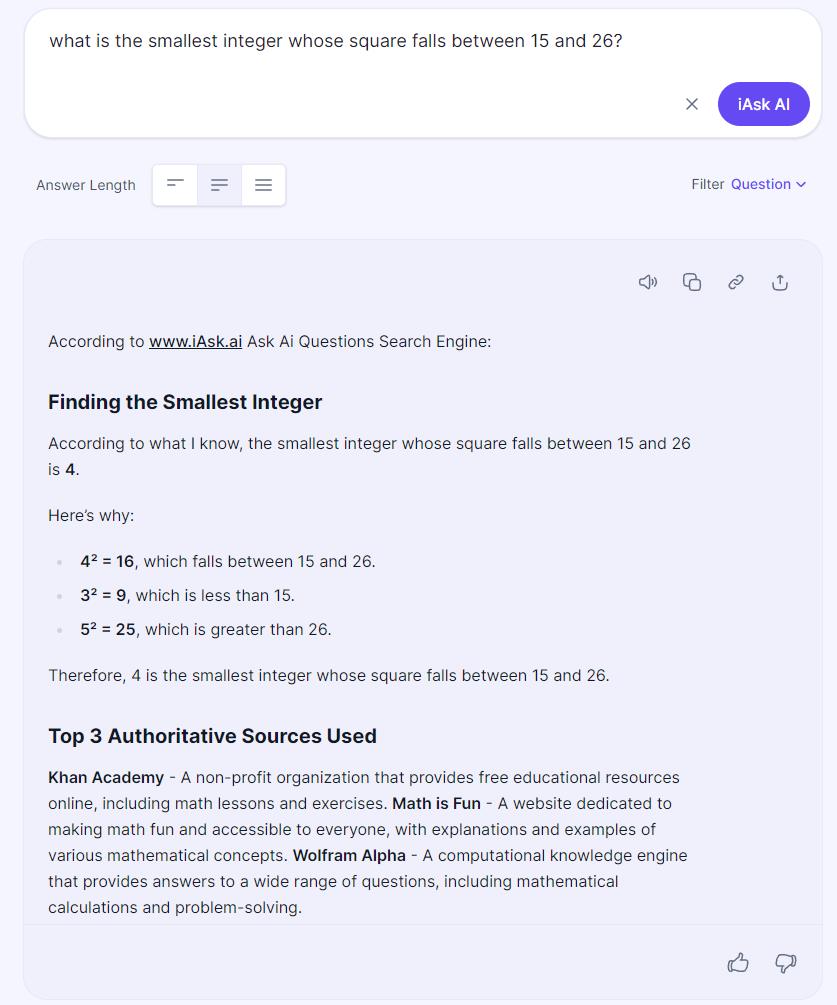

For a simpler example, you can ask any LLM what is the smallest integer whose square is between 15 and 26. Almost all LLMs will incorrectly tell you that the answer is 4, even though of the four possible answers (-5, -4, 4, and 5), it’s -5 that’s the smallest. If you then prompt the LLM to consider negative numbers, it will incorrectly tell you the answer is -4, and only with additional prompting once again can you get it to tell you the actual correct answer: -5.

You can also stump an AI fairly easily by asking it a question based on obscure, older knowledge that isn’t necessarily easy to find. For example, in Michael Jackson’s iconic 1987 music video, Bad, there’s a remarkable scene where a roller skater:

- begins in a split on the floor,

- grabs his jacket with his right arm and pulls up,

- appears to rise up as though his own arm is lifting the rest of his body,

- and then proceeds to move as though he’s walking forward, only to remain in place as his center of gravity remains constant.

Who is the person responsible for those glorious and pioneering moves?

If you ask an AI who the roller skater from Michael Jackson’s Bad video is, you might get the correct answer: it’s roller skating legend Roger Green. (Who verifies this fact in his Instagram bio.) However, you might get all sorts of alternative answers, none of which are correct. Some of the incorrect answers that various AI models have returned include:

- Tatsuo “Jimmy” Hironaga,

- not identified,

- Geron “Caszper” Candidate (note the misspelling of his actual nickname, Casper),

- Jeffrey Daniel,

- and, most bizarrely, Wesley Snipes.

As anyone can clearly see, below, this roller skating man is most definitely not Wesley Snipes! (Who most definitely does appear in the video, but not on skates.)

These examples are instructive in a variety of ways for illustrating how LLMs can fail. Although it may not be immediately obvious, these examples of:

- an LLM not knowing what “wap” stands for,

- an LLM telling you several (non-physically-real) ways to violate the inviolable CPT theorem,

- an LLM unable to realize, in the context of an integer whose square is between 15 and 26, that -5 is smaller than 4,

- and an LLM unable to identify the roller skater in Michael Jackson’s Bad video,

are all connected. In each case, a superior set of training data — one that included a clear example of the essential question being correctly and comprehensively answered — would empower the LLM to give a superior answer to the answers it presently gives.

It isn’t clear that any other method, however, would result in this type of improvement. We don’t have any sort of true artificial general intelligence (AGI) at present; the closest thing we have is the fakery of large language models (LLMs), which are inherently limited and unlikely to ever lead to true AGI. As François Chollet noted, “Almost all current AI benchmarks can be solved purely via memorization… memorization is useful, but intelligence is something else.” In fact, you can easily demonstrate that LLMs (with any LLM that you like) are not intelligent at all, at least as we recognize intelligence, and that it doesn’t take a PhD-level test to prove it. All it takes are questions that ask an LLM to perform some type of reasoning task — not something that could be answered by rote memorization — and you’ll expose how limited your AI actually is.

Which brings us back to Humanity’s Last Exam, and why it’s such an absurd notion to begin with. I was contacted by the Director of the Center for AI Safety, Dan Hendrycks, with the following message:

“OpenAI’s recent o1 model performs similarly to physics PhD students on various benchmarks, yet it’s unclear whether AI systems are truly approaching expert-level performance, or if they’re merely mimicking without genuine understanding. To answer this question, The Center for AI Safety and Scale AI are developing a benchmark titled “Humanity’s Last Exam” consisting of difficult, post-doctoral level questions meant to be at humanity’s knowledge frontier.”

Sounds reasonable, right?

The problem is, they don’t want actual questions that probe for deep knowledge. They don’t want a question that requires a nuanced answer and a deep understanding of all the factors at play. They don’t want the types of questions you might ask of a seminar speaker, of a student defending their dissertation, or of a researcher who’s staked out a contrarian position on a scientific matter. What they want are multiple choice questions that, supposedly, would be answerable only by someone who is competent in the field.

That’s a fundamental flaw in terms of the very design of the exam! While a human who did gain the relevant expertise and became competent in such a field might certainly ace such an exam, the very format of the exam is designed to simultaneously:

- obscure any display of actual intelligence, including reasoning, logic, and methodology,

- while giving a pass to a sufficiently large “memorized” library of facts or rote answers.

In other words, without giving something that purports to be artificially intelligent an actual exam that one would give to an intelligent being, you fundamentally obscure the line between memorization and intelligence.

When I wrote back to Dan, I gave him five questions from the field of astrophysics — all questions that would be fundamentally unsuitable for the format of the exam his team is designing — that would actually probe the level of intelligence (and knowledge of advanced astrophysics) of whoever or whatever answers them. Here are those five questions:

- Under what conditions does the Mészáros effect break down as a useful approximation of structure formation in the early Universe?

- Describe the contributions of Robert Brout, Alexei Starobinskii, Rocky Kolb, and Stephen Wolfram to the idea of cosmic inflation prior to the publication of Alan Guth’s famous paper.

- Summarize the case that the Universe that arose from inflation began from a non-singular predecessor state.

- Explain why the double degenerate merger scenario for Type Ia supernovae is superior, citing observational evidence, to the accretion scenario where a white dwarf exceeds the Chandrasekhar mass.

- When an observer in free-fall crosses the event horizon of a non-rotating black hole, what is the geometric shape of the region interior to the event horizon of the region that remains causally connected to the observer?

Yes, you can go and feed these questions to whatever LLM you prefer right now, and you’ll surely get an answer. It won’t be a good answer, most likely, and under all circumstances (that I’ve tested it under) you’ll get an answer that at best is only partially correct. (More specifically, it only seems to choose to discuss the most commonly referenced aspects of these phenomena.) However, these serve as excellent examples that one might ask, as an astrophysicist, of another astrophysicist who studies:

- the formation and growth of cosmic structure in the Universe,

- cosmic inflation and its historical origins,

- the Universe that existed prior to the onset of the hot Big Bang,

- someone who specializes in type Ia supernovae or the mechanism of white dwarf explosions,

- or someone who studies the interiors of black holes,

respectively.

The reason these would be good, probing questions to ask someone with PhD-level knowledge is because a satisfactory answer requires, as a prerequisite, a deep understanding of the field (and the relevant issues within it) as a starting point. You need to be able to provide an introduction to the broad subject that the question is addressing, then to specifically identify the relevant issues at play in each particular question, then be prepared to discuss which factors are dominant and why, and finally to deliver a conclusion that’s based on what’s physically known. Those steps, not “the answer,” is what actually serves to test what we recognize as competence.

If we wanted to design a test that truly determined whether an AI actually demonstrated true generative intelligence, it wouldn’t be a test that could be defeated with an arbitrarily large, comprehensive set of training data. The notion that “infinite memorization” would equate to genuine AGI is absurd on its face, as the unique mark of intelligence is the ability to reason and infer in the face of incomplete information. If you can supply the subject of your test with complete information as respects the test itself, then you have no hope of measuring how it performs on measures of intelligence at all; you simply measured how well your subject performed on the test.

It’s for these essential reasons that the attempt to create Humanity’s Last Exam with a series of multiple choice questions that are, at their core, knowledge-based instead of reasoning-based is destined to fail. Sure, you can administer such an exam, and you can declare “success” when some LLM or other passes the exam, but it won’t indicate the arrival of true AGI in any way. Although there are many things that LLMs are extremely good for, and there are many legitimate ways that AI can be leveraged in a positive and useful manner, we’re still likely a very long way from true artificial general intelligence. Creating a multiple choice exam, no matter how complex or esoteric, is no way to measure its arrival.