Astronomy’s secret weapon in the resolution wars: interferometry

- In astronomy, the total area of your telescope determines your light-gathering power, while the number of wavelengths of light that fit across your telescope’s diameter determines your telescope’s resolution.

- However, through the technique of interferometry, we can enhance the resolution of an array of telescopes by placing a large number of them far apart from one another and synchronizing them all together.

- This technique brought us our first images of a black hole’s event horizon, but now astronomers seek to do it in optical (visible) light, surpassing Hubble’s resolution by a factor of more than 100. Here’s what’s ahead.

When human eyes gaze up at the night sky, what we can see is profoundly limited. The pupils of our eyes, which allow light through, can only reach a maximum diameter of around 7 millimeters (0.28 inches) each, which limits the amount of light our eyes can collect and, therefore, the faintness of the objects we’re sensitive to. Because we can only see visible (optical) light, the maximum resolution we can see is defined by the number of wavelengths that can fit across the diameter of our pupils. It’s why Mizar and Alcor, which together appear as the second “star” in the handle of the Big Dipper, can be seen as two individual points of light by unaided human eyes, but the binary star Albireo, within the Summer Triangle, requires binoculars or a telescope to resolve into two separate stars.

Traditionally, the simplest way to improve your view of the Universe is to build larger-aperture instruments: telescopes with larger collecting areas. That gives them both more light-gathering power and higher resolution, as larger telescopes collect more light in the same amount of observing time, and as more wavelengths of the same type of light can fit across the diameter of your telescope, producing sharper images. However, larger telescopes are more expensive and more challenging to build instruments for, which is part of why only two optical telescopes larger than ~12 meters are under construction today.

However, a technique known as interferometry offers the best of both worlds: we can build an array of smaller telescopes and synchronize them together, producing images with:

- the light-gathering power of all the telescopes in the array added together,

- but with resolution determined by the distance between the telescopes, rather than the size of any individual telescope itself.

In recent years, this technique, when applied to radio astronomy, brought us our first images of a black hole’s event horizon. Now, in the mid-2020s, astronomers are looking to do something similar with optical telescope arrays. If successful, it can produce images that are literally hundreds of times sharper than anything our best space-based optical telescope, Hubble, has ever captured. Here’s how.

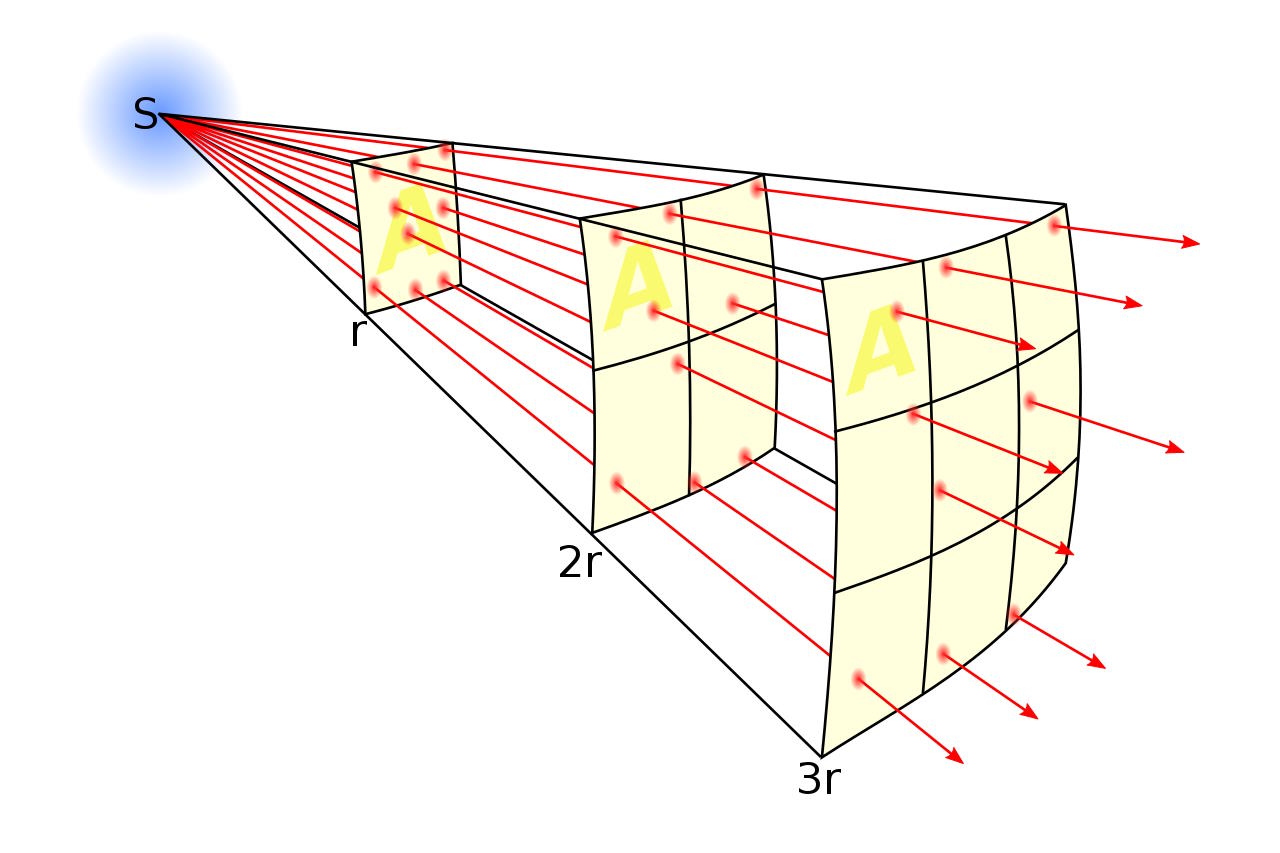

The first thing we have to understand is this: from every light-emitting source in the Universe, photons propagate outward from it at the speed of light omnidirectionally. If you have a single telescope at a single location in space, those photons arrive at that telescope after traveling for the same amount of time: the light-travel time of each photon is identical. However, if you have telescopes at two different locations in space, that won’t necessarily be the case any longer. The amount of time it takes the light to travel from the source to “point A” might not be identical to the time it takes light to travel from the source to “point B,” even if points A and B are relatively close together.

For example, if you have two telescopes that are separated by a certain distance (say, 300 meters), then there might be a time delay between when the emitted light arrives at “point A” versus “point B” of as much as one microsecond: the amount of time it takes light to travel 300 meters. For features that remain constant over long periods of time, like stars, galaxies, nebulae, and other non-transient features, this doesn’t make much of a difference, as what you see at any particular instant is not going to be all that different from what happened a microsecond before or after. But for events that occur at a specific moment, like the detonation of a supernova or the merger of two black holes or neutron stars, it’s incredibly important that you synchronize the timing between your different telescopes.
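As a quick check of that one-microsecond figure, here is a minimal Python sketch. The function names are illustrative, and the second function assumes the simplest possible geometry: a flat baseline and a source so distant that its light arrives as a plane wave.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, in meters per second

def max_arrival_delay(baseline_m: float) -> float:
    """Largest possible arrival-time difference (seconds) between two
    telescopes: reached when the source lies along the line joining them."""
    return baseline_m / C

def geometric_delay(baseline_m: float, angle_rad: float) -> float:
    """Arrival-time difference (seconds) for a source whose direction makes
    angle_rad with the perpendicular to the baseline (plane-wave assumption)."""
    return baseline_m * math.sin(angle_rad) / C

if __name__ == "__main__":
    print(f"300 m, worst case: {max_arrival_delay(300.0) * 1e6:.4f} microseconds")
    print(f"300 m, source 30 degrees off the perpendicular: "
          f"{geometric_delay(300.0, math.radians(30.0)) * 1e6:.4f} microseconds")
```

A source directly perpendicular to the baseline gives zero delay, which is why the text says the delay can be "as much as" one microsecond rather than exactly that.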

There’s another thing we need to take note of beyond “timing” the arrival signals: resolving the object we’re seeking to observe. If all you had was a point-like detector, even a powerful enough signal could result in you detecting it; if the light that arrives has enough intensity, you can “see” it with your instruments. However, you wouldn’t be able to resolve that object; it would only appear as a point in your point-like instrument. You could learn what that light’s energy was, what wavelength it was at, and what direction it was arriving from. But you wouldn’t be able to measure the physical properties of the light-emitting source itself: not its size, not its shape, not its physical extent, and not whether different components of that source contained varied colors or brightnesses. A single point can’t tell you that information.

But a large-area detector, like a dish (or, in general, the primary mirror on a telescope), can give you that information. Now, the light isn’t simply striking a point, but rather strikes an area. Light:

- left the source,

- spread out in a spherical fashion,

- got reflected off of a large-area mirror,

- and became focused to a point.

If that source is truly point-like, the way a single, distant star is point-like, then a point-like detector and a large-area detector will see the same features. But if your source either consists of multiple points of light (like a star cluster or a multi-star system) or is an extended light source (like a galaxy or nebula), then the light from each individual source will get focused to a slightly different location.

If your telescope mirror is large enough compared to the angular separation of distinct sources (or the angular size of an extended object), you’ll be able to resolve the multiple, separated features that were present within the original source. For a single-dish telescope, there’s a simple relationship between:

- the angular resolution you can achieve,

- the diameter of your primary (light-collecting) mirror,

- and the wavelength of light you’re observing in.

If your light source is either smaller in angular size or made of points that are closer together, it becomes harder to resolve the individual components, just as looking with a smaller-diameter mirror or in longer wavelengths of light lowers your resolution. And this is bad: when you don’t have enough resolution, everything just appears as a blurry, unresolved, single spot in your instruments.
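That relationship is commonly written as the Rayleigh criterion for a circular aperture: the smallest resolvable angle is roughly 1.22 times the wavelength divided by the mirror diameter. The sketch below simply evaluates it; the 550-nanometer wavelength is an assumed representative value for visible light, and the result is an idealized diffraction limit that ignores the atmosphere and any optical imperfections.

```python
import math

ARCSEC_PER_RADIAN = 180.0 * 3600.0 / math.pi

def rayleigh_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Idealized diffraction limit (arcseconds) of a circular aperture,
    using the Rayleigh criterion: theta ~ 1.22 * wavelength / diameter."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN

if __name__ == "__main__":
    visible = 550e-9  # assumed representative visible wavelength, in meters
    for name, diameter in [("2.4 m mirror (Hubble-sized)", 2.4),
                           ("10 m mirror", 10.0)]:
        print(f"{name}: {rayleigh_limit_arcsec(visible, diameter):.4f} arcsec")
```

Doubling the diameter halves the smallest resolvable angle, and doubling the wavelength doubles it, which is the trade-off the paragraph above describes.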

You can overcome this by building larger and larger telescopes, or making your telescope sensitive to shorter wavelengths of light, or by getting physically closer to the light sources you’re trying to observe. However, the first option is often limited by cost, the second option is limited by Earth’s atmospheric windows and the type of light that’s emitted by objects, and the third option is really only useful for bodies within our own Solar System. For interstellar or extragalactic objects, you cannot appreciably change the distance between the observer and the source with current technology.

Imagine, then, that you took the largest telescope you dare imagine building, unconstrained by cost or resources. Larger telescopes will give you better resolution, as more wavelengths of the same type of light can fit across a larger-diameter telescope. Larger telescopes will also give you more light-gathering power, as the amount of light you can collect is proportional to a telescope’s area, or its radius (or diameter) squared. Bigger is better in both of these ways, even if the telescope you’ve imagined is infeasible to build. “Too expensive,” you’re going to object, and that objection is legitimate for most telescopes that are anywhere from about 100 meters up to the size of the Earth (or beyond).

But we’re not done.

The reason I wanted you to imagine a stupendously large telescope is because now I want you to imagine something you’d never, ever do if it were real: vandalizing it. Imagine you had the ability to darken out huge portions of your extraordinarily large telescope’s mirror, like you were making a mask from that mirror. Obviously, you wouldn’t be able to receive light from those locations. As a result, your sensitivity would suffer: fainter and fainter objects would disappear from view as you masked off more and more of that mirror, because you’d be decreasing its light-gathering power. But as long as you left “good spots” all throughout the mirror itself, including at the edges, your resolution wouldn’t decrease; it would still give you the resolving power of the full, unmasked mirror.

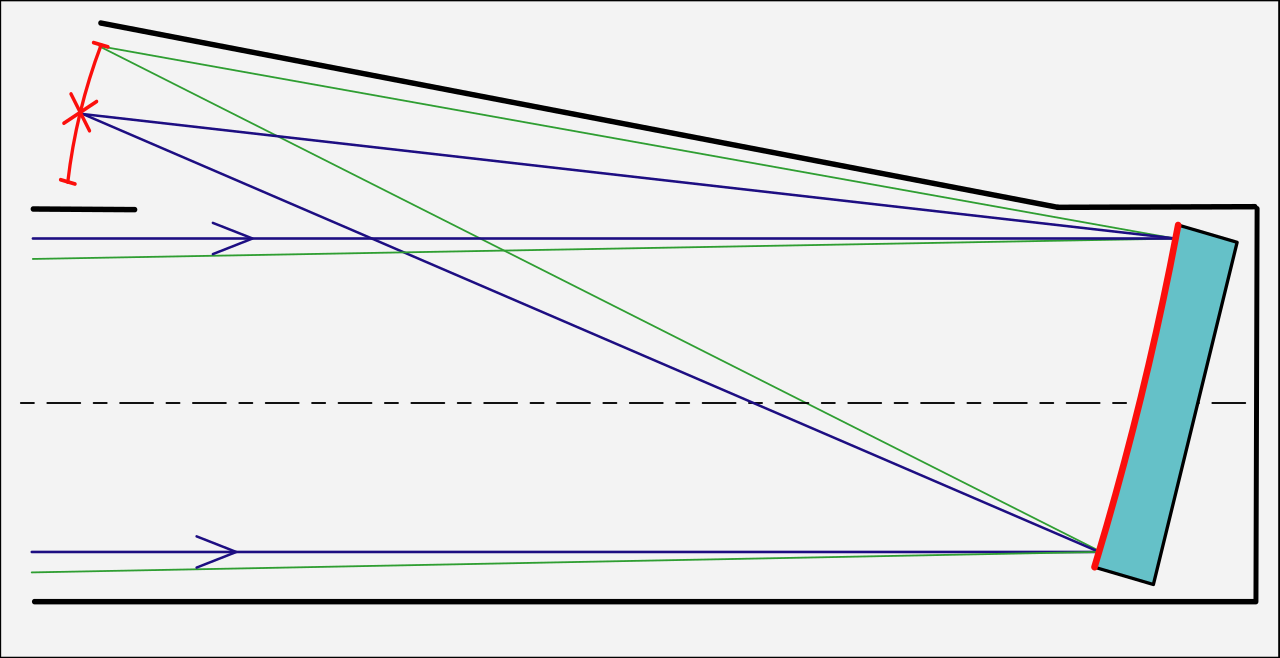

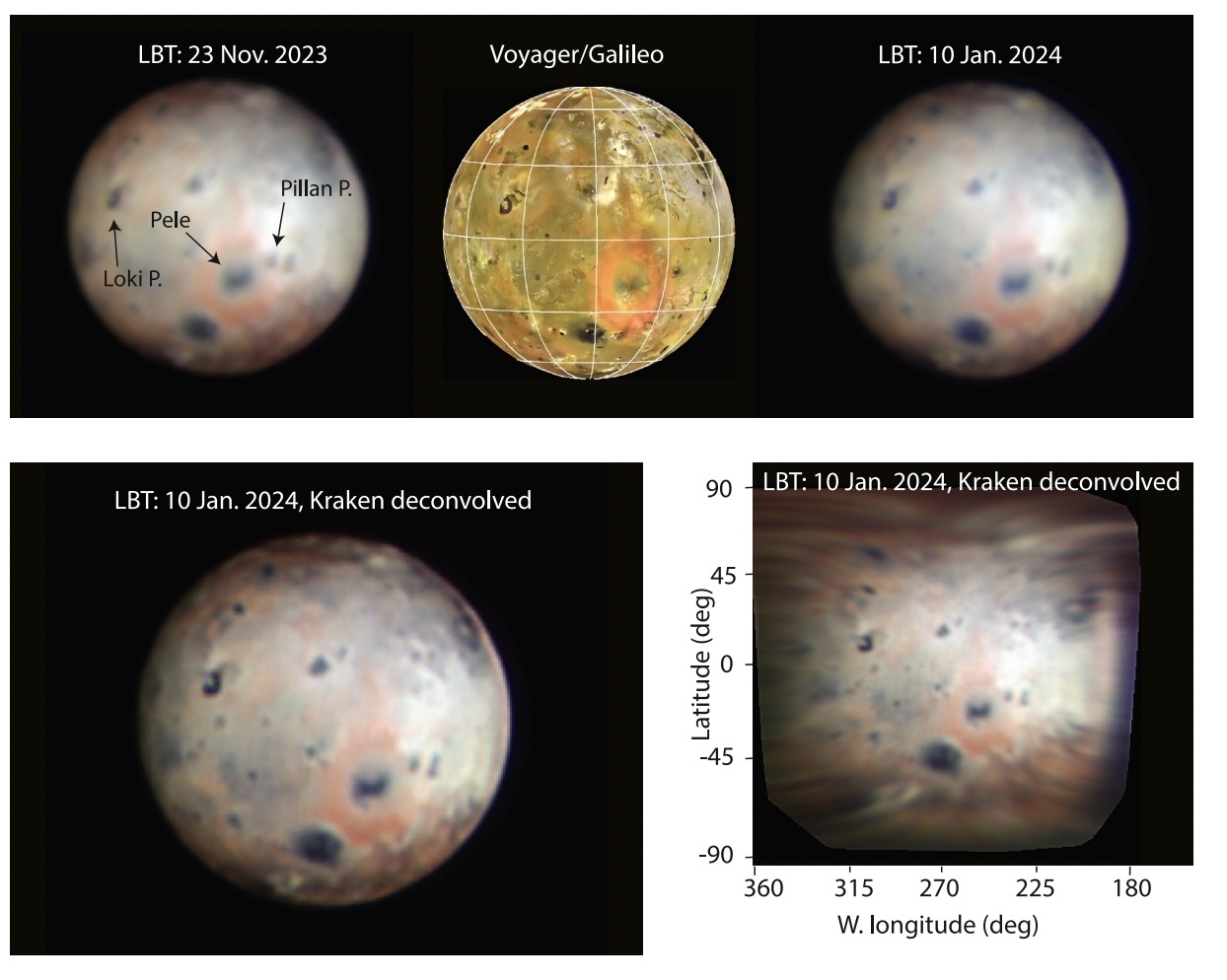

This is the principle behind building not just a single telescope, but an array of telescopes: it’s nearly identical, optically, to a single-mirror telescope that simply has an enormous but incomplete mask applied to it. Above, the LBTO, or Large Binocular Telescope Observatory, behaves in exactly this fashion. With two 8.4-meter-diameter mirrors mounted on the same support structure, but with an edge-to-edge baseline of 23 meters, it has already done something phenomenal: provided us with the highest-resolution image of Jupiter’s moon Io ever acquired from a telescope/camera that didn’t physically fly to Jupiter itself.
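The masked-mirror idea can be illustrated with the textbook one-dimensional analogue: a single slit versus two identical slits placed far apart. This is only a sketch under simplifying assumptions (1D slits rather than circular mirrors, small angles, perfectly coherent combination), and the 1-meter openings and 10-meter separation are hypothetical values chosen for illustration, not the dimensions of any real instrument.

```python
import math

def sinc(x: float) -> float:
    return 1.0 if x == 0.0 else math.sin(x) / x

def single_slit(theta: float, wavelength: float, width: float) -> float:
    """Normalized intensity pattern of one opening of the given width."""
    return sinc(math.pi * width * theta / wavelength) ** 2

def double_slit(theta: float, wavelength: float, width: float,
                separation: float) -> float:
    """Two such openings, coherently combined: the single-opening envelope
    multiplied by fringes whose spacing is set by the separation."""
    fringes = math.cos(math.pi * separation * theta / wavelength) ** 2
    return single_slit(theta, wavelength, width) * fringes

def first_minimum(pattern, step: float, limit: float):
    """Scan outward from theta = 0; return the angle where the intensity
    first stops decreasing (the edge of the central peak)."""
    previous = pattern(0.0)
    i = 1
    while i * step <= limit:
        current = pattern(i * step)
        if current > previous:
            return (i - 1) * step
        previous = current
        i += 1
    return None

WAVELENGTH = 550e-9   # assumed visible wavelength, meters
WIDTH = 1.0           # hypothetical size of each opening, meters
SEPARATION = 10.0     # hypothetical center-to-center separation, meters

single_null = first_minimum(
    lambda t: single_slit(t, WAVELENGTH, WIDTH), 1e-10, 1e-6)
array_null = first_minimum(
    lambda t: double_slit(t, WAVELENGTH, WIDTH, SEPARATION), 1e-10, 1e-6)

if __name__ == "__main__":
    print(f"single opening, central peak half-width: {single_null:.3e} rad")
    print(f"two openings,   central peak half-width: {array_null:.3e} rad")
```

In this toy model, the central peak of the two-opening pattern is far narrower than that of a single opening, even though the total collecting width has only doubled: the fine detail is set by the separation, not by the size of each piece.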

You have to be very, very careful, however, about how you go about combining data from an array of telescopes to form a single image. The temptation is strong to simply do the following:

- build an array of telescopes,

- focus the light from each telescope the way you’d do it for a single-dish telescope,

- have each primary mirror focus the light, collecting and storing the data,

- and then combine the data from those images to produce a single image.

If that were the procedure you followed, tempting though it might be, you would only average your data out. You would, in fact, get data that was no better than taking data with a single telescope at an “array” of different times, and then just co-adding all the data together. You wouldn’t get the improvement in resolution you hoped for at all, and instead would just “see” what a single dish was capable of seeing.

How, then, can you unlock the true power of an array of telescopes, and get to that “holy grail” of the resolution of the distance between the telescopes?

The key is the technique of very long baseline interferometry (VLBI, for short), and in particular the ability to put the observations together from different telescopes that correspond to the exact same moments in time with respect to the source being imaged. Light signals arrive, remember, with unique light-travel-times separating the source from each separate telescope/dish, owing to the varying distance between the source and the various points on Earth where the telescopes exist. You must know the arrival time of the signals at each different telescope location that’s part of the array in order to successfully combine the data together into a single image.

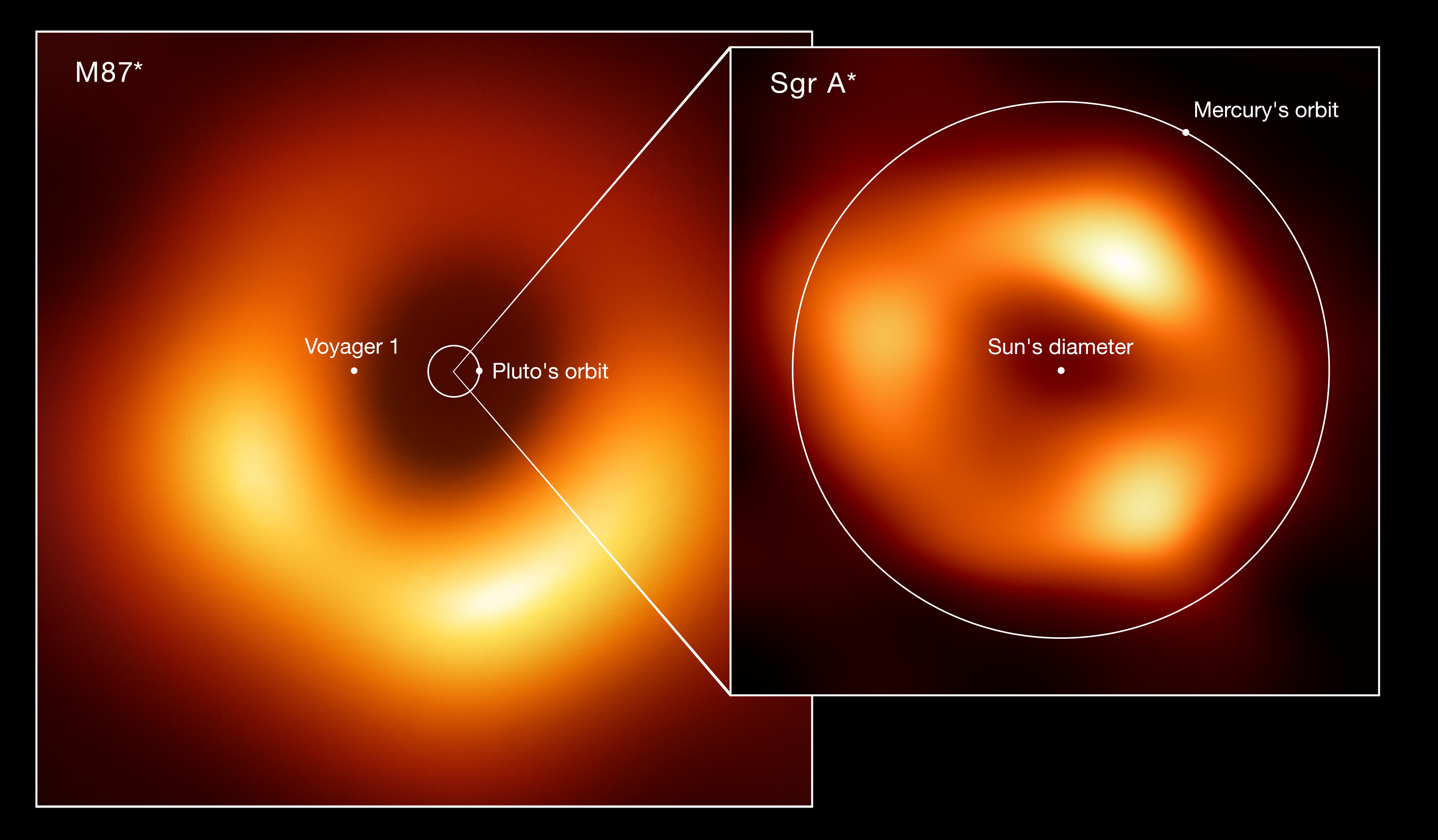

For the Event Horizon Telescope, the global array of radio telescopes that successfully imaged the event horizons of the two largest-diameter black holes as seen from Earth (M87*, at the center of the galaxy M87 in the Virgo Cluster, and Sagittarius A*, at the center of the Milky Way), the way to accomplish this was by making use of atomic clocks. At every one of the eight telescope (or telescope array) locations across Earth, an atomic clock was placed, allowing us to keep time to precisions of attoseconds, or 10⁻¹⁸ seconds: thousands of times more precise than the femtosecond differences in arrival times between subsequent wavelengths of (visible) light. By observing:

- the same object,

- at the same time,

- at the same wavelength/frequency,

- all with the proper corrections applied for calibrations and atmospheric noise,

you can combine the data from the different telescopes together in a way that will, in fact, give you the desired resolution.
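To make the "same moment in time" requirement concrete, here is a toy Python sketch of the core idea: two stations record the same noisy signal with an unknown offset, and sliding one recording against the other (cross-correlation) reveals the offset. Everything here is an assumption made for illustration, including the sample counts, the noise level, and the function name; real correlators are far more elaborate and must also account for effects such as Earth’s rotation and clock drift.

```python
import random

def best_lag(a, b, max_lag):
    """Return the shift (in samples) of recording b relative to recording a
    that maximizes their cross-correlation."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(a)):
            j = i + lag
            if 0 <= j < len(b):
                score += a[i] * b[j]
        if score > best_score:
            best, best_score = lag, score
    return best

rng = random.Random(0)   # fixed seed so the toy example is repeatable
TRUE_LAG = 37            # hypothetical delay, in samples
N = 2000                 # hypothetical recording length, in samples

# The "sky signal" is random noise; each station adds its own receiver noise.
source = [rng.gauss(0.0, 1.0) for _ in range(N + TRUE_LAG)]
station_a = [source[i + TRUE_LAG] + 0.5 * rng.gauss(0.0, 1.0) for i in range(N)]
station_b = [source[i] + 0.5 * rng.gauss(0.0, 1.0) for i in range(N)]  # arrives later

recovered = best_lag(station_a, station_b, max_lag=100)

if __name__ == "__main__":
    print(f"true delay: {TRUE_LAG} samples, recovered: {recovered} samples")
```

Only once the recordings are lined up this way do they refer to the same wavefront, which is the precondition for combining them into a single, sharper image.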

Despite the conceptual simplicity of this approach, an enormous technical challenge had to be overcome to make these multi-telescope synchronizations possible. The problem is how to synthesize these different images together, and the technique is literally known as aperture synthesis. For radio astronomy, including the Event Horizon Telescope, you simply need to measure the amplitude and phase of the incoming signal, which can be accomplished electronically. The theoretical solution to this problem is actually old, dating back to 1958, when Roger Jennison wrote his now-famous paper: “A phase sensitive interferometer technique for the measurement of the Fourier transforms of spatial brightness distributions of small angular extent.” Here’s the gist of it.

- Imagine, first, that you have three antennas (or, alternatively, radio telescopes) that are all hooked up together, separated by a known distance from one another and also from a distant source.

- Then, imagine that the distant source emits a signal that can be received by each antenna, where the relative arrival times of the signal at each different antenna can be known/computed.

- Now, you’re going to want to mix these multiple signals together, and when you do, the signals from each individual antenna will mix together: partially due to real (physical) effects, but also partially due to sources of error.

- Through Jennison’s pioneering process, which is still used today in the form of self-calibration, we are now able to combine the real effects, properly, while ignoring and filtering out the errors.
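The quantity at the heart of the three-antenna trick is usually called the closure phase: add up the measured phases around the triangle of antennas, and any error that belongs to an individual antenna cancels out. The sketch below demonstrates only that cancellation; the phases, amplitudes, and per-antenna errors are made-up numbers, and the function names are illustrative rather than taken from any real software package.

```python
import cmath

def measured_visibility(true_vis: complex, err_i: float, err_j: float) -> complex:
    """Signal on the baseline between antennas i and j, corrupted by a phase
    error (radians) that belongs to each individual antenna."""
    return true_vis * cmath.exp(1j * (err_i - err_j))

def closure_phase(v12: complex, v23: complex, v31: complex) -> float:
    """Phase of the product around the triangle 1 -> 2 -> 3 -> 1."""
    return cmath.phase(v12 * v23 * v31)

# Hypothetical "true" signals on the three baselines (amplitude, phase in radians).
true_12 = cmath.rect(1.0, 0.4)
true_23 = cmath.rect(0.8, -1.1)
true_31 = cmath.rect(0.6, 0.9)

# Hypothetical phase errors at each antenna (atmosphere, electronics, clocks).
e1, e2, e3 = 2.0, -0.7, 1.3

meas_12 = measured_visibility(true_12, e1, e2)
meas_23 = measured_visibility(true_23, e2, e3)
meas_31 = measured_visibility(true_31, e3, e1)

true_closure = closure_phase(true_12, true_23, true_31)
measured_closure = closure_phase(meas_12, meas_23, meas_31)

if __name__ == "__main__":
    print(f"baseline 1-2 phase, true vs. measured: "
          f"{cmath.phase(true_12):+.3f} vs. {cmath.phase(meas_12):+.3f} rad")
    print(f"closure phase,      true vs. measured: "
          f"{true_closure:+.3f} vs. {measured_closure:+.3f} rad")
```

Each individual baseline phase is badly corrupted, yet the sum around the triangle is untouched, because each antenna’s error enters once with a plus sign and once with a minus sign.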

Remarkably, this technique can, in theory, be applied to any wavelength at all, and shouldn’t be limited to radio wavelengths.

For optical and certain near-infrared wavelengths, which can also be observed from the ground (like the radio portion of the electromagnetic spectrum), however, you can’t measure the amplitude and phase of the incoming light so easily. You cannot simply measure them electronically, the way you can with radio waves. Instead, the electromagnetic field itself must be propagated by a sensitive-enough optical system and then subjected to optical interference, which requires extraordinarily accurate optical delay and wavefront aberration correction systems. These were first developed in the 1990s and applied to systems such as the Keck Interferometer, which linked up two 10-meter telescopes to create an instrument with an 85-meter baseline.

But why stop there? Why stop with just two optical telescopes, or with a modest (less than 100-meter) baseline? Yes, greater distances are harder, and using more than two telescopes is an additional challenge, but the rewards are grander as well: better resolution across the full coverage area of the object/source you’re attempting to image. This is not some pipe dream, but an ambitious, under-construction project that’s currently being built and developed: the Magdalena Ridge Observatory Interferometer (MROI), just a hop, skip, and a jump away from the Very Large Array, which uses the same technique but at much longer (radio) wavelengths.

To be sure, there are a lot of challenges associated with bringing this new technology to fruition, most of them extremely technical. However, arguably the most complex piece of the puzzle — the Beam Combining Facility that will bring the data from any and all of the proposed (ten) telescopes across the possible (28) stations together — has already been completed. Baselines can be as small as 7.8 meters or as large as 340 meters: the latter of which would be a record for optical astronomy. Think about this for a moment: the Hubble Space Telescope, for all that it brings to the world, is only a single-dish telescope with a diameter of 2.4 meters. At 340 meters, the MRO interferometer could achieve resolutions that are up to 142 times as sharp as Hubble’s views.
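The "142 times" figure is just a ratio of sizes, and the number of telescope pairs available to the array follows from simple counting. A small Python sketch of both calculations, assuming the two instruments are compared at the same wavelength and that resolution scales inversely with baseline or diameter (function names are illustrative):

```python
import math

def resolution_gain(baseline_m: float, reference_diameter_m: float) -> float:
    """How many times sharper a baseline is than a single mirror of the given
    diameter, at the same wavelength (resolution scales as wavelength / size)."""
    return baseline_m / reference_diameter_m

def baseline_count(n_telescopes: int) -> int:
    """Number of distinct telescope pairs in an array."""
    return math.comb(n_telescopes, 2)

if __name__ == "__main__":
    print(f"340 m baseline vs. a 2.4 m mirror: "
          f"{resolution_gain(340.0, 2.4):.1f}x sharper")
    print(f"distinct pairs among 10 telescopes: {baseline_count(10)}")
```

Each pair of telescopes samples the source at one separation and orientation, which is why adding telescopes improves image quality much faster than the telescope count alone would suggest.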

This remains true despite the relatively modest size (1.4 meters) of each of the telescopes within the array. The only limitation is that the astronomical source in question must be bright enough so that each individual telescope is capable of detecting it. As long as that’s the case, and as long as all the steps and stages of the interferometer array are functioning properly, then any sufficiently bright object, from newborn stars with protoplanetary disks to active black holes at the centers of galaxies, can be imaged at resolutions that even 30-meter class telescopes can only dream of.

For better resolution and superior light-gathering power, “bigger is better” is still true, but “smaller, more modular, and capable of interferometry” can give you unprecedented resolution, albeit at the expense of light-gathering power. In terms of a scientific bang-for-your-buck, investing in a project like this may be one of the smartest moves humanity can make.