Don’t worry about making a mistake. It’s how we learn.

- Humans learn best when avoiding too much complexity and getting the gist of situations, according to a new study by researchers at the University of Pennsylvania.

- Instead of remembering every detail, we learn by categorizing situations through pattern recognition.

- We wouldn’t retain much if we considered a high level of complexity with every piece of information.

Humans learn in patterns. Take a bush that you pass every day. It’s not particularly attractive; it just happens to exist along your normal route. One day you notice a brownish tail sticking out of one side. A nose pops out of the other side. The bush happens to be roughly the size of a tiger. The only thought you have is run.

You didn’t need to see the entire tiger to get out of there. Enough of a pattern had emerged for you to get the gist.

Getting the gist is how we learn, according to a new study by researchers at the University of Pennsylvania. Published in Nature Communications, the paper looks at the balance between simplicity and complexity. Human learning falls somewhere in the middle of this spectrum: enough to get an idea, not enough to avoid mistakes. Mistakes are an integral aspect of learning.

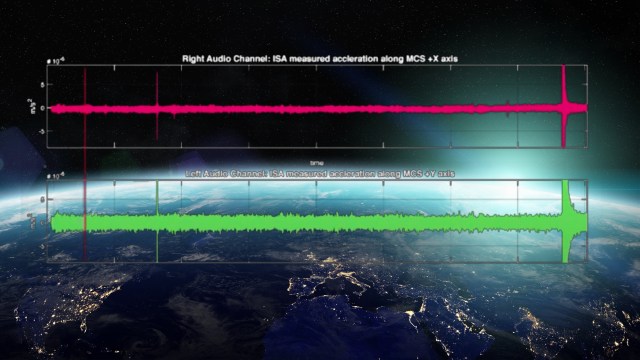

The team, consisting of physics Ph.D. student Christopher Lynn, neuroscience Ph.D. student Ari Kahn, and professor Danielle Bassett, recruited 360 volunteers. Each participant stared at five grey squares on a computer screen, with every square corresponding to a keyboard key. Two squares simultaneously turned red. Participants were asked to tap the corresponding keys every time this happened.

While volunteers suspected the color changes were random, the researchers knew better. The sequences were generated using one of two networks: a modular network and a lattice network. Though the two networks are nearly identical at a small scale, the patterns they produce look different at a larger scale. Lynn explains why this matters:

“A computer would not care about this difference in large-scale structure, but it’s being picked up by the brain. Subjects could better understand the modular network’s underlying structure and anticipate the upcoming image.”
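To make the difference concrete, here is a minimal sketch of the two kinds of networks. It assumes each sequence is a random walk along a network's edges, which the description above does not spell out, and the sizes (15 nodes, four links per node) and function names are illustrative choices rather than the study's confirmed setup. In both networks every node has the same number of neighbors, so they look alike up close; only the modular one has tight clusters.

```python
import random

def ring_lattice(n, k=2):
    """Lattice: each node links to its k nearest neighbors on either side of a ring."""
    return {i: sorted({(i + d) % n for d in range(-k, k + 1) if d != 0})
            for i in range(n)}

def modular_graph(n_modules=3, size=5):
    """Modular: dense clusters, joined to the next cluster by a single bridge edge."""
    n = n_modules * size
    adj = {i: set() for i in range(n)}
    for m in range(n_modules):
        nodes = list(range(m * size, (m + 1) * size))
        first, last = nodes[0], nodes[-1]
        # Connect every pair inside the cluster except first-last,
        # so the bridge edges below keep every node at the same degree.
        for a in nodes:
            for b in nodes:
                if a != b and {a, b} != {first, last}:
                    adj[a].add(b)
        # Bridge from this cluster's last node to the next cluster's first node.
        nxt = ((m + 1) % n_modules) * size
        adj[last].add(nxt)
        adj[nxt].add(last)
    return {i: sorted(v) for i, v in adj.items()}

def random_walk(adj, steps, seed=None):
    """Assumed sequence generator: hop to a uniformly random neighbor each step."""
    rng = random.Random(seed)
    node = rng.choice(sorted(adj))
    path = [node]
    for _ in range(steps - 1):
        node = rng.choice(adj[node])
        path.append(node)
    return path

modular = modular_graph()
lattice = ring_lattice(15)
walk = random_walk(modular, 20, seed=0)
```

A walk on the modular network tends to linger inside one cluster before crossing a bridge, which is the kind of large-scale regularity a learner could pick up on without tracking every individual transition.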


Comparing a human brain to a computer is inaccurate, they say. Computers understand information on a micro level. Every tiny detail matters. One errant symbol in one line of code can bring down an entire network. Humans learn by staring at the forest, not the trees. This allows us to avoid complexity, which is important if the goal is to understand a lot of information. It also means we’re going to make mistakes. As Kahn phrases it,

“Understanding structure, or how these elements relate to one another, can emerge from an imperfect encoding of the information. If someone were perfectly able to encode all of the incoming information, they wouldn’t necessarily understand the same kind of grouping of experiences that they do if there’s a little bit of fuzziness to it.”

Recognizing that something is like something else is a major reason we can consume so much data. In cognitive psychology this categorization process is known as chunking: individual pieces of data broken down and grouped together to form a whole. It is a highly efficient process that also leaves us prone to errors.

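The researchers summarized each participant's trade-off between accuracy and simplicity with a single value, called beta. Ten percent of participants had high beta values, meaning they were extra cautious. They didn’t want to make errors. Twenty percent exhibited low beta values, meaning they were highly error-prone. The bulk of the group fell somewhere in between.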

Fans of a recent anti-vaccination film could be said to exhibit a low beta value. Vaccines are one of the most beneficial protective measures ever discovered. You can’t actually estimate how many lives have been saved; that’s not how proactive measures work. You can look at population charts, however. When vaccines were first put into clinical use there were over a billion people on the planet. That’s after 350,000 years of Homo sapiens development. We’re approaching eight billion people just 139 years after Louis Pasteur’s vaccine experiments. (Germ theory, food distribution, antibiotics, and technology also play a role, though vaccines are relevant.)

Vaccination has never been a perfect science. As with every medical intervention, vaccines are complex. Low-beta thinkers eschew complexity for simplicity. Many confuse a few trees for the forest. This is important during a time in which information is being weaponized to promote agendas. Sifting through complexity is exhausting; thus more people take the easiest route.

Not that learning should be too complex. As stated, only one in 10 people overly complicates their thinking. Most people sit in the middle, making mistakes while mostly getting the gist.

The researchers hope that this information will help address psychiatric conditions (such as schizophrenia) in the future. They cite the emerging field of computational psychiatry, “which uses powerful data analysis, machine learning, and artificial intelligence to tease apart the underlying factors behind extreme and unusual behaviors.”

Don’t get frustrated with your mistakes. We all make them. The key is to recognize them and learn from the experience. Mostly, the gist is enough.

—

Stay in touch with Derek on Twitter and Facebook. His next book is “Hero’s Dose: The Case For Psychedelics in Ritual and Therapy.”