Your Brain Isn’t a Computer — It’s a Quantum Field

The irrationality of how we think has long plagued psychology. When someone asks us how we are, we usually respond with “fine” or “good.” But if someone followed up about a specific event — “How did you feel about the big meeting with your boss today?” — suddenly, we refine our “good” or “fine” responses on a spectrum from awful to excellent.

Within a few sentences, we can contradict ourselves: We’re “good” but feel awful about how the meeting went. How, then, could we be “good” overall? Bias, experience, knowledge, and context converge, consciously and unconsciously, to drive every decision we make and every emotion we express. Human behavior is not easy to anticipate, and classical probability theory often fails to predict it.

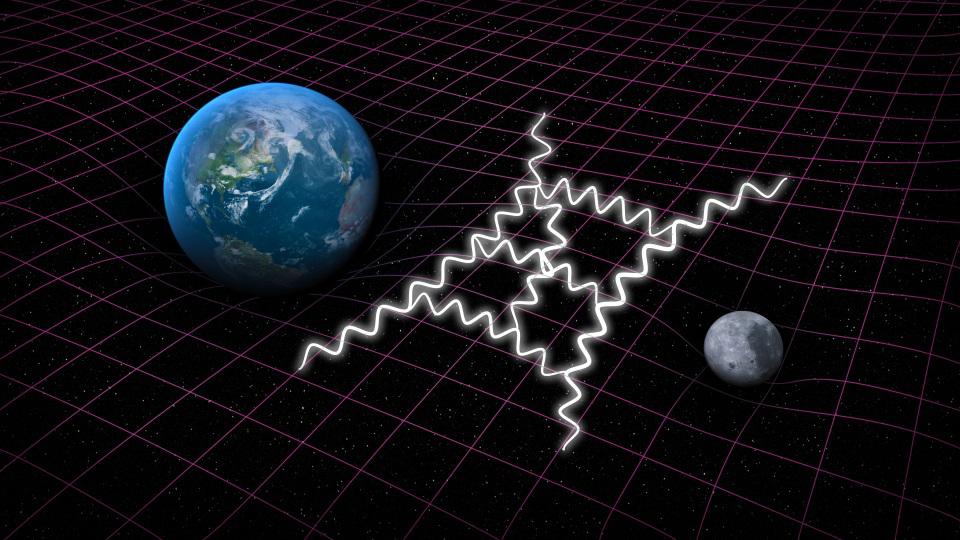

Enter quantum cognition: A team of researchers has determined that while our choices and beliefs often don’t make sense or fit a pattern on a macro level, at a “quantum” level they can be predicted with surprising accuracy. In quantum physics, measuring a particle’s state changes that state. In the same way, the “observer effect” influences how we think about whatever idea we are considering.

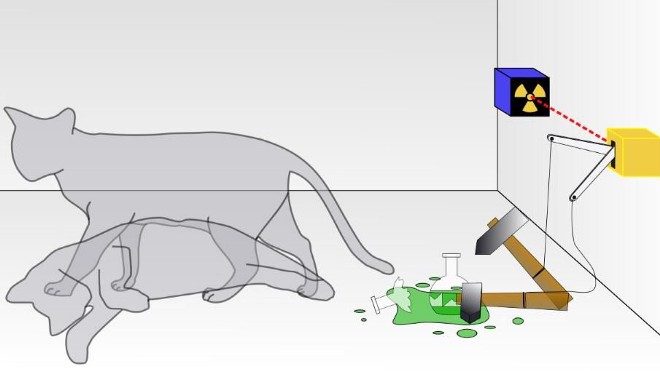

In the example of the meeting, if someone asks, “Did it go well?” we immediately think of ways it did. However, if they ask, “Were you nervous about the meeting?” we might remember that it was pretty scary to give a presentation in front of a group. The other concept quantum cognition borrows is that we cannot hold incompatible ideas in our minds at one time, so an opinion stays unsettled until a question forces it one way or the other. In other words, decision-making and opinion-forming are a lot like Schrödinger’s cat.
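For readers who want to see the arithmetic, the meeting example can be turned into a toy calculation. This is only a minimal sketch of the kind of model quantum cognition uses, not the researchers' actual method: a state of mind is an arrow in a plane, each question is a direction, and answering "yes" projects the arrow onto that direction. The angles and the names `mood`, `went_well`, and `nervous` are invented purely for illustration.

```python
import math

def unit(angle_deg):
    """Return a unit vector in the plane at the given angle (degrees)."""
    a = math.radians(angle_deg)
    return (math.cos(a), math.sin(a))

def project(state, axis):
    """Project `state` onto the line spanned by the unit vector `axis`."""
    dot = state[0] * axis[0] + state[1] * axis[1]
    return (dot * axis[0], dot * axis[1])

def norm_sq(v):
    """Squared length of a vector, read here as a probability."""
    return v[0] ** 2 + v[1] ** 2

def prob_yes_then_yes(state, first, second):
    """Probability of answering yes to `first`, then yes to `second`."""
    return norm_sq(project(project(state, first), second))

# Hypothetical angles, chosen only to make the effect visible.
mood = unit(20)        # state of mind before anyone asks anything
went_well = unit(0)    # "Did it go well?"
nervous = unit(60)     # "Were you nervous about the meeting?"

p_well_then_nervous = prob_yes_then_yes(mood, went_well, nervous)
p_nervous_then_well = prob_yes_then_yes(mood, nervous, went_well)

print("well, then nervous:", round(p_well_then_nervous, 3))
print("nervous, then well:", round(p_nervous_then_well, 3))
```

The two probabilities differ because the first question moves the arrow before the second one is asked. In classical probability, the chance of two yeses would be the same in either order.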

The quantum-cognition theory opens the fields of psychology and neuroscience to understanding the mind not as a linear computer, but rather an elegant universe. But the notion that human thought and existence are richly paradoxical has been around for centuries. Moreover, the more scientists and scholars explore the irrational rationality of our minds, the closer science circles back to the confounding logic at the heart of every religion. Buddhism, for instance, is premised on riddles such as, “Peace comes from within. Do not seek it without.” And, in Christianity, the paradox that Christ was simultaneously both a flesh-and-blood man and the Son of God is the central metaphor of the faith.

For centuries, religious texts have explored the idea that reality breaks down once we get past our surface perceptions of it, and yet it is through these ambiguities that we understand more about ourselves and our world. In the Old Testament, the embattled Job pleads with God to explain why he has endured so much suffering. God quizzically replies, “Where were you when I laid the foundations of the earth?” (Job 38:4). The question seems nonsensical: Why would God ask a person in his creation where he was when God himself created the world? But this paradox is little different from the one in Einstein’s famous challenge to Heisenberg’s uncertainty principle: “God does not play dice with the universe.” As Stephen Hawking counters, “Even God is bound by the uncertainty principle,” because if all outcomes were deterministic, then God would not be God. His being the universe’s “inveterate gambler” is the unpredictable certainty that creates him.

The mind, then, according to quantum cognition, “gambles” with our “uncertain” reason, feelings, and biases to produce competing thoughts, ideas, and opinions. We then synthesize those competing options to relate to our relatively “certain” realities. By examining our minds at a quantum level, we change them, and by changing them, we change the reality that shapes them.

Changing the metaphors we use to understand the world, especially the quantum metaphor, can yield amazing insights, as experimental philosopher Jonathon Keats explains.

—

Daphne Muller is a New York City-based writer who has written for Salon, Ms. Magazine, The Huffington Post, and reviewed books for ELLE and Publishers Weekly. Most recently, she completed a novel and screenplay. You can follow her on Instagram @daphonay and on Twitter @DaphneEMuller.

Image courtesy of Shutterstock