Technomorphic Mental Tools

We see with our ideas. And new labels for ideas, like “technomorphic,” can alter the rules of our thinking about our thinking.

1. Tech — that practical magic — also provides spellbinding metaphors, which transfer traits by “technomorphism,” a kind of inverse anthropomorphism.

2. We’ve long had unlabelled technomorphic habits: The universe runs like clockwork. Economies are like delicate watches. And evolution, that blind watchmaker, creates “self-winding” machines.

3. Thomas Hobbes believed minds were also mechanisms, and thinking “is but reckoning … adding … subtracting.” And Michel de Montaigne said we’re all “furnished … with similar tools and instruments … for thought.”

4. But our mental tools aren’t so similar. Their hardware might be, but their upgradable software varies greatly. Unharvested wisdom lies in casting minds as programmable computers — a now easy technomorphism, unavailable to Montaigne.

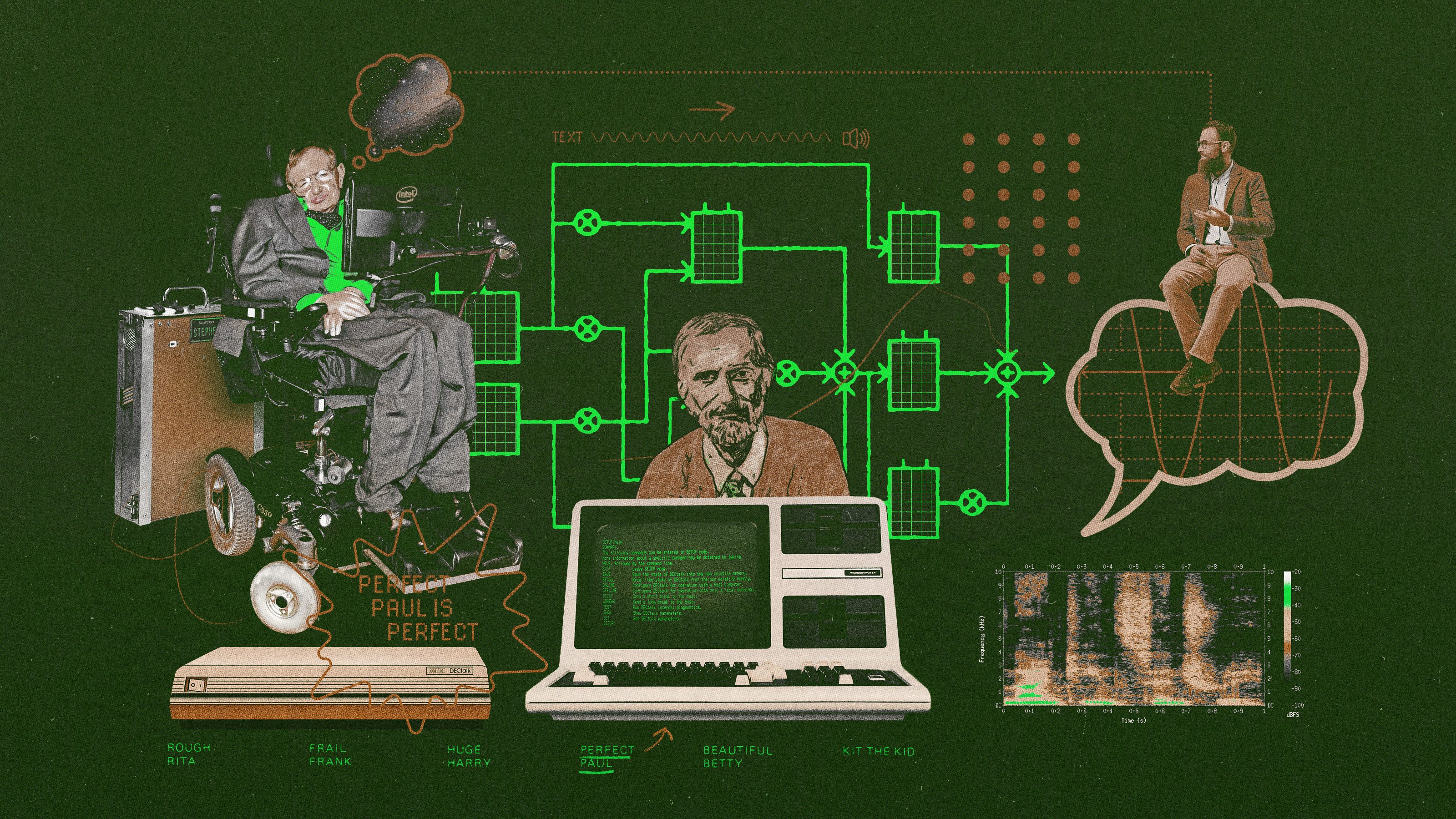

5. Technologist Christopher Nguyen says the cutting-edge ideas and “vocabulary of machine learning” can better illuminate our own mental instruments. E.g., a five-layer neural network might illustrate how “human imagination” works.

6. Nguyen reminds us engineering can precede its science. Tinkering inventors can build machines before anyone has the ideas needed to explain why they work; e.g., steam engines preceded thermodynamics and entropy. Nguyen hopes AI is at the steam engine stage, offering “profound … scientific clues,” perhaps building a full head of steam for entirely new trains of thought.

7. Meanwhile, simpler mind-as-computer metaphors go underused. Arguing against “The Blank Slate” mental model, Steven Pinker says we have a common “battery of emotions, drives, and faculties for reasoning” that can work like “setting a dial [or] flipping a switch.” But those technomorphic elements should also include computer-like logic scripts (if X, then do Y) and algorithms. Parts of minds are blank slates awaiting social scripts that will encode acquired rules.

8. But rules can get unruly. Unlike physical forces, rules can interact unpredictably. And rules can be usefully un-universal.

9. Stephen Wolfram’s “Rule 30” shows that unpredictable “aperiodic, chaotic behavior” can result from simple rule-driven interactions. Capturing such behavior needs tools beyond geometry and algebra: rules and algorithms generate richer patterns.

10. Nguyen notes the importance of un-universal rules (knowing “when the rules don’t apply”). Rules + their conditions + exception handling = a great model for our kludgey, loosely organized bag of mental tools, which include constructively contradictory rules (see maxims vs. maximization) and many System-1-triggered scripts that encode personal and cultural habits (see individualism = software).

11. Obviously, technomorphism can also mislead. Human memory isn’t like hardware memory, or raw video. It’s incomplete and unreliable. Oliver Sacks notes “no mechanism in the mind or the brain” ensures “the truth … of … recollections.” And here’s Daniel Kahneman (the “most influential psychologist” alive) confusing happiness with “hedonometer” utility/pleasure readings.
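Point 9’s claim, that simple rules can yield chaotic behavior, is easy to see in a few lines of code. Below is a minimal sketch of Rule 30 as an elementary cellular automaton: each cell’s next state depends only on itself and its two neighbors, looked up in the 8 bits of the number 30. It assumes a finite row whose off-edge cells count as 0 (a simplification of the unbounded row in Wolfram’s pictures), and the function names `step`, `run`, and `render` are hypothetical.

```python
"""Minimal sketch of Wolfram's Rule 30, an elementary cellular automaton."""

RULE = 30  # the rule number's 8 bits give the new state for each 3-cell neighborhood


def step(cells: list[int], rule: int = RULE) -> list[int]:
    """Apply one update; cells past either edge are treated as 0 (a simplification)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        center = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        # Read the neighborhood as a 3-bit number (0..7) and look up that bit of the rule.
        index = (left << 2) | (center << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(width: int, steps: int, rule: int = RULE) -> list[list[int]]:
    """Start from a single live cell in the middle and return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


def render(history: list[list[int]]) -> str:
    """Draw live cells as '#' and dead cells as '.', one generation per line."""
    return "\n".join("".join("#" if c else "." for c in row) for row in history)


if __name__ == "__main__":
    print(render(run(width=31, steps=15)))
```

Running it prints a growing triangle: one side settles into regular stripes while the other looks random, even though every cell obeys the same one-line lookup.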
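Point 10’s formula, rules + their conditions + exception handling, can likewise be sketched as a toy rule engine. This is a hypothetical illustration, not Nguyen’s model: the `Rule` class, the `decide` function, and the punctuality example are invented here. Each rule pairs a condition (“if X”) with an action (“then do Y”) and a list of narrower exceptions that override it, and a rule whose condition needs a missing fact is skipped rather than crashing the whole system.

```python
"""Toy rule engine: rules with conditions, overriding exceptions, and error handling."""
from dataclasses import dataclass, field
from typing import Callable

Situation = dict[str, bool]


@dataclass
class Rule:
    name: str
    applies: Callable[[Situation], bool]  # the rule's condition ("if X")
    action: str  # what to do ("then do Y")
    exceptions: list["Rule"] = field(default_factory=list)  # narrower rules that override this one


def decide(rules: list[Rule], situation: Situation, default: str = "think it over") -> str:
    """Return the action of the first applicable rule, letting its exceptions override it."""
    for rule in rules:
        try:
            matched = rule.applies(situation)
        except KeyError:
            # The situation lacks a fact this rule needs: skip the rule instead of crashing.
            continue
        if matched:
            override = decide(rule.exceptions, situation, default="")
            return override or rule.action
    return default


# Hypothetical example: a habit ("be punctual") with one exception ("emergencies first").
help_first = Rule("emergencies first", lambda s: s["emergency"], "help first")
punctual = Rule("be punctual", lambda s: s["meeting"], "arrive on time", [help_first])

if __name__ == "__main__":
    print(decide([punctual], {"meeting": True, "emergency": False}))  # arrive on time
    print(decide([punctual], {"meeting": True, "emergency": True}))   # help first
    print(decide([punctual], {"emergency": True}))                    # think it over
```

Here list order sets priority, which is one simple way to let contradictory maxims coexist; real minds presumably resolve such conflicts far less tidily.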

Deep learning awaits wise use of technomorphic (and algomorphic) ideas.

Illustration by Julia Suits, The New Yorker Cartoonist & author of The Extraordinary Catalog of Peculiar Inventions.