Richard Dawkins has made a career out of hypothesizing and articulating ideas that move the world forward, many of which could be called “ahead of their time.” Here he tells us that we might be living at the dawn not just of artificial intelligence but of a silicon civilization that will look back on this period as the dawn of its kind. Such beings could one day, Dawkins suggests, study us the same way that we study other beings that once ruled the earth. Sound crazy? Open your mind and think about it. Dawkins isn’t that far off from a potential reality on this planet. Richard Dawkins’ new book is Science in the Soul: Selected Writings of a Passionate Rationalist.

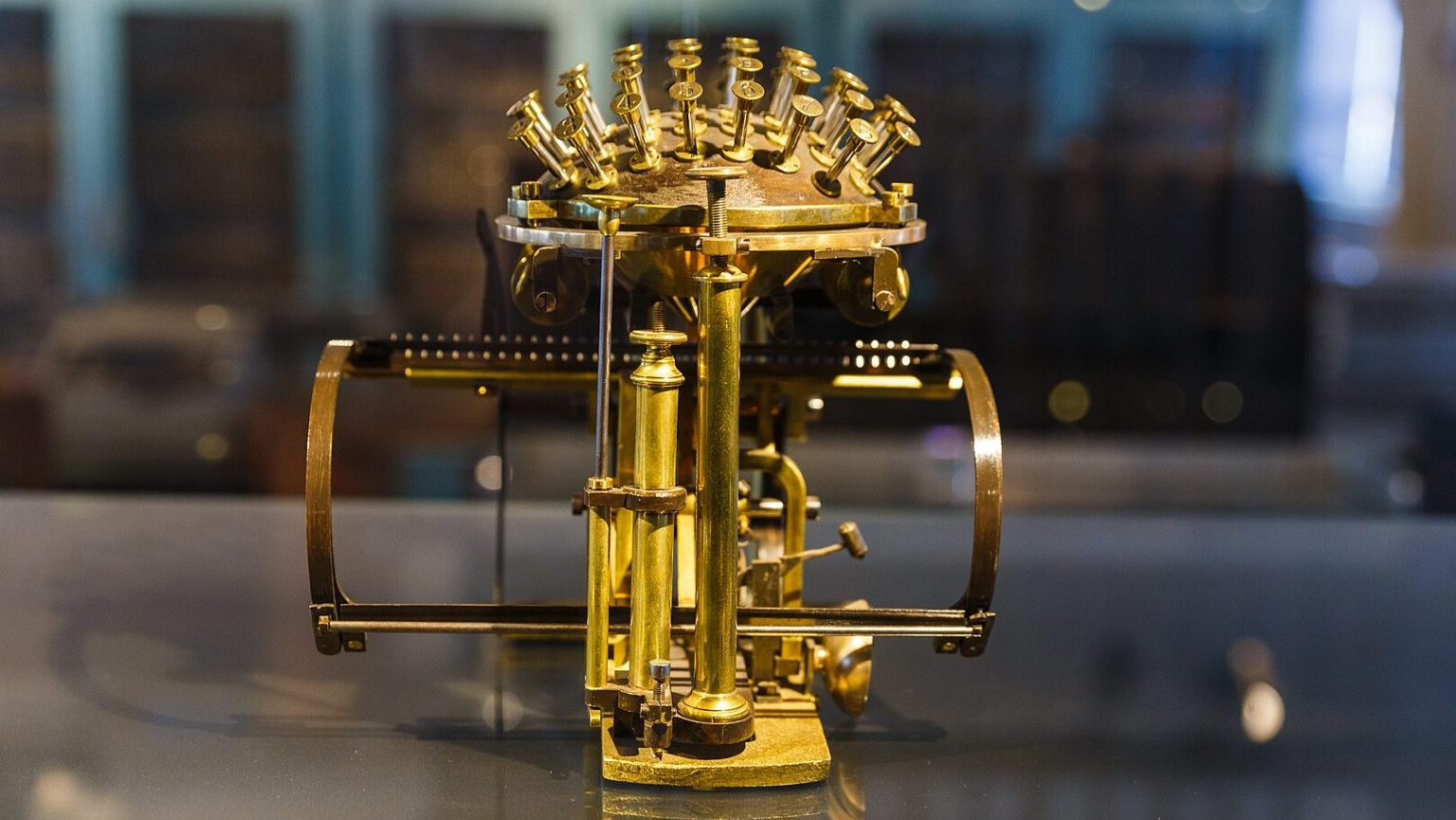

Richard Dawkins: When we come to artificial intelligence and the possibility of its becoming conscious, we reach a profound philosophical difficulty. I am a philosophical naturalist. I am committed to the view that there’s nothing in our brains that violates the laws of physics, there’s nothing that could not in principle be reproduced in technology. It hasn’t been done yet, we’re probably quite a long way away from it, but I see no reason why in the future we shouldn’t reach the point where a human-made robot is capable of consciousness and of feeling pain. We can feel pain, why shouldn’t they?

And this is profoundly disturbing because it kind of goes against the grain to think that a machine made of metal and silicon chips could feel pain, but I don’t see why they would not. And so this moral consideration of how to treat artificially intelligent robots will arise in the future, and it’s a problem which philosophers and moral philosophers are already talking about.

Once again, I’m committed to the view that this is possible. I’m committed to the view that anything that a human brain can do can be replicated in silicon.

And so I’m sympathetic to the misgivings that have been expressed by highly respected figures like Elon Musk and Stephen Hawking that, on the precautionary principle, we should worry about a takeover, perhaps even by robots of our own creation, especially if they reproduce themselves and potentially even evolve by reproduction and don’t need us anymore.

This is a science-fiction speculation at the moment, but I think philosophically I’m committed to the view that it is possible, and like any major advance we need to apply the precautionary principle and ask ourselves what the consequences might be.

It could be said that the sum, not of human happiness but of sentient-being happiness, might be improved. They might do a better job of running the world than we are, certainly than we are at present, and so perhaps it might not be a bad thing if we went extinct.

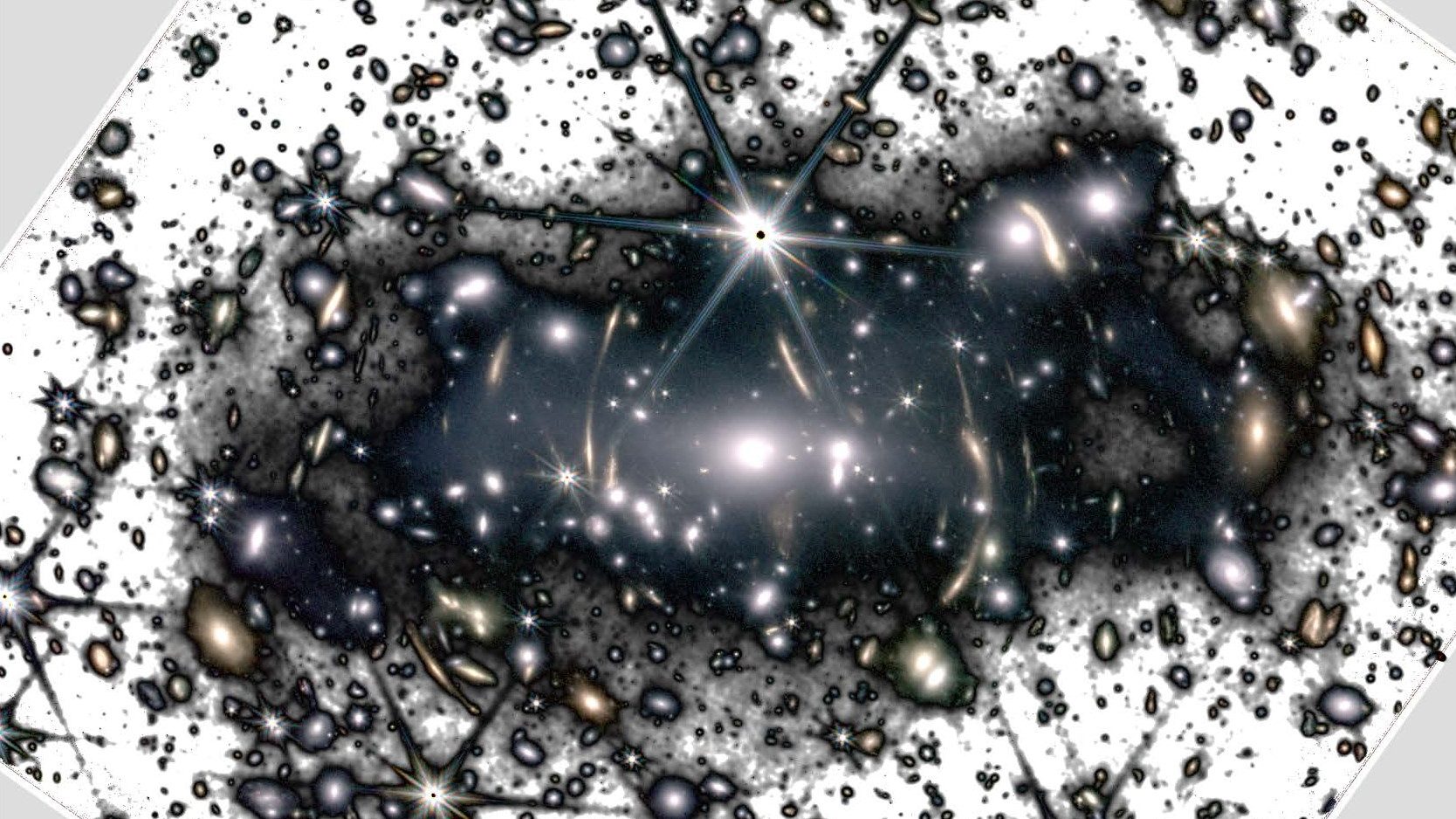

And our civilization, the memory of Shakespeare and Beethoven and Michelangelo, persisted in silicon rather than in brains and our form of life. And one could foresee a future time when silicon beings look back on a dawn age when the earth was peopled by soft, squishy, watery organic beings. And who knows, that might be better, but we’re really in science fiction territory now.