GARY MARCUS: A lot of people are really scared about AI. I think they're scared about the wrong things. Most people who talk about AI risk are worried about AI taking over the universe. There's an example from Nick Bostrom that sticks in a lot of people's heads. This is an AI system that is supposed to be rewarded for making paperclips. And this is all well and good for a little while, and then it runs out of the metals that it needs. Eventually it starts turning people into paperclips because there's a little bit of metal in people and there's no more metal to get. So this is the kind of sorcerer's-apprentice terror that I think a lot of people are living in. I don't think it's a realistic terror, for a couple of reasons. First of all, it's certainly not realistic right now. We don't have machines that are resourceful enough to know how to make paperclips unless you carefully show them every detail of the process. They're not innovators right now. So this is a long way away, if ever. It also assumes that the machines that could do this are so dumb that they don't understand anything else. But that doesn't actually make sense. Like, if you were smart enough not only to want to collect metal from human beings but to chase the human beings down, then you'd actually have a lot of common sense, a lot of understanding of the world. If you had some common sense and a basic law that says don't do harm to humans, which Asimov thought of in the '40s, then I think you could actually preclude these kinds of things. So we need a little bit of legislation. We need a lot of common sense in the machines and some basic values in the machines. But once we do that, I think we'll be OK. And I don't think we're going to get to machines that are resourceful enough to even contemplate these kinds of scenarios until we have all that stuff built in. So I don't think that's really going to happen. 
And the other side of this is that machines have never shown any interest in doing anything like that. Think about the game of Go; that's a game of taking territory. In 1970 no machine could play Go at all. Now machines can play Go better than the best human. So they're really good at taking territory on the board. And in that time, the increase in their desire to take actual territory on the actual planet is zero. That hasn't changed at all. They're just not interested in us. And so I think these things are just science fiction fantasies. On the other hand, I think there's something to be worried about, which is that current AI is lousy. And thinking about people in the White House, the issue is not how bright somebody is, it's how much power they have. So you could be extremely bright and use your power wisely, or not so bright but have a lot of power and not use it wisely. Right now we have a lot of AI that's playing an increasingly important role in our lives, but it's not necessarily doing the careful multi-step reasoning that we want it to do. That's a problem. It means, for example, that the systems we have now are very subject to bias. You just put statistics in, and if you're not careful about the statistics, you get all kinds of garbage. You do Google searches for, like, "grandmother and child," and you get mostly examples of white people, because there's no system there monitoring the searches and trying to make the results representative of the world's population. They're just taking what we call a "convenience sample." And it turns out there are more labeled pictures of grandmothers and grandchildren among white people, because more white people use the software or something like that. I'm slightly making up the example, but I think you'll find examples like that. These systems have no awareness of the general properties of the world. They just use statistics. And yet they're in a position, for example, to do job interviews. 
Amazon tried this for, like, four years and finally gave up and decided they couldn't do it well. But people are more and more saying, well, let's get the data. Let's get deep learning. Let's get machine learning. And we'll have it solve all our problems. Well, the systems we have now are not sophisticated enough to do that. And so trusting a system that's basically a glorified calculator to make decisions about who should go to jail or who should get a job, things like that, is at best risky and probably foolish.

Dr. Gary Marcus is the director of the NYU Infant Language Learning Center, and a professor of psychology at New York University. He is the author of "The Birth of[…]

What most people worry about when it comes to artificial intelligence likely comes from science-fiction fantasy.


Gary Marcus is the author of Rebooting AI: Building Artificial Intelligence We Can Trust.

Related

Six visionary science fiction authors on the social impact of their work.

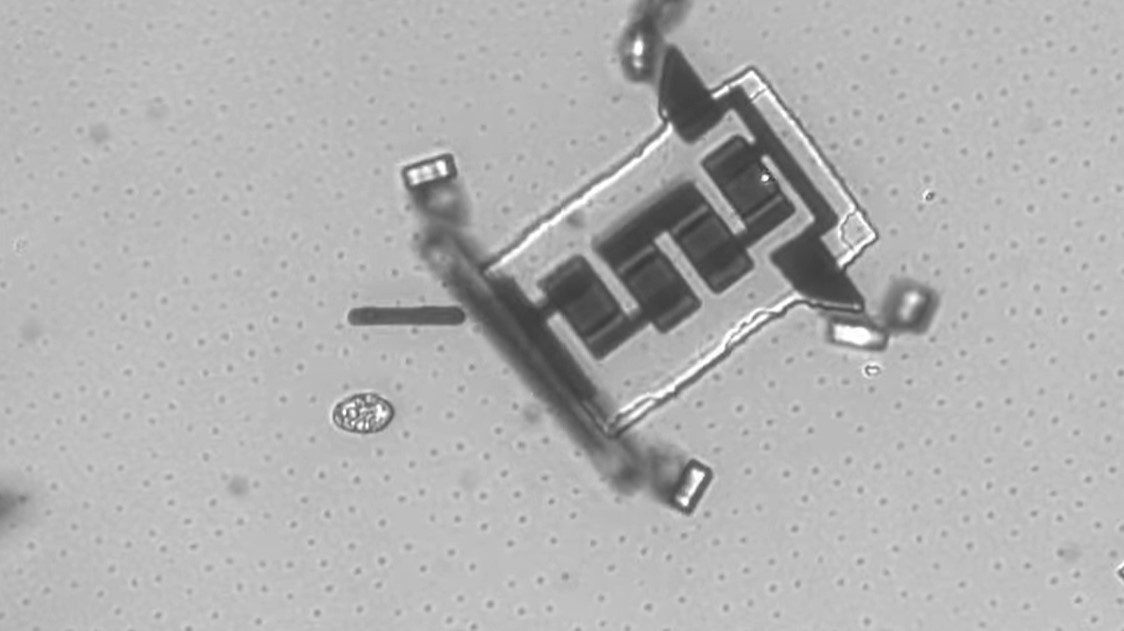

What would it take to create a truly intelligent microbot, one that can operate independently?

It’s not enough just to stay current and competitive with AI — you’ll also need to build a long-term strategy.

"Stargate" could be used to train the world’s most powerful AIs.

Making up false information is one of the biggest problems with AI, but there are no silver-bullet solutions.