How emotional intelligence is the best defense against GenAI threats

Imagine receiving a distressed call from your grandson, his voice filled with fear as he describes a horrible car accident. That’s what happened to Jane, a 75-year-old senior citizen of Regina, Canada.

Pressed by urgency, she was at the bank in a few hours, collecting bail money for her grandson. Only later did she discover she was a victim of an AI-generated scam.

By 2030, GenAI is expected to automate 70% of global business operations, leaving leaders excited and fascinated. But there is a darker side to GenAI: it can be weaponized to deceive people.

Jane’s story is not an isolated one. Emotionally manipulative scams also pose a grave threat to businesses, exposing them to financial and reputational risks.

Recent data indicates that one in 10 executives has already encountered a deepfake threat – AI-generated media trained on real video and audio material – while 25% of leaders remain unfamiliar with its risks.

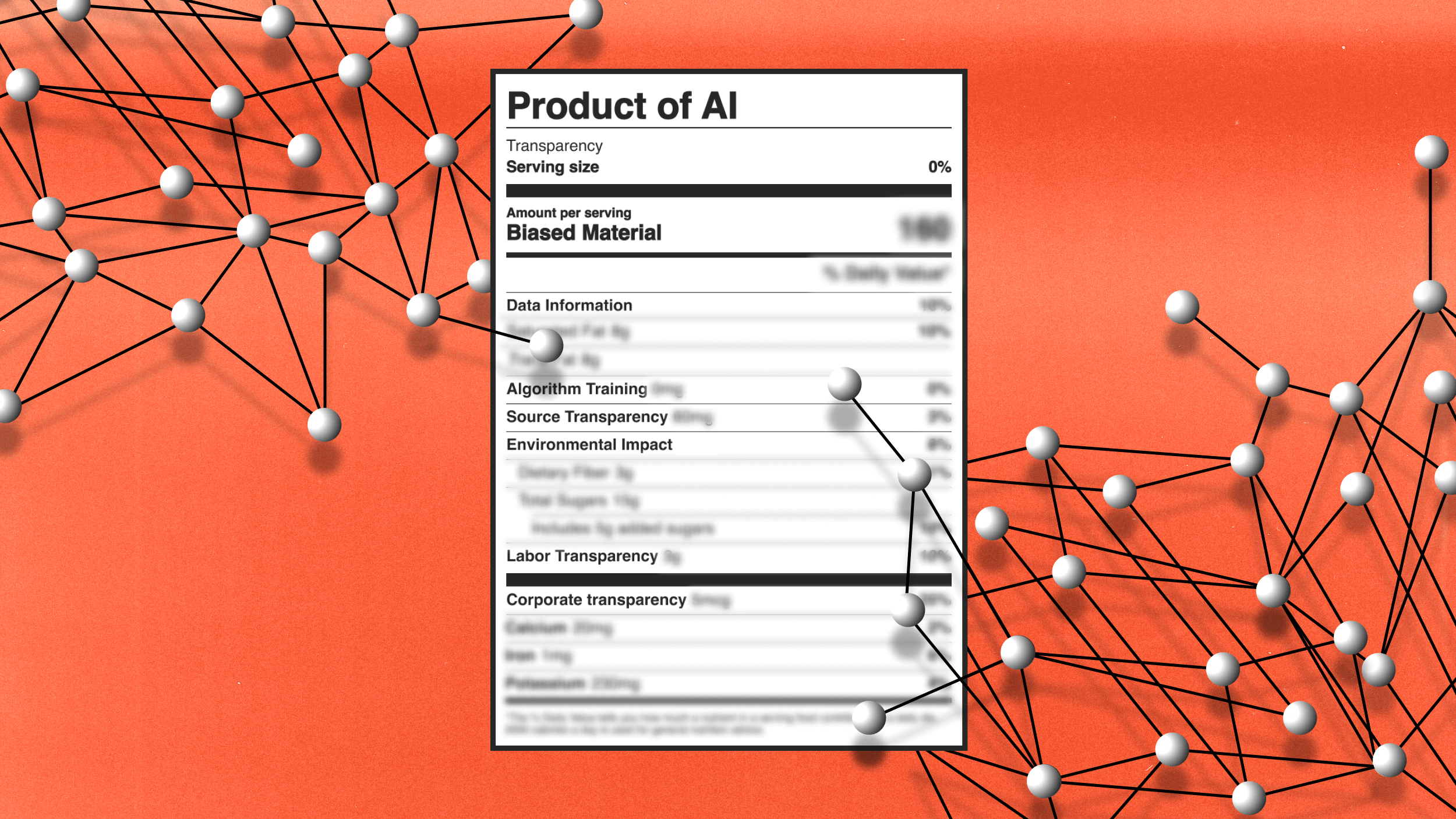

Detecting AI-generated content is still a struggle. To verify digital content, we first turn to technology for help. While many tools boast their ability to detect AI-generated content, their accuracy is inconsistent.

With tools available that can bypass detection, for example by removing AI watermarks from images, we are still far from reliable automated labelling of AI-generated content.

And although some tools, such as Intel’s FakeCatcher, look promising, they have yet to be implemented at scale. While organizations play catch-up with GenAI, human scepticism remains our best safeguard.

Emotional intelligence – recognizing, understanding and managing one’s emotions – is an asset in identifying AI-enabled manipulation.

GenAI presents three key risks that emotional intelligence can help solve:

- GenAI’s hyper-realistic output becomes a scammer’s tool for exploiting emotional responses, such as urgency or compassion.

- The ethical pitfalls of GenAI are often unintentional, as employees may default to AI recommendations or fall into automation bias, prioritizing efficiency over ethics.

- From job anxieties to ethical indifference, employees can make impulsive decisions under the emotional strain of GenAI.

As GenAI threats advance, emotional intelligence is key

To manage the impact of GenAI threats, individuals must recognize the emotions at play, reflect on the implications and respond with informed, empathetic action. Here are three ways to do this.

1. Help teams recognize when emotions are weaponized

Research suggests that human behaviour causes 74% of data breaches – and it’s not hard to see why.

GenAI can personalize scams just by analyzing employee data or company-specific content. Threat actors can even leverage your digital footprint to create a deepfake video of you.

All it takes is less than eight minutes and a negligible cost. Against this backdrop, the first step to mitigating GenAI threats is education.

Employees need to understand how GenAI can leverage emotions to trump rational decisions. A senior manager at a cybersecurity company shared an eye-opening experience with us.

Recently, he received a WhatsApp message and voice recording from a scammer impersonating his CEO, discussing legitimate details about an urgent business deal.

The emotional pressure from a higher authority initially prompted a reaction. However, the manager’s awareness of standard organizational communication helped him spot the red flags: informal channels should not be used for sensitive data and follow-up calls.

Leaders must invest in training that enhances emotional intelligence. Workshops focusing on identifying emotional triggers, combined with simulations – focused on creative thinking and flexible strategies beyond strict rule-following – help employees spot manipulation sooner.

2. Make reflection your team’s default mode

Individuals must also consider how their actions, driven by emotions, can lead to unintended consequences. Reflection allows us to consider how our emotions can unconsciously affect our behaviour.

A recent example of the absence of moral oversight involves a German magazine that published an AI-generated interview with Formula 1 legend Michael Schumacher without his consent. The interview included fake quotes discussing his medical condition and family life since 2013.

Driven by the excitement of publishing a “scoop,” the journalists failed to reflect on its emotional impact on Schumacher and his family. This resulted in significant reputational damage for the magazine.

Critical reflection at work encourages us to consider different perspectives and factors influencing our choices.

Leaders can facilitate this by introducing group-based reflection exercises.

One good example is the “fly on the wall” technique, where a team member presents a GenAI output and then silently observes as others discuss its ethical considerations and biases.

Finally, insights gained from the discussion are shared. Upon such reflection, familiar situations may take on new meanings, reveal underlying assumptions and warn about AI reliance.

3. Turn quick reactions into thoughtful responses

The final step is to translate awareness and reflection into deliberate actions. Even when considering risks, “AI influence” can override good judgment. Give your employees the authority to set boundaries to regain control of the decision-making processes.

Encouraging them to communicate discomfort or delay action until verification, for example by saying, “I need written confirmation before proceeding,” can slow down manipulative momentum.

Such responses are made possible through a culture of open dialogue, where employees are encouraged to question instructions or voice concerns without fear.

A recent example involved a Ferrari executive who received a call from the CEO, Benedetto Vigna. At first, it seemed plausible. But as the conversation turned confidential, the executive grew doubtful.

Unwilling to take a risk, he asked a question only the real CEO could answer. The caller abruptly hung up, exposing the scam.

We are currently witnessing an increase in the likelihood of “emotional entanglement” through over-reliance, anthropomorphization and blurring boundaries between fiction and reality.

Falling prey to our emotions only makes us human. However, it is also possible to regulate them so that we can make better decisions. Ultimately, an automated world can benefit from the sentience, human touch and sensitivity that these strategies bring.

Republished with permission of World Economic Forum. Read the original article.