AI is turning thoughts into speech. Should we be concerned?

Epileptology unit, Nice, France. Long-term EEG over three days for a check-up of partial epilepsy. (Photo by: BSIP/UIG via Getty Images)

- Recent research in epilepsy patients has provided a breakthrough in AI-enabled speech recognition technology.

- Researchers believe such an application will soon translate brain waves into speech.

- The moral dangers of AI, especially concerning privacy, continue to be an issue.

Tara Thomas thought her two-year-old daughter was inventing voices, perhaps the result of nightmares spilling into daytime. Thomas’s disbelief ended when she heard pornography being played through the Nest Cam in her daughter’s room, a device that she had been using as a monitor. Someone had hacked into the intercom feature in the software.

This is not an isolated incident. Tech companies could make devices more secure, but that would require more participation by the user (aka “friction”), which would result in lower adoption. As marketing expert Geoffrey Moore notes in his bestselling classic, Crossing the Chasm, exposing your product to the early majority requires a “whole product” approach that early adopters do not require. Dominating the market requires as easy an installation process as possible, such as a one-click setup of the Internet of Things.

To accomplish this, Big Tech sacrifices security for convenience. Consumers are playing right along. As the article above notes, Nest offers two-factor authentication, yet customers often ignore it. The ability to unbox an item, plug it in, and immediately tell it what to do is a feature in the eyes of the consumer.

Then suddenly your infant is listening to hardcore porn and you wonder where your privacy went.

Such problems, which will continue to increase until regulations force tech companies to install more serious security measures, are the building blocks of dystopian novels and movies. Unfortunately, they’re creating public relations problems for beneficial applications of technology.

Consider AI. There’s a race to enter this market, but in the excitement of creating machines smarter than us, we have to wonder what sorts of compromises are being made.

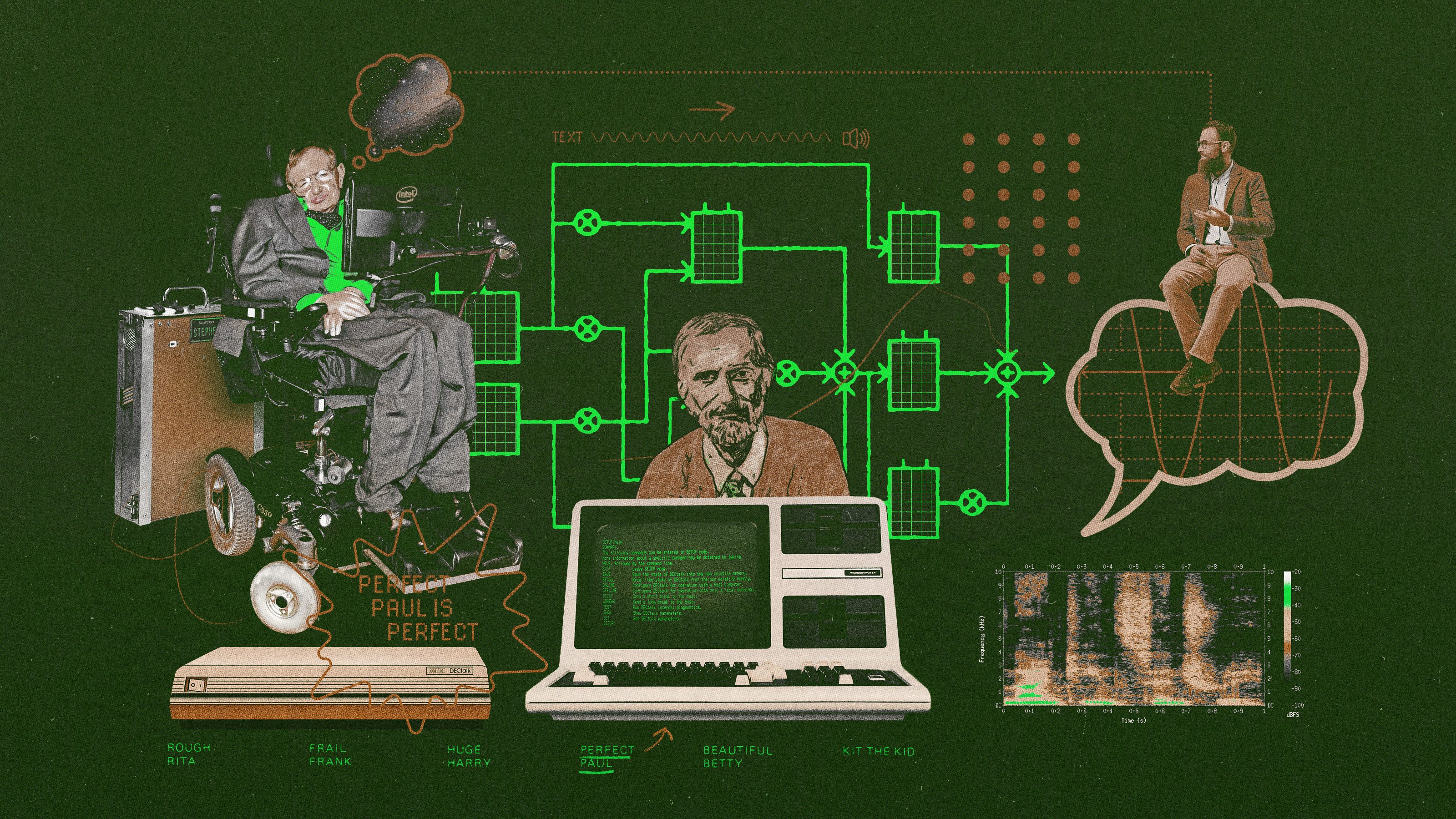

One fascinating use case involves a speech-encoding device. The research, published in a recent issue of Nature, details an AI-enabled technology that translates brain patterns into speech. I highly recommend clicking the link above to listen to a 15-second clip of two examples. Perfect? Not quite, but frighteningly accurate.

Until now, AI has been able to identify and translate only monosyllabic words from brain activity. This recent leap forward, powered by electrodes attached to the skulls of participants, is producing entire sentences. While five epilepsy patients read sentences out loud, researchers recorded their neural activity, combining the data with previous studies that focused on how the tongue, lips, jaw, and larynx create sound.

Enter AI, which identified the specific brain signals producing vocal tract movements. Seventy percent of words in the 101 sentences recorded were understandable. While the ability to translate brain waves into perfect speech is years away, Chethan Pandarinath and Yahia Ali, both at Emory University, co-authored a commentary on the study, noting:

“Ultimately, ‘biomimetic’ approaches that mirror normal motor function might have a key role in replicating the high-speed, high-accuracy communication typical of natural speech.”

Currently, this technology cannot be applied to people who cannot speak at all, though the authors hope this breakthrough will be an entry point for such an application. The ability to communicate with others would be a boon for such patients’ mental health and emotional well-being. Given the rapid advances in this technology over the last decade, researchers are hopeful that such an application is around the corner.

That is good news amid a suite of devices that continues to draw scrutiny. Telling your monitor to turn on the lights instead of standing up to flick a switch hardly seems worthwhile considering the potential downsides of privacy invasion.

An automated task is not necessarily a better option. Sure, self-driving cars might reduce accidents, but are the ensuing attentional deficits worth the cost? If you never observe where you’re going, how do you even know where you are in space when you arrive?

Advocates like to make moral arguments, such as the idea that AI-enabled devices should receive the same ethical considerations as animals. What about the ethics of one particular animal: humans? The mystique of an encyclopedic machine blinds us to the troubles it will inflict on Humans 1.0. As with privacy issues on Facebook, billions will likely be entrenched in the technology before we even recognize the issue at hand.

AI has a bright future ahead, as the Nature study highlights. We just need to ensure the consumer fascination with bright and shiny data-collecting toys doesn’t overwhelm our moral sensibilities in using these technologies soundly. So far, we’re fighting an uphill battle.

—

Stay in touch with Derek on Twitter and Facebook.