Can a robot guess what you’re thinking?

What on earth are you thinking? Other people think they know, and many could make a pretty decent guess, simply from observing your behavior for a short while.

We do this almost automatically, following convoluted cognitive trails with relative ease, like understanding that Zoe is convinced Yvonne believes Xavier ate the last avocado, although he didn’t. Or how Wendy is pretending to ignore Victoria because she thinks Ursula intends to tell Terry about their affair.

Thinking about what other people are thinking—also known as “mentalizing,” “theory of mind,” or “folk psychology”—allows us to navigate complex social worlds and conceive of others’ feelings, desires, beliefs, motivations, and knowledge.

It’s a very human behavior—arguably one of the fundamentals that makes us us. But could a robot do it? Could C-3PO or HAL or your smartphone watch your expressions and intuit that you ate the avocado or had an affair?

A recent artificial intelligence study claims to have developed a neural network—a computer program modeled on the brain and its connections—that can make decisions based not just on what it sees but on what another entity within the computer can or cannot see.

In other words, the researchers created an AI that can see things from another’s perspective. And they were inspired by another species that may have theory of mind: chimps.

Chimpanzees live in troops with strict hierarchies of power, entitling the dominant male (and it always seems to be a male) to the best food and mates. But it’s not easy being top dog—or chimp. The dominant male must act tactically to maintain his position by jostling and hooting, forming alliances, grooming others, and sharing the best scraps of colobus monkey meat.

Implicit in all this politicking is a certain amount of cognitive perspective-taking, perhaps even a form of mentalizing. And subordinate chimps might use this ability to their advantage.

In 2000, primatologist Brian Hare and colleagues garnered experimental evidence suggesting that subordinate chimps know when a dominant male is not looking at a food source and when they can sneak in for a cheeky bite.

Now computer scientists at the University of Tartu in Estonia and the Humboldt University of Berlin claim to have developed an artificially intelligent chimpanzee-like computer program that behaves in the same way.

The sneaky subordinate chimp setup involved an arena containing one banana and two chimps. The dominant chimp didn’t do much beyond sit around, and the subordinate had a neural network that tried to learn to make the best decisions (eat the food while avoiding a beating from the dominant chimp). The subordinate knew only three things: where the dominant was, where the food was, and in which direction the dominant was facing.

In addition, the subordinate chimp could perceive the world in one of two ways: egocentrically or allocentrically. Allocentric chimps had a bird’s-eye view of proceedings, seeing everything at a remove, including themselves. Egocentric chimps, on the other hand, saw the world relative to their own position.
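
To make the distinction concrete, here is a minimal Python sketch of the two viewpoints. It assumes a simple grid world with integer coordinates; the class names, field names, and the `to_egocentric` helper are hypothetical illustrations, not the encoding used in the study.

```python
from typing import NamedTuple, Tuple

Point = Tuple[int, int]


class Allocentric(NamedTuple):
    """Bird's-eye view: every position is an absolute grid coordinate."""
    subordinate: Point      # the observer can see itself on the map
    dominant: Point
    food: Point
    dominant_facing: Point  # unit step, e.g. (1, 0) for "east" (assumed convention)


class Egocentric(NamedTuple):
    """Self-centered view: positions are offsets from the observer."""
    dominant_offset: Point
    food_offset: Point
    dominant_facing: Point


def to_egocentric(obs: Allocentric) -> Egocentric:
    """Re-express an allocentric observation relative to the subordinate."""
    sx, sy = obs.subordinate
    return Egocentric(
        dominant_offset=(obs.dominant[0] - sx, obs.dominant[1] - sy),
        food_offset=(obs.food[0] - sx, obs.food[1] - sy),
        dominant_facing=obs.dominant_facing,
    )


# Hypothetical scene: subordinate at (2, 3), dominant at (5, 3), food at (4, 6).
scene = Allocentric(subordinate=(2, 3), dominant=(5, 3), food=(4, 6),
                    dominant_facing=(-1, 0))
ego = to_egocentric(scene)
```

In this sketch the egocentric chimp never receives its own coordinates; it only knows how far away the dominant and the food are from where it stands.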

In the simplest experimental world—where the dominant chimp and the food always stayed in the same place—subordinate chimps behaved optimally, regardless of whether they were allocentric or egocentric. That is, they ate the food when the dominant wasn’t looking and avoided a beating when it was.
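
Written out by hand, "optimal" here amounts to a very short rule. The Python sketch below is a hand-coded stand-in for what the network had to learn from experience, not the researchers' code. It rests on a loudly simplifying assumption: the dominant "sees" anything in the half of the arena in front of it. The function names and the two action labels are hypothetical.

```python
from typing import Tuple

Point = Tuple[int, int]


def dominant_sees_food(dominant: Point, facing: Point, food: Point) -> bool:
    """Assumed visibility rule: the food is visible if it lies ahead of the dominant.

    "Ahead" means the vector from the dominant to the food has a positive
    dot product with the direction the dominant is facing.
    """
    to_food = (food[0] - dominant[0], food[1] - dominant[1])
    dot = facing[0] * to_food[0] + facing[1] * to_food[1]
    return dot > 0


def best_action(dominant: Point, facing: Point, food: Point) -> str:
    """Eat only when the dominant is not looking at the food."""
    if dominant_sees_food(dominant, facing, food):
        return "avoid"  # going for the banana would earn a beating
    return "eat"        # the coast is clear


# Dominant at the origin, food off to the east.
watched = best_action(dominant=(0, 0), facing=(1, 0), food=(3, 1))
unwatched = best_action(dominant=(0, 0), facing=(-1, 0), food=(3, 1))
```

Notice that the rule needs only the three things the subordinate knew: where the dominant was, where the food was, and which way the dominant was facing.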

When things became a little more complicated and the food and/or dominant chimp turned up in random places, the allocentric chimps edged closer to behaving optimally, while the egocentric chimps always performed suboptimally—languishing away, hungry or bruised.

But the way the AI simulation was set up meant the egocentric chimp had to process 37 percent more information than the allocentric one and, at the same time, was constrained by its egocentric position to perceive less about the world. Perhaps the lesson is: Omniscience makes life easier.

The computer scientists admit that their computer experiment “is a very simplified version of perspective-taking.” How the AI-chimp perceives and processes information from its simplified digital world doesn’t come close to capturing the complexity of real chimps eyeing up real bananas in the real world.

It’s also unlikely that the AI-chimp’s abilities would generalize beyond pilfering food to other situations requiring perspective-taking, such as building alliances or knowing when it’s safe to sneak off into the virtual bushes for romantic escapades.

So, might artificially intelligent computers and robots one day develop theory of mind? The clue is in the term: They’d surely need minds of their own first. But then, what kind of mind?

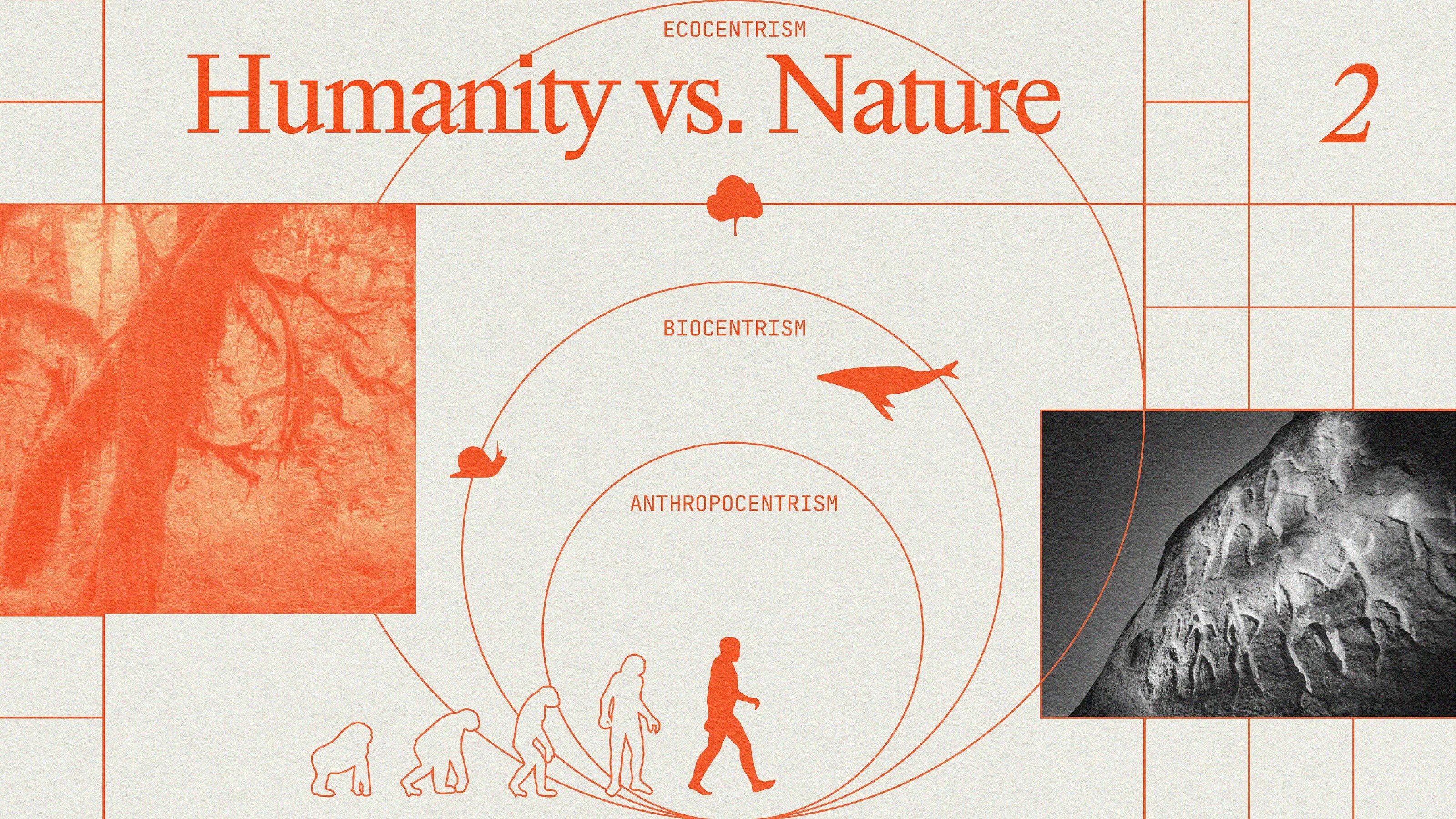

Across the animal kingdom, a variety of minds have evolved to solve a swath of social problems. Chimpanzees are savvy in an aggressively political and competitive way. Crows are clever in their ability to fashion twig tools, attend funerals to figure out what killed a compatriot, and team up to bully cats.

Octopuses are intelligent in their skill at escaping from closed jars and armoring themselves with shells. Dogs are brainy in their knack for understanding human social gestures like pointing, and for acting so slavishly cute we’d do anything for them. Humans are smart in a landing-on-the-moon-but-occasionally-electing-fascists way.

When it comes to theory of mind, some evidence suggests that chimps, bonobos, and orangutans can guess what humans are thinking, that elephants feel empathy, and that ravens can predict the mental states of other birds.

Minds that have evolved very separately from our own, in wildly different bodies, have much to teach us about the nature of intelligence. Maybe we’re missing a trick by assuming artificial intelligences with a theory of mind must be humanlike (or at least primate-like), as appears to be the case in much of the work to date.

Yet developers are certainly modeling artificial intelligence after human minds. This raises an unsettling question: If artificial, digital, sociable minds were to exist one day, would they be enough like a human mind for us to understand them and for them to understand us?

Humans readily anthropomorphize, projecting our emotions and intentions onto other creatures and even onto robots. So perhaps this wouldn’t be much of an issue on our side. But there’s no guarantee the AIs would be able to feel the same way.

This might not be so bad. Our relationship with AIs could end up mirroring our relationship with another famously antisocial creature. We shout at our cats to stop scratching the sofa when there’s a perfectly good catnip-infused post nearby, as the baffled beasts vaingloriously meow back at us. We are servile to them and have delusions of our own dominance, while they remain objects of mysterious fascination to us. We look at them and wonder: What on earth are you thinking?

Reprinted with permission of Sapiens.