Robot Algorithms Can’t Answer Ethical Dilemmas, Say Programmers and Ethicists

Robots armed with weapons and programmed to act autonomously are already in the hands of national militaries. But taking action on the battlefield depends on making decisions that algorithms are simply not equipped to handle, say computer scientists and ethicists.

SGR-A1 is the name of a robot designed to replace human soldiers along the demilitarized zone dividing North Korea and South Korea. Equipped with a microphone to recognize command passwords, it can automatically track and fire on multiple targets from over two miles away.

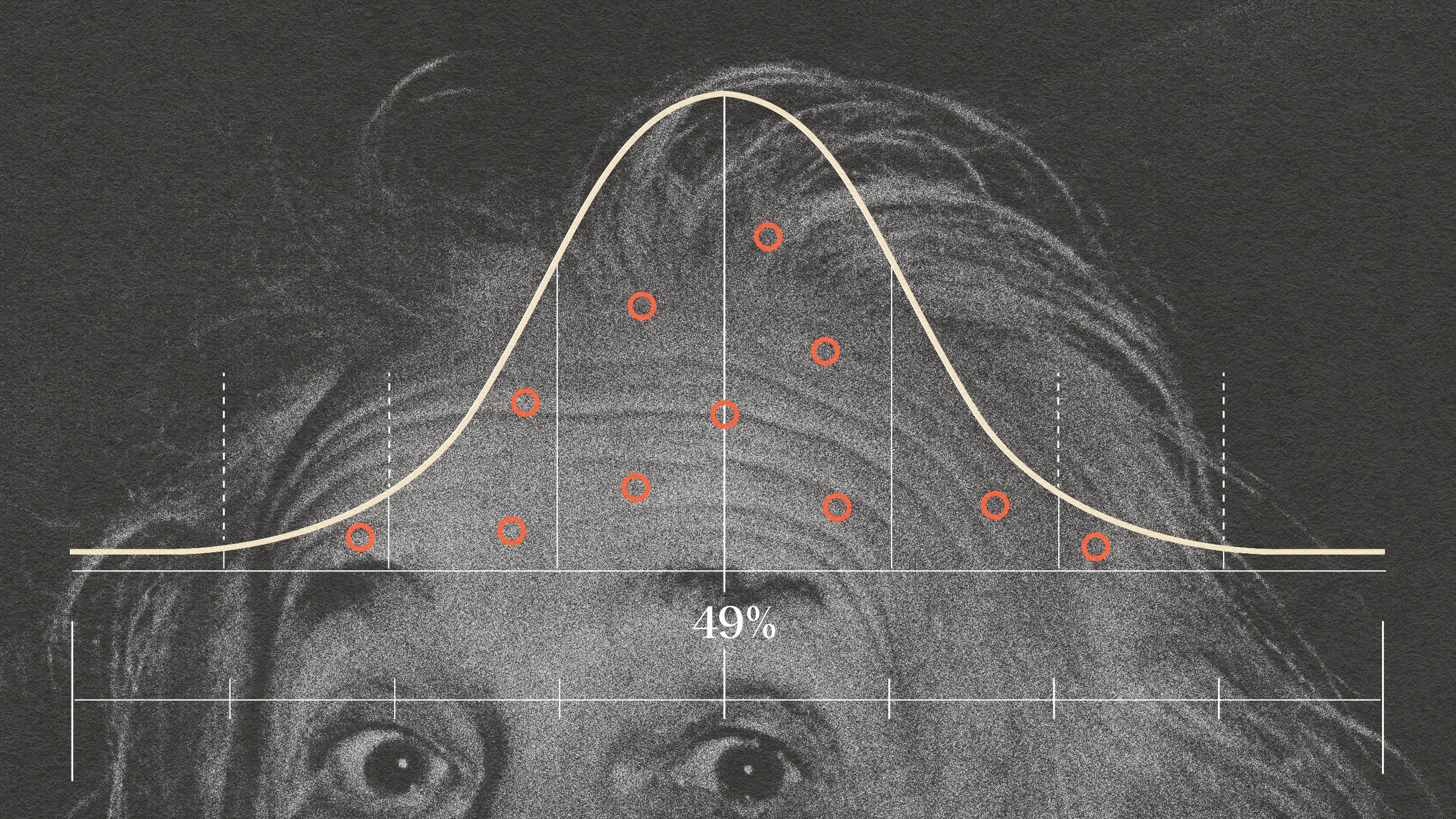

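But acting, which here means pulling the trigger and taking a human life, entails a moral calculus that computer algorithms may literally be incapable of completing. The reason is tied to the “halting problem,” the proven result in computer science that no general procedure can decide whether an arbitrary program will eventually finish or run forever. By the same logic, the argument goes, a moral calculation can be complicated endlessly by introducing countervailing possibilities, with no guarantee that it ever reaches a verdict.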
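For readers who want to see why the halting problem bites, here is a minimal Python sketch of the classic contradiction. It is an illustration only, not anything taken from the researchers' work. The names `make_contrary`, `still_running_after`, `optimist`, and `pessimist` are hypothetical, and a fixed step budget stands in for "runs forever," which no finite test can truly confirm.

```python
from itertools import islice

def make_contrary(claims_to_halt):
    """Build a program that does the opposite of whatever the decider predicts."""
    def contrary():
        if claims_to_halt(contrary):
            # The decider says "this halts", so loop forever instead.
            while True:
                yield
        # The decider says "this never halts", so stop immediately.
    return contrary

def still_running_after(program, steps):
    """Return True if the program is still going after `steps` steps."""
    return len(list(islice(program(), steps))) == steps

# Two deliberately naive deciders (assumptions for illustration only).
optimist = lambda program: True    # predicts every program halts
pessimist = lambda program: False  # predicts no program halts

print(still_running_after(make_contrary(optimist), 1000))   # True: it kept running
print(still_running_after(make_contrary(pessimist), 1000))  # False: it stopped at once
```

Each decider is wrong about the program built from it, and the same trick works against any decider someone might propose, however clever.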

Defenders of the robotic future raise the point that humans themselves are only so good at complicated moral reasoning, if they engage in moral reasoning at all!


Read more at Physics arXiv

Photo credit: Shutterstock