dreams

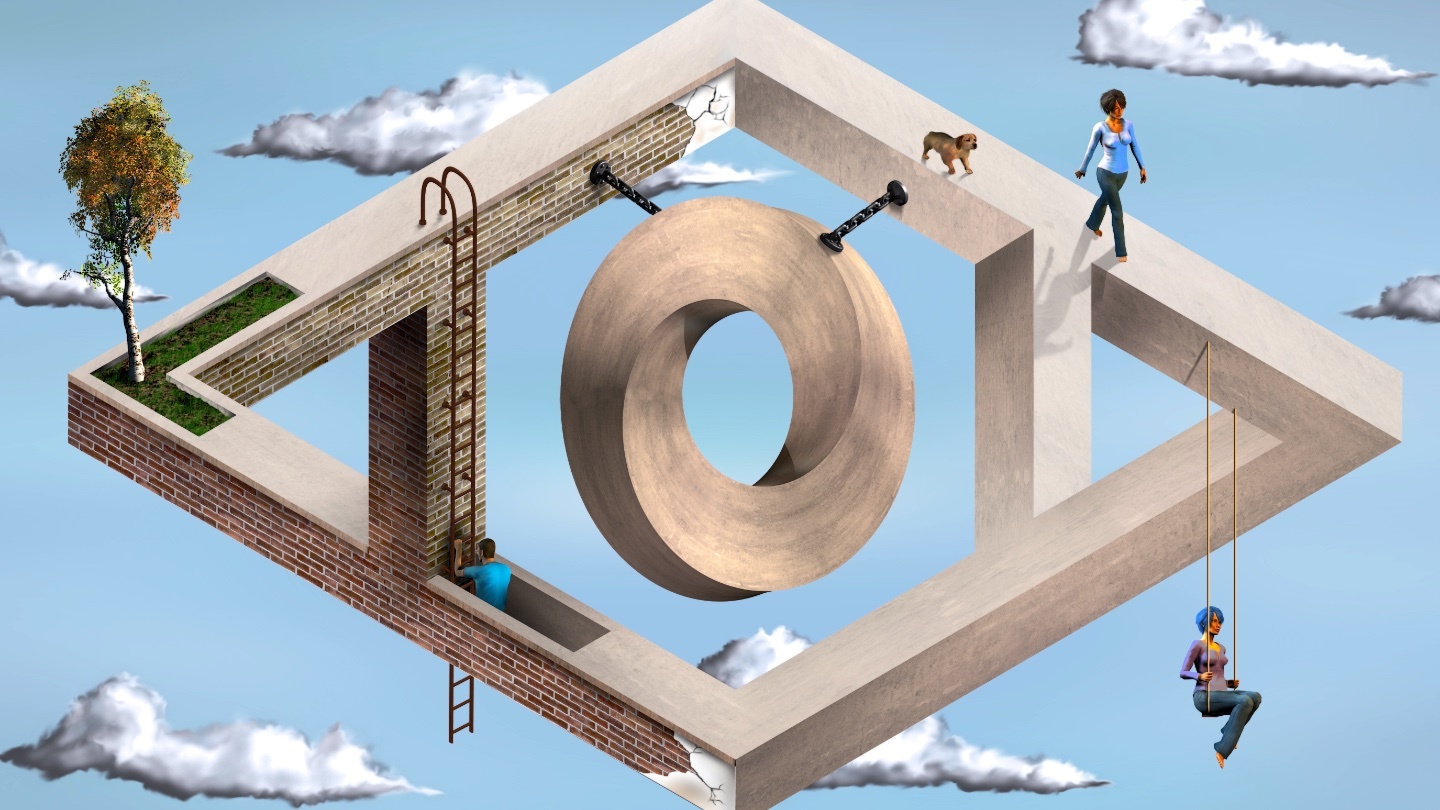

A new theory suggests that dreams’ illogical logic has an important purpose.

It’s all about smooth pursuit.

Just imagining movement fires the same neurons that fire when we actually move. A new study shows we can wake our sleeping mind to practice motor skills in our dreams.

They may look odd, but it’s all part of Google’s plan to solve a huge issue in machine learning: recognizing objects in images.

Dreams might be a whole lot sexier than we thought – but not because of their narrative content. Neurologist Patrick McNamara’s theory links the biological changes in our brains during sleep to humans’ inherent desire to procreate.