‘Deepfake’ technology can now create real-looking human faces

Karras et al.

- In 2014, researchers introduced a novel approach to generating artificial images through something called a generative adversarial network.

- Nvidia researchers combined that approach with something called style transfer to create AI-generated images of human faces.

- This year, the Department of Defense said it had been developing tools designed to detect so-called ‘deepfake’ videos.

A new paper from researchers at Nvidia shows just how far AI image generation technology has come in the past few years. The results are pretty startling.

Take the image below. Can you tell which faces are real?

Karras et al.

Actually, all of the above images are fake. They were produced by what the researchers call a style-based generator, which modifies a widely used image-generation technique called a generative adversarial network. Here's a quick primer:

In 2014, a researcher named Ian Goodfellow and his colleagues wrote a paper outlining a new machine learning concept called generative adversarial networks. The idea, in simplified terms, involves pitting two neural networks against each other. One acts as a generator that does its best to create images resembling a training set of, say, pictures of dogs. The other acts as a discriminator that looks at both real dog pictures and the generator's output and tries to tell the fakes from the real ones.

At first, the generator might produce some images that don’t look like dogs, so the discriminator shoots them down. But the generator now knows a bit about where it went wrong, so the next image it creates is slightly better. This process continues until, in theory, the generator creates a good image of a dog.
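To make that back-and-forth concrete, here is a deliberately tiny sketch of the adversarial game in plain Python. It is not how image GANs are actually built: the "images" are single numbers drawn from a bell curve centered on 2.0, the generator (a hypothetical one-parameter model) can only shift random noise by an amount `theta`, and the discriminator is a one-feature logistic classifier. The function name, starting values, learning rate, and batch size are all illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function, written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 2.0, steps: int = 2000, batch: int = 128,
                  lr: float = 0.05, seed: int = 0) -> float:
    """Play the generator-vs-discriminator game on 1-D data; return the learned shift.

    Real data:     x ~ Normal(real_mean, 1)
    Generator:     G(z) = z + theta, z ~ Normal(0, 1)  (theta is its only parameter)
    Discriminator: D(x) = sigmoid(w * x + c), its estimate that x is real
    """
    rng = random.Random(seed)
    theta = -2.0     # the generator starts out producing obviously "wrong" samples
    w, c = 0.0, 0.0  # the discriminator starts out unable to tell anything apart

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + theta for _ in range(batch)]

        # Discriminator step: gradient descent on -log D(real) - log(1 - D(fake)),
        # i.e. get better at labeling real samples 1 and fakes 0.
        grad_w = grad_c = 0.0
        for x in real:
            g = sigmoid(w * x + c) - 1.0  # derivative of -log sigmoid(s) w.r.t. s
            grad_w += g * x
            grad_c += g
        for x in fake:
            g = sigmoid(w * x + c)        # derivative of -log(1 - sigmoid(s)) w.r.t. s
            grad_w += g * x
            grad_c += g
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: nudge theta so the updated discriminator rates the fakes
        # as more real, using the common "non-saturating" loss -log D(G(z)).
        grad_theta = 0.0
        for x in fake:
            grad_theta += (sigmoid(w * x + c) - 1.0) * w  # chain rule: ds/dtheta = w
        theta -= lr * grad_theta / batch

    return theta
```

Calling `train_toy_gan()` should return a `theta` near 2.0: the generator starts at -2.0, each discriminator verdict tells it which way to move, and it ends up producing numbers the discriminator can no longer reliably separate from the real ones. Real GANs swap both one-line models for deep neural networks, but the alternating update pattern is the same idea.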

The Nvidia researchers built some principles of style transfer into their generative adversarial network. Style transfer is a technique for recomposing one image in the style of another. In style transfer, neural networks look at multiple levels of an image in order to separate the content of the picture from its style, such as the smoothness of its lines or the thickness of its brush strokes.

Here are a couple of examples of style transfer.

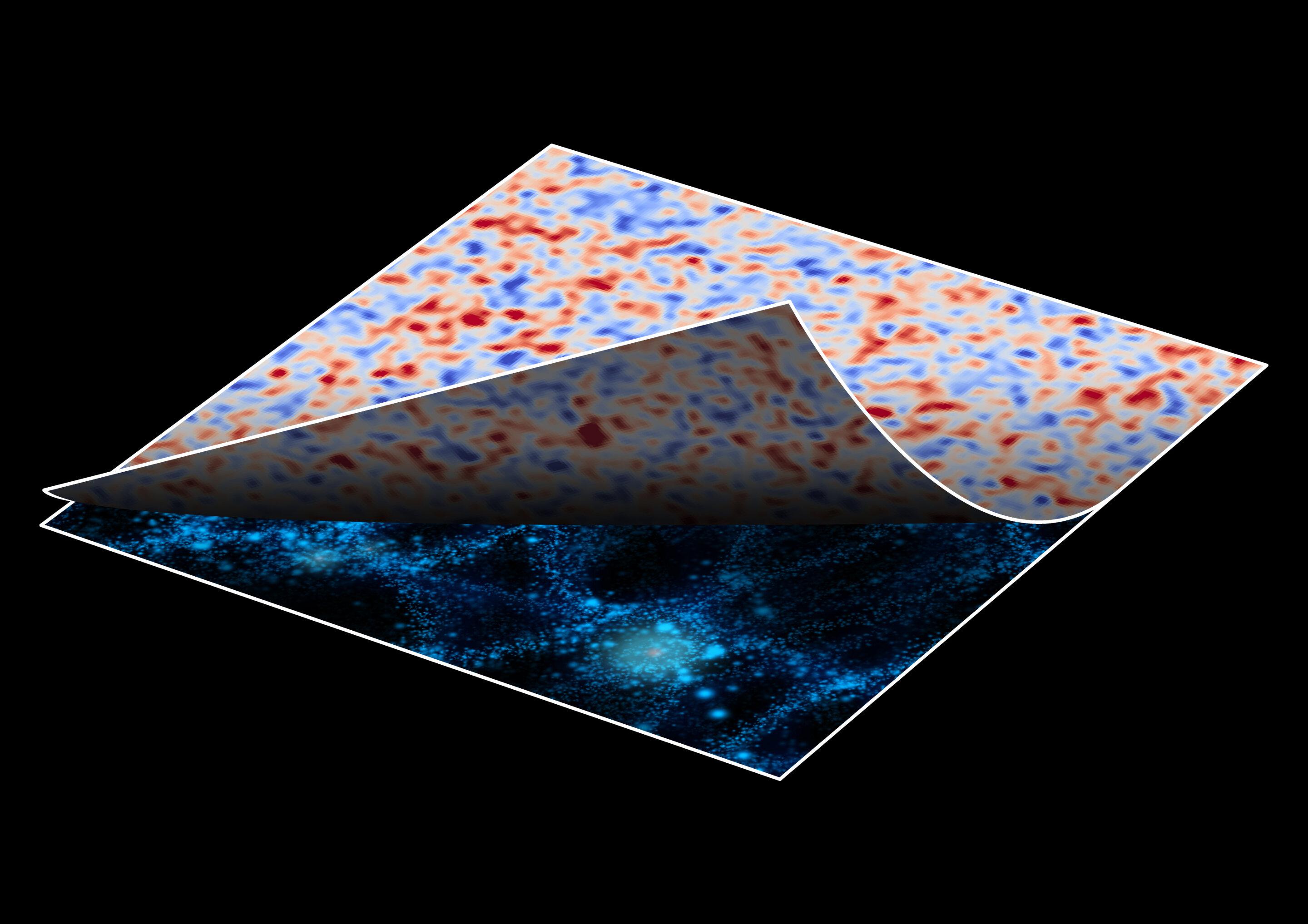
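One widely used way to separate "style" from "content" inside a neural network's feature maps is adaptive instance normalization (AdaIN), and the style-based generator in the Nvidia paper applies an AdaIN step at each of its layers. The sketch below shows the operation on tiny hand-made feature maps: each content channel keeps its spatial pattern but takes on the mean and spread of the matching style channel. In the Nvidia generator, those per-channel statistics come from a learned transformation of a latent code rather than from a second image; the feature values and function names here are purely illustrative.

```python
import statistics


def channel_stats(channel):
    """Mean and (population) standard deviation of one feature-map channel."""
    return statistics.fmean(channel), statistics.pstdev(channel)


def adain(content, style, eps=1e-5):
    """Adaptive instance normalization on lists of channels (flat lists of activations).

    Each content channel is normalized to zero mean and unit variance, which keeps
    its spatial pattern ("content"), then rescaled and shifted to the matching style
    channel's standard deviation and mean ("style").
    """
    out = []
    for c_chan, s_chan in zip(content, style):
        c_mean, c_std = channel_stats(c_chan)
        s_mean, s_std = channel_stats(s_chan)
        out.append([s_std * (v - c_mean) / (c_std + eps) + s_mean for v in c_chan])
    return out


# Two-channel toy feature maps with four "pixels" each (made-up values).
content = [[0.0, 1.0, 2.0, 3.0], [5.0, 5.0, 6.0, 4.0]]
style = [[10.0, 20.0, 10.0, 20.0], [0.0, 0.0, 0.0, 8.0]]
mixed = adain(content, style)
# mixed[0] still rises steadily like content[0], but is centered on 15 with a
# spread of roughly 5, matching style[0].
```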

In the Nvidia study, the researchers were able to combine two generated images of human faces into a composite of the two. Each artificially generated composite had the pose, hairstyle, and general face shape of the source image (top row), and the hair and eye colors, and finer facial features, of the destination image (left-hand column).

The results are surprisingly realistic, for the most part.

Karras et al.
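The mixing itself can be sketched as choosing, layer by layer, whose style to use. This assumes, as a simplification, that each face is summarized by one style vector per generator layer, with 18 layers in total and the first four handling the coarsest resolutions (4x4 and 8x8 pixels). Those counts are our reading of the paper, the strings below stand in for real style vectors, and `mix_styles` is a hypothetical helper; an actual run would feed the mixed list through the trained generator, which isn't shown here.

```python
from typing import List, Sequence, TypeVar

Style = TypeVar("Style")

NUM_LAYERS = 18    # assumption: 2 style inputs per resolution, from 4x4 up to 1024x1024
COARSE_LAYERS = 4  # assumption: the 4x4 and 8x8 layers (pose, hairstyle, face shape)


def mix_styles(source: Sequence[Style], destination: Sequence[Style],
               crossover: int = COARSE_LAYERS) -> List[Style]:
    """Take styles for layers [0, crossover) from `source`, the rest from `destination`."""
    if len(source) != len(destination):
        raise ValueError("both faces need one style per layer")
    return list(source[:crossover]) + list(destination[crossover:])


# Placeholder "style vectors" for a source face (top row) and a destination face
# (left-hand column).
source = [f"source_layer_{i}" for i in range(NUM_LAYERS)]
destination = [f"destination_layer_{i}" for i in range(NUM_LAYERS)]
composite = mix_styles(source, destination)
# The coarse layers now come from the source face; all finer layers from the destination.
```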

Concerns over ‘deepfake’ technology

The ability to generate realistic artificial images, often called deepfakes when images are meant to look like recognizable people, has raised concern in recent years. After all, it’s not hard to imagine how this technology could allow someone to create a fake video of, say, a politician saying something abhorrent about a certain group. This could lead to a massive erosion of the public’s willingness to believe anything that’s reported in the media. (As if concerns about ‘fake news’ weren’t enough.)

To keep up with deepfake technology, the Department of Defense, through its research agency DARPA, has been developing tools designed to detect deepfake videos.

“This is an effort to try to get ahead of something,” said Florida senator Marco Rubio in July. “The capability to do all of this is real. It exists now. The willingness exists now. All that is missing is the execution. And we are not ready for it, not as a people, not as a political branch, not as a media, not as a country.”

However, there might be a paradoxical problem with the government’s effort.

“Theoretically, if you gave a [generative adversarial network] all the techniques we know to detect it, it could pass all of those techniques,” David Gunning, the DARPA program manager in charge of the project, told MIT Technology Review. “We don’t know if there’s a limit. It’s unclear.”