The surefire way to never find anything new in science

If you “know” the answer before you ever begin, you might as well not even try.

“I have difficulty to believe it, because nothing in Italy arrives ahead of time.” –Sergio Bertolucci, research director at CERN, on faster-than-light neutrinos

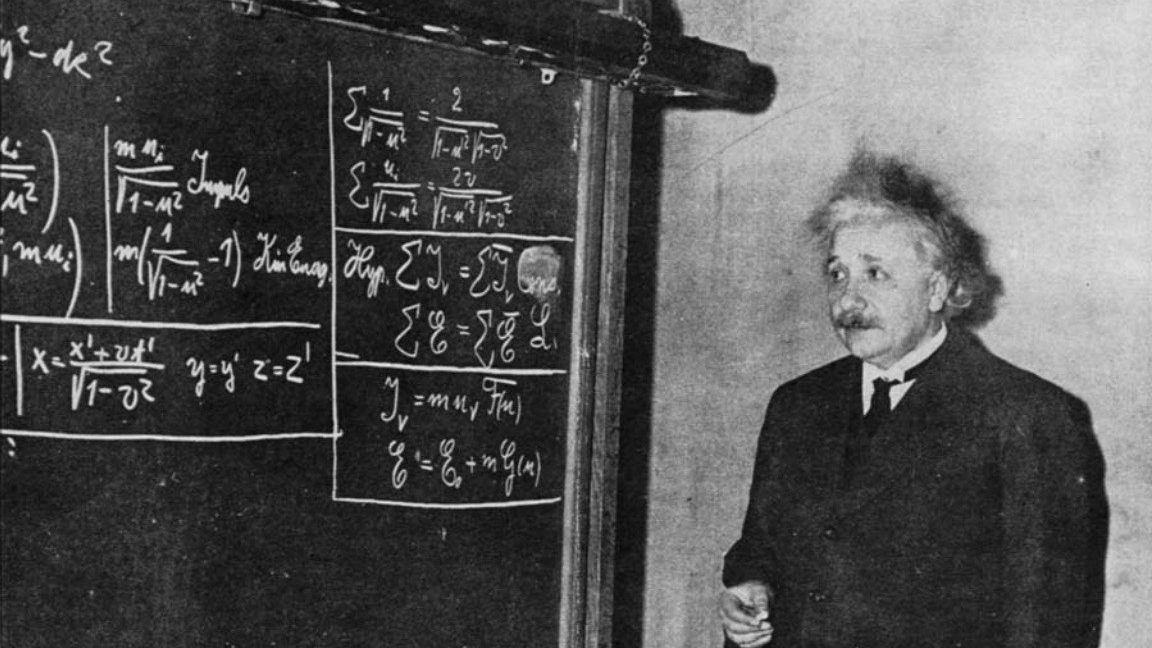

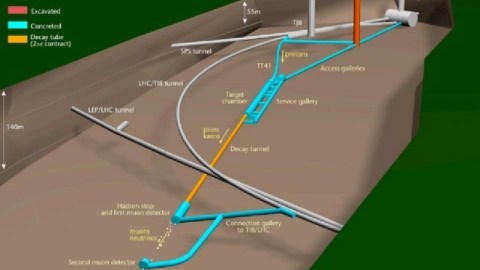

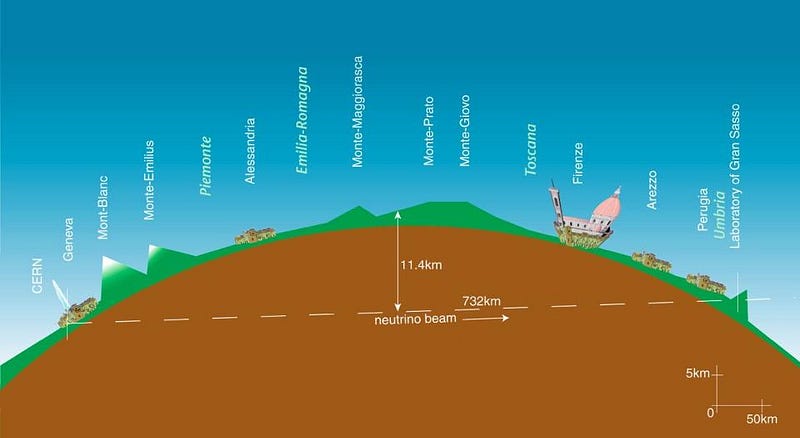

It’s been five years since the OPERA collaboration announced a bizarre, unexpected and perhaps revolutionary result from an otherwise normal experiment: particles were observed to move faster than light, breaking the ultimate cosmic speed limit. The experiment worked simply. Neutrinos generated at CERN, from protons accelerated in the Super Proton Synchrotron, were sent through the Earth (so that all the other particles would be absorbed by the intervening matter) and then detected hundreds of miles away in a very intricate setup. The expected arrival time was known to incredible accuracy: 2.4 milliseconds after the collision that generated them. With such low masses and such high energies, neutrinos should travel at a speed indistinguishable from c, the speed of light. Instead, the neutrinos arrived 60 nanoseconds (6 × 10^-8 seconds) earlier than they should have, setting off a flurry of papers, speculations and wild explanations. The result flew in the face of a century of experiments and one of our most hallowed and well-verified theories: Einstein’s relativity.
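To get a feel for how small (and how shocking) the anomaly was, here is a rough back-of-the-envelope sketch in Python. It assumes a CERN-to-Gran Sasso baseline of about 730 km, a round figure rather than OPERA’s surveyed distance, and treats the 60 nanoseconds as the only discrepancy. Light covers that distance in roughly 2.4 milliseconds, so arriving 60 ns early implies a speed excess of only a few parts in 100,000.

```python
# Back-of-the-envelope arithmetic for the OPERA anomaly.
# ASSUMPTION: a baseline of about 730 km (a round figure, not OPERA's surveyed value).
C = 299_792_458.0   # speed of light in m/s (exact by definition)
BASELINE_M = 730e3  # assumed CERN-to-Gran Sasso distance in meters
EARLY_S = 60e-9     # reported early arrival: 60 nanoseconds


def light_travel_time(distance_m: float) -> float:
    """Time in seconds for light to cover distance_m in vacuum."""
    return distance_m / C


t_light = light_travel_time(BASELINE_M)

# Covering the same distance in (t_light - EARLY_S) means v / c = t / (t - dt),
# so the fractional speed excess is (v - c) / c = dt / (t - dt).
excess = EARLY_S / (t_light - EARLY_S)

print(f"light travel time: {t_light * 1e3:.3f} ms")
print(f"fractional speed excess: {excess:.2e}")
```

A 60 ns lead over a roughly 2.4 ms trip is a fractional excess of about 2.5 × 10^-5, which is why a timing error as small as a poorly seated cable could produce it.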

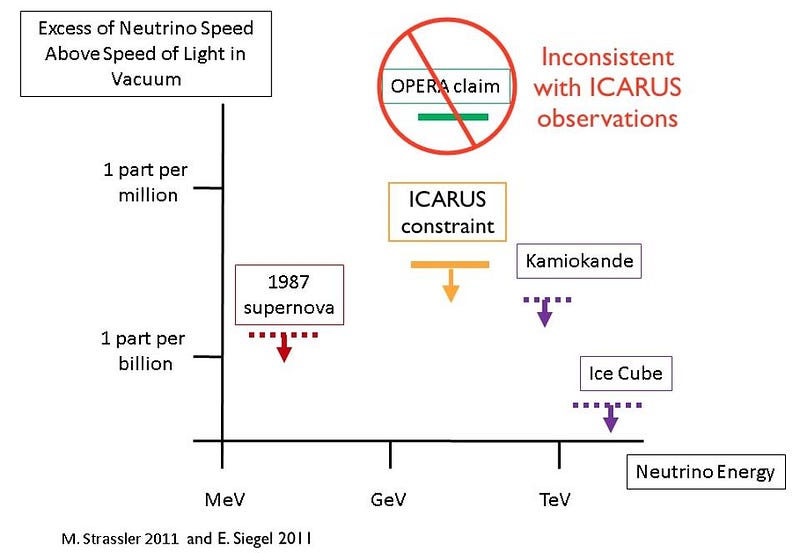

The resolution turned out to be mundane: there was an error in the experimental setup in the form of a loose cable. Once everything was connected properly, the anomaly went away, and the difference between the predicted and measured arrival times was reduced to < 1 nanosecond. You might think that this is evidence of poorly executed science, but nothing could be further from the truth. Other collaborations had previously measured the speed of neutrinos under various energy conditions, obtaining tight constraints that were indistinguishable from the speed of light. When OPERA came along, they measured a very different result, an outlier, for a parameter that had already been measured. They couldn’t account for that anomalous result, but they couldn’t find a flaw in what they had done either. They published it anyway, and soon afterward another collaboration using the same neutrino beam, ICARUS, measured the speed of those neutrinos and found it to be consistent with c.

This was truly incredible! It wasn’t incredible because it was unbelievable; it was incredible because this was an experiment whose results should have been known in advance. It was incredible because we knew how fast neutrinos should have been traveling. And yet their observations didn’t line up with those anticipated results, and they didn’t force the data to fit the theory. It would have been easy to:

- note that your results didn’t fit what the “known” result should have been,

- look for possible sources of error or systematic problems until your result had regressed to the expected value,

- and then publish a paper that lined up with the “acceptable” outcome.

But they didn’t. They looked at what they found, saw it didn’t line up, and published anyway. An interesting account can be found in Gianfranco D’anna’s novel, 60.7 nanoseconds: an infinitesimal instant in the life of a man.

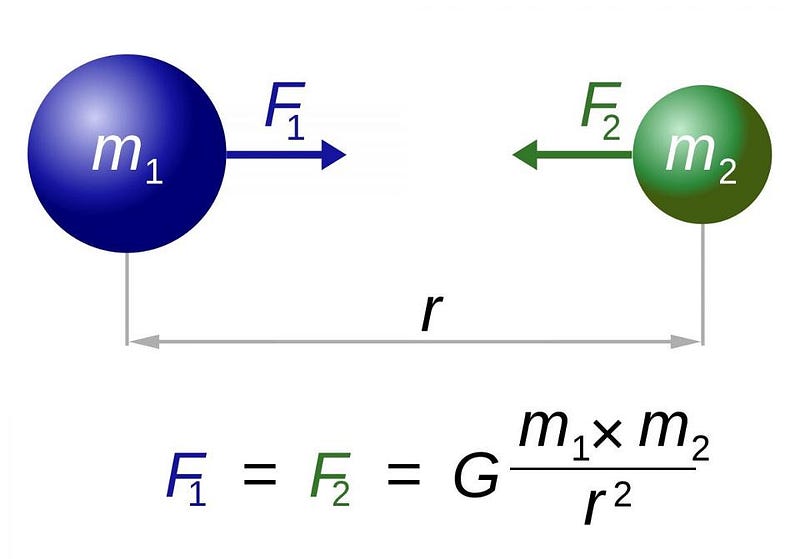

That’s how science moves forward. Thirty years ago, the gravitational constant (Newton’s G) was known to be about 6.6726 × 10^-11 N · m²/kg², with different experiments measuring results like:

- 6.67274 × 10^-11,

- 6.67248 × 10^-11,

- 6.67281 × 10^-11, and

- 6.67263 × 10^-11.

They were all very close together: despite some minor variations, they all pointed to the same overarching figure, and they all cited errors of about ± 0.00020 × 10^-11. And then, about 15 years ago, a new measurement of G came out: (6.674 ± 0.001) × 10^-11. It was way off from the other values; it had a much larger error; and moreover, it was repeatable. The older experiments, it turned out, had all been wrong together.
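As a rough illustration of just how far outside the established range the new value fell, the short Python sketch below averages the four older measurements listed above and expresses the gap to the new value in units of the ± 0.00020 error bars the older experiments quoted. It deliberately ignores the new measurement’s own, larger uncertainty, so it is not a formal statistical tension; it only shows how far the new value sat from the old error bars.

```python
from statistics import mean

# Older measurements of G quoted in the text, in units of 10^-11 N·m²/kg².
old_values = [6.67274, 6.67248, 6.67281, 6.67263]
OLD_ERROR = 0.00020  # typical uncertainty the older experiments quoted
new_value = 6.674    # the later, discrepant measurement

old_mean = mean(old_values)
old_spread = max(old_values) - min(old_values)

# Gap between the new value and the old average, measured in units of the
# older experiments' own quoted error bars (the new value's error is ignored).
offset_in_old_sigma = (new_value - old_mean) / OLD_ERROR

print(f"mean of older values: {old_mean:.6f}")
print(f"spread of older values: {old_spread:.5f}")
print(f"new value sits {offset_in_old_sigma:.1f} old error bars above the old mean")
```

The older values span only about 0.0003 of each other, while the new value sits nearly seven of their quoted error bars away from their average.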

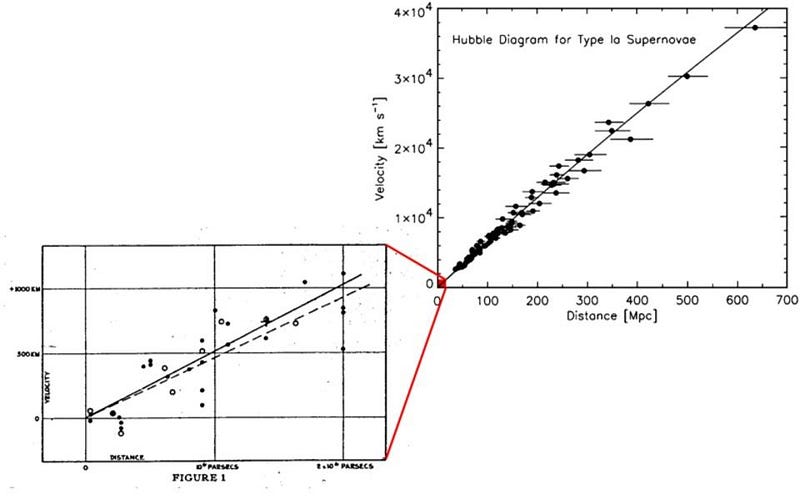

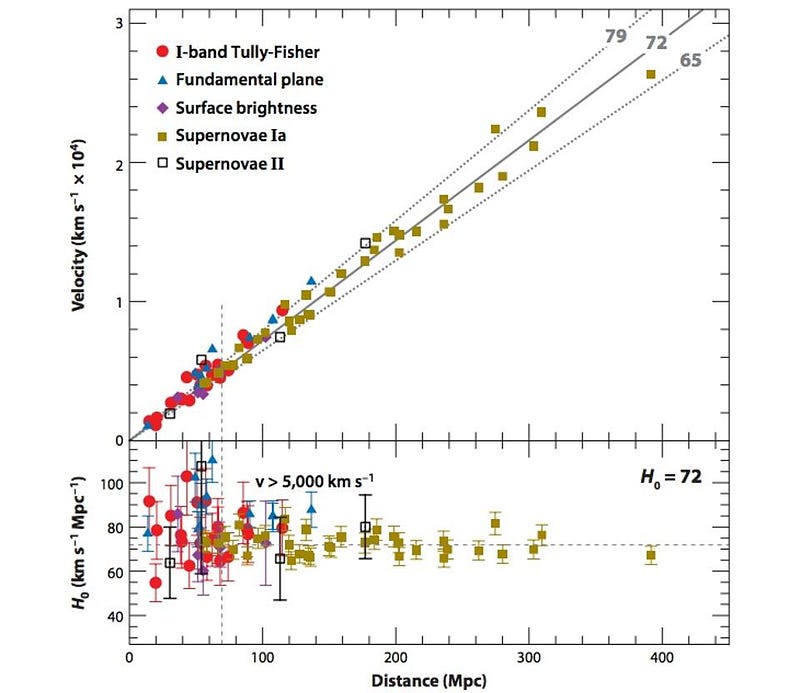

The same thing happened with the Hubble parameter (H_0), which measures the recession of galaxies and the expansion rate of the Universe. In the 1960s, it was generally regarded to be about 50 to 55 km/s/Mpc, with an uncertainty of about ± 5. For over a decade, all the papers that came out, following the lead of Allan Sandage, agreed. Then, in the early 1980s, a new team led by Gérard de Vaucouleurs claimed that H_0 was about 100 km/s/Mpc, with an uncertainty of around ± 10. For over a decade, these two camps argued and fought, one claiming “50” and the other claiming “100,” with neither budging. The debate wasn’t settled until the Hubble Space Telescope (named for Edwin Hubble, and with measuring H_0 as one of its major science goals) returned its Key Project results. Its finding? H_0 was 72 ± 7; both camps were wrong.

Science isn’t about getting it right the first time, nor is it about getting a result in line with what everyone else has found. Yes, most of the time the older results are correct, but you do no one a service by expecting a particular result in advance. Right now, for example, there’s an interesting new tension in H_0: measurements from the CMB indicate about 67 km/s/Mpc, while measurements from galaxies and stars indicate about 74. There are tensions in the amounts of dark matter and dark energy, too: some groups claim 25% and 70%, respectively; others find 20% and 75%, or 30% and 65%. You account for your systematics as best you can, you perform your experiment, and you publish your results, no matter what they are. They might not be in line with the other results of the time, but that’s not necessarily a bad thing. The new, outlying data point you produced may be evidence that you did something wrong, but it may just be the nudge that brings the field one step closer to the scientific truth than anyone realized!

This post first appeared at Forbes.