No, The Cosmic Controversy Over The Expanding Universe Isn’t A Calibration Error

Something isn’t adding up, but it isn’t a calibration error.

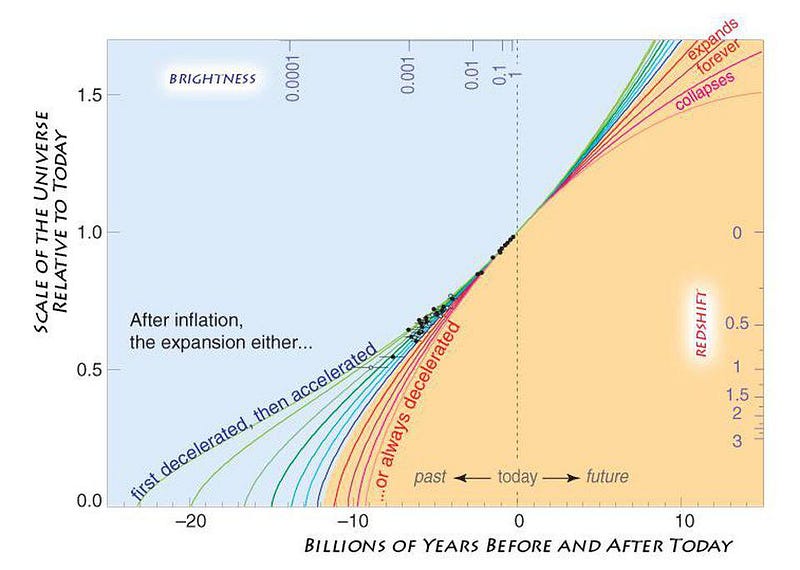

It’s been nearly 100 years since we discovered that the Universe was expanding. Ever since, the scientists who study the expanding Universe have argued over two details of that expansion in particular. First off, there’s the question of how fast: what is the rate of expansion of the Universe, as we measure it today? And second, there’s the question of how this expansion rate changes over time, since the way the expansion changes is completely dependent on exactly what’s in our Universe.

Throughout the 20th century, different groups using different instruments and/or techniques measured different rates, leading to a number of controversies. The situation appeared to finally be resolved thanks to the Hubble Key Project: the main science goal of the Hubble Space Telescope. At last, everything pointed to the same picture. But today, 20 years after that important paper was released, a new tension has emerged. Depending on which technique you use to measure the expanding Universe, you get one of two values, and they don’t agree with each other. Worst of all, you can’t chalk it up to a calibration error, as some have recently tried to do. Here’s the science behind what’s going on.

If you want to measure how fast the Universe is expanding, there are basically two different ways to do it. You can:

- look at an object that exists within the Universe,

- know something fundamental about it (like its intrinsic brightness or its physical size),

- measure the redshift of that object (which tells you how much its light has been shifted),

- measure the observed counterpart of the property you know (i.e., its apparent brightness or apparent size),

and put all of those things together to infer the expansion of the Universe.

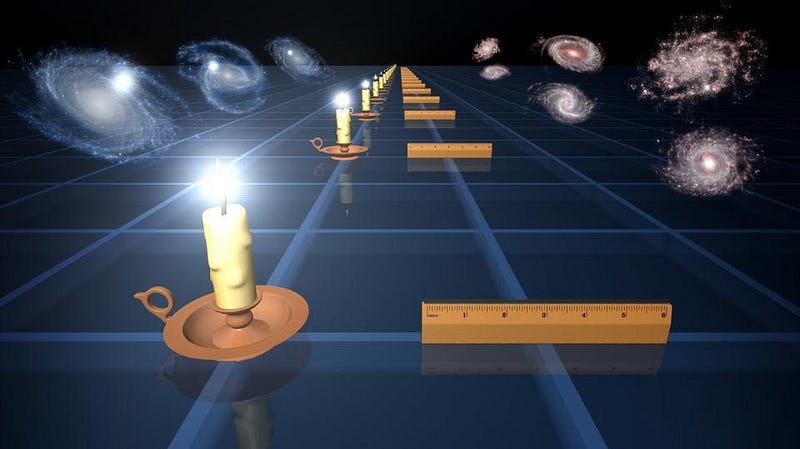

This sure does look like one way to do it, right? So why did I say there are basically two different ways to do it? Because you can either pick something where you’re measuring its brightness, or you can pick something where you’re measuring its size. If you had a light bulb whose brightness you knew, and then you measured how bright it appeared, you’d be able to tell me how far away it is, because you know how brightness and distance are related. Similarly, if you had a measuring stick whose length you knew, and you measured how big it appeared, you’d be able to tell me its distance, because you know — geometrically — how angular size and physical size are related.

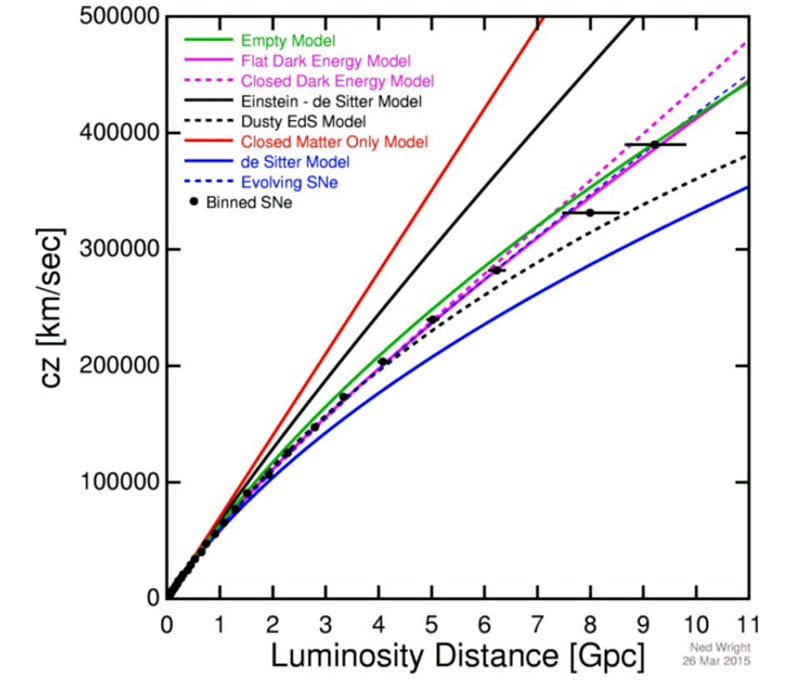

Both of these methods are used to measure the expanding Universe. The “light bulb” method is known as a standard candle, while the “measuring stick” method is known as a standard ruler. If space were static and unchanging, these two methods would give you identical results. If you have a candle at a distance of 100 meters and you measure its brightness, placing it twice as far away would make it appear just one-quarter as bright. Similarly, if you placed a 30-cm (12”) ruler at a distance of 100 meters and then doubled the distance, it would appear just half as big.
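To put numbers on the static-space case, here’s a minimal Python sketch. The helper names (`apparent_brightness`, `distance_from_brightness`, `angular_size_rad`) are hypothetical, the 100 W bulb is an illustrative assumption, and the code deliberately ignores the expansion of space: brightness falls as the inverse square of distance, while a ruler’s angular size falls as the inverse of distance.

```python
import math

def apparent_brightness(luminosity_w, distance_m):
    """Static-space inverse-square law: flux = L / (4 * pi * d**2), in W/m^2."""
    return luminosity_w / (4 * math.pi * distance_m ** 2)

def distance_from_brightness(luminosity_w, flux_w_m2):
    """Standard candle: invert the inverse-square law to recover the distance."""
    return math.sqrt(luminosity_w / (4 * math.pi * flux_w_m2))

def angular_size_rad(length_m, distance_m):
    """Standard ruler in static space (small-angle approximation): theta = length / d."""
    return length_m / distance_m

BULB_W = 100.0   # hypothetical bulb luminosity, in watts
RULER_M = 0.30   # the 30-cm ruler from the text

brightness_ratio = apparent_brightness(BULB_W, 200.0) / apparent_brightness(BULB_W, 100.0)
size_ratio = angular_size_rad(RULER_M, 200.0) / angular_size_rad(RULER_M, 100.0)
print(f"doubling the distance: brightness x{brightness_ratio:.2f}, angular size x{size_ratio:.2f}")

# Going the other way: a measured brightness plus a known luminosity gives the distance.
print(distance_from_brightness(BULB_W, apparent_brightness(BULB_W, 150.0)))
```

In static space, the candle and the ruler give the same distance, which is exactly what stops being true once space expands.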

But in the expanding Universe, these two quantities don’t evolve in this straightforward manner. Instead, as an object gets more distant, it actually gets fainter more quickly than the standard expectation of “double the distance, one-fourth the brightness” that we use when we neglect the expansion of the Universe. And, on the other hand, as an object gets farther away, it appears smaller and smaller, but only up to a point; beyond that, it appears to get larger again. Standard candles and standard rulers both work, but they work in fundamentally different ways in the expanding Universe, and this is one of the many, many ways that geometry is a little bit counterintuitive in General Relativity.
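Here’s a rough sketch of how that plays out, assuming a flat Universe of matter plus dark energy with illustrative parameters (H0 = 70 km/s/Mpc, 30% matter, 70% dark energy, radiation neglected); those numbers and the helper names are assumptions, not fits to data. A standard candle’s brightness is governed by the luminosity distance, (1 + z) times the comoving distance, while a standard ruler’s angular size is governed by the angular-diameter distance, the comoving distance divided by (1 + z). The angular-diameter distance peaks and then declines, which is why a fixed ruler looks smallest at an intermediate redshift and larger beyond it.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s

# Assumed flat Lambda-CDM parameters (illustrative only, not a fit to data).
H0 = 70.0        # km/s/Mpc
OMEGA_M = 0.3    # matter fraction
OMEGA_L = 0.7    # dark energy fraction

def E(z):
    """Dimensionless expansion rate H(z) / H0, ignoring radiation."""
    return math.sqrt(OMEGA_M * (1 + z) ** 3 + OMEGA_L)

def comoving_distance_mpc(z, steps=200):
    """D_C = (c / H0) * integral from 0 to z of dz' / E(z'), via Simpson's rule (even steps)."""
    h = z / steps
    total = 1 / E(0) + 1 / E(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) / E(i * h)
    return (C_KM_S / H0) * total * h / 3

def luminosity_distance_mpc(z):
    """Sets how faint a standard candle looks: D_L = (1 + z) * D_C."""
    return (1 + z) * comoving_distance_mpc(z)

def angular_diameter_distance_mpc(z):
    """Sets how big a standard ruler looks: D_A = D_C / (1 + z)."""
    return comoving_distance_mpc(z) / (1 + z)

# Scan redshift for where a fixed-size ruler looks smallest (D_A is largest).
zs = [0.01 * k for k in range(1, 501)]
d_a = [angular_diameter_distance_mpc(z) for z in zs]
z_smallest = zs[d_a.index(max(d_a))]

for z in (0.5, 1.0, 2.0, 4.0):
    print(f"z={z}: D_L={luminosity_distance_mpc(z):8.0f} Mpc, D_A={angular_diameter_distance_mpc(z):6.0f} Mpc")
print(f"a fixed ruler appears smallest near z = {z_smallest:.2f}")
```

With these assumed parameters the turnaround lands at roughly z ≈ 1.6; other choices of matter and dark-energy content move it.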

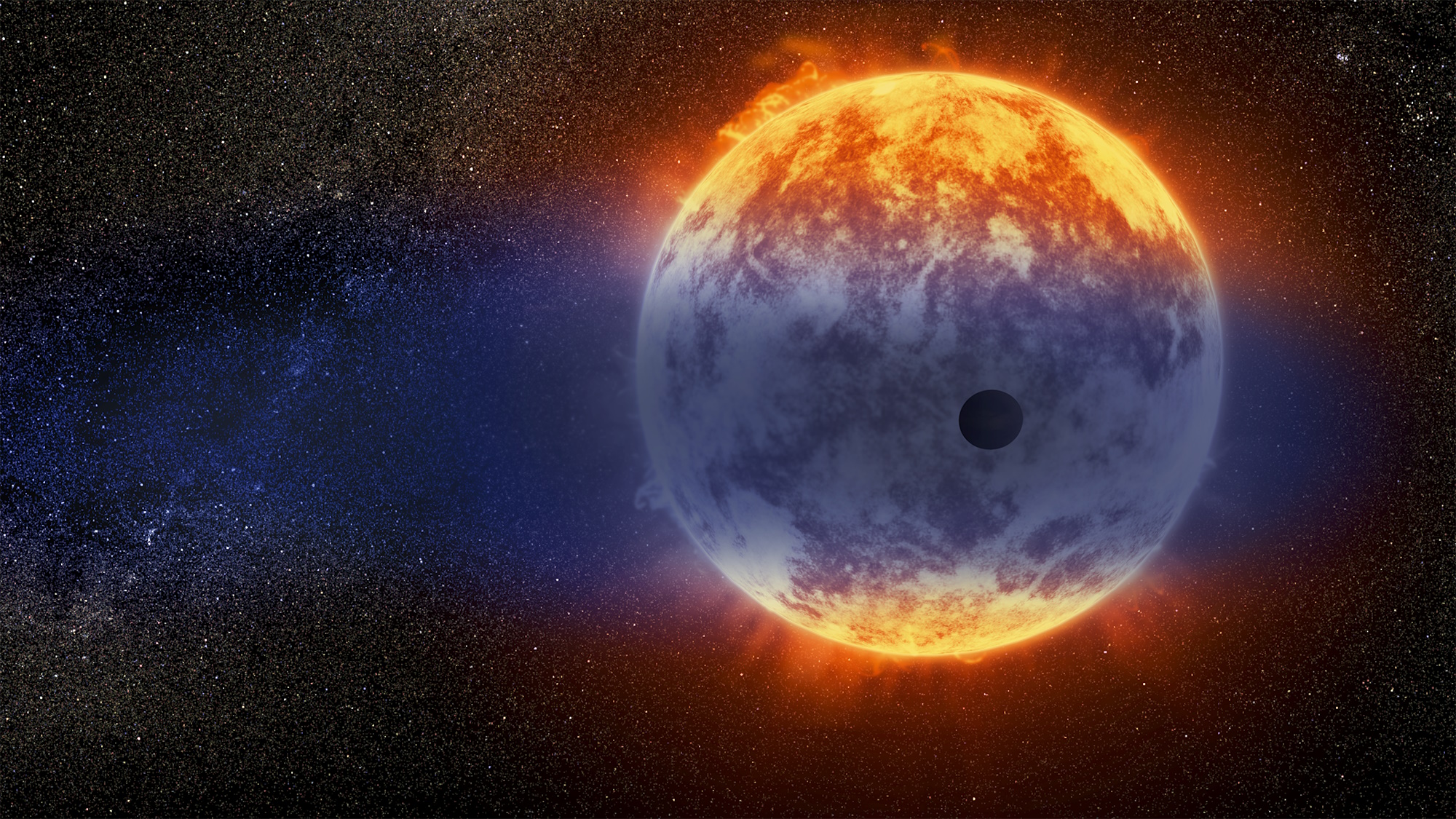

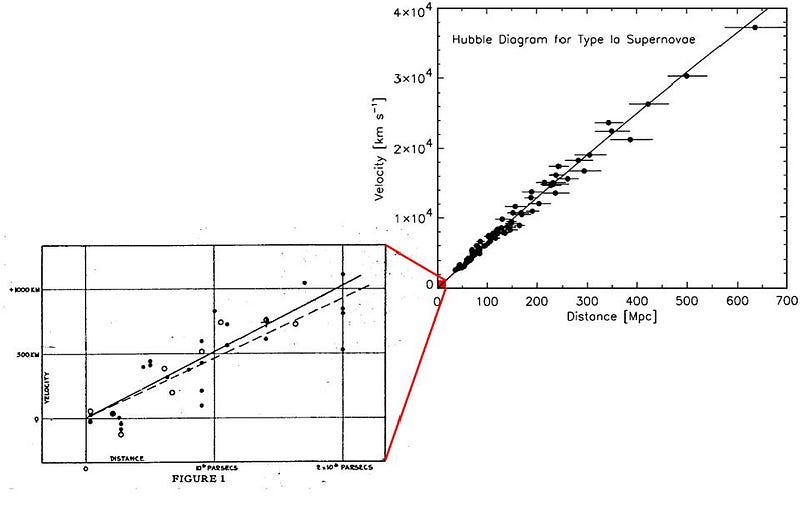

So, what could you do if you had a standard candle: an object whose intrinsic brightness you simply knew? For each one that you found, you could measure how bright it appeared. Based on how distances and brightnesses work in the expanding Universe, you could infer how far away it is. Then, you could also measure how much its light had been shifted from its emitted value; the physics of atoms, ions and molecules doesn’t change, so if you measure the details of the light, you can know how much the light has shifted before it reaches your eyes.
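Measuring the shift itself is simple arithmetic once you’ve identified a known spectral line. A minimal sketch, assuming two hydrogen Balmer lines at their standard air wavelengths (656.28 nm for H-alpha, 486.13 nm for H-beta) and hypothetical observed wavelengths for a single galaxy: because atomic physics is the same everywhere, every line from the same source should give the same redshift.

```python
# Laboratory (rest-frame) air wavelengths, in nm, of two hydrogen Balmer lines.
REST_NM = {"H-alpha": 656.28, "H-beta": 486.13}

def redshift(observed_nm, rest_nm):
    """z = (observed - emitted) / emitted = observed / emitted - 1."""
    return observed_nm / rest_nm - 1

# Hypothetical observed wavelengths of those same lines in one galaxy's spectrum.
observed_nm = {"H-alpha": 721.908, "H-beta": 534.743}

line_redshifts = {line: redshift(observed_nm[line], REST_NM[line]) for line in REST_NM}
for line, z in line_redshifts.items():
    print(f"{line}: z = {z:.4f}")
```

If two lines disagreed, that would signal a misidentified line rather than different physics.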

Then you put it all together. You’ll have lots of different data points — one for each such object at a particular distance — and that allows you to reconstruct how the Universe has expanded at many different epochs throughout our cosmic history. Part of the shift in the light is due to the expansion of the Universe, and part is due to the relative motion of the emitting source with respect to the observer. Only with large numbers of data points can we eliminate that second effect, enabling us to reveal and quantify the effect of cosmic expansion.
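A toy version of that step, under loudly stated assumptions: a made-up “true” expansion rate of 70 km/s/Mpc, nearby distances drawn uniformly between 10 and 300 Mpc, and a random peculiar velocity with a 300 km/s scatter added to each galaxy. The helpers are hypothetical, and a least-squares line through the origin is just one simple choice of estimator. A single galaxy can give a wildly wrong answer; a thousand of them pin the slope down.

```python
import random

TRUE_H0 = 70.0      # km/s/Mpc, assumed "truth" for this toy model
SIGMA_PEC = 300.0   # km/s, assumed scatter from each galaxy's own motion

rng = random.Random(42)  # fixed seed so the run is reproducible

def mock_galaxy():
    """Return (distance in Mpc, measured velocity in km/s) for one fake galaxy."""
    d = rng.uniform(10.0, 300.0)
    v = TRUE_H0 * d + rng.gauss(0.0, SIGMA_PEC)  # expansion plus peculiar motion
    return d, v

def fit_h0(sample):
    """Least-squares slope of v = H0 * d, with the line forced through the origin."""
    return sum(d * v for d, v in sample) / sum(d * d for d, _ in sample)

for n in (1, 10, 1000):
    sample = [mock_galaxy() for _ in range(n)]
    print(f"N={n:5d}: H0 estimate = {fit_h0(sample):6.2f} km/s/Mpc")
```

Real analyses also model the distance uncertainties and the full expansion history, which this sketch leaves out.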

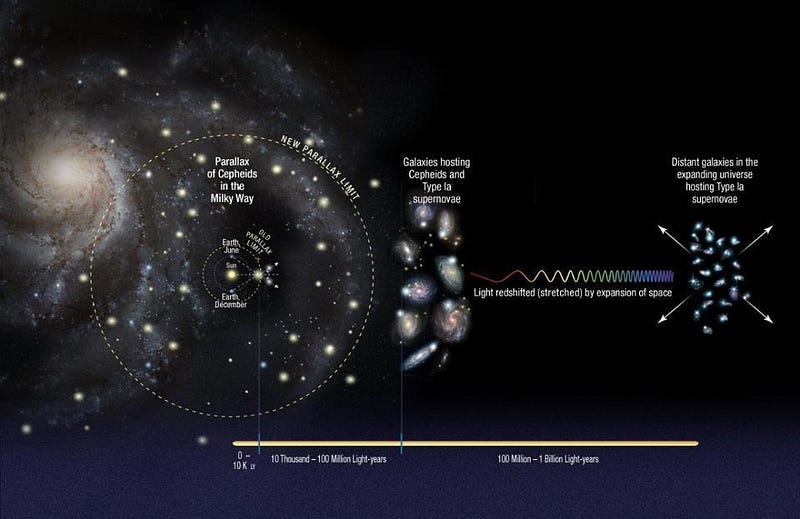

We call this generic method the “distance ladder” method of measuring the Universe’s expansion. The idea is that we start off close by, and we know the distance to a variety of objects. For example, we can look at some of the stars within our own Milky Way, and we can observe how they change position over the course of a year. As the Earth moves around the Sun and the Sun moves through the galaxy, the closer stars will appear to shift relative to the more distant ones. Through the technique of parallax, we can directly measure the distances to the stars, at least in terms of the Earth-Sun distance.
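The parallax step is pure geometry. A minimal sketch (helper names hypothetical): the parallax angle p is the angle that 1 AU, the Earth-Sun distance, subtends as seen from the star, so the distance is 1 AU / tan(p), which for small angles reduces to the familiar d [parsecs] = 1 / p [arcseconds].

```python
import math

ARCSEC_PER_RAD = 180 * 3600 / math.pi   # about 206,265; also the number of AU in a parsec
AU_PER_LY = 63_241.08                   # astronomical units per light-year (approximate)

def parallax_distance_au(parallax_arcsec):
    """Exact geometry: distance = 1 AU / tan(p), returned in AU."""
    p_rad = parallax_arcsec / ARCSEC_PER_RAD
    return 1.0 / math.tan(p_rad)

def parallax_distance_pc(parallax_arcsec):
    """Small-angle form used by astronomers: d [pc] = 1 / p [arcsec]."""
    return 1.0 / parallax_arcsec

p = 0.1  # hypothetical parallax of a nearby star, in arcseconds
print(f"p = {p} arcsec: {parallax_distance_pc(p):.1f} pc = "
      f"{parallax_distance_au(p):,.0f} AU = {parallax_distance_au(p) / AU_PER_LY:.1f} light-years")
```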

Then, we can find those same types of stars in other galaxies, and hence — if we know how stars work (and astronomers are pretty good at that) — we can measure the distances to those galaxies, too. Finally, we can measure that “standard candle” in those galaxies as well as others, and can extend our measurements of distance, apparent brightness, and redshift to galaxies that are as far away as we can see.
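Each rung of the ladder hands its calibration to the next. Here’s a minimal two-rung sketch using the astronomer’s distance modulus, m − M = 5 log10(d / 10 pc). All the magnitudes and the parallax are hypothetical, dust extinction is ignored, and it assumes the nearby and distant stars really are identical in intrinsic brightness. The same bookkeeping repeats when a brighter standard candle, such as a supernova, is calibrated in galaxies whose distances were measured this way.

```python
import math

def absolute_magnitude(apparent_mag, distance_pc):
    """Distance modulus m - M = 5 * log10(d / 10 pc), solved for M."""
    return apparent_mag - 5 * math.log10(distance_pc / 10.0)

def distance_pc(apparent_mag, absolute_mag):
    """Invert the distance modulus: d = 10 pc * 10**((m - M) / 5)."""
    return 10.0 * 10 ** ((apparent_mag - absolute_mag) / 5)

# Rung 1 (hypothetical): a nearby star with a 0.002-arcsecond parallax,
# i.e. 1 / 0.002 = 500 pc away, appears at magnitude 5.0.
calibrator_M = absolute_magnitude(5.0, 1 / 0.002)

# Rung 2 (hypothetical): the same type of star in another galaxy appears at
# magnitude 25.0, i.e. 20 magnitudes (a factor of 10**8 in brightness) fainter.
galaxy_distance_pc = distance_pc(25.0, calibrator_M)

print(f"calibrated M = {calibrator_M:.2f}, galaxy distance = {galaxy_distance_pc / 1e6:.1f} Mpc")
```

Any error in the first rung propagates to every rung above it, which is why each step’s calibration gets so much scrutiny.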

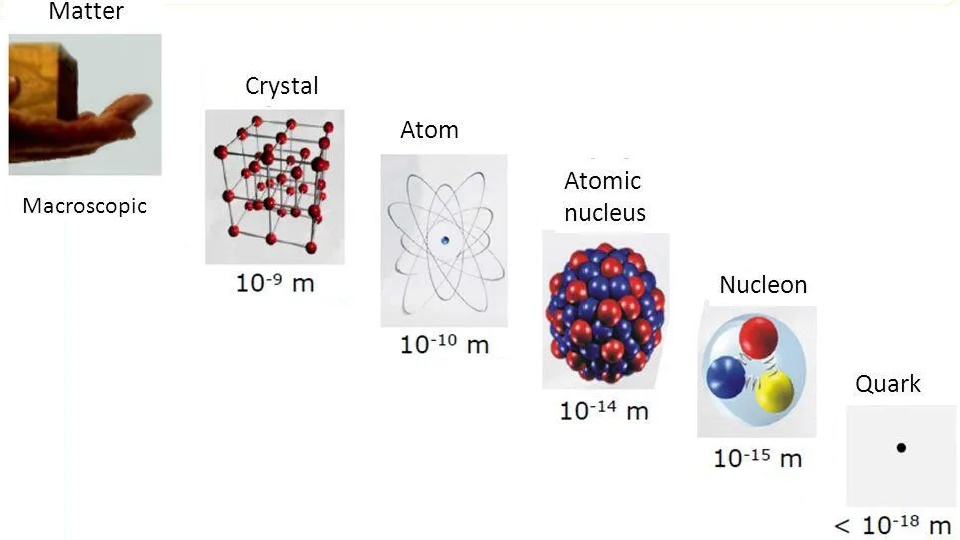

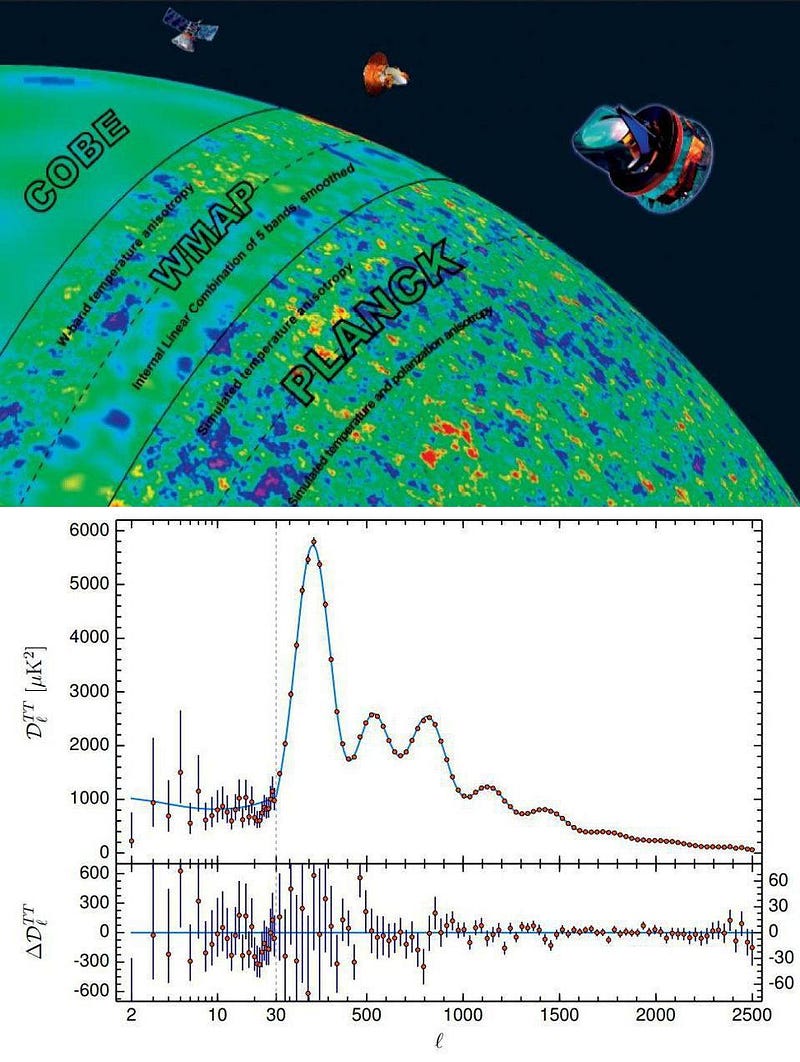

On the other hand, there’s a specific “ruler” that we have in the Universe, too. Not an object like a black hole, neutron star, planet, normal star, or galaxy, mind you, but a particular distance: the acoustic scale. Way, way back in the very early Universe, we had atomic nuclei, electrons, photons, neutrinos, and dark matter, among other ingredients.

The massive stuff — dark matter, atomic nuclei, and electrons — all gravitate, and the regions that have more of this stuff than others will try to pull more matter into them: gravity is attractive. But at early times, the radiation, particularly the photons, has a lot of energy, and as a gravitationally overdense region tries to grow, the radiation streams out of it, causing its energy to drop.

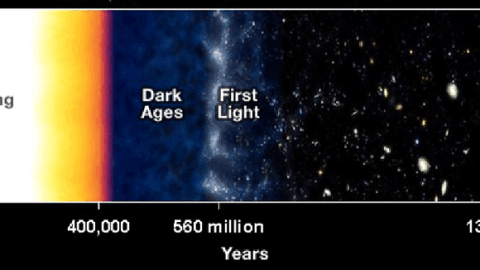

Meanwhile, the normal matter collides with both itself and with the photons, while the dark matter doesn’t collide with anything. At a critical moment, the Universe cools enough so that neutral atoms can form without being blasted apart by the most energetic photons, and this whole process comes to a halt. That “imprint” is left on the face of the CMB: the cosmic microwave background, or the remnant radiation from the Big Bang itself.

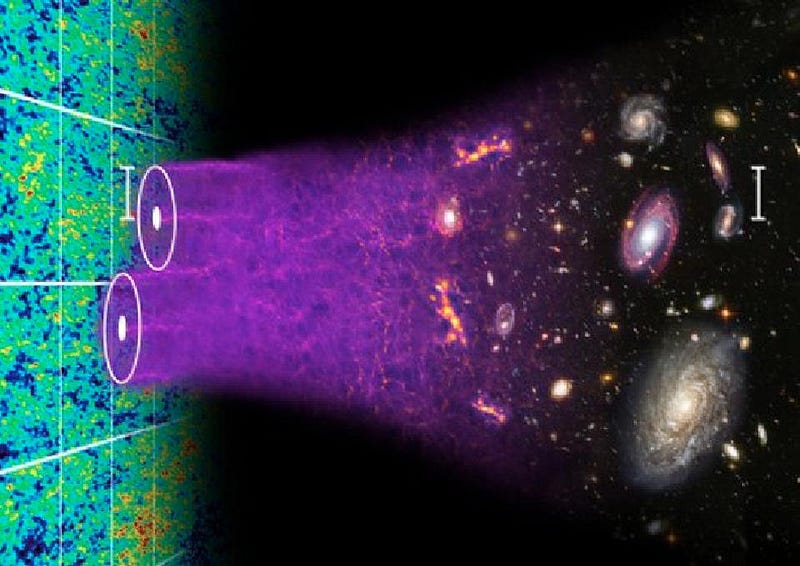

At this moment, which occurs some ~380,000 years after the hot Big Bang, there’s lots of matter that’s falling into overdense regions for the first time. If the Universe remained ionized, those photons would continue streaming out of those overdense regions, pushing back against the matter and washing that structure out. But the fact that it becomes neutral means there’s a “preferred distance scale” in the cosmos, which makes us more likely to find a galaxy at a particular distance from another galaxy, rather than slightly closer or slightly farther away.

Today, that distance is about 500 million light-years: you’re more likely to find a galaxy about 500 million light-years away from another than you are to find one either 400 million or 600 million light-years away. But at earlier times in the Universe, when it had yet to expand to its present size, all of those distance scales were compressed.
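That compression is just the scale factor at work: physical distances at redshift z were smaller by a factor of (1 + z). A minimal sketch, taking the approximate present-day scale of 500 million light-years as given (the helper name is hypothetical); z ≈ 1090 is roughly the redshift at which the CMB was emitted.

```python
ACOUSTIC_SCALE_TODAY_MLY = 500.0  # approximate preferred galaxy separation today, in million light-years

def acoustic_scale_at(z):
    """Proper (physical) size of the preferred separation at redshift z.
    Distances scale with the scale factor a = 1 / (1 + z)."""
    return ACOUSTIC_SCALE_TODAY_MLY / (1 + z)

for z in (0.0, 0.5, 1.0, 3.0, 1090.0):
    print(f"z = {z:6.1f}: about {acoustic_scale_at(z):8.2f} million light-years")
```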

By measuring the clustering of galaxies today and at a variety of distances, as well as by measuring the spectrum of temperature fluctuations and temperature-polarization fluctuations in the CMB, we can reconstruct how the Universe has expanded throughout its history.

This is where we encounter today’s cosmic puzzle. Although there have been disputes over the Hubble constant in the past, the community has never had a more agreed-upon picture than right now. The Hubble Key Project — a distance ladder/standard candle result — taught us that the Universe was expanding at a specific rate: 72 km/s/Mpc, with an uncertainty of about 10%. That means that for every megaparsec (3.26 million light-years) an object is from us, it will appear to recede by an additional 72 km/s, which shows up as part of its measured redshift. The farther away we look, the greater the effect of the expanding Universe.
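Hubble’s law itself is a one-liner. A minimal sketch, assuming the Key Project value of 72 km/s/Mpc as the default and the low-velocity approximation z ≈ v/c (the helper names are hypothetical); for very distant objects this linear relation breaks down and you need the full expansion history.

```python
C_KM_S = 299_792.458   # speed of light in km/s
MLY_PER_MPC = 3.26     # million light-years per megaparsec

def recession_velocity_km_s(distance_mpc, h0=72.0):
    """Hubble's law: v = H0 * d, with H0 in km/s/Mpc and d in Mpc."""
    return h0 * distance_mpc

def low_velocity_redshift(velocity_km_s):
    """For v much smaller than c, the redshift is approximately z = v / c."""
    return velocity_km_s / C_KM_S

d_mpc = 100.0  # hypothetical galaxy distance
v = recession_velocity_km_s(d_mpc)
print(f"{d_mpc * MLY_PER_MPC:.0f} million light-years away: v = {v:.0f} km/s, "
      f"z = {low_velocity_redshift(v):.4f}")
```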

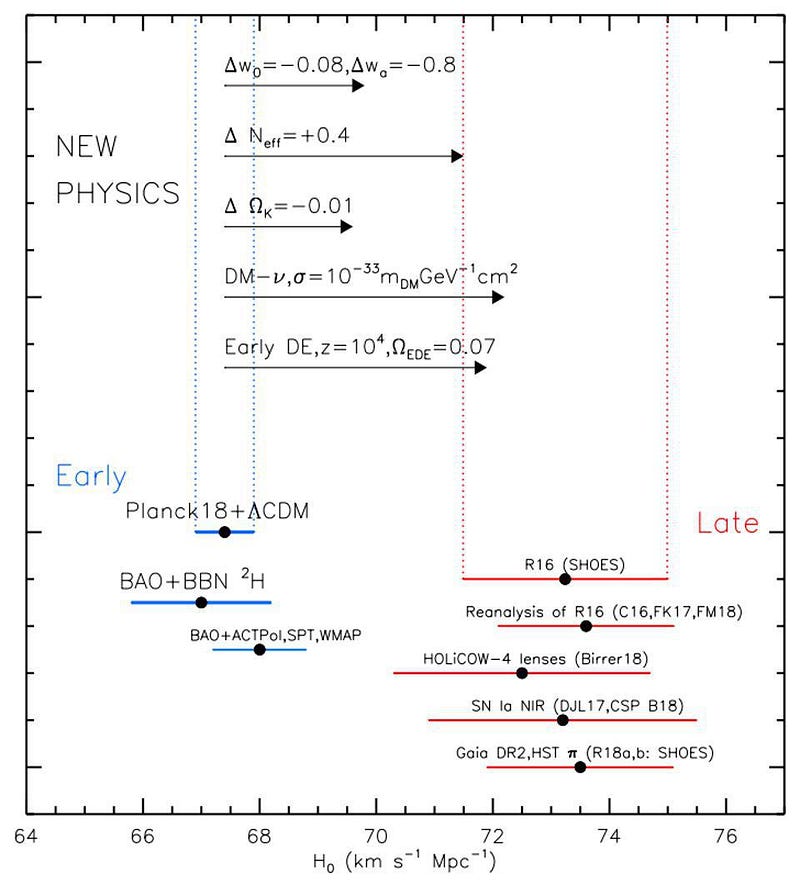

Over the past 20 years, we’ve made a number of important advances: more statistics, greater precision, improved equipment, better understanding of systematics, etc. The distance ladder/standard candle value has shifted slightly, to 74 km/s/Mpc, but the uncertainties are much lower, down to about 2%.

Meanwhile, measurements of the CMB, the CMB’s polarization, and the large-scale clustering of the Universe have poured in, and have given us a different “standard ruler” value: 67 km/s/Mpc, with an uncertainty of just 1%. Each value is internally consistent, but the two are inconsistent with one another, and nobody knows why.
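One way to see why two internally consistent values can still be a genuine problem is to express the gap between them in units of their combined uncertainty. A rough sketch, assuming the quoted percentages are independent 1-sigma Gaussian errors (a simplification; the real analyses are more careful), with a hypothetical helper name: today’s values differ by more than 4 sigma, while the Key Project’s 72 km/s/Mpc with its 10% error bar sits comfortably within 1 sigma of the ruler value.

```python
import math

def tension_sigma(h_a, frac_err_a, h_b, frac_err_b):
    """Difference between two measurements divided by their combined 1-sigma error,
    assuming independent Gaussian uncertainties given as fractions of each value."""
    sigma_a = h_a * frac_err_a
    sigma_b = h_b * frac_err_b
    return abs(h_a - h_b) / math.sqrt(sigma_a ** 2 + sigma_b ** 2)

ladder = (74.0, 0.02)  # distance ladder / standard candle, km/s/Mpc and fractional error
ruler = (67.0, 0.01)   # CMB + large-scale clustering / standard ruler

print(f"today: {tension_sigma(*ladder, *ruler):.1f} sigma")           # → 4.3 sigma
print(f"Key Project: {tension_sigma(72.0, 0.10, *ruler):.1f} sigma")  # → 0.7 sigma
```

Shrinking error bars, not a change in the central values, is what turned a mild disagreement into a tension.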

Unfortunately, the most unproductive thing we can do is one of the most common things that scientists have been doing to one another: accuse the other camp of making an unidentified error.

“Oh, if the acoustic scale is wrong by just ~30 million light-years, the discrepancy goes away.” But the data fixes the acoustic scale to about ten times that precision.

“Oh, lots of values are consistent with the CMB.” But not at the precisions we have; if you force the expansion rate higher, the fits to the data worsen substantially.

“Oh, well, maybe there’s a problem with the distance ladder. Maybe the Gaia measurements will improve our parallaxes. Or maybe the Cepheids are calibrated incorrectly. Or — if you have a new favorite — maybe we mis-estimate the absolute magnitude of supernovae.”

The problem with these arguments is that even if one of them were correct, they wouldn’t eliminate this tension. There are so many independent lines of evidence — beyond Cepheids, beyond supernovae, etc. — that even if we threw out the most compelling evidence for any one result entirely, there are many others to fill in those gaps, and they get the same result. There really are two different sets of answers, depending on how we measure the expanding Universe, and even if there were a serious flaw in the data somewhere, the conclusion wouldn’t change.

For years, people tried to poke every possible hole in the supernova data to try and reach a different conclusion than a dark energy-rich Universe whose expansion was accelerating. In the end, there was too much other data; by 2004 or 2005, even if you ignored all the supernova data altogether, the evidence for dark energy was overwhelming. Today, it’s much the same story: even if you (unjustifiably, mind you) ignored all of the supernova data, there’s too much evidence that supports this dual, but mutually inconsistent, view of the Universe.

We have the Tully-Fisher relation: from rotating spiral galaxies. We have Faber-Jackson and fundamental plane relations: from swarming elliptical galaxies. We have surface brightness fluctuations and gravitational lenses. They all yield the same results as the supernova teams — a faster-expanding Universe — except with slightly less precision. Most importantly, there’s still this unresolved tension with all of the “early relic” (or standard ruler) methods, which give us a slower-expanding Universe.

The problem is still unresolved, with many of the once-proposed solutions already ruled out for a variety of reasons. With more and better data than ever before, it’s becoming clear that this is not a problem that will go away even if a major error is suddenly identified. We have two fundamentally different ways of measuring the Universe’s expansion, and they disagree with each other. Perhaps the most frightening option is this: that everyone is right, and the Universe is surprising us once more.

Starts With A Bang is written by Ethan Siegel, Ph.D., author of Beyond The Galaxy, and Treknology: The Science of Star Trek from Tricorders to Warp Drive.