How uncertain are LIGO’s first gravitational wave detections?

Did they perform their analysis sub-optimally? Maybe. But gravitational waves were seen no matter what.

“We hope that interested people will repeat our calculations and will make up their own minds regarding the significance of the results. It is obvious that “belief” is never an alternative to “understanding” in physics.”

–J. Creswell et al.

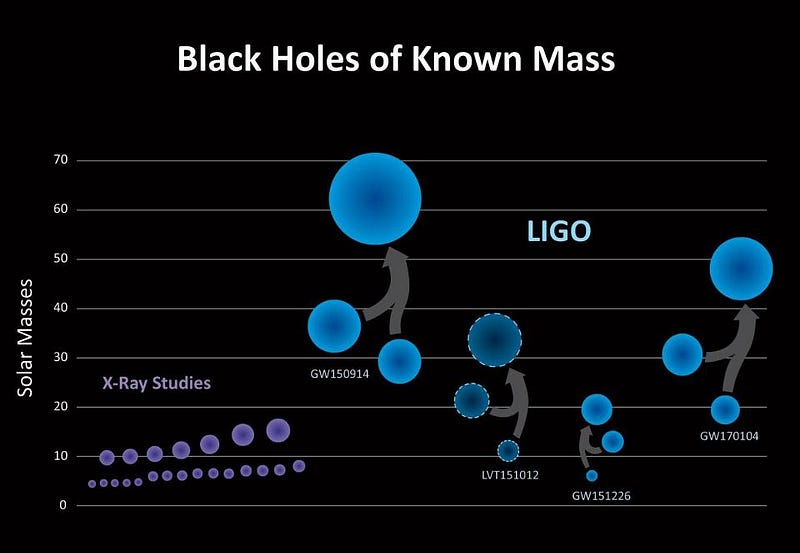

On September 14, 2015, two black holes of 36 and 29 solar masses merged together over a billion light years away. In the inspiral and merger process, about 5% of their mass was converted into pure energy. It wasn’t energy the way we’re used to it, though, where photons carry it away in the form of electromagnetic radiation. Rather, it was gravitational radiation, where waves ripple through the fabric of space itself at the speed of light. The ripples were powerful enough that, even from that distance, they stretched and compressed the entire Earth by a tiny fraction of the width of a single atom, allowing the LIGO apparatus to directly detect gravitational waves for the first time. This confirmed Einstein’s General Relativity in an entirely new way, but a new study has cast doubt on whether the detection is as robust as the LIGO team claims it is. Despite a detailed response from a member of the LIGO collaboration, doubts remain, and the issue deserves an in-depth analysis for everyone to ponder.

There have been plenty of instances in science, even in just the past few years, where an incredible experiment brought back results that failed to hold up. Sometimes, it’s because there’s insufficient data, and increased statistics show that what we hoped was a new particle or a bona fide signal was just a random fluctuation. But other times, the data is great, and there’s simply an error in how the data was analyzed. Over the past 15 years, reports have come out claiming:

- the Universe was reionized twice in the earliest stages,

- that the spectrum of density fluctuations indicated that slow-roll inflation was wrong,

- that neutrinos moved faster than light,

- and that the polarization of light from the cosmic microwave background showed evidence for gravitational waves from inflation.

These results were incredible, revolutionary, and wrong. And they had something in common: they were all based on data that was analyzed incorrectly.

It’s not so much that the analysis teams themselves were mistake-prone, although that’s an easy conclusion to draw. Rather, there was a problem with how the data that was collected (data that was very, very good and valuable) was calibrated. In the first two cases, there were foreground emissions from the galaxy that were incorrectly attributed to the cosmic microwave background. In the third case, a loose cable caused a systematic shift in the measured time-of-flight of neutrinos. And in the final case, the polarization data was misinterpreted by a team that was working with incomplete information. In physics, it’s important to get every detail correct, especially when your results have the potential to revolutionize what we know.

Of course, sometimes huge breakthroughs are absolutely correct. Every experiment or observatory that operates will collect data, and that data comes from two separate sources: signal and noise. The signal is what you’re attempting to measure, while the noise is what simply exists as background, and must be tuned out appropriately. For telescopes, there are errant photons; for detectors, there are natural backgrounds; for gravitational wave observatories, there’s the vibration of the Earth itself and noise inherent to the experimental apparatus. If you understand your noise perfectly, you can subtract 100% of it — no more, no less — and be left with only the signal. This process is how our greatest discoveries and advances have been made.

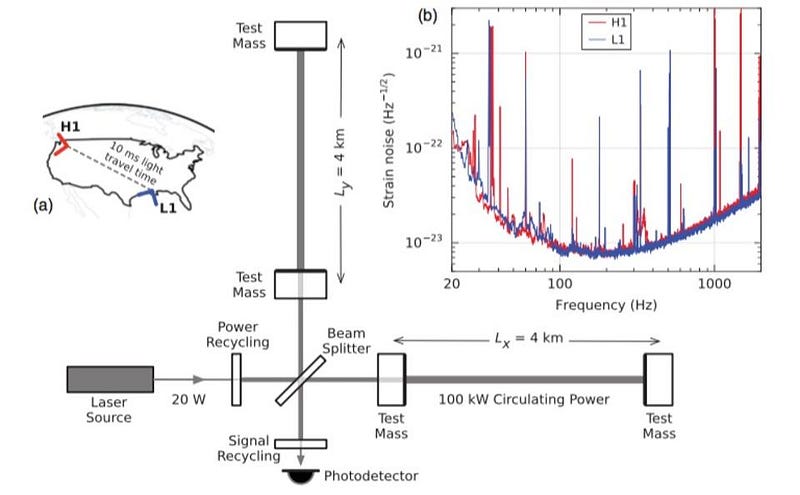

The worry, of course, is that if you subtract the noise incorrectly, you wind up with a false signal, or a combination of the actual signal and some noise that alters your findings. Because the idea behind LIGO is straightforward and simple, but the execution of LIGO is incredibly complex, there’s a worry that perhaps some noise has made it into the perceived signal. In principle, LIGO simply splits a laser down two perpendicular paths, reflects them a bunch of times, brings them back together, and produces an interference pattern. When one (or both) of the path lengths change in size due to a passing gravitational wave, the interference pattern changes, and therefore a signal emerges by measuring the shifting of the interference pattern over time.
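To get a feel for how small the effect on the interference pattern is, here is a minimal sketch of an idealized Michelson interferometer. The numbers are assumptions for illustration, not values taken from this article: a strain of 1e-21, 4 km arms, a 1064 nm laser, an optimally oriented wave, and a hypothetical `n_round_trips` parameter standing in for the "bunch of times" the light is reflected. The real instrument is far more elaborate than this.

```python
import math

def differential_phase(strain, arm_length_m, wavelength_m, n_round_trips=1):
    """Phase difference (radians) between the two arms caused by a passing wave.

    Assumes an optimally oriented wave, so one arm lengthens by strain*L/2
    while the other shortens by the same amount (differential change strain*L).
    Each round trip traverses the arm twice, hence the factor 4*pi.
    """
    delta_l = strain * arm_length_m
    return 4.0 * math.pi * n_round_trips * delta_l / wavelength_m

def output_fraction(phase_offset, dphi=0.0):
    """Fraction of the input power reaching the output port of an ideal Michelson."""
    return math.sin(0.5 * (phase_offset + dphi)) ** 2

# Illustrative (assumed) values: strain 1e-21, 4 km arms, 1064 nm light.
dphi_single = differential_phase(1e-21, 4000.0, 1064e-9)
dphi_many = differential_phase(1e-21, 4000.0, 1064e-9, n_round_trips=300)
print("phase shift, one round trip: ", dphi_single, "rad")
print("phase shift, 300 round trips:", dphi_many, "rad")
```

Even with many round trips, the phase shift in this toy model is a minuscule fraction of a radian, which is why understanding the noise matters so much.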

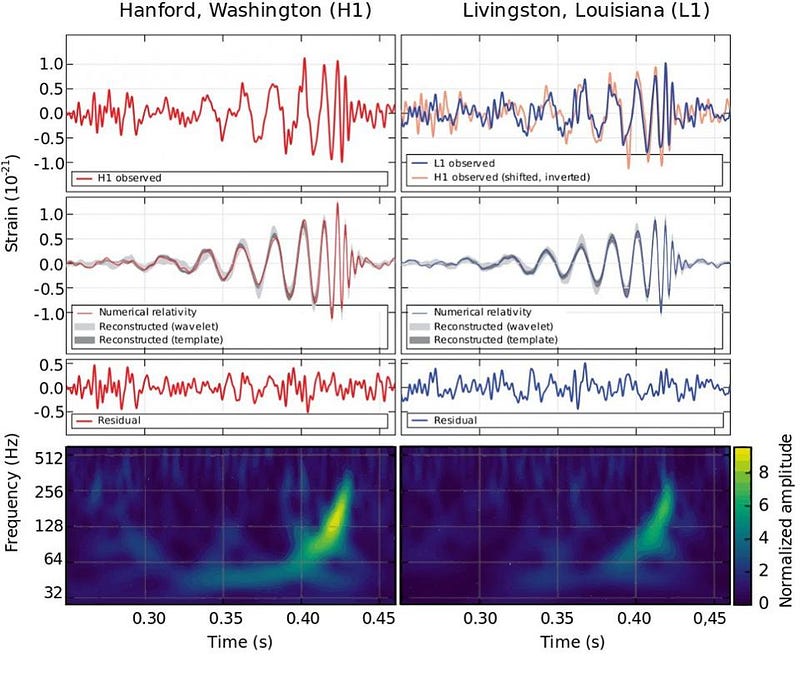

This extracted signal is what’s led to LIGO’s three detections (and one almost-detection), and yet they’ve been just barely over the threshold for a bona fide discovery. This isn’t a flaw in the concept of LIGO, which is brilliant, but rather in the large amount of noise that the collaboration has worked spectacularly to understand. The source of the recent controversy is that a group from Denmark has taken LIGO’s public data, their public procedure, and executed it for themselves. But when they analyzed the removed noise, they found that there were correlations between the noise found in the two detectors, which shouldn’t be the case! Noise is supposed to be random, and so if the noise is correlated, there’s a danger that what you’re calling your extracted signal may actually be contaminated by noise.
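The kind of check described here can be sketched with toy data. The example below is not the Danish team's code and does not use LIGO data. It generates two synthetic "residual" streams, once fully independent and once sharing a hidden common component that arrives about 7 milliseconds later (and with opposite sign) in the second stream, then measures the correlation between them as a function of time lag. The 4096 Hz sample rate, the 0.5 amplitude, and all names are assumptions chosen for illustration.

```python
import numpy as np

def lagged_correlation(a, b, max_lag):
    """Pearson correlation between a[t] and b[t + lag] for each integer lag."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n = a.size
    lags = np.arange(-max_lag, max_lag + 1)
    corr = np.empty(lags.size)
    for i, lag in enumerate(lags):
        if lag >= 0:
            x, y = a[:n - lag], b[lag:]
        else:
            x, y = a[-lag:], b[:n + lag]
        corr[i] = np.corrcoef(x, y)[0, 1]
    return lags, corr

rng = np.random.default_rng(0)
fs = 4096                 # assumed sample rate in Hz
n = 2 * fs                # two seconds of toy data
lag_true = 29             # about 7 ms at 4096 Hz

common = rng.standard_normal(n)          # hidden shared component
independent_a = rng.standard_normal(n)   # truly independent "noise"
independent_b = rng.standard_normal(n)
contaminated_a = independent_a + 0.5 * common
contaminated_b = independent_b - 0.5 * np.roll(common, lag_true)

lags, clean = lagged_correlation(independent_a, independent_b, 40)
_, dirty = lagged_correlation(contaminated_a, contaminated_b, 40)

print("independent: largest |correlation| =", np.max(np.abs(clean)))
print("contaminated: dip of", dirty.min(), "at lag", lags[np.argmin(dirty)],
      "samples =", 1000.0 * lags[np.argmin(dirty)] / fs, "ms")
```

For truly independent streams the correlation stays near zero at every lag, while the shared component shows up as a dip at the lag where it was injected. Whether a feature like that in real residuals comes from leftover signal, from shared noise, or from the analysis itself is exactly what is in dispute.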

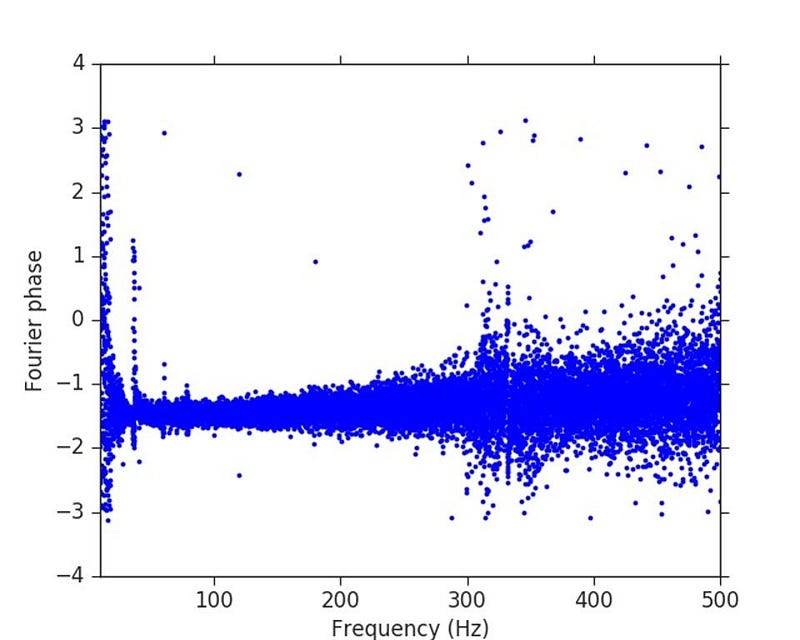

Is the Denmark group correct? Or is there a flaw in their work, and is LIGO’s original analysis free of this potential problem? Ian Harry, a member of the LIGO collaboration, wrote a response in which he demonstrated how easy it is to perform the noise subtraction and analysis incorrectly, in a way that produces a noise correlation eerily similar to what the Denmark team found. In particular, he produced the picture below.

It’s a reproduction of the analysis he believes the Denmark team performed, and that they performed incorrectly. His explanation was as follows:

For Gaussian noise we would expect the Fourier phases to be distributed randomly (between -pi and pi). Clearly in the plot shown above, and in Creswell et al., this is not the case. However, the authors overlooked one critical detail here. When you take a Fourier transform of a time series you are implicitly assuming that the data are cyclical (i.e. that the first point is adjacent to the last point). For colored Gaussian noise this assumption will lead to a discontinuity in the data at the two end points, because these data are not causally connected. This discontinuity can be responsible for misleading plots like the one above.
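The effect described in that explanation can be reproduced with a minimal sketch. This is not Harry's code, and the choices here are assumptions made for illustration: the "colored" noise is white Gaussian noise integrated twice, the remedy shown is a Hann window that tapers the data to zero at both ends, and `phase_concentration` is a hypothetical helper that returns a number near 0 when the Fourier phases are spread uniformly and near 1 when they all cluster together.

```python
import numpy as np

def red_noise(n, rng):
    """Steeply colored Gaussian noise: white noise integrated twice."""
    return np.cumsum(np.cumsum(rng.standard_normal(n)))

def phase_concentration(x, window=None, kmin=20, kmax=None):
    """Mean resultant length of the Fourier phases in bins kmin..kmax-1.

    Near 0 for uniformly scattered phases, near 1 if the phases cluster.
    """
    x = np.asarray(x, dtype=float)
    if window is not None:
        x = x * window
    if kmax is None:
        kmax = x.size // 8
    phases = np.angle(np.fft.rfft(x)[kmin:kmax])
    return np.abs(np.mean(np.exp(1j * phases)))

n = 4096
# Periodic Hann window: smoothly forces both ends of the data to zero.
hann = 0.5 - 0.5 * np.cos(2.0 * np.pi * np.arange(n) / n)

raw, windowed = [], []
for seed in range(20):
    x = red_noise(n, np.random.default_rng(seed))
    raw.append(phase_concentration(x))                 # end points mismatch
    windowed.append(phase_concentration(x, window=hann))

print("median concentration, no window:  ", np.median(raw))
print("median concentration, Hann window:", np.median(windowed))
```

Without the window, the jump between the last and first samples dominates the transform and the phases line up, even though the underlying noise is perfectly Gaussian. With the window applied, the phases in this toy example scatter as expected.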

Case closed? Only if this is what the Denmark team actually did.

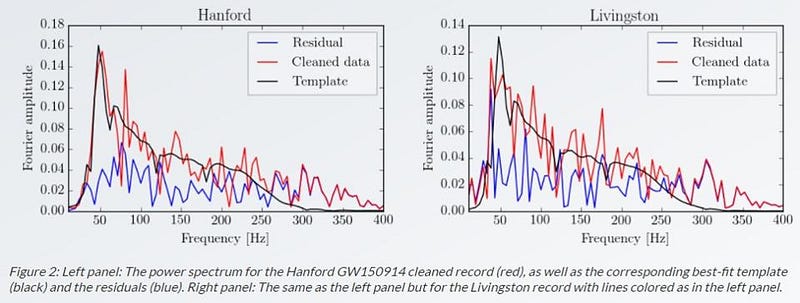

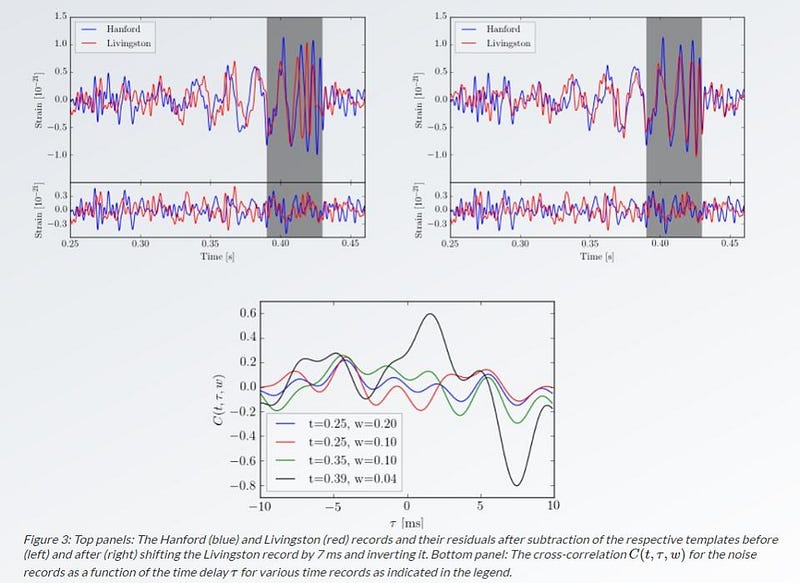

But according to the Danish team, they did not. In fact, they wrote a response to Ian Harry’s comment, where they graciously thanked him for access to his computer code, and worked with it to re-perform their analysis. The whole point of this, by the way, is not to claim that LIGO may have falsely detected gravitational waves. Even in the most extreme scenario, where there is noise contaminating the results seen between both detectors, a strong gravitational wave signal, one that matches the template for black hole mergers, still appears. The worry, rather, is that the noise has been dealt with sub-optimally, and that perhaps some of the signal has been subtracted out while some of the noise has been left in. When the Danes performed their full analysis, building off the methodology of LIGO, that’s what they were forced to conclude.

Quite clearly, there is a signal that goes way beyond noise, and it appears independently in both detectors. But also of note is the black curve in the bottom graph above, which shows the noise correlations between two detectors. In particular, the big “dip” at +7 milliseconds correlates with the time at which the gravitational wave signal emerges, and this is what the Danish team wants to focus on. As they say explicitly:

The purpose in having two independent detectors is precisely to ensure that, after sufficient cleaning, the only genuine correlations between them will be due to gravitational wave effects. The results presented here suggest this level of cleaning has not yet been obtained and that the identification of the GW events needs to be re-evaluated with a more careful consideration of noise properties.

And this is something that I think everyone is taking seriously: making sure that what we’re subtracting off and calling “noise” is actually 100% noise (or as close as possible to it), while what we’re keeping as “signal” is actually 100% signal with 0% noise. It’s never possible, in practice, to do this exactly, but that’s the goal.

What’s vital to understand is that no one can rightfully claim that LIGO is wrong; at most, one team can claim that LIGO has room for improvement in its analysis. And this is a very real danger that has plagued experimental physicists and astronomical observers for as long as those scientific fields have existed. The issue is not that LIGO’s results are in doubt, but rather that LIGO’s analysis may be imperfect.

What you’re witnessing is one small aspect of how the scientific process plays out in real-time. It’s a new development (and one that makes many uncomfortable) to see it partially playing out on the internet and on blogs, rather than in scientific journals exclusively, but this is not necessarily a bad thing. If not for the original piece that drew significant attention to the Danish team’s work, it is possible that this potential flaw might have continued to be ignored or overlooked; instead, it’s an opportunity for everyone to make sure the science is as robust as possible. And that’s exactly what’s happening. The Danish team may still be making an error somewhere, meaning this whole exercise will be a waste of time, but it’s also possible that the analysis techniques will be improved as a result. Until this finishes playing out, we won’t know, but this is what scientific progress unfolding looks like!

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.