How do we know the distance to the stars?

Everything depends on it, and yet we don’t know it as well as we’d like!

“Exploration is in our nature. We began as wanderers, and we are wanderers still. We have lingered long enough on the shores of the cosmic ocean. We are ready at last to set sail for the stars.” –Carl Sagan

To look out at the night sky and marvel at the seemingly endless canopy of stars is one of the oldest and most enduring human experiences we know of. Since antiquity, we’ve gazed towards the heavens and wondered at the faint, distant lights in the sky, curious as to their nature and their distance from us. As we’ve come to more modern times, one of our cosmic goals is to measure the distances to the faintest objects in the Universe, in an attempt to uncover the truth about how our Universe has expanded from the Big Bang until the present day. Yet even that lofty goal depends on getting the distances right to our nearest galactic neighbors, a process we’re still refining. We’ve taken three great steps forward in our quest to measure the distance to the stars, but we’ve still got further to go.

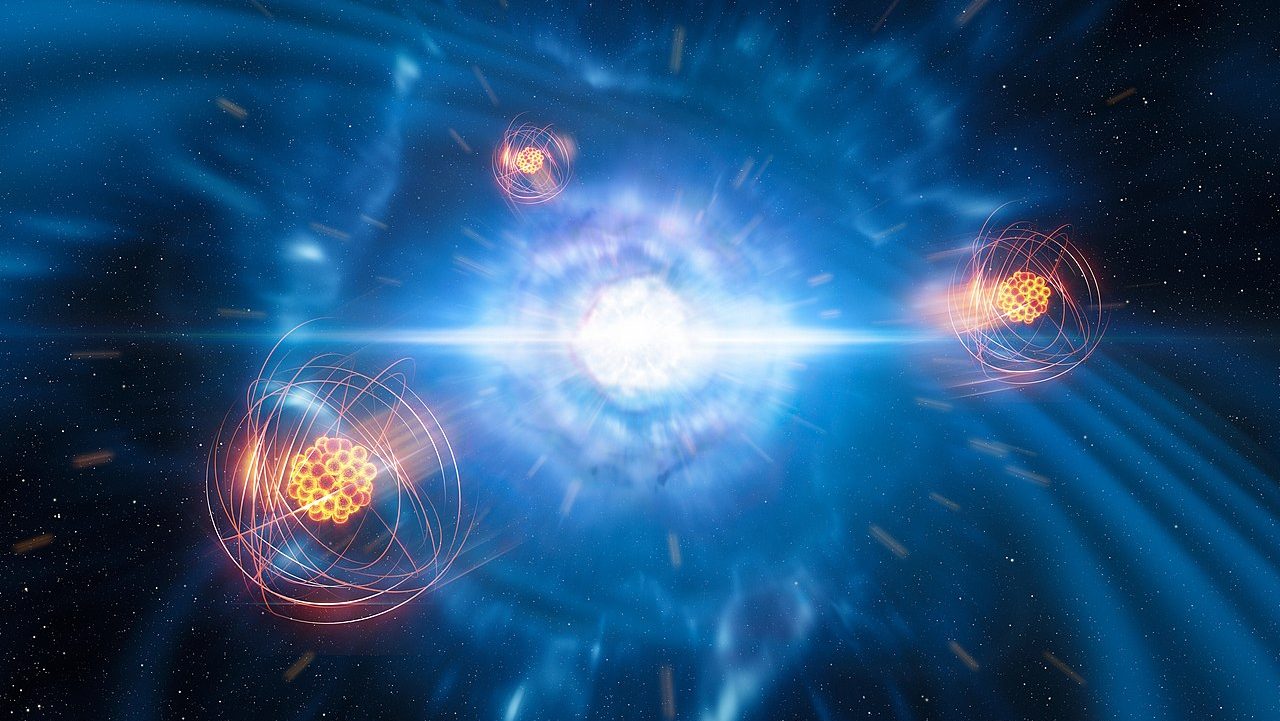

The story starts in the 1600s with the Dutch scientist, Christiaan Huygens. Although he wasn’t the first to theorize that the faint, nighttime stars were Suns like our own that were simply incredibly far away, he was the first to attempt to measure their distance. An equally bright light that was twice as far away, he reasoned, would only appear one quarter as bright. A light ten times as distant would be just one hundredth as bright. And so if he could measure the brightness of the brightest star in the night sky — Sirius — as a fraction of the brightness of the Sun, he could figure out how much more distant Sirius was than our parent star.

He began by drilling holes in a brass disk, allowing just a tiny pinhole of sunlight through, then comparing its apparent brightness with the observed brightness of the stars at night. Even the smallest hole he could possibly manufacture resulted in a blip of sunlight that far outshone all the stars, so he additionally masked it with beads of varying opacities. Finally, after reducing the brightness of the Sun by a factor of approximately 800 million, he calculated that the brightest star in the sky, Sirius, must be 28,000 times as distant as the Sun. That would place it 0.44 light years away; had Huygens known that Sirius is intrinsically 25.4 times as bright as our Sun, he could have come up with a reasonably good distance estimate just from this most primitive of methods.
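Huygens's reasoning can be sketched in a few lines of Python. This is only an illustration of the inverse-square arithmetic described above, not a reconstruction of his actual procedure; the function name `distance_ratio` and the constant `AU_PER_LIGHT_YEAR` are names chosen here for the example.

```python
import math

# Number of Earth-Sun distances (astronomical units) in one light year.
AU_PER_LIGHT_YEAR = 63_241.1


def distance_ratio(dimming_factor, luminosity_ratio=1.0):
    """How many times farther away than the Sun a star must be.

    dimming_factor:   how many times fainter the star appears than the Sun.
    luminosity_ratio: the star's intrinsic brightness relative to the Sun
                      (1.0 reproduces Huygens's assumption of a Sun-like star).

    Apparent brightness falls off as 1 / distance**2, so the distance ratio
    is the square root of (dimming_factor * luminosity_ratio).
    """
    return math.sqrt(dimming_factor * luminosity_ratio)


# Huygens's case: the Sun dimmed by roughly 800 million, Sirius assumed Sun-like.
sun_like = distance_ratio(800e6)
print(f"{sun_like:,.0f} times the Sun's distance")
print(f"{sun_like / AU_PER_LIGHT_YEAR:.2f} light years")

# The same measurement, allowing for Sirius being 25.4 times as luminous.
corrected = distance_ratio(800e6, luminosity_ratio=25.4)
print(f"{corrected / AU_PER_LIGHT_YEAR:.2f} light years with the luminosity correction")
```

The square root is the whole trick: a light twice as far away is a quarter as bright, so the distance estimate grows only as the square root of both the dimming factor and the intrinsic brightness.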

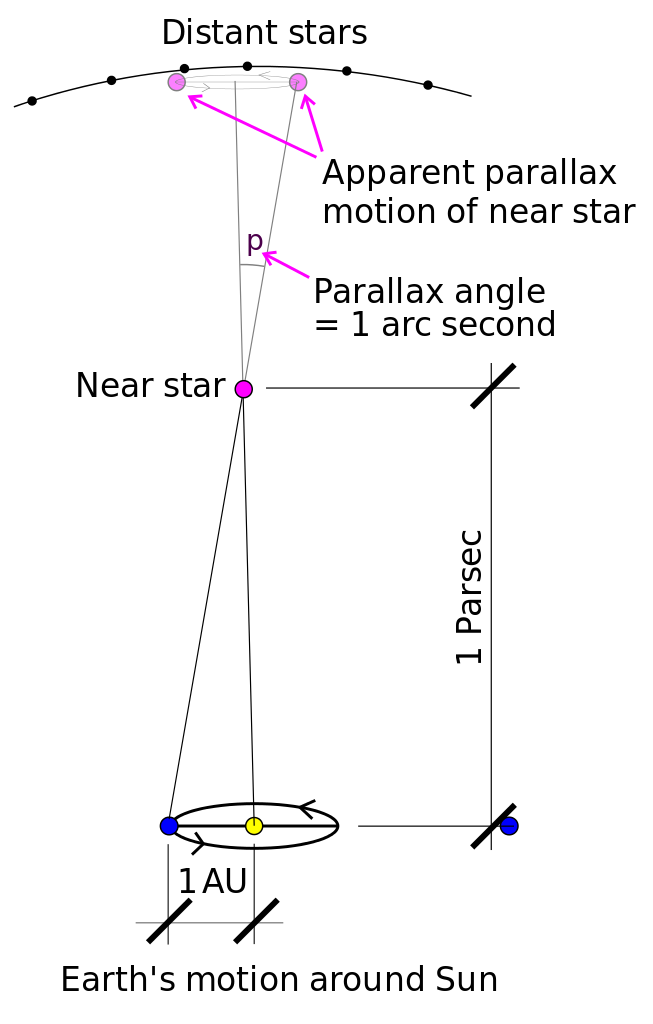

In the 1800s, we made another huge leap forward. Heliocentrism, the notion that the Earth orbits the Sun, combined with improvements in telescope technology to let us consider, for the first time, directly measuring the geometrical distance to one of these stars. No assumptions about what type of star it was, or about its luminous properties, were required any longer. Instead, the same geometry that makes your thumb appear to shift when you hold it at arm’s length, close one eye and then switch eyes allowed us to measure the distances to the stars.

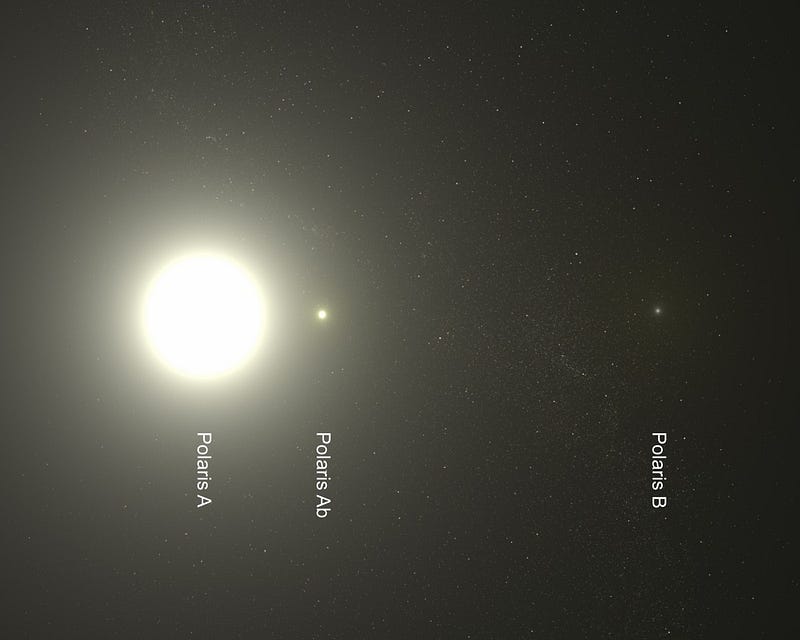

This effect is known as parallax. Because our planet’s orbit around the Sun is some 300 million kilometers in diameter, if we view the stars today versus six months from now, we’ll see the closest stars appear to shift position in the sky relative to the other, more distant stars. By measuring how a star’s apparent position shifts over an Earth year, in a periodic pattern, we could simply construct a triangle and figure out its distance from us. The first success came from Friedrich Bessel in 1838, who measured the star 61 Cygni, and he was immediately followed by Friedrich Struve and Thomas Henderson, who measured the distances to Vega and Alpha Centauri, respectively. (Interestingly, Henderson could have been the first to get there, but he was afraid his data was wrong, and so he sat on it for years until Bessel eventually scooped him!) This was a more direct method that led to much more accurate results. But even this came with problems.
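The triangle in question is simple enough to write down. The sketch below assumes the usual convention that the parallax angle is half of the total six-month shift, so the baseline is the radius of Earth's orbit (about 150 million kilometers) rather than its diameter. The function name and the 0.3 arcsecond input are purely illustrative, not a measured value for any particular star.

```python
import math

AU_KM = 149_597_870.7        # radius of Earth's orbit (one astronomical unit), in km
LIGHT_YEAR_KM = 9.4607e12    # one light year, in km


def parallax_distance_km(parallax_arcsec, baseline_km=AU_KM):
    """Distance to a star from its parallax angle.

    parallax_arcsec: half of the star's total apparent shift over six months,
                     in arcseconds (1 arcsecond = 1/3600 of a degree).
    baseline_km:     the sideways displacement of the observer; by convention
                     the radius of Earth's orbit.

    The star, the Sun and the Earth form a long, thin right triangle, so
    distance = baseline / tan(angle).
    """
    angle_rad = math.radians(parallax_arcsec / 3600.0)
    return baseline_km / math.tan(angle_rad)


# An illustrative (hypothetical) parallax of 0.3 arcseconds.
distance_ly = parallax_distance_km(0.3) / LIGHT_YEAR_KM
print(f"{distance_ly:.1f} light years")
```

Because the angles are so small, halving the parallax doubles the distance, which is why tiny errors in the measured angle matter so much for the more distant stars.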

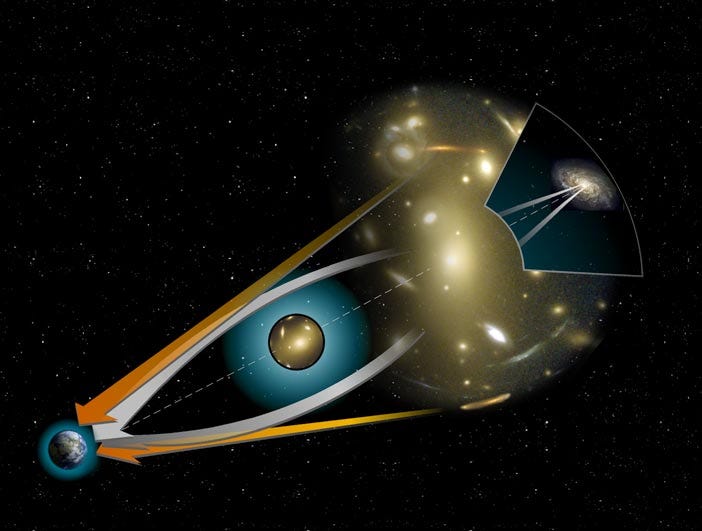

The 20th century brought with it the physics of General Relativity, and a revolution all its own. The realization that mass itself causes curvature in the fabric of spacetime meant that the locations of the different masses, both in our Solar System and beyond, warp the apparent positions of these stars in different ways as the months and years tick by. Although the warping is incredibly small, the differences in position we are trying to measure are tiny too: minuscule fractions of a thousandth of a degree. Understanding this bending of starlight helps us obtain more accurate results than simple geometric parallax alone, but our lack of a complete mass map of the Solar System and galaxy makes this a difficult undertaking.

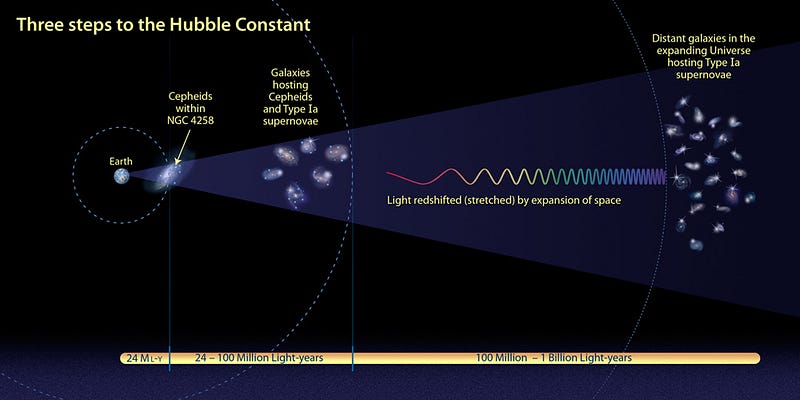

Today, our understanding of the expanding Universe depends on measuring cosmic distances extraordinarily precisely. Yet the closest rungs on that cosmic distance ladder, for stars like Cepheid variables within our own galaxy, are dependent on this parallax method. If there’s an error of just a few percent on those measurements, then those errors will propagate all the way to the greatest distances, and this is one potential resolution to the tensions in the measurements of the Hubble constant. We’ve come a long way in measuring cosmic distances to an incredible precision, but we’re not 100% certain that our best methods are as accurate as we need them to be. Perhaps, after four centuries of trying to measure how far away the nearest stars truly are, we still have farther to go.
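To see why a few percent matters, here is a deliberately simplified sketch. It assumes that every rung of the distance ladder is calibrated against the one below it, so a fractional error in the nearby parallax distances rescales all the larger distances by the same factor, and that the expansion rate is inferred as recession velocity divided by distance, with velocities measured independently. The function name is hypothetical and real analyses are far more involved.

```python
def expansion_rate_shift(distance_error_fraction):
    """Fractional change in an expansion rate inferred as velocity / distance
    when every distance is scaled by (1 + distance_error_fraction)."""
    return 1.0 / (1.0 + distance_error_fraction) - 1.0


# Overestimating the nearby distances by 1%, 3% and 5%.
for error in (0.01, 0.03, 0.05):
    shift = expansion_rate_shift(error)
    print(f"distances off by {error:+.0%} -> inferred expansion rate off by {shift:+.1%}")
```

Under these assumptions the error passes almost one-for-one from the nearest stars to the inferred expansion rate, with the opposite sign.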

This post first appeared at Forbes.