We need another particle accelerator. Don’t let these 5 myths fool you

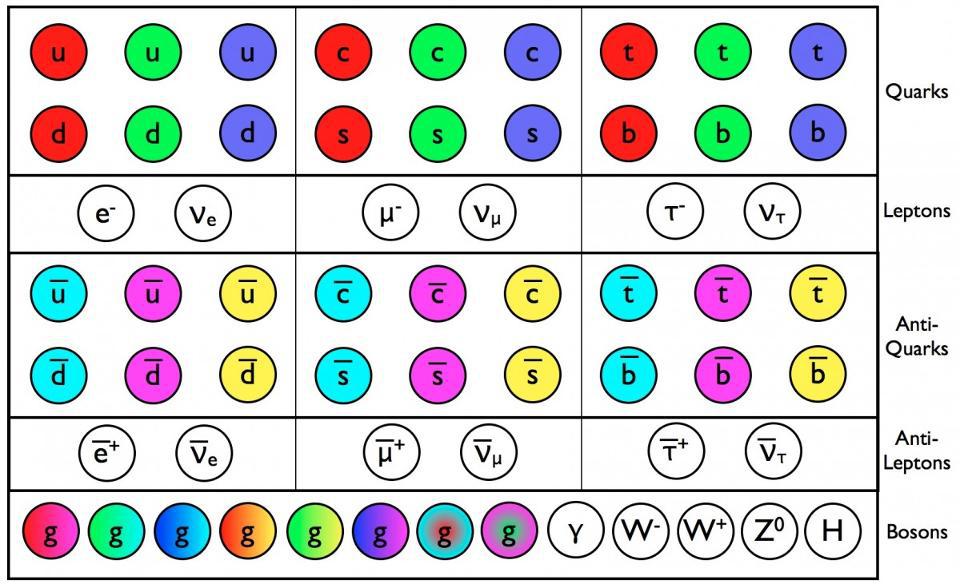

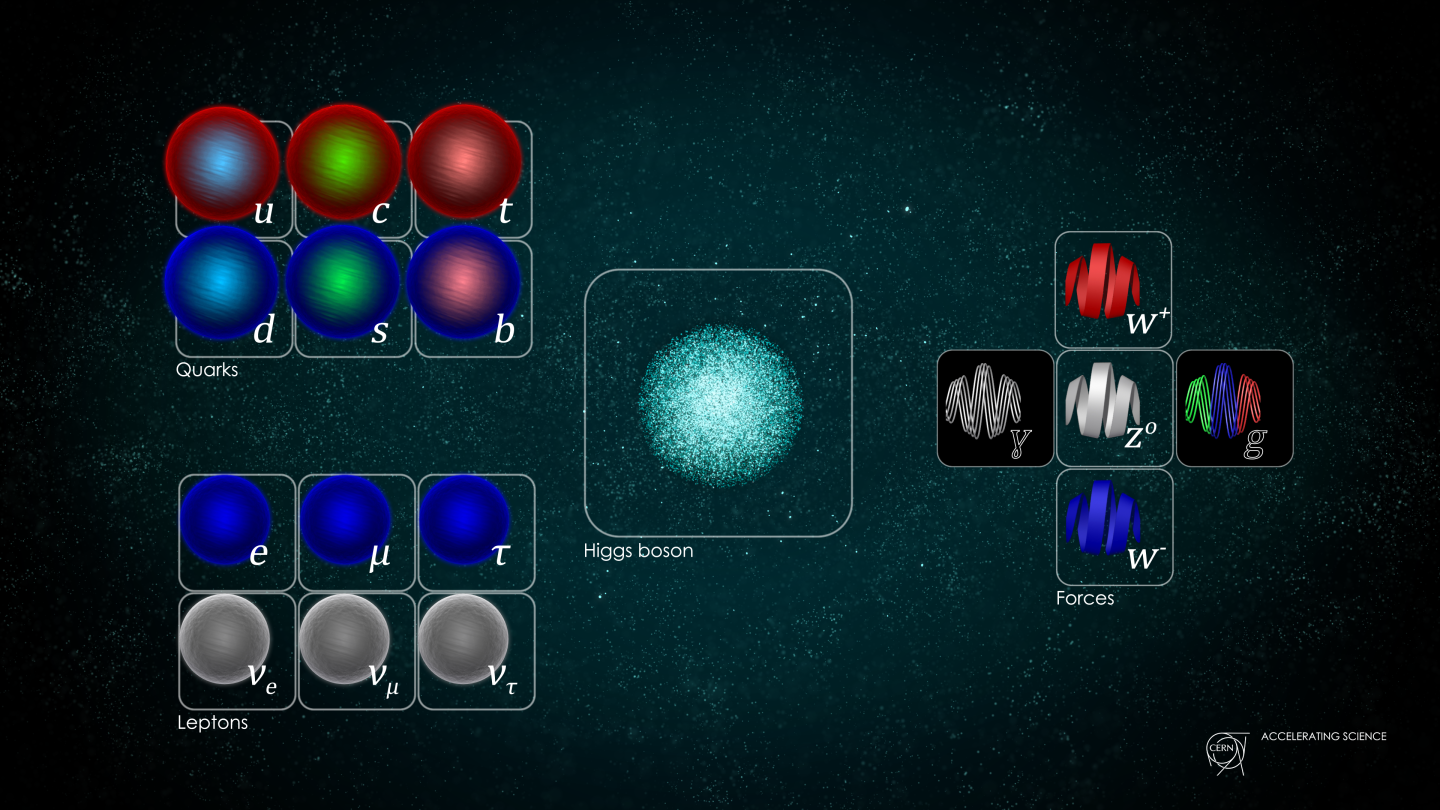

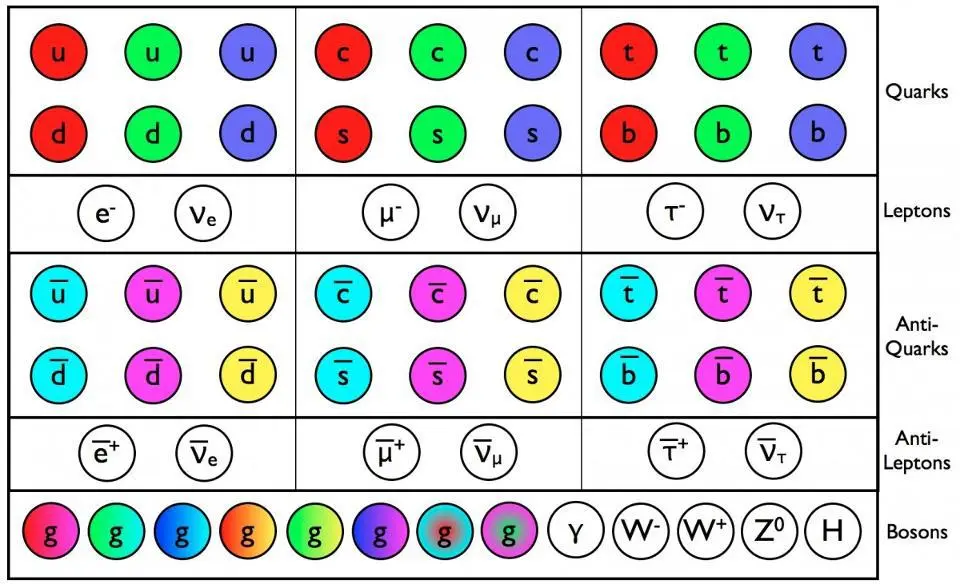

- Perhaps you’ve heard: the Large Hadron Collider at CERN found the Higgs boson a decade ago, completing the so-called “particle zoo” that makes up the Standard Model.

- Despite the promise of ideas like string theory and supersymmetry, no new, unexpected particles turned up at the Large Hadron Collider, including those related to dark matter, extra dimensions, or other exotic physics.

- This has been sold, alternately, as a “failure,” a “defeat,” or “the end of particle physics.” It’s none of those, and you shouldn’t believe any such assertion without knowing what’s really going on.

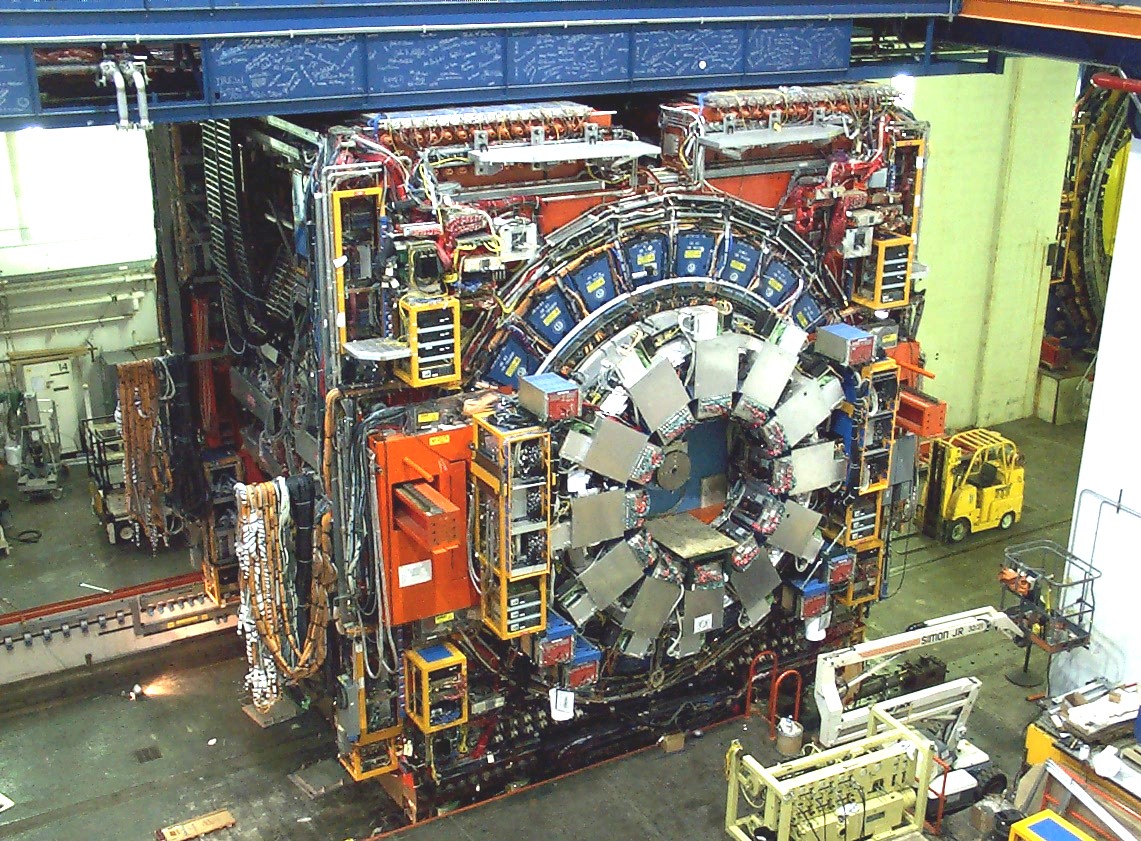

Here in early July of 2022, the world’s most powerful, most successful particle collider of all time is smashing its own records. Having just completed a series of upgrades, it’s now colliding protons into protons:

- at higher energies (13.6 TeV),

- at faster speeds (299,792,455.15 m/s, just 2.85 m/s shy of the speed of light),

- with more tightly packed beams, which greatly increase the rate of collisions,

- and with all four detectors working faster and more precisely than ever before.
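The speed quoted above follows from special relativity. As a rough sketch, assume each beam carries half of the 13.6 TeV collision energy (6.8 TeV) and take an approximate proton rest energy of 0.93827 GeV (a standard value not stated in this article). The Lorentz factor is then γ = E / (m c²), and the speed is v = c√(1 − 1/γ²):

```python
import math

# Assumed constants (standard values, not given in the article)
C = 299_792_458.0          # speed of light in m/s (exact by definition)
PROTON_MASS_GEV = 0.93827  # proton rest energy in GeV (approximate)


def proton_speed(beam_energy_gev: float) -> float:
    """Return the speed in m/s of a proton whose total energy is beam_energy_gev."""
    gamma = beam_energy_gev / PROTON_MASS_GEV  # Lorentz factor from E = gamma * m c^2
    beta = math.sqrt(1.0 - 1.0 / gamma**2)     # beta = v / c
    return beta * C


# 13.6 TeV is the combined energy of two colliding beams, so each beam has 6.8 TeV
v = proton_speed(6800.0)
print(f"speed: {v:.2f} m/s, shortfall from c: {C - v:.2f} m/s")
```

With these inputs the shortfall comes out at roughly 2.85 m/s, consistent with the figure above; because γ is over 7,000, even a tiny change in beam energy barely moves the result.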

This new run successfully started on July 5th, kicking off with an announcement of the discovery of three new types of composite particles: one pentaquark species and two new tetraquark species.

And yet, you’d never know it from reading the news. Instead, the topics that people are talking about are dominated by two baseless assertions:

- that these experiments somehow are a threat to Earth and/or the Universe (no, they’re not),

- and that, due to its failure to find any new fundamental particles not predicted by the Standard Model, the Large Hadron Collider (i.e., the LHC) should be humanity’s last cutting-edge particle accelerator.

While it’s very easy for physicists to demonstrate that the first scenario has no basis in reality, the truth is struggling to penetrate the public consciousness in the second case, likely because of five widespread myths that have been amplified and repeated at length. Here are those myths, along with the reality that refutes each of them.

Myth #1: the motivation for particle colliders is rooted in ideas like string theory, supersymmetry, extra dimensions, grand unified theories, and particle dark matter.

This is a tale that almost everyone has heard by now: that theoretical physicists have led themselves down a blind alley, have overcommitted to it despite a lack of supporting evidence, and are simply moving the goalposts toward higher and higher energies to keep these unsupported ideas from being ruled out. There’s a kernel of truth to this, in the sense that, as a theorist, you never say, “We didn’t find evidence where we were expecting it, and therefore the idea is wrong.”

Instead, you say, “We didn’t find evidence where we were hoping it would be, and therefore, we can place constraints on the relevance of this idea for our physical Universe. The best constraints we can infer apply to this particular regime, but not these other ones.” Yes, it’s true that you can find overly optimistic theorists advocating for their favorite ideas (string theory, supersymmetry, grand unification, and extra dimensions among them), but these are not the leading motivations for building better and better particle colliders.

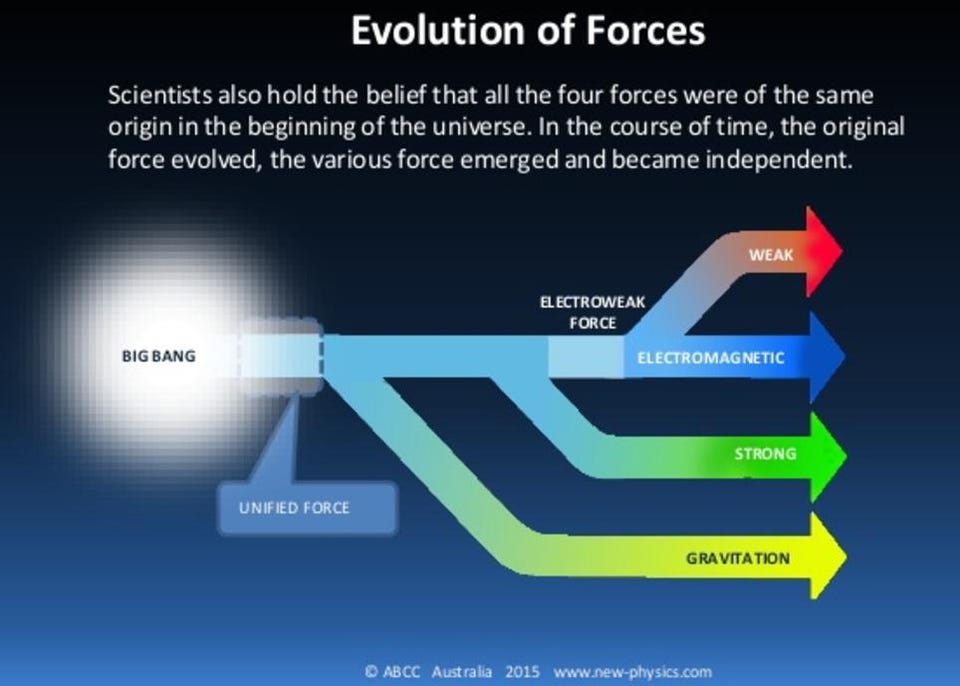

In fact, it’s easy to argue the exact opposite: that we have a very successful theory, the Standard Model, that works extraordinarily well for describing the Universe up to LHC-era energies. Simultaneously, we know new fundamental physics must exist to explain the Universe we observe, as without some sort of new physics, we can’t explain:

- what dark matter is,

- what dark energy is and why it exists at all,

- why there’s more matter than antimatter in the Universe,

- and how particles got to have the fundamental properties that they actually possess.

We have only three ways to proceed. The first is to derive potential extensions to the Standard Model, calculate the consequences that would arise, and look for them in experiment. That’s precisely what theorists and phenomenologists do, and it has so far been a remarkably unsuccessful approach. (If we could successfully guess what physics was out there beyond the Standard Model, the story would end very differently!) The second is to build giant, isolated detectors and hope that new physics literally falls from the skies; cosmic ray observatories and underground neutrino experiments do precisely this. The third way is to directly recreate the highest-energy collisions we can, and to detect the results of those collisions with the greatest possible precision. This third way, irrespective of the expectations or hopes of theorists, remains arguably the most scientifically valuable path for exploring the unknown.

Myth #2: It would be better to invest in thousands of small experiments rather than one large, brute-force one akin to the LHC.

No. This betrays a fundamental misunderstanding of the nature of particle physics research. In any experimental or observational science, there are typically two major routes that you can pursue.

- You can design a tailor-made experiment or observatory to look explicitly for one particular signal under one specific condition or set of conditions.

- Or you can build a large, general, all-purpose (or multi-purpose) apparatus, pooling a large set of resources into a facility that serves a broad swath of your community and achieves a wide range of science goals.

In almost every scientific discipline, administrators advocate for a balanced portfolio between these two types. The large, brute-force style, flagship-class missions are the ones that drive science forward in great, ambitious leaps, while the small, finesse style, lower-budget missions are the ones that fill in the gaps that cannot be sufficiently probed by the prevailing large-scale experiments and observatories of the time.

In the realm of experimental nuclear and particle physics, the “things” that we need if we want to better understand the Universe we inhabit are greater numbers and rates of events that show and probe the process we’re looking to investigate.

- Want to investigate neutrino oscillations? Collect data on neutrinos, under a variety of conditions, that show them oscillating from one species into another.

- Want to investigate heavy, unstable particles? Create them in large numbers, and measure their decay pathways with the greatest possible precision.

- Want to investigate the properties of antimatter? Create and cool it, and then probe it the same way you’d probe normal matter, to the limits of your capabilities.

While these low-cost, finesse-style experiments are well-positioned to investigate certain aspects of these (and other) questions in fundamental particle physics, no other pathway comes close to a dedicated collider, with dedicated detectors surrounding it, for probing the properties of fundamental particles. The reason the experimental particle physics community has consolidated around a “put all your eggs in one basket” collider for so long is that the science that results from pooling our resources into one gargantuan effort far outstrips anything a series of efforts with divided resources can produce.

The collider physics community is of one voice when it comes to calls to build one superior machine to advance past our current generation’s technological limits. The alternative is to give up.

Myth #3: Instead of investing in a next-generation particle collider, we would be better off investing in (solving X problem), as that’s a better use of our resources.

This line of thought rears its ugly head anytime an opponent of a particular project wants to see that project killed, simply asserting, “Project A is more important than this particular project under consideration, so therefore we should kill this project and instead devote more resources into project A.” Time and time again, history has shown us that this is a terrible way to fund project A, but it’s a great way to torpedo public support for whatever particular project is being considered.

This argument was raised many times over during the late 1960s, when terrestrial concerns such as civil rights, war, world hunger, and poverty sought to gain funding at the expense of the Apollo project.

It was raised again in the early 1990s, when concerns over the domestic economy led to the cancellation of the Superconducting Super Collider: a collider that would have been many times as powerful as the current LHC.

It was raised again in the early 2000s, when then-NASA administrator Sean O’Keefe wanted to cancel the final servicing mission to the Hubble Space Telescope, along with numerous other science-based NASA missions, to focus on a crewed return to the Moon: a disastrous plan that almost ended our current golden era of discovery in space.

And it’s being raised now, again, by voices that argue that our climate crisis, the energy crisis, the water crisis, and many other environmental and humanitarian concerns all outstrip the need for a new, expensive particle collider.

Here’s the thing that I wish people would understand so that they wouldn’t be led astray by these baseless arguments: the amount of funding we have to give out is not fixed. If you “save money” by not spending it on A, that doesn’t free up that money to go toward B; it simply doesn’t get spent. You might think, “Hey, great, I’m a taxpayer, and that means more money in my pocket,” but that’s not true. All that happens — particularly in a world where governments don’t balance their budgets anyway — is that your project doesn’t get funded, resources don’t get invested into it, and society doesn’t reap the rewards.

And yes, all of these projects come with great rewards, both scientific and technological. The list of NASA spinoff technologies shows that the Apollo Program actually created nearly $2 in new economic growth and activity for every $1 we invested in it. From the LHC at CERN, the advances in computing and data handling alone have already paid substantial dividends in industries around the world.

In general, taking on a hard problem and investing the necessary resources to do it justice is something that has always required national and international investments to occur, while the beneficiaries are the whole of humanity. Yes, there are plenty of other problems to be solved; abandoning fundamental physics won’t help anyone solve them.

Myth #4: The next-generation successor to the LHC will cost in the ballpark of $100 billion, which is way too expensive to justify.

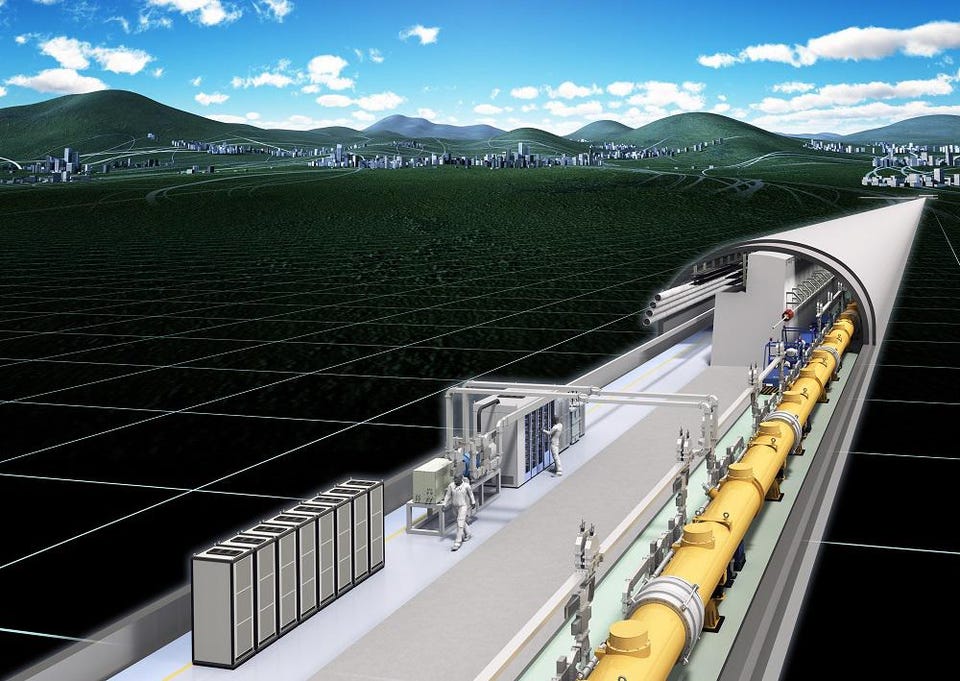

Another physicist writing for Big Think recently made this claim. But it’s not true, and that should matter. First off, the only way to get a figure in the ballpark of $100 billion is to assume that the next machine will:

- be an enormous, underground circular tunnel,

- roughly 100 kilometers in circumference,

- requiring an all-new set of high-field electromagnets,

- colliding protons with protons at several collision points,

- and be funded, including construction, maintenance, energy costs of operation, and the salaries of its personnel, over a total timespan of somewhere between 40 and 60 years.

There are two problems with this argument. The first is that, when we talk about “how much a machine costs,” we typically mean the cost of construction, not the cost over its total lifetime. There are many endeavors, scientific and non-scientific alike, in which we’re happy to invest a billion or two dollars per year because of the cumulative benefit to humanity that results. Experimental particle physics is simply one of them; ~$2 billion a year is a tiny price to pay to continue probing the frontiers of nature as we have never probed them before.
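The ~$2 billion a year comes from spreading the roughly $100 billion lifetime figure across the assumed 40-to-60-year operating span. A quick check, taking those endpoints and the midpoint at face value:

$$
\frac{\$100\ \text{billion}}{60\ \text{yr}} \approx \$1.7\ \text{billion/yr},
\qquad
\frac{\$100\ \text{billion}}{50\ \text{yr}} = \$2.0\ \text{billion/yr},
\qquad
\frac{\$100\ \text{billion}}{40\ \text{yr}} = \$2.5\ \text{billion/yr}
$$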

But the second problem is arguably even bigger: this machine, which might cost up to $100 billion, is not the next machine that collider physicists are asking for to further the science of their community. The machine being considered, known in the community as the proton-proton stage of the Future Circular Collider (FCC-hh), is a proposal for the particle accelerator after the next one.

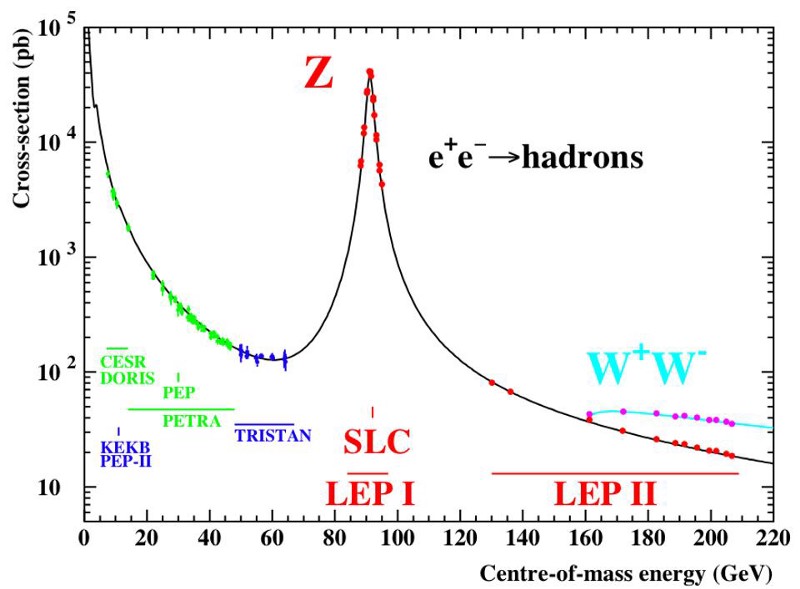

The reason is simple: you build proton-proton colliders to probe nature at higher energies than ever before. It’s the best way to peer into the unknown. But if you want to study the nature you’ve already uncovered to high precision, you want to build an electron-positron collider, so that you can produce enormous numbers of the particles you want to study under more pristine conditions.

The next collider that the particle physics community is seeking to build is either:

- a large, circular electron-positron collider, one whose tunnel could be the basis for a next-generation proton-proton collider,

- or a long, linear electron-positron collider, whose sole use would be for this one experiment.

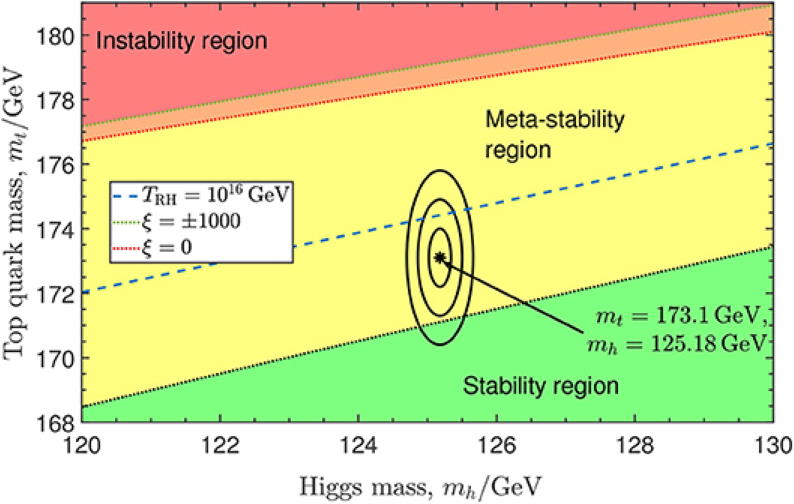

Either way, the next step is to produce large numbers of W and Z bosons, Higgs bosons, and top quarks in order to study their properties more precisely. The “$100 billion” number is about five times too high, a figure meant to scare budget hawks away from a scientifically viable, valid, and even sensible path.

Myth #5: If the LHC doesn’t turn up anything new and/or unexpected, experimental particle physics using colliders becomes pointless, anyway.

This is perhaps the most ubiquitous and untrue myth out there: that if you can’t find a new particle, force, or interaction that isn’t part of the Standard Model, there’s nothing worth learning from studying elementary particles further. Only the most incurious minds would draw such a conclusion, especially given facts like:

- dark matter and dark energy exist, and we don’t know why,

- there’s more matter than antimatter in the Universe, and we don’t know why,

- we have three and only three generations of particles, and we don’t know why,

- there’s CP-violation in the weak interactions but not the strong interactions, and we don’t know why,

- the W-boson doesn’t have the mass we expected it to, and we don’t know why,

- and the muon’s magnetic moment doesn’t conform to our expectations, and we don’t know why.

There are a plethora of puzzles that we can’t explain in particle physics, and colliding as many particles as possible at the highest energies possible is one primary avenue for revealing properties of nature that may be immune to our other lines of inquiry. In fact, even as the LHC continues its “mundane” operations, we’re finding properties of nature we didn’t expect: challenges to lepton flavor universality and bound states of quarks and/or gluons that we couldn’t have predicted.

The simple truth of the matter is this: as it now begins its third major run of data-taking here in mid-2022, the various collaborations are searching for a variety of potential signals. As we create more and more Higgs bosons, we’ll see if they decay precisely as the Standard Model predicts, or if cracks arise. As we create large numbers of composite particles containing charm and/or bottom quarks, we can see how their properties inform our conception of the Universe. As new pentaquarks and tetraquarks are discovered, we can test all sorts of our predictions, and we can also look for predicted states that have never been observed before, like glueballs and other exotic forms of (purely Standard Model-based) matter. The LHC has only taken 2% of all the data it will ever take; the other 98% is now finally on its way.

But the biggest motivator is this: we can look at the Universe as we’ve never looked at it before, and that’s almost always where new and unexpected discoveries come from. We have the technology to push our scientific frontiers, and although the trickle-down benefits to society (what business-types call the return on investment) are always substantial, that’s not why we do it. We do it because there’s a whole Universe out there to discover, and no one ever found anything by giving up before sufficiently trying. Don’t let the fear of not finding what we’ve hoped for prevent us from looking at all.