When your brain keeps time with rhythms in speech

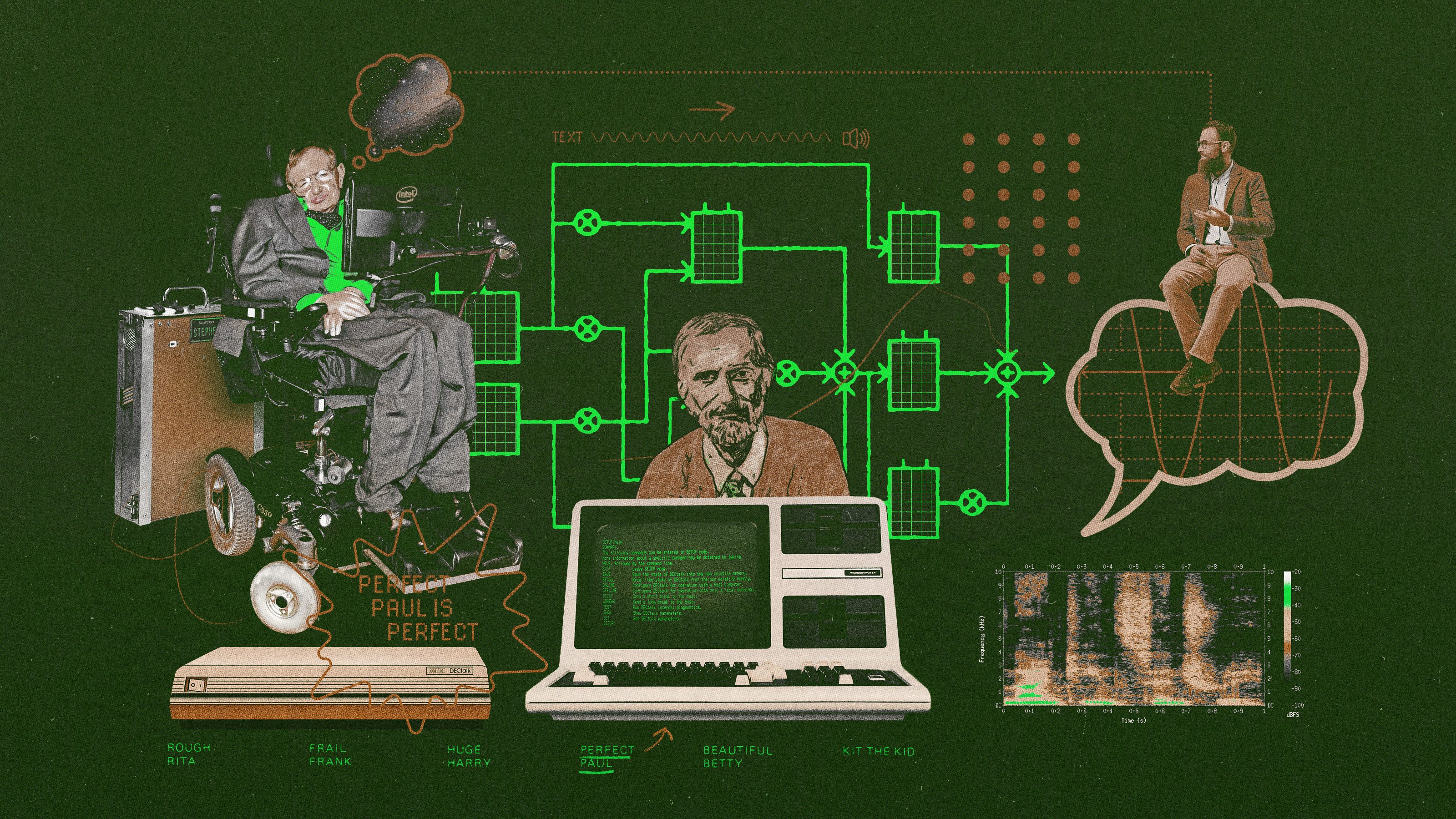

Ever wonder why it’s hard to understand people talking really quickly, and difficult to stay focused on people speaking super-slowly? Scientists have known for some time that the rate of human speech varies from about one to 10 syllables per second, with the average being about four syllables per second. They’ve also known that waves of neural activity in the auditory cortex synchronize themselves to the current speech tempo; it’s suspected this helps the brain break speech into manageable chunks of audio. Oddly, neural activity in the motor cortex, the part of the brain that moves your mouth to talk, may also follow along. We don’t know why this happens. Are we silently, physically mimicking the words we hear? A new study reveals, at least, the surprising reason the motor cortex only sometimes responds to speech.

Speed talk

Four syllables per second is the tempo in speech we most often hear and are comfortable with. You can try this out for yourself. Listen to yourself saying out loud, “Is that really true?” You’ll hear that those four syllables do, in fact, take about a second to say. Then slow things down so that you speak only one syllable per second, and notice how strange that sounds — likewise imagine how insanely unintelligible it would sound to pack 20 syllables into each second.

Our auditory cortex is believed to be conditioned, or “entrained,” over time to synchronize itself to speech. The authors of the new research, David Poeppel and M. Florencia Assaneo, have found that the tempo at which waves of neurons most commonly fire in the auditory cortex when doing this is 4.5 Hz (or 4.5 strong/weak cycles per second), accompanied at times by similar activity in the motor cortex. This makes sense given the average speech rate.

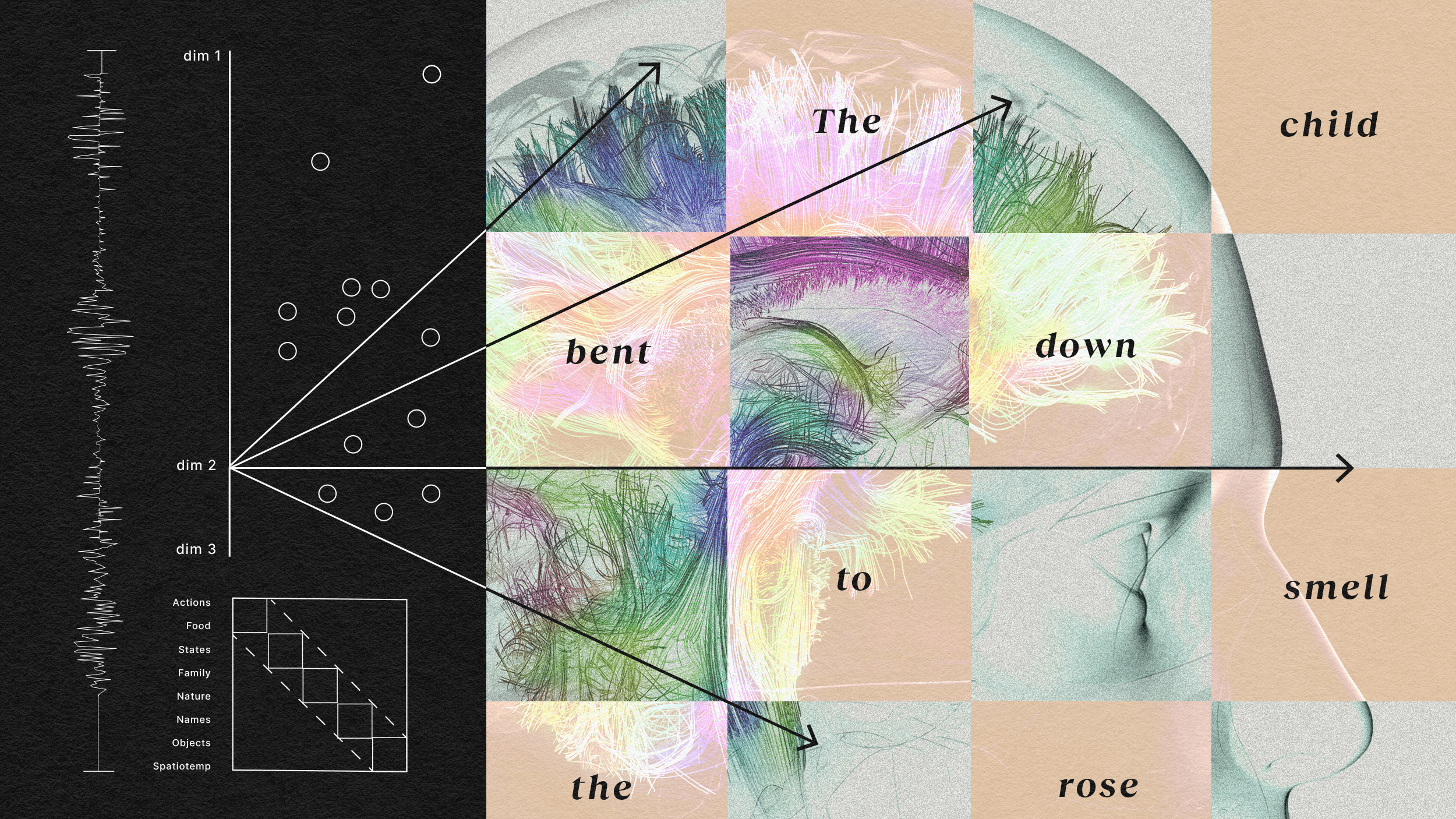

A sea of data

Poeppel has spoken about the problem of “epistemological sterility”: neuroscientists are collecting data about how neurons fire without any connection to the underlying behaviors and psychological states that may be causing the activity in the brain. He’s skeptical that all this data will at some point magically coalesce into an understanding of consciousness. As he told a meeting of the American Association for the Advancement of Science, “There’s an orgy of data but very little understanding.”

Poeppel sees greater value in starting with experiments based on theories of human behavior or psychology and then going off to track down what may be the associated neural activity. And he’s not the only one questioning the mad dash to collect data. In his book Vision: A Computational Investigation into the Human Representation and Processing of Visual Information, David Marr put it well: “… trying to understand perception by understanding neurons is like trying to understand a bird’s flight by understanding only feathers. It cannot be done.”

The study

And so, when Assaneo and Poeppel set out to investigate the mystery behind the relationship between the auditory and motor cortexes as the brain turns the sound waves of speech into meaning, their team concentrated on how different speech rates affect these cortexes. One of the questions is whether the auditory cortex somehow turns on and synchronizes the motor cortex through some unknown connective mechanism, or whether the two simply respond to speech on their own. The researchers’ computer modeling seems to suggest the two cortexes are not, in fact, connected. Here’s why.

Knowing the syllable-per-second rates that result in intelligible speech, the researchers were able to focus right away on the relevant area within the 160-channel magnetoencephalography (MEG) data they collected as 17 subjects listened to strings of meaningless syllables at rates between two per second and seven per second. Focusing on the expected tempo range, they found that the auditory cortex stayed in sync with the speech across the whole range, from two to seven syllables per second. However, the motor cortex did not: Once the speech rate hit about five syllables per second, activity there ceased to follow along, essentially dropping out of the action. The researchers theorize that, if the auditory cortex were controlling the motor cortex, the motor cortex would have remained entrained over the same range of speeds that the auditory cortex did. As it is, it seems the motor cortex has its own similar, though not identical, clocking system separate from the auditory cortex. There appears to be no hidden linking mechanism between the cortexes.

Since, as Assaneo notes, “When we perceive intelligible speech, the brain network being activated is more complex and extended,” it may be that different results would be produced using words that have meaning, rather than nonsense sounds. The team is looking at this next.