Toward a More Holistic Definition of “RISK”

You see it all the time: the rationalist argument about the way people perceive risk. It goes something like this: “Why are you so afraid of X (insert some threat that is very unlikely but really scary), when you SHOULD be more afraid of Y?” (insert something that is far more likely, but less scary). The Onion recently published a great satire about this, “142 Plane Crash Victims Were Statistically More Likely To Have Died In A Car Crash”. Read it, laugh, then come back, because this raises a profound issue directly relevant to your health and survival.

– – –

Welcome back. What is really irrational about arguing that people should be more rational about risk is the argument itself: the belief that we are – or should be – reason-based thinkers who use only the facts to figure out what we should be afraid of and how afraid we ought to be. This argument denies overwhelming evidence from a host of scientific disciplines that have discovered what is patently obvious from daily events in the real world: the way we perceive and respond to risk is a mix of facts AND feelings, reason AND gut reaction, cognition AND intuition. In fact, the rationalists who still argue that people should, and CAN, be unemotionally objective and coldly fact-based about risk are more than irrational. They are arrogant, and they do us all, and themselves, real harm. Presuming we can be über-rational about risk denies the reality of the risks that arise because we CAN’T be.

Notwithstanding the general success our ancient instinctive/subjective risk perception system has had in getting us this far, we sometimes get risk wrong. We are sometimes more afraid, or less afraid, than the evidence suggests, and THAT – what in “How Risky Is It, Really?” I call The Perception Gap – is a risk in and of itself. When we are too afraid (of vaccines, or nuclear radiation, or genetically modified food), or not afraid enough (of climate change, or obesity, or using our mobile phones while we drive), The Perception Gap is the root cause of dangerous personal behaviors. When The Gap causes us to worry too much, for too long, we suffer the health effects of chronic stress. When we all fear similar things for similar reasons, we press the government to protect us from what we fear MORE than from what may actually threaten us the most (we spend way more on the threat of terrorism than on the threat of heart disease), in which case The Perception Gap leads to policies that raise our overall risk.

Refusing to accept that the instinctive, emotional, affective way we perceive risk is an innate part of the human condition keeps us from recognizing the threat of The Perception Gap, and that keeps us from managing the risks it creates with the same tools we use to manage risks like pollution, crime, and food safety.

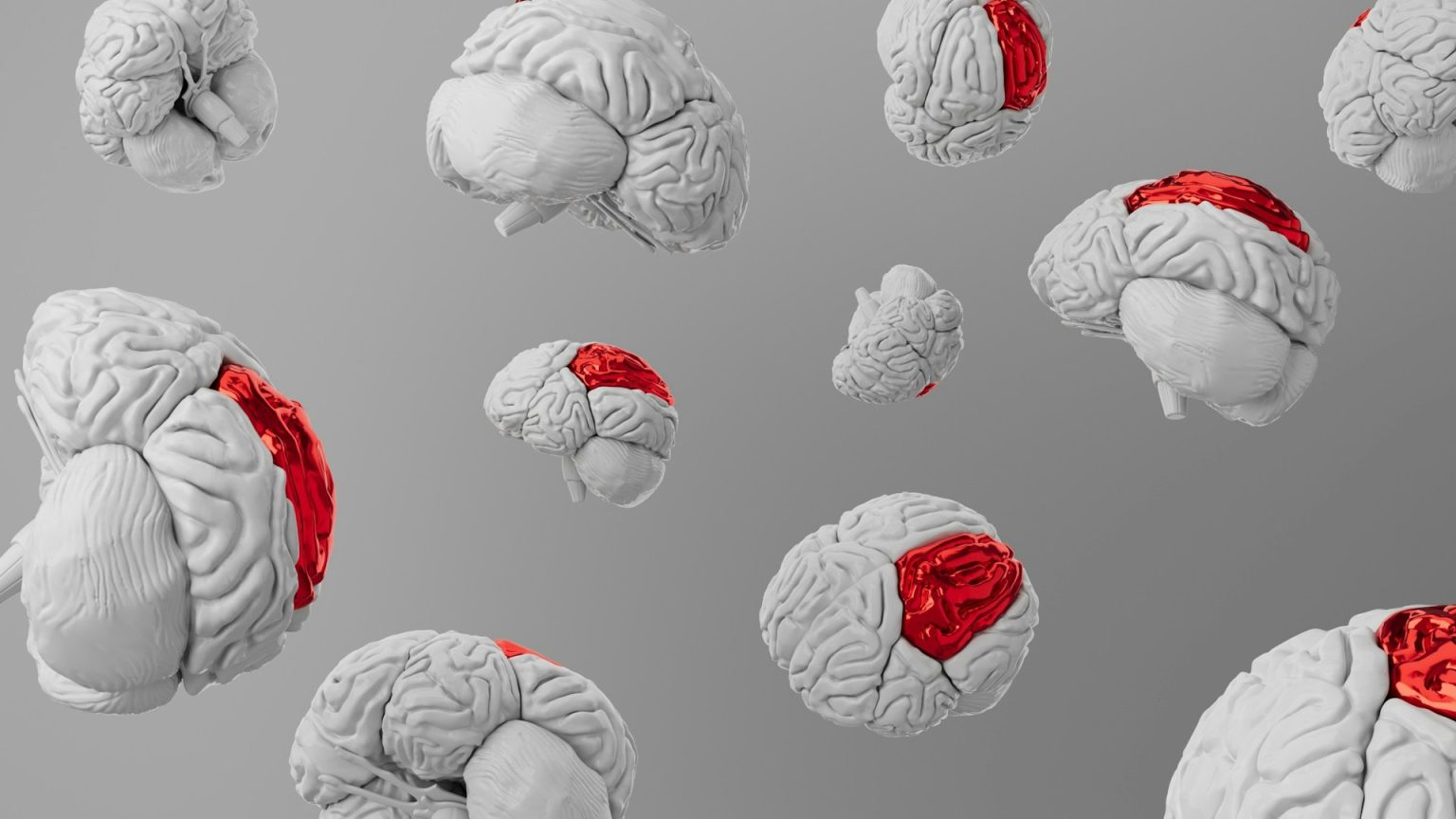

One fundamental bit of evidence about the affective nature of risk perception: in Descartes’ Error, Antonio Damasio wrote about a fellow named Elliott who needed brain surgery to remove a tumor, surgery that severed the connection between the prefrontal cortex – where we think and decide – and the limbic area of the brain – associated with feelings. Elliott could not behave rationally, even though he passed all the tests of intellect with flying colors. He could process the facts, but they had no meaning, no valence, no ‘pro or con’. Elliott teaches us that facts are lifeless ones and zeros until we run them through the software of how we interpret them. The fact that Elliott could not conform his behavior to social norms, and could not make a decision about anything because no option was preferable to any other, also teaches us that subjective interpretations and feelings are intrinsic requirements for rational behavior. As Damasio put it, “…while emotions and feelings can cause havoc in the processes of reasoning… the absence of emotion and feeling is no less damaging, no less capable of compromising rationality…”

The really important lesson from Elliott is that even with perfect information, our perceptions of anything are still subjective. This is particularly true of risk, where other research has found a wide range of mental filters we use, subconsciously and often preconsciously, to quickly assess how dangerous something might feel. Education and effective risk communication can narrow The Perception Gap, but they can’t close it. Managing the risks of The Perception Gap takes more than making the facts clear. There will still be risks that need to be managed, because we sometimes get risk wrong.

– Illness and death from nearly eradicated diseases continue to rise in communities where people are refusing vaccination.

– Millions of people don’t get flu shots, not for fear of the vaccine, but for lack of fear of the disease.

– Rejection of genetically modified food is denying millions of people crops that could be dramatically improving their health (and saving their lives).

The list of Perception Gap risks is long. And every one of the dangers on it can be identified, studied, and quantified by standard risk-analysis methods, and managed with regulations, civil and criminal laws, economic incentives and disincentives, and all the other tools society uses to regulate any risk in the name of the greater common good.

But first we have to get past this ignorant/arrogant post-Enlightenment fealty to the Cartesian God of Pure Reason. The brain, as Ambrose Bierce defined it, is only “the organ with which we think we think.” The reality is more like what Princeton neuroscientist John Wong says: “The brain really is a survival machine. It’s not there to perform some sort of super computing operation and leading to some calculation of Pi to 100 digits. It tells you lies. Your brain misrepresents. It distorts. All in the interest of helping you survive.”

Wouldn’t the smartest way to use our brain be to recognize its realities and limitations, to accept the inconvenient truth of what Andy Revkin has called our “Inconvenient Mind”, and with open minds to take the pitfalls of our risk perception system into account as we try to keep ourselves safe and alive?