A Brief History of Moore’s Law and The Next Generation of Computer Chips and Semiconductors

Super-powerful desktop computers, video game systems, cars, iPads, iPods, tablet computers, cellular phones, microwave ovens, high-def televisions… Most of the luxuries we enjoy in our daily lives are a result of the tremendous advances in computing power that were made possible by the development of the transistor.

The first patent for transistors was filed in Canada in 1925 by Julius Edgar Lilienfeld; this patent, however, did not include any information about devices that would actually be built using the technology. Later, in 1934, the German inventor Oskar Heil patented a similar device, but it wasn’t until 1947 that John Bardeen and Walter Brattain at Bell Telephone Labs produced the first point-contact transistor. During their initial testing phases, they produced a few of them and assembled an audio amplifier, which was later presented to various Bell Labs executives. What impressed the executives more than anything else was the fact that the transistor didn’t need time to warm up, as its predecessor the vacuum tube did. People immediately started to see the potential of the transistor for computing. The original computers from the late 1940s were gigantic, with some even taking up entire rooms. These huge computers were assembled with over 10,000 vacuum tubes and took a great deal of energy to run. In 1954, Texas Instruments produced the first commercially available silicon transistor. In 1956, Bardeen and Brattain won the Nobel Prize in Physics, along with William Shockley, who also did critically important work on the transistor.

Today, trillions of transistors are produced each year, and the transistor is considered one of the greatest technological achievements of the 20th century. The number of transistors on an integrated circuit has been doubling approximately every two years, a rate that has held strong for more than half a century. This trend was first described by Intel co-founder Gordon Moore in 1965. It came to be known as “Moore’s Law,” and the semiconductor industry now uses it as something of a guide for long-term planning and for setting R&D targets. But it’s likely that our ability to double our computing power this way will eventually break down.
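To get a feel for what "doubling approximately every two years" implies, here is a minimal Python sketch of the idealized rule. The function names and the two-year default are illustrative choices, not anything defined by Moore or the industry, and real chips only follow this curve roughly.

```python
import math


def growth_factor(years_elapsed, doubling_period_years=2.0):
    """Return how many times larger the transistor count is after
    `years_elapsed`, assuming one clean doubling per period."""
    return 2 ** (years_elapsed / doubling_period_years)


def years_to_grow(factor, doubling_period_years=2.0):
    """Return how many years the idealized rule needs to multiply
    the transistor count by `factor`."""
    return doubling_period_years * math.log2(factor)


if __name__ == "__main__":
    # Half a century of doubling every two years is 25 doublings.
    print(f"Growth over 50 years: {growth_factor(50):,.0f}x")
    # A thousand-fold (1024x) increase takes ten doublings.
    print(f"Years for 1024x growth: {years_to_grow(1024):.0f}")
```

Under these assumptions, fifty years of doubling multiplies the count by 2^25, or roughly 33.5 million. That compounding is why even a modest slowdown in the doubling period matters so much for long-term planning.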

For years, we have been hearing announcements from chip makers stating that they have figured out new ways to shrink the size of transistors. But in truth we are simply running out of space to work with. The question here is “How Far Can Moore’s Law Go?” Well, we don’t know for sure. We currently use ultraviolet light to etch patterns onto microchips, and it’s this very etching process that allows us to cram more and more transistors onto a chip. Once we start hitting layers and components that are 5 atoms thick, the Heisenberg Uncertainty Principle starts to kick in and we would no longer know where the electron is. Most likely, the electrons on such a small transistor would leak out, causing the circuit to short. There are also issues with heat, which is ultimately caused by the increased power. Some have suggested we could use X-rays instead of ultraviolet light to etch onto the chip. But while it’s been shown that X-rays can etch smaller and smaller components, the energy involved is also proportionally larger, causing them to blast right through the silicon.
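The trade-off between ultraviolet light and X-rays can be made concrete with the photon energy relation E = hc/λ: the shorter the wavelength, the more energy each photon carries. The sketch below is only an illustration. The 193 nm ultraviolet wavelength and the 1 nm X-ray wavelength are assumed example values, not figures taken from this article.

```python
# h * c expressed in electron-volt nanometres, so that
# energy (eV) = HC_EV_NM / wavelength (nm).
HC_EV_NM = 1239.841984


def photon_energy_ev(wavelength_nm):
    """Return the energy of a single photon in electron-volts."""
    return HC_EV_NM / wavelength_nm


def energy_ratio(short_wavelength_nm, long_wavelength_nm):
    """Return how many times more energetic the shorter-wavelength
    photon is compared with the longer-wavelength one."""
    return photon_energy_ev(short_wavelength_nm) / photon_energy_ev(long_wavelength_nm)


if __name__ == "__main__":
    uv_nm = 193.0   # assumed example: a deep-ultraviolet wavelength
    xray_nm = 1.0   # assumed example: a soft X-ray wavelength
    print(f"UV photon:    {photon_energy_ev(uv_nm):.2f} eV")
    print(f"X-ray photon: {photon_energy_ev(xray_nm):.2f} eV")
    print(f"Ratio:        {energy_ratio(xray_nm, uv_nm):.0f}x")
```

With these example wavelengths, each X-ray photon carries roughly 193 times the energy of the ultraviolet one, which gives a sense of why finer features come at the cost of much harsher radiation.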

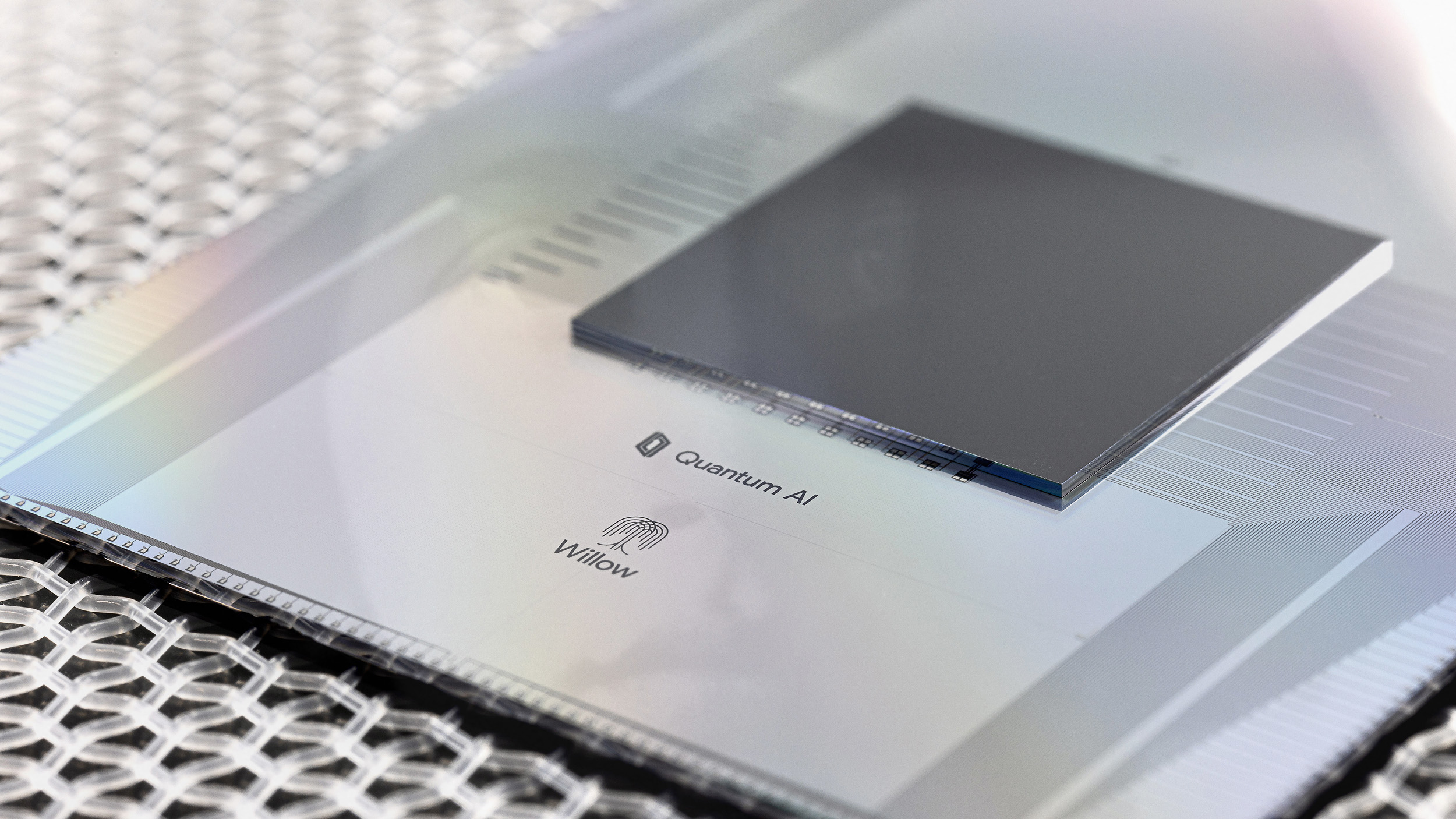

The other question is what steps we are going to take to find a suitable replacement for silicon when we hit the tipping point. We are of course looking at the development of quantum computers, molecular computers, protein computers, DNA computers, and even optical computers. If we are creating circuits that are the size of atoms, then why not compute with atoms themselves? This is now our goal. There are, however, enormous roadblocks to overcome. First of all, molecular computers are so small that you can’t even see them, so how do you wire up something so small? The second problem is finding a viable way to mass-produce them. There is a great deal of talk about quantum computers right now, but there are still hurdles to overcome, including impurities, vibrations and even decoherence. Every time we’ve tried to look at one of these exotic architectures to replace silicon, we find a problem. Now, this doesn’t mean that we won’t make tremendous advances with these different computing architectures or figure out a way to extend Moore’s Law beyond 2020. We just don’t quite know how yet.

So let’s look at some of the things that large chip makers, labs and think tanks are currently working on as they try to find a suitable replacement for silicon and take computing to the next level.

With some 2% of the world’s total energy being consumed by building and running computer equipment, a pioneering research effort could shrink the world’s most powerful supercomputer processors to the size of a sugar cube, IBM scientists say.

So I think the next decade of computing advancements is going to bring us gadgets and devices that today we only dream of. What technology will dominate the Post Silicon Era? What will replace Silicon Valley? No one knows. But nothing less than the wealth of nations and the future of civilization may rest on this question.