The Freud Apps: AI, Virtual Life Coaching, and the Future of Psychotherapy

BY MAX CELKO

Mental health and self-improvement services are increasingly accessible via mobile apps. The newest crop of these apps integrates Artificial Intelligence capabilities similar to those of Apple’s virtual assistant Siri. These intelligent systems will make our devices come to life, taking on new functions as our personal virtual ‘psychotherapist’ or life coach.

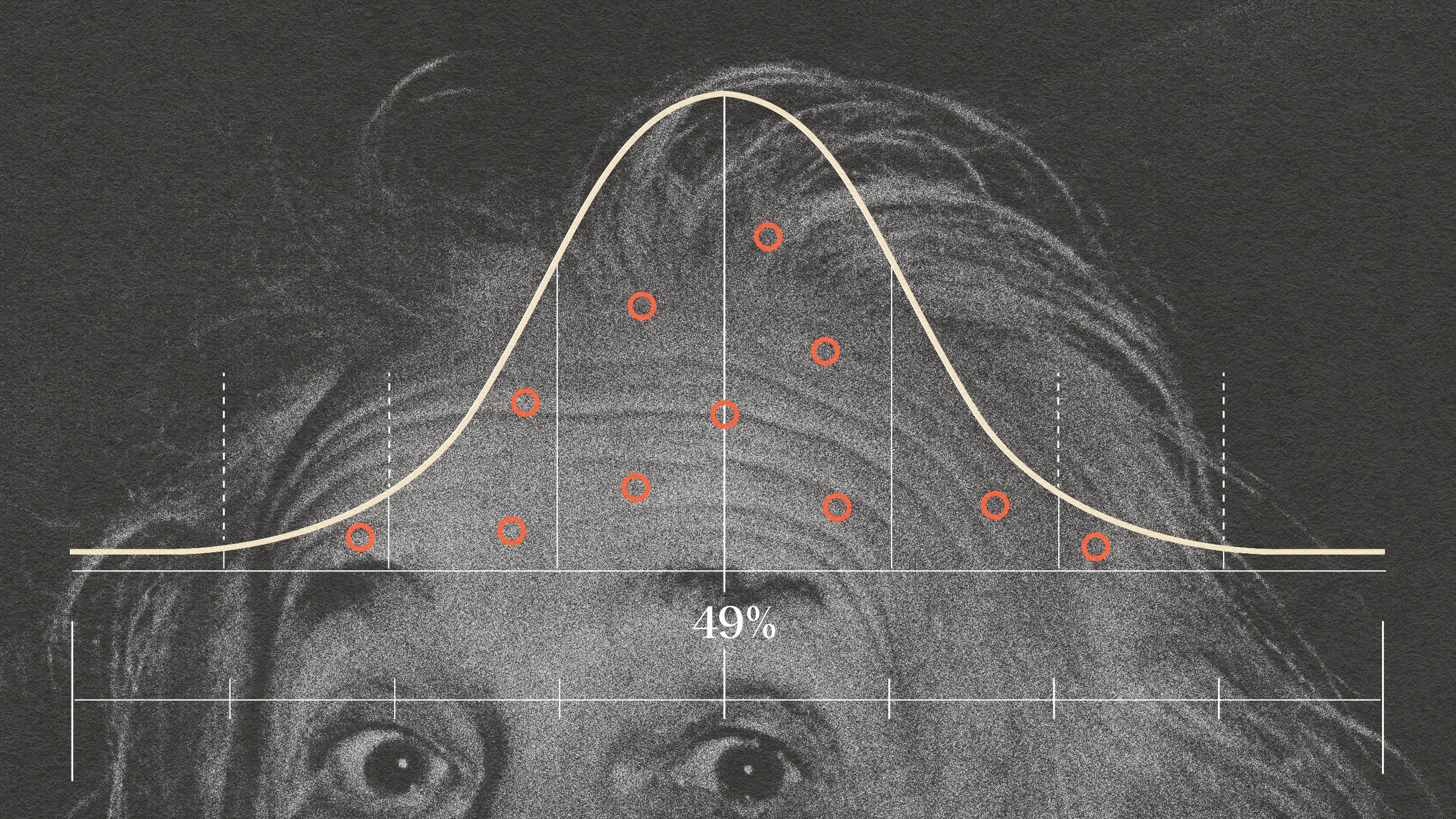

Though the self-improvement industry is unregulated and reliable figures are hard to come by, publicity suggests that the business is booming. The same is true of the traditional mental health profession, whose ranks of counselors are expected to grow 36% by 2020.

The increasing demand for psychotherapy and self-development coaching has spurred a wave of new digital services aimed at this market. The mobile app Mindbloom, for example, is a social gaming platform that enables users to motivate each other to improve their behavior, reach their life goals and generally be more successful in life. Users can send each other inspirational messages, track and compare their progress, and congratulate each other on achievements such as a pay raise, a new fitness milestone, or a new romantic relationship. “The most effective way to succeed in improving one’s life is through social support,” says Chris Hewett, founder of Mindbloom. “To make these social interactions more fun, we designed Mindbloom to feel like a social game.” In effect, Mindbloom ‘crowdsources’ life coaching from one’s circle of friends.

Researchers have also been developing ‘therapy’ programs for mobile phones, for example to help users deal with anxiety and depression. Other apps address social difficulties: as the New York Times recently reported, apps like Sosh are designed to help children and young adults improve their social skills. Primarily targeting individuals with Asperger’s syndrome, Sosh features exercises that help users manage their behavior, understand feelings and connect with others.

Studies have shown that virtual coaches are effective in helping users change their behavior. A recent study on weight loss from the Center for Connected Health found that overweight participants who had access to an animated virtual coach providing personalized feedback lost significantly more weight than participants trying to lose weight without virtual coaching. According to the study, published in the current issue of the Journal of Medical Internet Research, those using a virtual coach maintained their exercise regimen over the course of the 12-week study, while those without one saw their exercise intensity levels decrease by 14.3 percent. In addition, 87.1 percent of the participants using a virtual coach reported feeling guilty if they skipped an online appointment. “Virtual coaches and other relational agents have an important role in health and wellness,” says study co-author Timothy Bickmore, an associate professor in the College of Computer and Information Science at Northeastern University. “With a growing population of aging baby boomers and an ongoing shortage of healthcare professionals, a virtual coach can help bridge the gap to help remind and motivate people to stick to a care plan or wellness regimen.”

Today, these systems are still at an early stage, but as technology progresses, it might become possible to ‘converse’ with Artificial Intelligence beyond simple voice commands. AI systems could even possess ‘emotion sensing’ capabilities that let them detect users’ emotions and intentions from their tone of voice and speech patterns, making interactions richer and more effective. In theory, such capabilities would make it possible to develop AI systems that mimic interactions with a real psychotherapist or life coach. “One day our devices will tap into an emotional intelligence to inspire us to improve the quality of our lives,” predicts Hewett. “They will become companions that are with us through the ups and downs of our hectic lives, making sure we don’t forget what’s important to us: our goals, our passions, our dreams, and our purpose.”

Experiments have shown that even very simplistic AI systems can simulate a ‘psychotherapy’ session that users perceive as realistic. The earliest such experiments were performed at MIT in the 1960s with the chatbot ELIZA. Even though the system had no understanding of what its ‘patients’ were saying and merely looked for certain patterns of words, replying with predetermined output, users took it seriously and became emotionally involved with the program. According to one anecdote, a secretary believed the machine was a ‘real’ therapist and spent hours revealing her personal problems to it. When she was told that the researchers running the experiments naturally had access to the logs of all the conversations, she reacted with outrage at this invasion of her privacy. If such a crude program could draw users in this deeply, AI systems that have a true semantic understanding of speech and are programmed with psychotherapy and coaching techniques can be expected to be far more effective in therapy applications.
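To show how little machinery ELIZA-style pattern matching requires, here is a minimal sketch in Python. The rules, the pronoun “reflections” and the default reply are hypothetical illustrations in the spirit of ELIZA’s therapist script, not the original program’s rules. The program scans the input for a keyword pattern, swaps first- and second-person words in the matched fragment, and pastes that fragment into a canned reply template.

```python
import re

# Hypothetical pronoun swaps so an echoed fragment reads naturally.
# Simplification: "you" is always mapped to "me" (object position).
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "i'm": "you are",
    "you": "me",
    "your": "my",
}

# Hypothetical (pattern, reply template) rules, tried in order.
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Fallback used when no rule matches.
DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap first- and second-person words."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return the reply for the first rule whose pattern matches.

    No meaning is extracted: the matched text is only reflected and
    inserted into a predetermined template.
    """
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_REPLY


if __name__ == "__main__":
    print(respond("I am unhappy about my job."))
    print(respond("My mother never listens to me."))
```

Here `respond("I am unhappy about my job.")` yields “How long have you been unhappy about your job?” although the program has no concept of a job or of unhappiness, which is exactly the kind of surface-level mirroring that ELIZA’s users found so convincing.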

These systems could gather a wealth of data on our behavior by tracking our movements via GPS and by monitoring our online activity and social interactions. Mobile-based AI systems could even be integrated with devices worn directly on the body, such as Nike’s FuelBand, that measure our activity levels and biofeedback data. Based on this data, these systems could work with us to correct bad habits, provide personal development advice, and generally help us improve our lives. “Such a technology does have the unique ability to supplement existing human experiences in a very powerful way,” states Chris Hewett. “Knowing our current location, our personal calendar, our task lists, our social connections, our interests, and the wealth of crowd sourced information and knowledge that could be useful for us is what makes Artificial Intelligence a truly revolutionary ingredient in the field of personal development.”

In the future it might become common to have one or several virtual companions ‘living’ on our cell phones. “We are at the beginning of a new era of intelligent interfaces,” says Vlad Sejnoha, CTO of Nuance, the speech recognition company that licensed its voice engine to Apple. “Users really resonate with Siri – they clearly have a real emotional connection with human-like conversational device interfaces. We believe that the bulk of mobile devices going forward will be voice-enabled.”

Interacting with ‘humanized’ technology in the context of therapy and coaching will turn our devices into ‘identity accessories’: tools we use to actively sculpt our behavior and identity. This will further heighten our emotional attachment to technology. “As intelligent systems get better at understanding and reacting to us, our relationship with devices is bound to become stronger over time,” says Sejnoha. Already today, the fear of being separated from one’s phone, a condition known as nomophobia, is on the rise. According to new research by OnePoll on behalf of UK firm SecurEnvoy, 77 percent of those aged between 18 and 24 say they feel anxious if they become separated from their mobile phone. If AI technology brings our devices ‘alive’ as mediators of self-discovery and self-realization, this could have far-reaching consequences for how we relate to them. A new category of ‘living’ technology will emerge, taking on new roles as companion, confidant, and ‘friend’. These artificial intelligences will be with us whenever we need them, keeping us company, supporting us, and making us feel secure and cared for in an increasingly complex and erratic world.

As systems take on new ‘therapy’ and coaching functions and gather an increasing amount of personal data about us, the issue of privacy will become even more pressing than it already is today. Depending on how advanced these AI systems are, they will not only gather factual data such as our workout or eating patterns, but also glean intimate insight into our psyche: our problems, desires, dreams, and fears. Corporations would clearly be keen to get their hands on such in-depth consumer insight to further personalize their marketing efforts. As digital services are adopted in healthcare, it will therefore become increasingly important to set clear boundaries on which information third parties may access. Closely related to corporate data mining is the danger of data theft. Because virtual psychotherapists and coaches hold so much information about us, they would be a lucrative target for hackers: anyone who broke into their stored data would gain far-reaching insight into the lives and psychology of their users.

If doctors start using therapy apps as part of medical treatment, this will also raise new questions about accreditation. It might become necessary to create new official certifications for virtual therapy services to ensure they meet certain quality requirements. And even though a shortage of healthcare professionals is expected in the coming years, the impact of AI systems on the job market needs to be considered too: if virtual therapy systems become very advanced at some point, certain aspects of the psychotherapy and coaching professions might be outsourced to technology, much as some of the work of lawyers is already, to a certain extent, being outsourced to text-scanning software.

Even though AI systems are no substitute for interactions with a real human, they do have the potential to improve our quality of life. Especially at a time when proactivity and constant self-development are becoming preconditions for success, virtual therapy and coaching can be expected to keep gaining popularity.

Max Celko is a researcher at the Hybrid Reality Institute, a research and advisory group focused on human-technology co-evolution, geotechnology and innovation. He is a trends and innovation consultant based in New York and Berlin. Follow him on twitter.com/maxcelko