Big Idea: Statistics About Groups Tell You Nothing About Individuals

How tall are people, as a rule? If you tried to answer that question by interviewing a seven-foot-tall man, you’d be making a grave mistake. Almost any educated person can tell you that a single individual’s case won’t tell you what to expect about people in general, or about people in the same category as that individual. What we don’t hear often enough, though, is that this absolute barrier also blocks traffic in the other direction: Statistics about people in general, or about some category of people, tell you nothing certain about any one individual.

To say this is almost heresy in our knowledge-based society. Data-mongering is how Americans try to explain or control someone’s actions. As in: Eating like that will give you diabetes. Smacking your kid will make him violent. Taking a walk will make you creative. Being a paranoid schizophrenic will spur you to murder. Haven’t you seen the news? Studies show!

To see why this is wrong, think about an insurance company contemplating the great American highway. Its actuaries estimate that roughly 30,000 people will be killed in U.S. traffic accidents every year. That’s reliable knowledge, gathered by sound methods. But it doesn’t allow them to state the name and address of any particular future victim. Medical research faces the same barrier: I can tell you that people who smoke a pack a day are more likely to develop lung cancer than people who don’t. I can’t tell you that this means, for sure, that you, with your pack-a-day habit, are going to get lung cancer (some smokers, after all, never do).

Moreover, if you do get lung cancer after my warning, I cannot come to you and say, “You see, the statistical analysis foresaw what would happen to you.” Because it didn’t. Instead, it told you about the quality (not good) of the bet you were making with your life. It predicted with some confidence that, over time, smokers as a group would develop lung cancer at a far higher rate than nonsmokers. That analysis is not “wrong” if you never get cancer, or “right” if you end up getting sick. Which group you happened to land in is irrelevant to whether the analysis was sound.
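A small simulation can make this concrete. The Python sketch below is purely illustrative: the group of 10,000 smokers and the 15 percent per-person risk are made-up numbers, not real estimates. Each run decides at random who gets sick. Across runs, the total number of cases stays close to 1,500, which is the kind of thing a statistical analysis can predict, but the lists of named individuals who get sick overlap only as much as pure chance would produce (about 15 percent).

```python
import random

# Hypothetical illustration only: the group size and risk below are assumptions,
# not real epidemiological figures.
GROUP_SIZE = 10_000
RISK = 0.15  # assumed per-person probability of the bad outcome


def simulate(seed: int) -> set[int]:
    """Return the IDs of the individuals who get sick in one simulated run."""
    rng = random.Random(seed)
    return {person for person in range(GROUP_SIZE) if rng.random() < RISK}


def overlap(a: set[int], b: set[int]) -> float:
    """Fraction of the people sick in run a who are also sick in run b."""
    return len(a & b) / len(a)


if __name__ == "__main__":
    run1 = simulate(seed=1)
    run2 = simulate(seed=2)
    # The group-level totals land close to GROUP_SIZE * RISK in both runs...
    print("sick in run 1:", len(run1))
    print("sick in run 2:", len(run2))
    # ...but the share of run-1 victims who are also sick in run 2 is only about
    # RISK, exactly what independent chance produces: the totals say nothing about who.
    print(f"share of run-1 victims also sick in run 2: {overlap(run1, run2):.2f}")
```

Changing the seeds changes who lands in which group, but barely changes how big the groups are.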

Contrast this with the story of your life, as you might tell it near its end. Looking back, either outcome (getting sick, crushed by the odds, or beating those odds) would feel inevitable. How could your personal history, those unique memories that shaped you, have turned out any other way? If you had a different history, after all, you wouldn’t be you.

Stories—including, of course, the autobiographies we tell ourselves—are about individuals and the things that certainly did and did not happen to them. This means that stories are backward-looking: The only events of which we are absolutely sure are those that have already occurred. Statistical predictions, though, are about groups, and the things that might happen to them, and the hidden connections among those things. Statistical efforts are forward-looking. They ask: What is likely to happen in the future? Or, What would happen in the future if this hypothesis were true? These two modes of thinking about experience are truly incompatible.

Yet we persist in trying to turn statistically based estimates about future results into narratives. The human mind is honed to pay attention to other people’s experiences and feelings, so those details impress us in a way that numbers don’t. And there’s a good case to be made that we evolved to understand the world by narrative means. So people who want to make statistical points about people in general will instinctively brighten up their picture with some compelling story, either hypothetical (imagine a 20-year-old schizophrenic being cared for by a demented old father!) or concrete (this guy had a leg amputated due to diabetes!). These tales are supposed to illustrate a statistically based argument. In the first, it’s “you should have children earlier in life than you imagine”; in the second, it’s “you should eat and drink less sugar.”

Trouble is, stories are so compelling that they work too well. What is supposed to act as an illustration of a general case feels like a prophecy: an edict declaring how your own personal narrative will read some day in the future. The story captured your attention and your emotions, which makes it easy to think about again, which makes it feel more probable. (Psychologists call this the availability heuristic: what comes to mind easily is judged more likely than what doesn’t, regardless of the real odds.) So this dreadful future, which might happen to you, according to the best analysis we can make right now, feels as if it will happen to you, with the certainty of a movie plot.

Marketers and political types and journalists like me don’t really set out to confuse. We’re just doing what works, attaching tales to numbers, because that gets the number-based facts across. But part of our craft depends on the false impression that numbers and narratives are compatible. For instance, take the first of my two examples above. It’s taken from Judith Shulevitz’s terrific recent piece in The New Republic on the largely unrecognized effects of people having children later in their lives than ever. As Shulevitz recounts, being an older mother (or an older father, a point she rightly stresses because it hasn’t yet become part of the national conversation) correlates with a higher risk of having a child with autism, schizophrenia or chromosomal abnormalities. Hence the image, recounted by one of her sources, of the schizophrenic young adult whose father is too old and feeble to step in and provide needed help.

As an observation about society in general, the well-reported point here is hard to contest. Society as a whole should prepare for an uptick in the number of young people with learning problems, autism and schizophrenia. But does this mean that any single reader of the article should decide to have a child ten years earlier than planned? Or that a 40-something reader should decide against having offspring? Well, according to one study cited in the piece, a man has a 6-in-10,000 chance of fathering an autistic child before age 30, but a 32-in-10,000 chance of doing so when he’s 40. That’s a big jump, more than fivefold, but still: A very small number multiplied by a modest one is still a very small number. Thirty-two in 10,000 is about a third of 1 percent. And a little Googling reveals that roughly 30-in-10,000 is also the estimated risk in the United States of having a heart attack if you are a menopausal woman, or of simply dropping dead at age 55.
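The arithmetic behind that comparison is the difference between relative risk and absolute risk. The short Python sketch below uses only the two figures quoted above (6 and 32 per 10,000); the helper names `relative_risk` and `absolute_increase` are hypothetical, written here for illustration. Exact fractions avoid floating-point noise in the ratios.

```python
from fractions import Fraction

# Figures quoted above from one study cited in Shulevitz's piece:
# risk of fathering an autistic child, per 10,000 fathers.
RISK_UNDER_30 = Fraction(6, 10_000)
RISK_AT_40 = Fraction(32, 10_000)


def relative_risk(exposed: Fraction, baseline: Fraction) -> Fraction:
    """How many times larger the exposed group's risk is than the baseline's."""
    return exposed / baseline


def absolute_increase(exposed: Fraction, baseline: Fraction) -> Fraction:
    """Extra risk per person: the plain difference between the two risks."""
    return exposed - baseline


if __name__ == "__main__":
    rr = relative_risk(RISK_AT_40, RISK_UNDER_30)
    diff = absolute_increase(RISK_AT_40, RISK_UNDER_30)
    print(f"relative risk: {float(rr):.2f}x")  # the headline "big jump"
    print(f"absolute risk at 40: {float(RISK_AT_40):.2%}")
    print(f"extra risk vs. under 30: {float(diff):.2%}")
    print(f"chance of the outcome NOT occurring at 40: {float(1 - RISK_AT_40):.2%}")
```

Run as a script, it reports a relative risk of 5.33x next to an absolute risk of 0.32% and a 99.68% chance that the outcome does not occur. The first number is the headline; the others describe the bet any one father is actually making.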

In other words, while it’s true as a general observation that older parents will have more children with these conditions, it would be a mistake for any individual parent to conclude that his or her child is going to have one. The odds are vastly against that fate. Being able to imagine it happening to you, or knowing that it has happened to someone else, has no effect on those odds. Such is the difficulty of connecting statistics about whole populations to any one person’s story, in fact, that the amputee in my second example, from a New York City public health campaign, had to be created in Photoshop.

Where ancient peoples had gods and heroes and medieval Europeans had the lives of saints, we 21st-century citizens have the normal distribution: To find out what we should do and be, we turn to data. If the statistics come from solid research, they have important information for us about how we, as a nation, are faring. But they aren’t prophecies, and your individual life—that string of wildly improbable events that began with your conception on one particular night—remains a story that no body of data can predict.

ADDENDUM 12/30/12: Thinking a bit more about this post, I realized that something else was nagging at me about the argument that Shulevitz wants to build on her impeccable reporting. It seems to me it’s quite relevant to the theme here.

She’s quite correct in saying older parenthood is a vast “natural experiment” that the human race is conducting on itself. But part of what makes that claim effective is narrative power: The announcement of a scary natural experiment rivets the attention and engenders some fear. What the piece doesn’t mention (because it would weaken its rhetorical impact, I guess) is that we have been engaged in vast natural experiments with fertility for two centuries. In other words, for the sake of its effect, the article implies that we have existed in a state of natural balance and health that we are only now disturbing. But there is no logical reason to claim that today’s experiment is unique, or uniquely bad.

What are some of these other natural experiments? Well, there was the one where much of the human population stopped living at the edge of starvation. And the one where major improvements in sanitation led to (a) parents seeing all their children live to adulthood, (b) adults commonly having one or more living parents and (c) millions living long enough to be active grandparents. None of (a), (b) or (c) would have seemed normal to most of our ancestors. (By the way, (a) is often cited as the prime driver of another great natural experiment, the plummeting number of births per woman all over the world.)

Shulevitz says adults still need their parents and that children do better with sturdy grandparents, which (to my eye, anyway) implies that having living parents and grandparents is the human norm. That is narratively effective (“something is menacing the natural order of things”) but not supported by the evidence, which tells us that “normal” for much of human history was to live to perhaps 40 or 50, and to see a good number of your infant offspring die. It’s factually wrong to suggest that only now, in the 21st century, are we deviating from the natural life cycles of the species. But as a way of telling the story, it’s almost irresistible to a good writer.

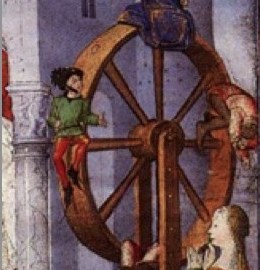

Illustration: Medieval statistical thinking: Kings and clerics and peasants go up and down in life according to the spin of Fortune’s wheel.

Follow me on Twitter: @davidberreby