More than meets the eye: A Q&A with Dartmouth’s Hany Farid

Did you see that photo of sharks allegedly swimming in a mall in Kuwait? Or the video of the eagle grabbing a baby in Montreal? Both must have been shared at least a dozen times on my social media feeds before anyone even ventured to suggest either was fake. As digital image manipulation tools advance, more and more of these kinds of images and videos will be released into the wild, and it is getting harder and harder for us to tell the real from the manipulated.

Hany Farid is a computer scientist who specializes in digital forensics and image analysis. He’s the guy lawyers, news agencies and governments call to validate images and videos. We spoke about the challenges of doing image forensics, whether Photoshop has desensitized us, and why people are so gullible when it comes to doctored photos.

Q: What got you interested in image forensics initially?

Hany Farid: Back in school, I was at the library, ready to check out some books, when I saw something on the return cart called “The Federal Rules of Evidence.” It was a huge book about introducing evidence in a court of law. I opened it at random to a page about how you can introduce photographs. I started reading and basically learned that you had to have the 35mm negative along with a print made from it; that’s what was considered an original. But by then there were digital images, and the way the rules read, the 35mm negative and the digital image were practically the same. They aren’t, and that’s a problem. So this problem just kept kicking around in the back of my head: how do you deal with this?

Q: It’s a problem that’s now the center of your research.

Hany Farid: Yes, I hadn’t expected that. It’s an interesting scientific problem with a natural solution: find new ways to analyze images and determine their authenticity. But it’s also a cool problem with real-world applications. Almost as soon as we develop tools to analyze images, they find applications in the courts, in the media and in law enforcement. It’s rare for that to happen in science.

Q: What are some of the biggest challenges in digital photography forensics?

Hany Farid: Today, we’re very good at determining whether an image is an original: that it came from a camera and absolutely nothing has been changed. Where things get tough is when images have been modified: resized, recompressed, uploaded to a social network, maybe with the brightness or contrast adjusted. You know, that little bit of post-processing that doesn’t fundamentally change the image but is still an alteration of some sort. It’s hard to distinguish between these small changes and more nefarious manipulations.

Q: Why are we so gullible when it comes to those shark-in-a-shopping-mall type photos?

Hany Farid: You know, on one hand people are gullible, but they are also overly cynical. I see the public making mistakes in both directions: they look at images that are real and assume they are fake, or they see a fake image and think it’s real. So I’m not sure people are gullible, but they do make mistakes. They know that images can be manipulated, but they aren’t particularly good at telling what’s real from what’s not.

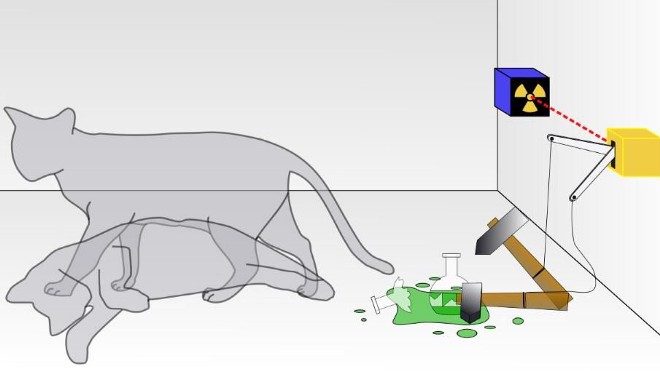

About 25 percent of my time is spent in perceptual science, studying how the brain perceives the world and trying to understand the visual system. We’ve done studies to understand how good people are at detecting fake images, and it turns out we’re really bad at it. What I think is happening is that people have a gut reaction to an image and just go with it instead of taking a more critical, analytical approach. People have trouble sorting it all out, and it is hard to sort out.

Q: Have we been desensitized because we see so many doctored images in magazines and movies?

Hany Farid: It’s possible. We see these amazing things and they’re seamless, right? We don’t even think twice about them. So, yes, it could be desensitization. Or it could be a firehose effect, where we’re inundated with images every day and it’s difficult to make heads or tails of it all.

Q: So given all your knowledge, are you any better than the average person in eyeballing an image and detecting a fake?

Hany Farid: When we do experiments, the answer is always in the image itself. We generate simple CGI scenes where the shadows are inconsistent. If you know the trick, you can determine whether it’s been doctored or not. The information to make the determination is there but people just don’t do it.

Much to my surprise, the average Psych 101 student is no better or worse than graduate students in computer vision, students who know all these rules about shadows and inconsistencies. In some ways, the graduate students were even slightly worse at making the decisions, though not significantly so. It’s a cognitive task: you have to reason it out to figure out whether the image is real, and your instinct is almost always wrong. So now, when I look at images, I sort of ignore my instinct and start making measurements.

Our brains rely on rough heuristics for visual perception, and if you think about it, that makes sense. There’s no reason for the visual system to have evolved to detect fake images. The world is real, so picking out doctored images is just not a skill we necessarily need to have.

Q: You’ve said that one image can change the world, so we had better make sure the image is real. Given that our gut instincts about doctored images are so poor, how do we make sure we’re getting the right information and making the right decisions?

Hany Farid: I do think an image can change the world. Look at the news: North Korea is testing nuclear bombs, and we’re using satellite images to verify that. More and more, we use images from around the world to make really important, serious decisions that have massive consequences. That’s one of the reasons we created a company to make these forensic techniques available to a wider audience. At the end of the day, if we can make these tools effective and available, we can hope to give the right parties the ability to reason critically about these images and videos, and then to make the right decisions about what to do about them.

Photo credit: Richard Scalzo/Shutterstock.com