Overhyped and never delivered: A brief history of innovations that weren’t

- Skepticism is appropriate when analyzing forecasts of future technology breakthroughs.

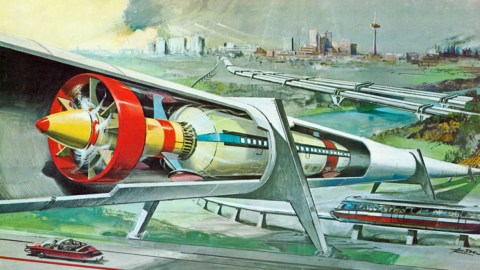

- From colonies on Mars to self-driving cars, the history of inventions is full of promises that won’t be fulfilled until well into the future (if they come at all).

- We must balance our long-term pursuit of innovation with improving what we know and have available today.

The history of such innovations holds several lessons. First, every major, far-reaching advance carries its own inherent concerns, if not some frankly undesirable consequences, whether these are appreciated immediately or become apparent only much later: Leaded gasoline, a known danger from the very start, and chlorofluorocarbons, found to be undesirable only decades after their commercial introduction, epitomize this spectrum of worries. Second, rushing to secure commercial primacy, or deploying the most convenient rather than the best possible technique, may not be the right long-term prescription for success, as the history of "beaching" the submarine reactor for a rapid start of commercial electricity generation clearly demonstrated.

Third, we cannot judge the ultimate acceptance, societal fit, and commercial success of a specific invention during the early stages of its development and commercial adoption, and even less so while it remains, even after its public launch, largely in experimental or trial stages: The suddenly truncated deployments of airships and supersonic airplanes made that clear. Fourth, skepticism is appropriate whenever the problem is so extraordinarily challenging that even the combination of perseverance and plentiful financing cannot guarantee success after decades of trying: There is no better illustration of this than the quest for controlled fusion.

But both the acknowledgment of these realities and the willingness to learn, even modestly, from past failures and cautionary experience seem to find less and less acceptance in modern societies, where masses of scientifically illiterate, and often surprisingly innumerate, citizens are exposed daily not just to overenthusiastic reports of potential breakthroughs but often to vastly exaggerated claims about new inventions. Worst of all, news media often serve up patently false promises of soon-to-come, fundamental or, in current parlance, "disruptive" shifts that will "transform" modern societies. Characterizing this state of affairs as living in a post-factual society is, unfortunately, not much of an exaggeration.

Breakthroughs that are not

Given how common this category of misinformation about breakthrough inventions (and about their likely speed of development and ensuing impact on society) has become, any systematic review of this dubious genre would be both too long and too tedious. Instead, I will note the breadth of these claims, whose impossible timings and details span a vast range of scales, from colonizing planets to accessing our thoughts […].

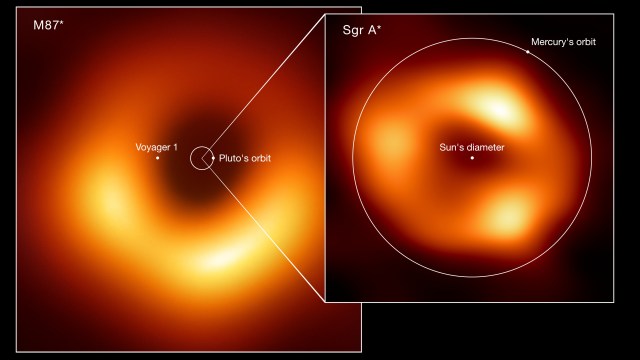

In 2017 we were told that the first mission to colonize Mars would blast off in 2022, to be followed soon by an extensive effort to "terraform" the planet (turn it into a habitable world by creating an atmosphere) in preparation for its large-scale colonization by humans. As science fiction, this was an old and utterly unoriginal fable: Many storytellers have told it, none more imaginatively than Ray Bradbury in The Martian Chronicles (1950). As a prediction and description of an actual scientific and technical advance, it is a complete fairy tale, yet the mass media have reported it seriously and repeatedly for years, as if it would actually get under way according to that delusional schedule.

At the opposite end of this touted spectrum of inventions (from transforming planets to reconnecting individual neurons) is a way for machines to merge with human brains: The brain-computer interface (BCI) has been a much-researched topic during the past two decades. Such an interface would eventually require implanting miniature electronic devices directly into the brain to target specific groups of neurons (a noninvasive sensor on or near the head could never be as powerful or precise), an undertaking with many obvious ethical and physical perils and downsides. But one would never know this from reading the gushing media reports on advances in BCI.

This is not merely my impression but the conclusion of a detailed examination of nearly four thousand news items on BCI published between 2010 and 2017. The verdict is clear: Not only was the media reporting overwhelmingly favorable, it was heavily preoccupied with unrealistic speculation that tended to greatly exaggerate the potential of BCI ("the stuff of biblical miracles," "prospective uses are endless"). Moreover, a quarter of all news reports made extreme and highly improbable claims (from "lying on a beach on the east coast of Brazil, controlling a robotic device roving on the surface of Mars" to "achieving immortality in a matter of decades") while failing to address the inherent risks and ethical problems.

In light of these planet-molding claims and brain-merging promises, how much easier it is to believe the many comparatively down-to-earth achievements that the media have peddled wholesale in recent years. Forecasts of completely autonomous road vehicles were made repeatedly during the 2010s: Fully self-driving cars were to be everywhere by 2020, allowing their occupants to read or sleep while commuting in a personal vehicle. All vehicles with internal combustion engines then on the road were to be replaced by electric vehicles by 2025: This forecast was made in 2017 and, again, widely reported as a nearly accomplished fact. A reality check: In 2022 there were no fully self-driving cars, and fewer than 2 percent of the world's 1.4 billion motor vehicles on the road were electric. Nor were those vehicles "green," as the electricity required for their operation came mostly from burning fossil fuels: In 2022 about 60 percent of all electricity came from burning coal and natural gas.


Vaclav Smil

By now, artificial intelligence (AI) should have taken over all medical diagnoses: After all, computers had already beaten not only the world's best chess player but even the best Go master, so how much more difficult could it be for the likes of IBM's Watson to do away with all radiologists? We know the answer: In January 2022 IBM announced that it was selling its Watson Health business and exiting health care. Apparently, doctors still matter! And the problems with electronic medicine affect even the simplest of tasks, such as replacing longhand charts with electronic health records (EHRs). According to a 2018 survey by Stanford Medicine researchers, 74 percent of responding physicians said that using an EHR system increased their workload and, even more important, 69 percent said that it took time away from seeing patients. In addition, EHRs expose private information to hackers (repeated attacks on hospitals demonstrate how easy it is to extort payments for restoring these essential data services); poorly designed interfaces cause endless frustration; and why should every doctor and nurse be a prodigious typist? Above all, what is there to admire about a model of care in which the physician looks at a screen rather than at a patient recounting her problems?

Such lists could be considerably extended, starting with puerile promises of leading alternative lives (as lifelike avatars) in a realistic 3-D virtual space: The most prominent testament to this delusion is Facebook's 2021 renaming of itself as Meta, in the belief that people would prefer to live in an electronic metaverse (I cannot find suitable adjectives to describe this mode of reasoning, if reasoning is even the right word for it). Another obvious candidate is the astonishing power of genetic engineering enabled by CRISPR, an effective new method for editing genes by altering DNA sequences and modifying gene functions: In sensational reporting, there is only a short distance between this ability and genetically redesigned worlds. After all, has not a Chinese geneticist already begun to design babies, only to be stopped by insufficiently innovative bureaucrats? And just one more recent example: Franklin Templeton's 2022 advertisement that asked, "What if growing your own clothes was as simple as printing your own car?" Apparently, the latter (never achieved) option is now considered the template for simplicity. What a perfect solution at a time when, in 2022, even major carmakers struggled to get enough materials and microprocessors for their production lines: just print it all at home!

In the grand scheme of things, improving what we know and making it universally available might bring more benefits to more people in a shorter period of time than focusing overly on invention and hoping that it will bring miraculous breakthroughs. To forestall the obvious critique: This is not an argument against the determined pursuit of new inventions, merely a plea for a better balance between the quest for (perhaps, but not assuredly) stunning future gains and the deployment of well-mastered, but still far from universally applied, knowledge and achievements.