Why don’t robots have rights?

- When we discuss animal rights and welfare, we often reference two ideas: sentience (the ability to feel) and sapience (the capacity for complex intelligence).

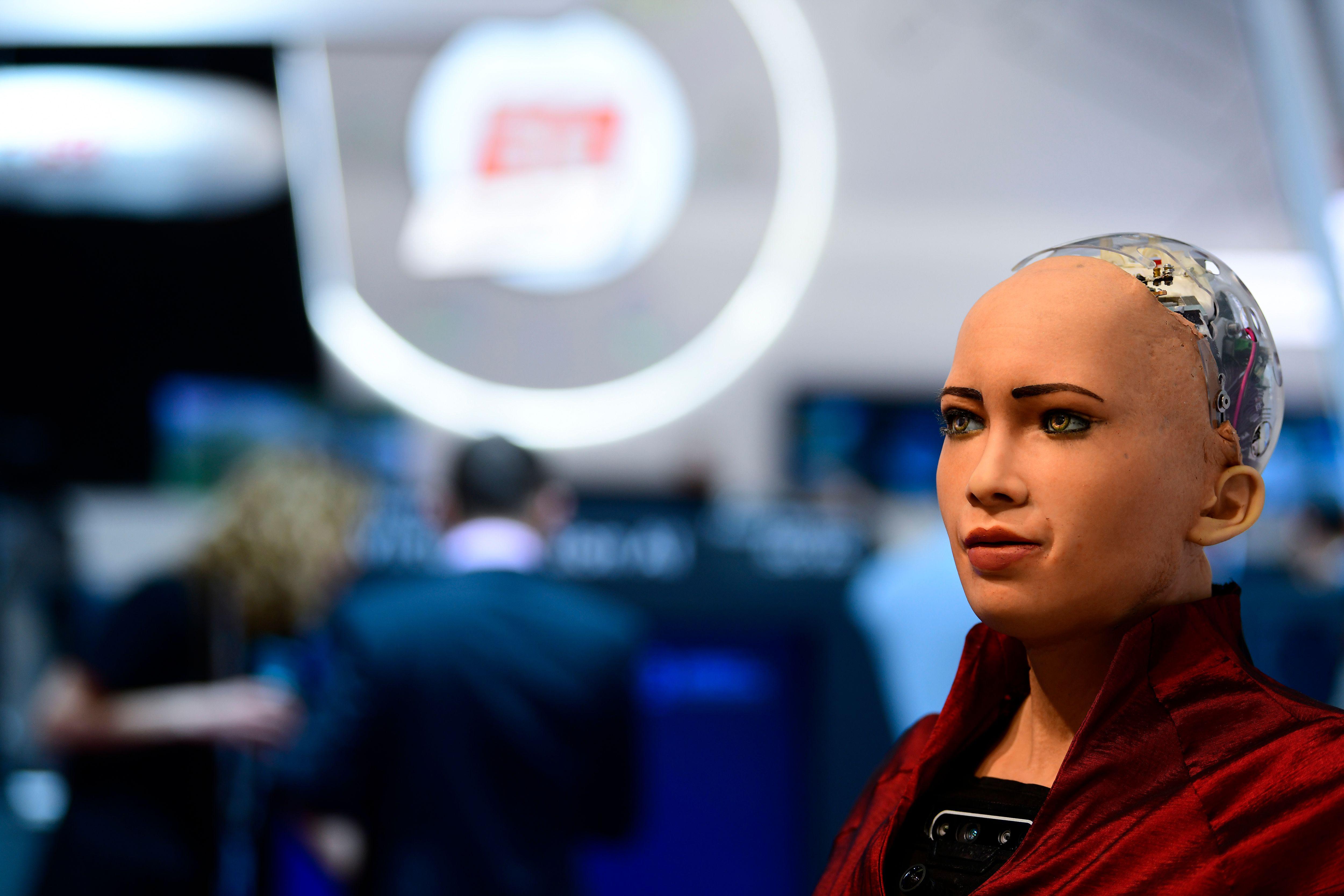

- Yet, when we discuss robots and advanced AI, these two ideas are curiously absent from the debate.

- Perhaps we are on a technological threshold between “AI” and “dignified AI.” Maybe it’s time to talk more about robot rights.

In 2010, the EU issued a legal directive entitled “On the protection of animals used for scientific purposes.” It sets out which animals ought to have certain protections, and it requires all 27 EU member states to follow certain rules for the capture and testing of animals. It bans any procedure that would bring those animals “severe pain, suffering, or distress, which is likely to be long-lasting and cannot be ameliorated.” What’s more, research projects need an independent evaluation showing that using animals is genuinely necessary. On this list are nonhuman primates, other mammals, and the rest of the vertebrates. (Interestingly, the directive explicitly counts cyclostomes, such as lampreys, among the vertebrates, and it extends protection to cephalopods, such as octopuses, as honorary vertebrates.)

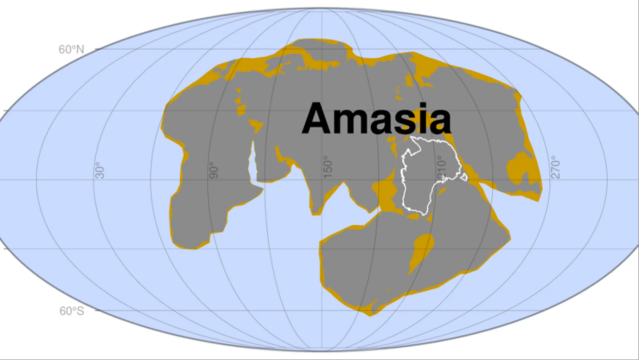

While the directive explicitly states that “all animals have intrinsic value,” the document is basically an exercise in justifying how some animals are more valuable than others. But what criteria should we use if we are going to rank species? If we imagine a long line of animals from amoeba to humans, at what point do we draw a line and say, “After this point, things have rights”?

It’s an issue that applies more and more to another thorny area: robot rights. Here, too, we can draw a similar line. At one end are calculators, and at the other end are the transcendent, omniscient AIs of science fiction. Where does our line fall now? When does a computer have rights? And when, if ever, is a robot a person?

Future slaves

In most science fiction, robots are depicted as slaves or resources to be exploited. They almost always have fewer rights, if any, than good old blood-and-genes Homo sapiens. In Star Wars, droids use language, they plan, and they seem to be distressed by various things. Yet they are treated as little more than slaves. Blade Runner’s replicants, indistinguishable from “real” humans, are forced to work as soldiers or prostitutes. Star Trek even devotes an entire episode to the question of whether Data can choose his own life path. Science fiction tends to depict worlds largely devoid of robot rights.

This is because we are all raised with a kind of emotional disconnect from robots. They are set apart and different. It’s the norm of our times to treat computers and AI as objects to be used. Their wellbeing, their choices, and their “lives” are trivial matters, if they’re even matters at all.

All of which is a curious moral inconsistency. In our legal and ethical documents (such as the EU directive above), we often reference two concepts: sapience (complex intelligence) and sentience (the capacity to have subjective experiences or “feelings”), both of which we ascribe to animals in varying degrees. We do not know for certain that animals have either, but we assume they do. Even the EU directive says, “Animals should always be treated as sentient creatures.” This isn’t to say they are sentient, but rather that we should act as if they are. Why, then, are we so reluctant to ascribe the same qualities to sophisticated AI?

The reverse Turing test

The Turing test is a famous experiment in which a human judge asks an artificial intelligence a series of questions to determine whether its answers are indistinguishable from a human’s. It’s a question of intelligence and imitation. But what if we turned this on its head and made a “Robot Rights Turing Test”? What if we asked, “What is it about robots that excludes them from rights, protections, and respect?” What makes robots different to humans?

Here are just three possible suggestions and their replies.

1. Robots lack advanced, generalized intelligence (sapience). This is certainly true, at the moment. Your calculator is great at working with π and cos(x), but it can’t help you read road signs. Voice assistants are good at telling you the weather, but they can’t hold a conversation. There are three problems with this objection, though. First, there are a great many animals without much intelligence that we still respect and treat well. Second, there are some humans who lack advanced intelligence, such as babies or people with severe intellectual disabilities, to whom we still give rights. Third, given the speed of improvement in AI, this is a threshold we may soon cross. Are we really ready to unshackle computers and treat them as the equals of humans?

2. Robots cannot feel emotions like pain or love (sentience). This is a tricky area, not least because we don’t entirely know what emotions are. On a physicalist view, if we reduce feelings to hormones or electrochemical reactions in the brain, then it seems plausible that we could reproduce them in an AI. If we did, would that AI be given legal and ethical rights? The other issue is that we still encounter a human-centric bias here. If your friend cries, you assume they’re sad, not that they’re imitating sadness. If your mom takes off her jacket, you assume she’s hot. When an AI demonstrates a feeling (more the case in science fiction than in anything that exists today), why do we assume it is only imitating? Is it because…

3. Robots are made and programmed by humans. Even when a more advanced AI can “learn” from situations, it still needs a lot of human direction in the form of programming. Something so dependent on human agency, the argument goes, cannot be considered worthy of rights. There are two issues with this. First, while we don’t talk about humans being “programmed,” it’s not much of a stretch to say that’s exactly what our genes do. You are simply the result of your genetic wiring and your societal and parental input. Change the words, and little is different. Second, why does dependency exclude you from rights? Dogs, children, and the very elderly are dependent on humans, yet we do not let them be abused or mistreated. (As an aside, an early version of the “dependency = slavery” argument can be found in Aristotle.)

The wrong side of history

There are two questions hiding within one big issue. The first is whether an AI is worthy of personhood; this is perhaps too big a question for now (and one that might never be satisfactorily answered). The second, though, is at what point AI qualifies to be treated with respect and care. When can we no longer exploit or mistreat advanced robots?

It might well be that future generations will look back aghast at our behavior. If we imagine a future where sentient and sapient AI are treated just as humans are, we can also imagine how shocked that future would be at our age. If the AI of the 22nd century are our friends, colleagues, and gaming partners, will 21st-century exploitation become an awkward topic?

Perhaps we are on the precipice of “dignified AI.” No one is campaigning for calculators’ rights, but maybe we should start to reappraise how we look at the generalized, impressive AI we are creating. We have one foot on either side of a technological threshold, and it’s time to re-examine our ethical and societal values.

Jonny Thomson teaches philosophy in Oxford. He runs a popular account called Mini Philosophy and his first book is Mini Philosophy: A Small Book of Big Ideas.