Cosmology’s Biggest Conundrum Is A Clue, Not A Controversy

How fast is the Universe expanding? The results might be pointing to something incredible.

If you want to know how something in the Universe works, all you need to do is figure out how some measurable quantity will give you the necessary information, go out and measure it, and draw your conclusions. Sure, there will be biases and errors, along with other confounding factors, and they might lead you astray if you’re not careful. The antidote for that? Make as many independent measurements as you can, using as many different techniques as you can, to determine those natural properties as robustly as possible.

If you’re doing everything right, every one of your methods will converge on the same answer, and there will be no ambiguity. If one measurement or technique is off, the others will point you in the right direction. But when we try to apply this technique to the expanding Universe, a puzzle arises: we get one of two answers, and they’re not compatible with each other. It’s cosmology’s biggest conundrum, and it might be just the clue we need to unlock the biggest mysteries about our existence.

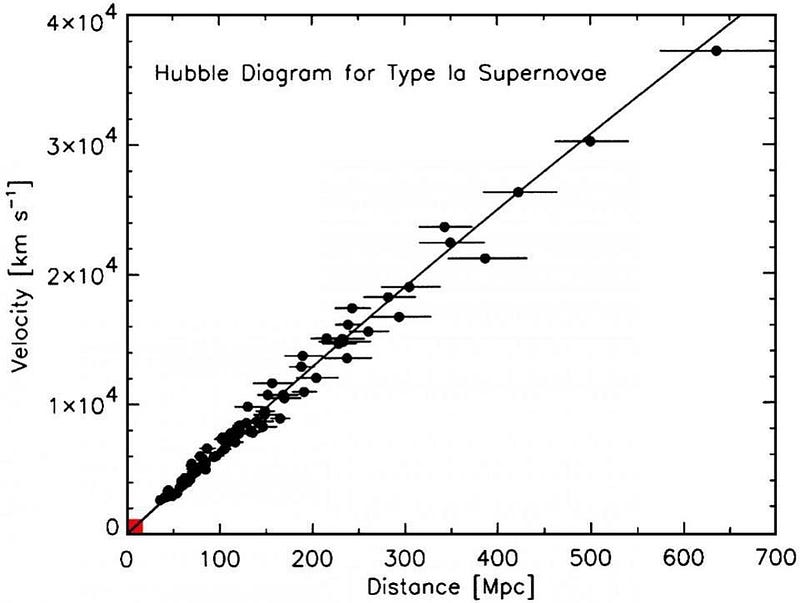

We’ve known since the 1920s that the Universe is expanding, with the rate of expansion known as the Hubble constant. Ever since, it’s been a quest for the generations to determine “by how much?”

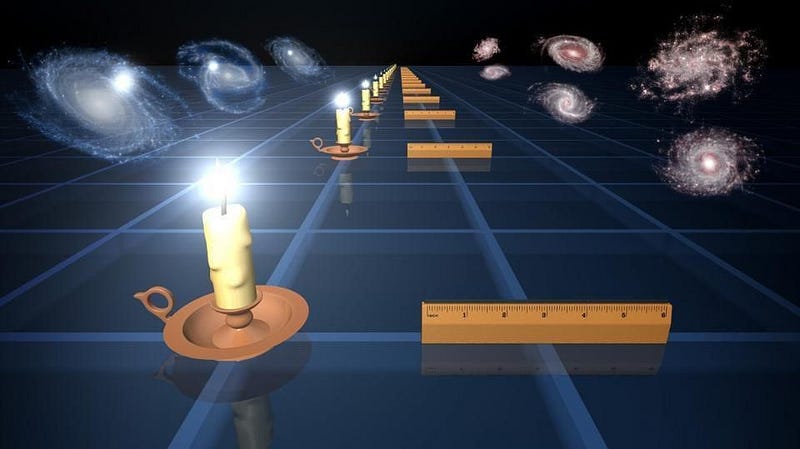

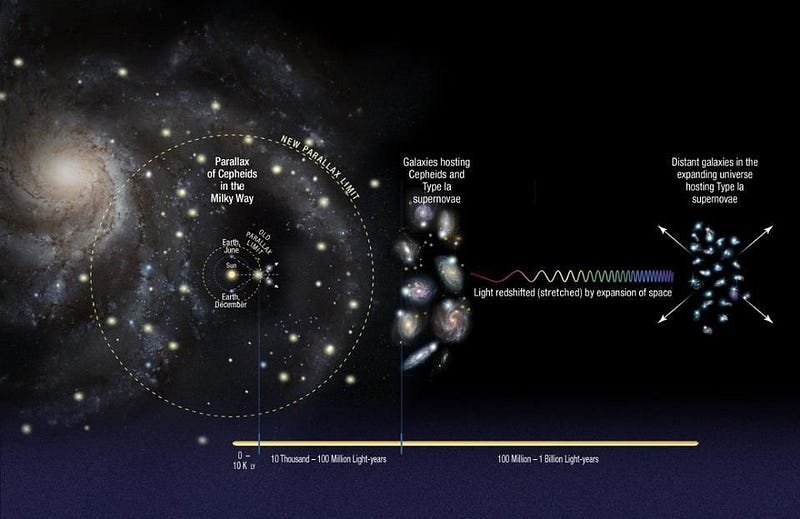

Early on, there was only one class of technique: the cosmic distance ladder. This technique was incredibly straightforward, and involved just four steps.

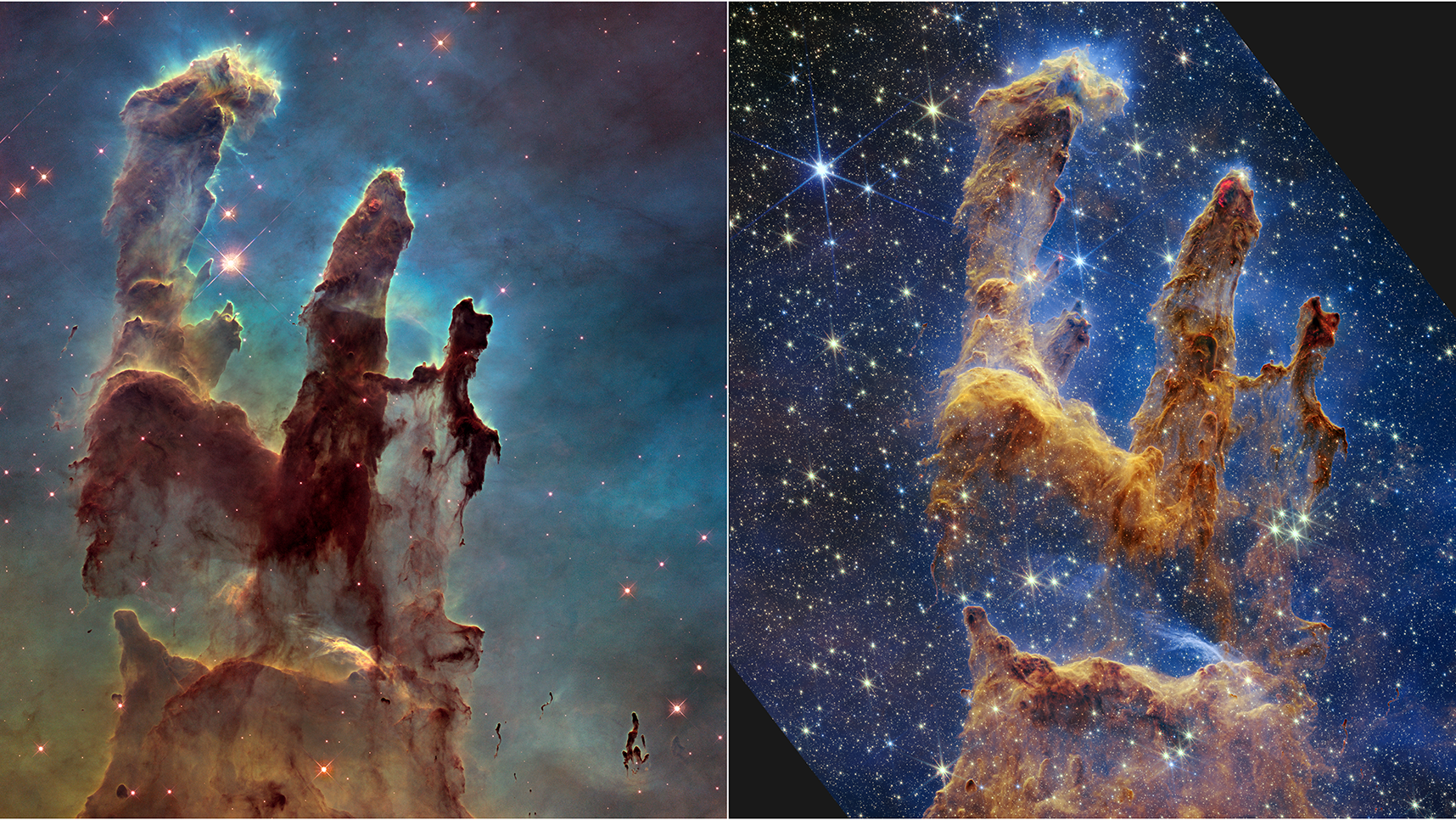

- Choose a class of object whose properties are intrinsically known, where if you measure something observable about it (like its period of brightness fluctuation), you know something inherent to it (like its intrinsic brightness).

- Measure the observable quantity, and determine what its intrinsic brightness is.

- Then measure the apparent brightness, and use what you know about cosmic distances in an expanding Universe to determine how far away it must be.

- Finally, measure the redshift of the object in question.
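The first three steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any team’s actual pipeline. It assumes a Cepheid period-luminosity relation whose coefficients (`PL_SLOPE`, `PL_ZERO_POINT`) are hypothetical placeholders of roughly the right size. It ignores dust extinction, and it turns brightness into distance with the standard distance modulus, m - M = 5 log10(d / 10 pc).

```python
import math

# Hypothetical period-luminosity (Leavitt law) coefficients: placeholders of
# roughly the right size for Cepheids, not a published calibration.
PL_SLOPE = -2.43
PL_ZERO_POINT = -4.05  # assumed absolute magnitude of a 10-day Cepheid


def absolute_magnitude_from_period(period_days: float) -> float:
    """Steps 1-2: turn the observed pulsation period into an intrinsic brightness."""
    return PL_SLOPE * (math.log10(period_days) - 1.0) + PL_ZERO_POINT


def distance_pc(apparent_mag: float, absolute_mag: float) -> float:
    """Step 3: invert the distance modulus m - M = 5 log10(d / 10 pc).

    Ignores dust extinction and cosmological corrections, which is only
    reasonable for relatively nearby objects.
    """
    return 10.0 ** ((apparent_mag - absolute_mag) / 5.0 + 1.0)


if __name__ == "__main__":
    # A made-up Cepheid: 30-day period, apparent magnitude 25.0.
    M = absolute_magnitude_from_period(30.0)
    d = distance_pc(25.0, M)
    print(f"M = {M:.2f}, d = {d / 1e6:.1f} Mpc")
```

Longer-period Cepheids come out intrinsically brighter (more negative M), so at the same apparent brightness they must be farther away.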

The redshift is what ties it all together. As the Universe expands, any light traveling through it will also stretch. Light, remember, is a wave, and has a specific wavelength. That wavelength determines what its energy is, and every atom and molecule in the Universe has a specific set of emission and absorption lines that only occur at specific wavelengths. If you can measure at what wavelength those specific spectral lines appear in a distant galaxy, you can determine how much the Universe has expanded from the time it left the object until it arrived at your eyes.
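In numbers, the redshift is z = (observed wavelength - emitted wavelength) / emitted wavelength. The factor 1 + z is how many times larger the Universe is when the light arrives than when it was emitted. The sketch below uses the hydrogen-alpha line, with a rest wavelength of about 656.28 nm in air. The "observed" wavelength is a hypothetical value chosen for illustration.

```python
# Rest-frame wavelength of the hydrogen-alpha line (in air), in nanometres.
H_ALPHA_REST_NM = 656.28


def redshift(observed_nm: float, rest_nm: float) -> float:
    """z = (observed - emitted) / emitted."""
    return (observed_nm - rest_nm) / rest_nm


def stretch_factor(z: float) -> float:
    """1 + z: how much the Universe has expanded while the light was in transit."""
    return 1.0 + z


if __name__ == "__main__":
    observed = 662.84  # hypothetical measurement of H-alpha in a distant galaxy
    z = redshift(observed, H_ALPHA_REST_NM)
    print(f"z = {z:.4f}; expansion factor since emission = {stretch_factor(z):.4f}")
```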

Combine the redshift and the distance for a variety of objects all throughout the Universe, and you can figure out how fast it’s expanding in all directions, as well as how the expansion rate has changed over time.
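For nearby objects, combining the two measurements amounts to fitting a straight line, v = H0 × d, where the recession velocity is approximated as v ≈ cz (valid only for z much less than 1). Here is a minimal least-squares sketch. The galaxy distances and redshifts are made up for illustration, and the fit deliberately ignores peculiar velocities and measurement errors.

```python
from typing import Sequence

C_KM_S = 299_792.458  # speed of light in km/s


def velocity_from_redshift(z: float) -> float:
    """Low-redshift approximation v ≈ c z (only valid for z << 1)."""
    return C_KM_S * z


def fit_hubble_constant(distances_mpc: Sequence[float],
                        velocities_km_s: Sequence[float]) -> float:
    """Least-squares slope of v = H0 * d through the origin: H0 = sum(d v) / sum(d^2)."""
    if len(distances_mpc) != len(velocities_km_s) or not distances_mpc:
        raise ValueError("need equal-length, non-empty inputs")
    num = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den


if __name__ == "__main__":
    # Made-up galaxies: distances in Mpc and their measured redshifts.
    distances = [10.0, 20.0, 40.0, 80.0]
    redshifts = [0.0024, 0.0046, 0.0095, 0.0186]
    velocities = [velocity_from_redshift(z) for z in redshifts]
    print(f"H0 ≈ {fit_hubble_constant(distances, velocities):.1f} km/s/Mpc")
```

Measuring how the expansion rate has changed over time requires objects at large redshift, where v ≈ cz breaks down and a full cosmological model is needed instead.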

All throughout the 20th century, scientists used this technique to try and determine as much as possible about our cosmic history. Cosmology — the scientific study of what the Universe is made of, where it came from, how it came to be the way it is today, and what its future holds — was derided by many as a quest for two parameters: the current expansion rate and how the expansion rate evolved over time. Until the 1990s, scientists couldn’t even agree on the first of these.

They were all using the same technique, but made different assumptions. Some groups used different types of astronomical objects from one another; others used different instruments with different measurement errors. Some classes of object turned out to be more complicated than we originally thought they’d be. And even setting those issues aside, many problems remained.

If the Universe were expanding too quickly, there wouldn’t have been enough time to form planet Earth. If we can find the oldest stars in our galaxy, we know the Universe has to be at least as old as the stars within it. And if the expansion rate evolved over time, because there was something other than matter or radiation in it — or a different amount of matter than we’d assumed — that would show up in how the expansion rate changed over time.
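A rough way to see the age argument is the Hubble time, 1/H0, which sets the characteristic timescale of the expansion. It is only an order-of-magnitude proxy, since the true age also depends on what the Universe contains. Still, it shows why a faster expansion rate leaves less time for stars and planets to form. This is a minimal sketch; the unit constants are standard conversions.

```python
# 1 Mpc expressed in km, and one gigayear (Julian years) in seconds.
KM_PER_MPC = 3.0856775814913673e19
SECONDS_PER_GYR = 3.15576e16


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Characteristic expansion timescale 1/H0, in billions of years."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1.0 / h0_per_second / SECONDS_PER_GYR


if __name__ == "__main__":
    for h0 in (50.0, 72.0, 100.0):
        print(f"H0 = {h0:5.1f} km/s/Mpc -> 1/H0 ≈ {hubble_time_gyr(h0):.1f} Gyr")
```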

Resolving these early controversies was the primary scientific motivation for building the Hubble Space Telescope. Its Key Project was to make this measurement, and it was tremendously successful. The value it obtained was 72 km/s/Mpc, with just a 10% uncertainty. This result, published in 2001, solved a controversy as old as Hubble’s law itself. Alongside the discovery of dark matter and dark energy, it seemed to give us a fully accurate and self-consistent picture of the Universe.

The distance ladder group has grown far more sophisticated over the intervening time. There is now an incredibly large number of independent ways to measure the expansion history of the Universe:

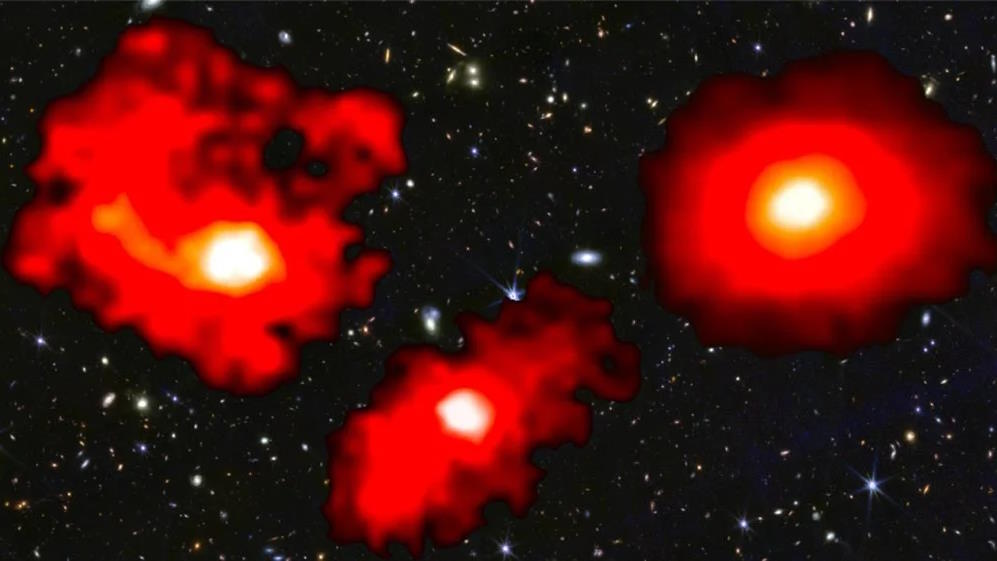

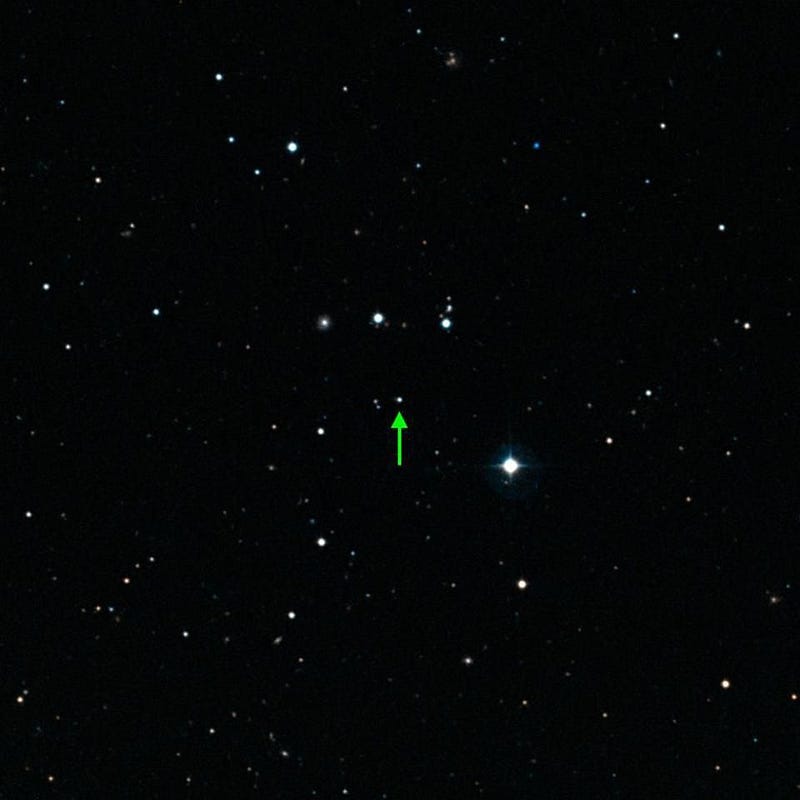

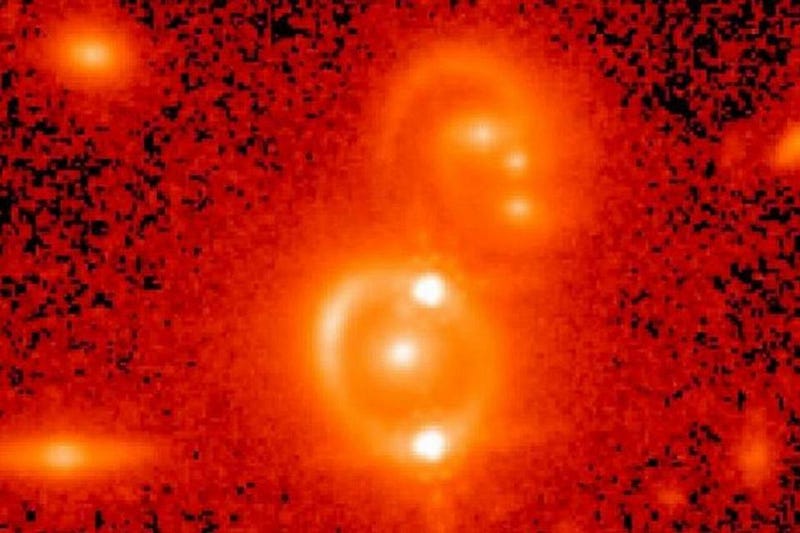

- using distant gravitational lenses,

- using supernova data,

- using rotational and dispersion properties of distant galaxies,

- or using surface brightness fluctuations from face-on spirals,

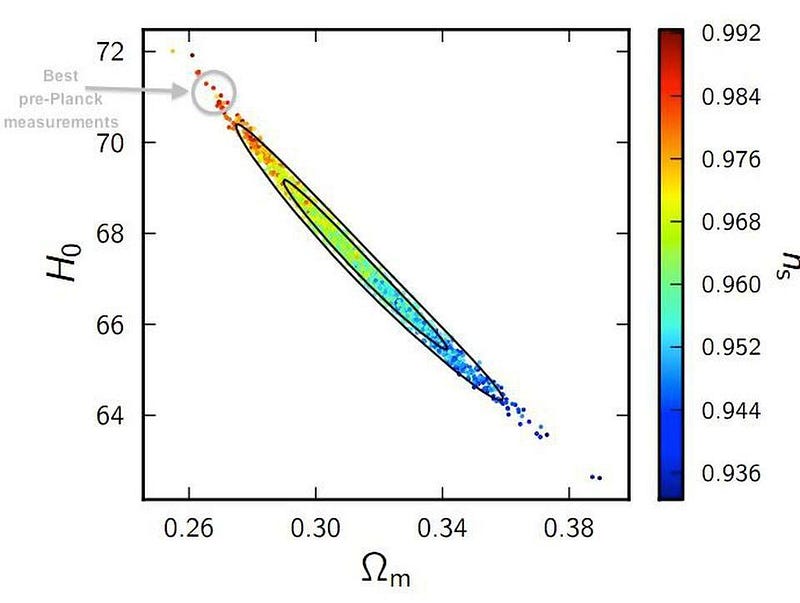

and they all yield the same result. Regardless of whether you calibrate them with Cepheid variable stars, RR Lyrae stars, or red giant stars about to undergo helium fusion, you get the same value: ~73 km/s/Mpc, with uncertainties of just 2–3%.

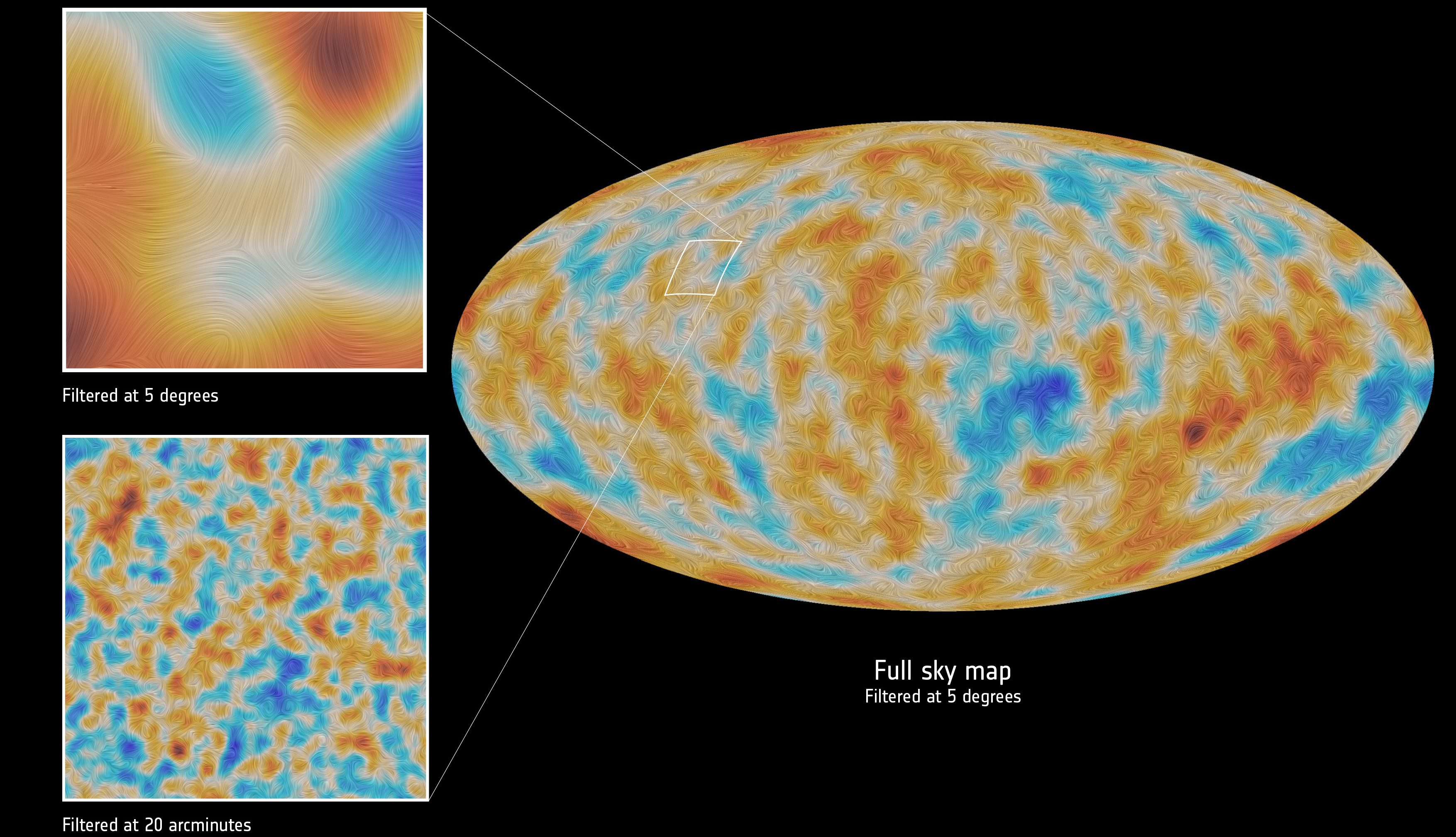

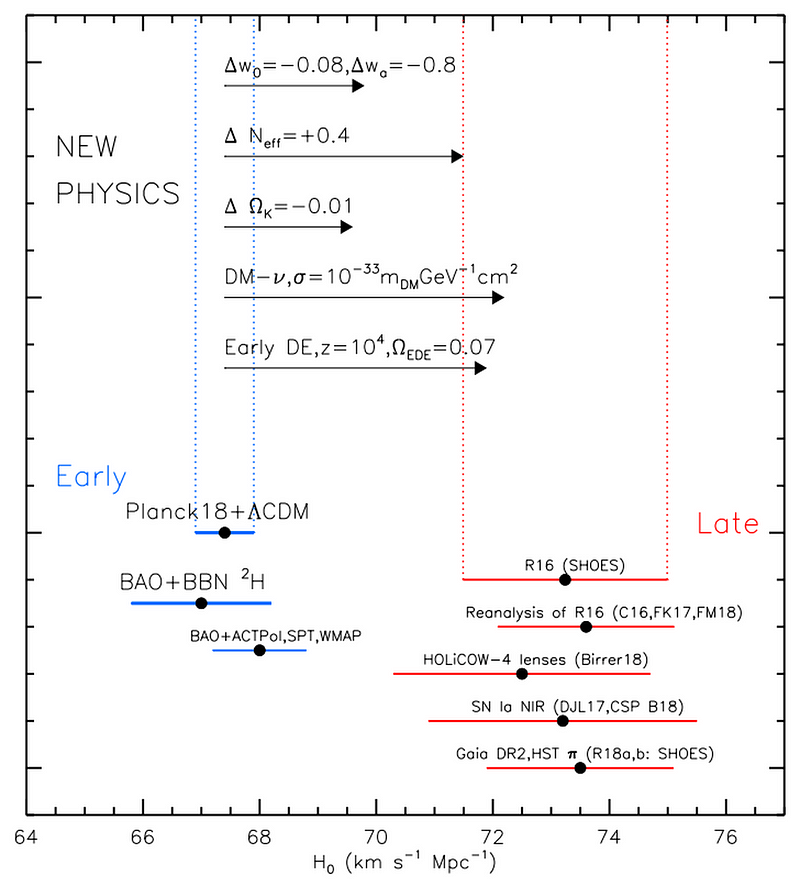

It would be a tremendous victory for cosmology, except for one problem. It’s now 2019, and there’s a second way to measure the expansion rate of the Universe. Instead of looking at distant objects and measuring how the light they’ve emitted has evolved, we can use relics from the earliest stages of the Big Bang, such as the cosmic microwave background. When we do, we get values of ~67 km/s/Mpc, with a claimed uncertainty of just 1–2%. These numbers differ by about 9%, and their uncertainties do not overlap.
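The arithmetic behind "about 9%" and "do not overlap" can be checked directly. This sketch takes the difference as a fraction of the lower value; relative to 73 it would be closer to 8%. It also treats the quoted percentages as symmetric fractional uncertainties and tries both the optimistic and pessimistic ends of the quoted ranges. Both of those readings are assumptions made for illustration.

```python
from typing import Tuple

LADDER_H0 = 73.0  # km/s/Mpc, distance-ladder value quoted above
RELIC_H0 = 67.0   # km/s/Mpc, early-relic value quoted above


def percent_difference(a: float, b: float) -> float:
    """Difference between a and b as a percentage of b."""
    return 100.0 * (a - b) / b


def interval(value: float, frac_err: float) -> Tuple[float, float]:
    """(low, high) bounds for value ± frac_err * value."""
    return value * (1.0 - frac_err), value * (1.0 + frac_err)


def intervals_overlap(x: Tuple[float, float], y: Tuple[float, float]) -> bool:
    """True if the closed intervals x and y share at least one point."""
    return x[0] <= y[1] and y[0] <= x[1]


if __name__ == "__main__":
    print(f"difference: {percent_difference(LADDER_H0, RELIC_H0):.1f}%")
    # Optimistic and pessimistic ends of the quoted uncertainty ranges.
    for ladder_err, relic_err in ((0.02, 0.01), (0.03, 0.02)):
        ladder = interval(LADDER_H0, ladder_err)
        relic = interval(RELIC_H0, relic_err)
        verdict = "overlap" if intervals_overlap(ladder, relic) else "no overlap"
        print(f"ladder {ladder}, relics {relic}: {verdict}")
```

Even with the largest quoted uncertainties, the ladder's lower bound (about 70.8) stays above the relics' upper bound (about 68.3).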

This time, however, things are different. We can no longer expect that one group will be right and the other will be wrong. Nor can we expect that the answer will be somewhere in the middle, and that both groups are making some sort of error in their assumptions. The reason we can’t count on this is that there are too many independent lines of evidence. If we try to explain one measurement with an error, it will contradict another measurement that’s already been made.

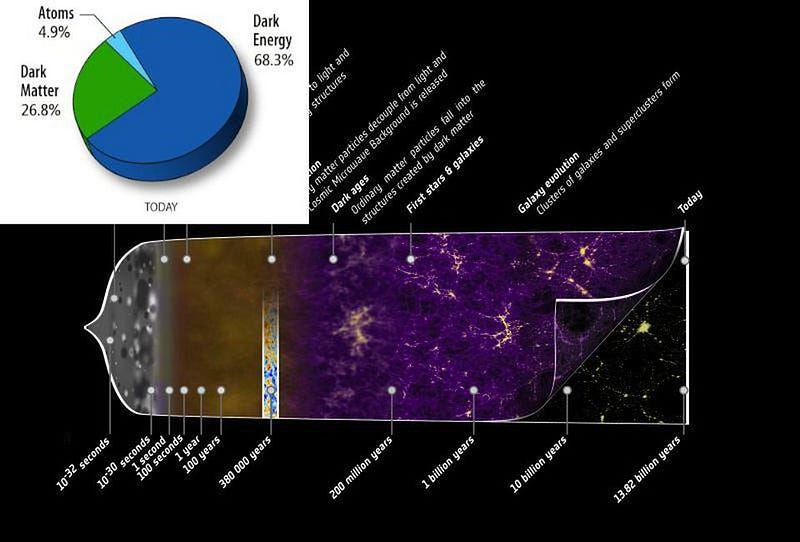

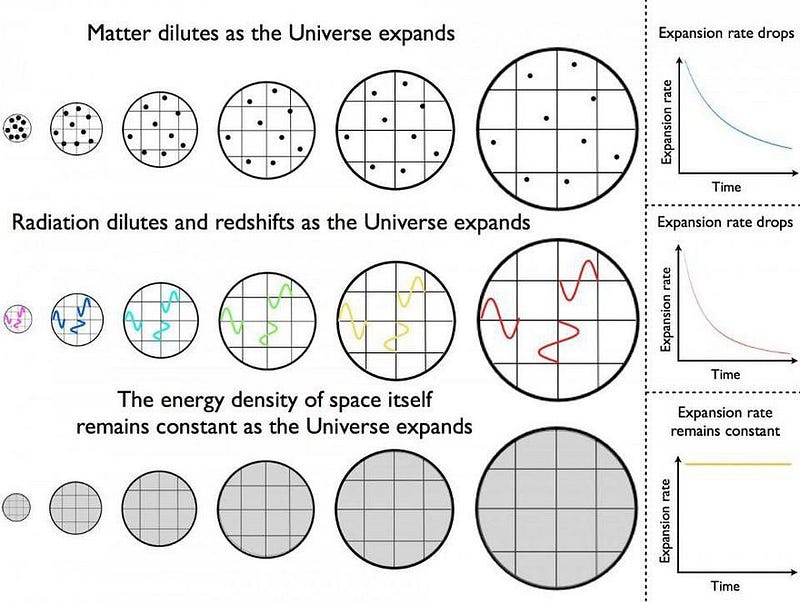

The total amount of stuff that’s in the Universe is what determines how the Universe expands over time. Einstein’s General Relativity ties the energy content of the Universe, the expansion rate, and the overall curvature together. If the Universe expands too quickly, that implies that there’s less matter and more dark energy in it, and that will conflict with observations.
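General Relativity makes this link precise through the first Friedmann equation. The notation below is the standard one, not used elsewhere in this article. H is the expansion rate and a is the scale factor, with a = 1 today. Each Ω is a component's present-day density as a fraction of the critical density. For radiation (r), matter (m), curvature (k) and a cosmological constant (Λ):

$$
H^2(a) = H_0^2\left[\Omega_r\,a^{-4} + \Omega_m\,a^{-3} + \Omega_k\,a^{-2} + \Omega_\Lambda\right],
\qquad
\Omega_k = 1 - \Omega_r - \Omega_m - \Omega_\Lambda,
\qquad
\Omega_i \equiv \frac{\rho_i}{\rho_{\mathrm{crit}}},\quad
\rho_{\mathrm{crit}} = \frac{3H_0^2}{8\pi G}.
$$

Each term scales differently with a. This is why the mix of components, and not just today's value H0, determines how the expansion rate changes over cosmic history.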

For example, we know that the total amount of matter in the Universe has to be around 30% of the critical density, as seen from the large-scale structure of the Universe, galaxy clustering, and many other sources. We also see that the scalar spectral index — a parameter that tells us how gravitation will form bound structures on small versus large scales — has to be slightly less than 1.
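To give "30% of the critical density" a physical scale, the sketch below evaluates ρ_crit = 3H0² / (8πG) and converts 30% of it into proton masses per cubic metre. It uses the early-relic value H0 = 67 km/s/Mpc purely as an example input. The constants are standard values, and the proton-mass conversion is only a convenient yardstick: most of that matter is dark matter, not protons.

```python
import math

G = 6.67430e-11                    # Newton's constant, m^3 kg^-1 s^-2
M_PER_MPC = 3.0856775814913673e22  # metres in one megaparsec
PROTON_MASS_KG = 1.67262192e-27


def critical_density(h0_km_s_mpc: float) -> float:
    """rho_crit = 3 H0^2 / (8 pi G), in kg/m^3."""
    h0_si = h0_km_s_mpc * 1e3 / M_PER_MPC  # convert km/s/Mpc to 1/s
    return 3.0 * h0_si**2 / (8.0 * math.pi * G)


if __name__ == "__main__":
    rho_c = critical_density(67.0)
    rho_m = 0.30 * rho_c  # matter at ~30% of critical, as stated above
    print(f"critical density ≈ {rho_c:.2e} kg/m^3")
    print(f"matter ≈ {rho_m / PROTON_MASS_KG:.1f} proton masses per cubic metre")
```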

If the expansion rate is too high, you not only get a Universe with too little matter and too high a scalar spectral index to agree with the Universe we have, you get a Universe that’s too young: 12.5 billion years old instead of 13.8 billion years old. Since we live in a galaxy with stars that have been identified as being more than 13 billion years old, this would create an enormous conundrum: one that cannot be reconciled.
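The age comes from integrating the expansion history: t0 = ∫ da / (a H(a)) from a = 0 to a = 1. Here is a minimal sketch for a flat Universe of matter plus a cosmological constant, neglecting radiation. It computes the integral numerically with the midpoint rule and checks it against the known closed-form solution. The matter fraction Ωm = 0.31 is an assumed illustrative input. Because it holds the density parameters fixed while changing H0, it shows the trend rather than reproducing the specific figures quoted above, which depend on what else is allowed to vary.

```python
import math

KM_PER_MPC = 3.0856775814913673e19
SECONDS_PER_GYR = 3.15576e16


def hubble_time_gyr(h0: float) -> float:
    """1/H0 in Gyr, for H0 in km/s/Mpc."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def age_gyr(h0: float, omega_m: float, steps: int = 100_000) -> float:
    """Age t0 = integral of da / (a H(a)) over 0 < a <= 1, flat matter + Lambda.

    Uses the midpoint rule in the scale factor a, with
    a H(a) = H0 * sqrt(omega_m / a + omega_lambda * a^2).
    """
    omega_l = 1.0 - omega_m
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        a = (i + 0.5) * h
        total += h / math.sqrt(omega_m / a + omega_l * a * a)
    return total * hubble_time_gyr(h0)


def age_gyr_closed_form(h0: float, omega_m: float) -> float:
    """Analytic flat matter + Lambda age; requires 0 < omega_m < 1."""
    omega_l = 1.0 - omega_m
    return (2.0 / (3.0 * math.sqrt(omega_l))
            * math.asinh(math.sqrt(omega_l / omega_m))
            * hubble_time_gyr(h0))


if __name__ == "__main__":
    for h0 in (67.0, 73.0):
        print(f"H0 = {h0}: age ≈ {age_gyr(h0, 0.31):.2f} Gyr "
              f"(closed form {age_gyr_closed_form(h0, 0.31):.2f})")
```

With these assumed inputs, raising H0 from 67 to 73 km/s/Mpc pulls the age from roughly 13.9 to roughly 12.8 billion years. That is younger than the oldest stars mentioned above.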

But perhaps no one is wrong. Perhaps the early relics point to a true set of facts about the Universe:

- it is 13.8 billion years old,

- it does have roughly a 70%/25%/5% ratio of dark energy to dark matter to normal matter,

- it does appear to be consistent with an expansion rate on the low end, around 67 km/s/Mpc.

And perhaps the distance ladder also points to a true set of facts about the Universe, where it’s expanding at a larger rate today on cosmically nearby scales.

Although it sounds bizarre, both groups could be correct. The reconciliation could come from a third option that most people aren’t yet willing to consider. Instead of the distance ladder group being wrong or the early relics group being wrong, perhaps our assumptions about the laws of physics or the nature of the Universe are wrong. In other words, perhaps we’re not dealing with a controversy; perhaps what we’re seeing is a clue of new physics.

It is possible that the ways we measure the expansion rate of the Universe are actually revealing something novel about the nature of the Universe itself. Something about the Universe could be changing with time, which would be yet another explanation for why these two different classes of technique could yield different results for the Universe’s expansion history. Some options include:

- our local region of the Universe has unusual properties compared to the average (which is already disfavored),

- dark energy is changing in an unexpected fashion over time,

- gravity behaves differently than we’ve anticipated on cosmic scales,

- or there is a new type of field or force permeating the Universe.

The option of evolving dark energy is of particular interest and importance, as this is exactly what NASA’s future flagship mission for astrophysics, WFIRST, is being explicitly designed to measure.

Right now, we say that dark energy is consistent with a cosmological constant. What this means is that, as the Universe expands, dark energy’s density remains a constant, rather than becoming less dense (like matter does). Dark energy could also strengthen over time, or it could change in behavior: pushing space inwards or outwards by different amounts.
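Cosmologists usually describe this behavior with an equation-of-state parameter w. This is standard notation, not used elsewhere in this article. For a constant w, energy conservation in an expanding Universe gives a density that scales as a^(-3(1 + w)), where a is the scale factor. Matter has w = 0 and dilutes as a^-3. A cosmological constant has w = -1 and stays fixed. Values slightly above or below -1 describe dark energy that weakens or strengthens over time. The specific values -0.9 and -1.1 below are hypothetical examples.

```python
def density_ratio(a: float, w: float) -> float:
    """rho(a) / rho(today) for a component with constant equation of state w.

    rho scales as a^(-3 (1 + w)), with a = 1 today and a = 2 once the
    Universe has doubled in size.
    """
    return a ** (-3.0 * (1.0 + w))


if __name__ == "__main__":
    components = {
        "matter (w = 0)": 0.0,
        "cosmological constant (w = -1)": -1.0,
        "weakening dark energy (w = -0.9, hypothetical)": -0.9,
        "strengthening dark energy (w = -1.1, hypothetical)": -1.1,
    }
    for name, w in components.items():
        print(f"{name}: density at a = 2 is {density_ratio(2.0, w):.3f} x today's")
```

A dark energy whose w itself drifts with time would need a function w(a) in place of a constant. That is the kind of departure a mission like WFIRST is meant to detect.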

Our best constraints on this today, in a pre-WFIRST world, show that dark energy is consistent with a cosmological constant to about the 10% level. With WFIRST, we’ll be able to measure any departures down to the 1% level: enough to test whether evolving dark energy holds the answer to the expanding Universe controversy. Until we have that answer, all we can do is continue to refine our best measurements, and look at the full suite of evidence for clues as to what the solution might be.

This is not some fringe idea, where a few contrarian scientists are overemphasizing a small difference in the data. If both groups are correct, and no one can find a flaw in what either one has done, it might be the first clue toward our next great leap in understanding the Universe. Nobel Laureate Adam Riess, perhaps the most prominent figure presently researching the cosmic distance ladder, was kind enough to record a podcast with me, discussing exactly what all of this might mean for the future of cosmology.

It’s possible that we have made a mistake somewhere along the way. It’s possible that when we identify it, everything will fall into place just as it should, and there won’t be a controversy or a conundrum any longer. But it’s also possible that the mistake lies in our assumptions about the simplicity of the Universe, and that this discrepancy will pave the way to a deeper understanding of our fundamental cosmic truths.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.