The Idea is AI Ears That Are Better Than Your Own

(DOPPLER LABS)

It could be argued that no animal has a more complex soundscape to navigate than a human. We lack cats’ handy ability to turn an ear up to 180° to home in on a sound, and they hear much better than we do anyway.

Yet we often have to sonically focus on specific sounds within the din (our child’s cry, a friend talking in a loud club, and so on), and it can be difficult. What if AI could make our ears smarter? One company plans to release a Trojan horse of a hardware platform early next year: $299 audiophile Bluetooth earbuds. Their real purpose? The dawn of AI-enhanced human hearing. (Also, on a minor note, possibly the end of the phone.)

The earbuds are called Here One.

The new company behind them, Doppler Labs, has been giving writers (not this one) impressive demos of the upcoming device. Its feature list is eye-opening, adding up to both a vision of humans’ enhanced future and an inventory of the technological challenges to be overcome. The product is an example of the kind of personalized, technology-based human enhancements we’re likely to see much more of.

Here’s what Doppler expects the Here Ones and their accompanying phone app to be able to do when they’re released. This is in addition to the obvious features: wireless streaming audio, wireless phone calls, and controlling Siri, Google Voice, and other virtual assistants.

Mix streamed music with sounds around you so you can hear both.

Previous attempts at capturing ambient audio sounded weird and were slightly delayed. Doppler has apparently finally worked this out. Upon first inserting the earbuds, WIRED writer David Pierce found the Here One version of the real world so transparent and immediate that he didn’t at first realize he was hearing it. The idea is to offer people a way to listen to recordings without having to block out the world.

Amplify or reduce the volume of a speaker you need to hear.

WIRED’s conversation with a Doppler exec continued normally until the exec suddenly pulled his voice out of the Here Ones altogether. The ability to focus on people you want to hear, and block out those you don’t, is something we could totally use and that we can’t do organically.

Bring down the volume of unwanted noise, or eliminate it altogether.

Here One’s smart filtering depends on machine learning. It requires an expansive knowledge of the sounds users may encounter, and there are a lot of them. Doppler’s Fritz Lanman tells Quartz, “Babies are ridiculously variable. [They’re] wide-band and unpredictable and unique.”

To that end, Doppler’s been capturing audio samples (over a million so far, from five continents) that it converts into sound-detection algorithms for Here One. One of the most interesting things the company’s doing, and part of the reason we invoked the Trojan horse metaphor earlier, is that it’s collecting audio data from the purchased Here Ones out in the world, and continually feeding that data back to users as new algorithms. (Doppler says the data is anonymized.) So the company is essentially crowdsourcing its system’s detection algorithms, and the more earbuds it sells, the larger the crowd.

Listen in different directions.

The earbuds can be set to listen only to what’s physically in front of you, or behind you, blocking everything else out. Doppler’s deciding what to call the backward listening, considering “eavesdrop” or “spy” mode. It’s basically just like ear-turning “cat” mode. Envy the furry ones no more.

Customize the sound around you.

A set of controls lets you change the world you hear, allowing each of us our own soundscape. It’s kind of a new form of bubble reality, which may be a good thing or a bad thing. Either way, you’ll be able to adjust the volumes of sounds (smart filters let you target them), change their tonal characteristics with EQ, or add audio effects to them.

Create a personal listening profile.

Here Ones note your listening habits and suggest adjustments based on them as you enter different audio environments. This is another step out of harsh sonic reality into your own curated acoustic environment. Good thing?

Doppler’s also offering journalists a peek at other features that aren’t quite ready for prime time.

Translate languages in real time.

In the demo Doppler gave WIRED, a staffer told Pierce a joke in Spanish that he heard in English. An AI Babel fish. It’s not perfect yet (the punchline arrived about five seconds late, for one thing), but the value of this capability is obvious. It’s safe to say that this is something people who travel have been waiting for, and it could usher in a world-changing cross-culturalism.

Automatically recognize and boost the volume of people important to you.

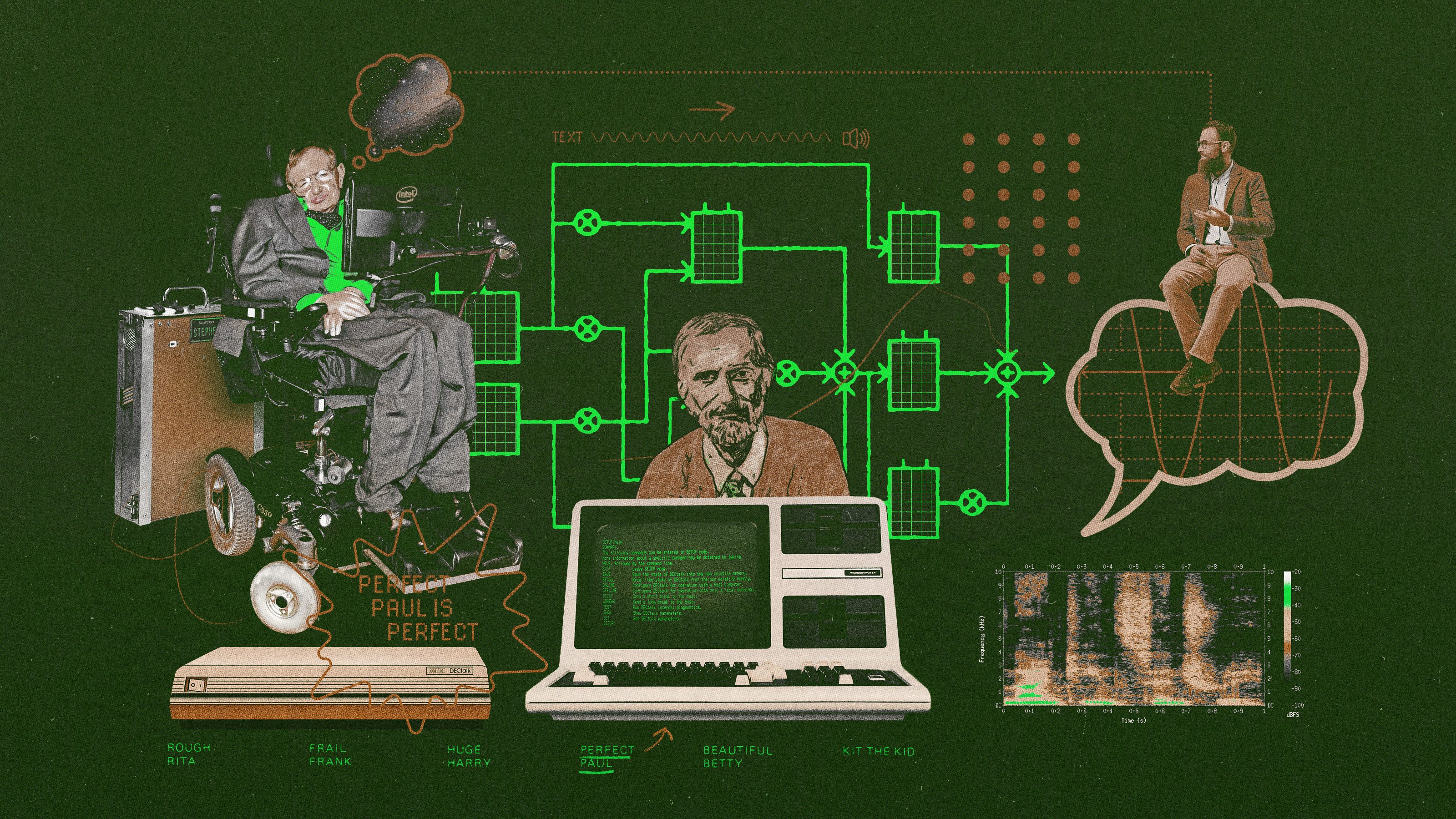

This would be fantastic for things like hearing your baby crying through a wall of background noise. Oh, also, the inverse would be true: You could have Here Ones automatically mute an annoying friend. But real-world voice ID is super-hard from an AI point of view. Siri, Amazon Echo, Google Voice, and Cortana have it easy: They take a few listens to your voice in a quiet environment and they know you. Picking out someone’s voice from a confused and shifting soundscape is far more problematic, and, according to Quartz, Doppler’s not there yet.

About the future of your phone.

If you can take calls over Bluetooth straight on your earbuds, why do you need an app on a phone? Will we still need phone screens and apps down the road? It may be that we do: Complex information is still more easily apprehended visually. WIRED notes that technologist Chris Noessel, author of Make it So: Interface Lessons from Sci-Fi, had this to say about the way Samantha, the AI OS, spoke to her human in the movie Her: “Samantha talks to Theodore through the earpiece frequently. When she needs to show him something, she can draw his attention to the cameo phone or a desktop screen.” Still, Doppler’s looking for ways to remove the phone from the equation: “We know that as soon as the user pulls the phone out of their pocket, that’s friction to the experience,” says Sean Furr, head of UX and UI at Doppler. Hello, Google Glass?

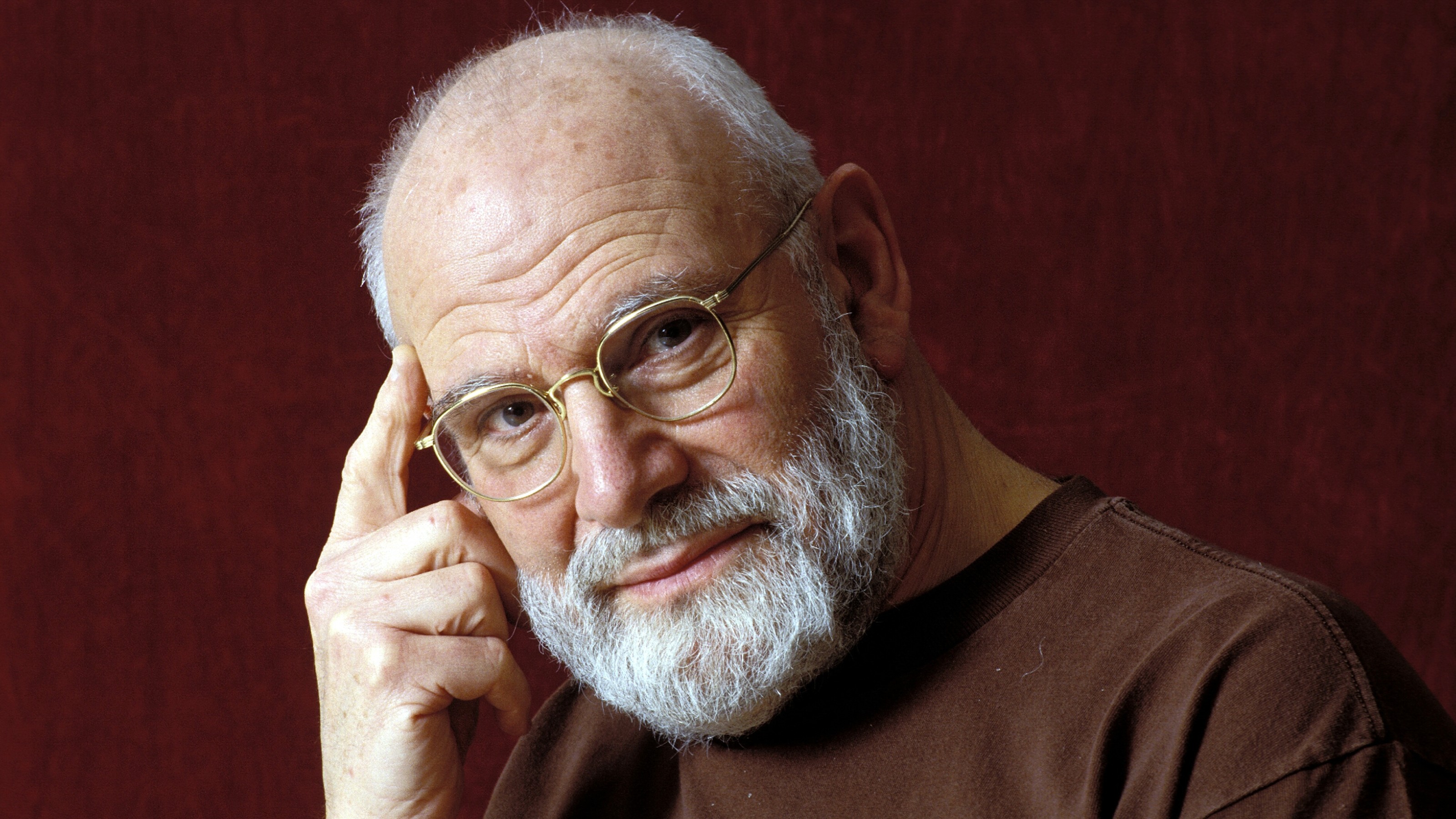

There are big issues here about what we are, how we relate to each other, and how we experience and move through the world. Is each of us living in our own sonic world — not to mention our Google Glasses, etc. — a good thing, or will it make a shared sense of reality even harder to attain? Impossible to know until the tech truly becomes integrated into our lives. Can’t wait.