Are Alexa, Siri, and Their Pals Teaching Kids to Be Rude?

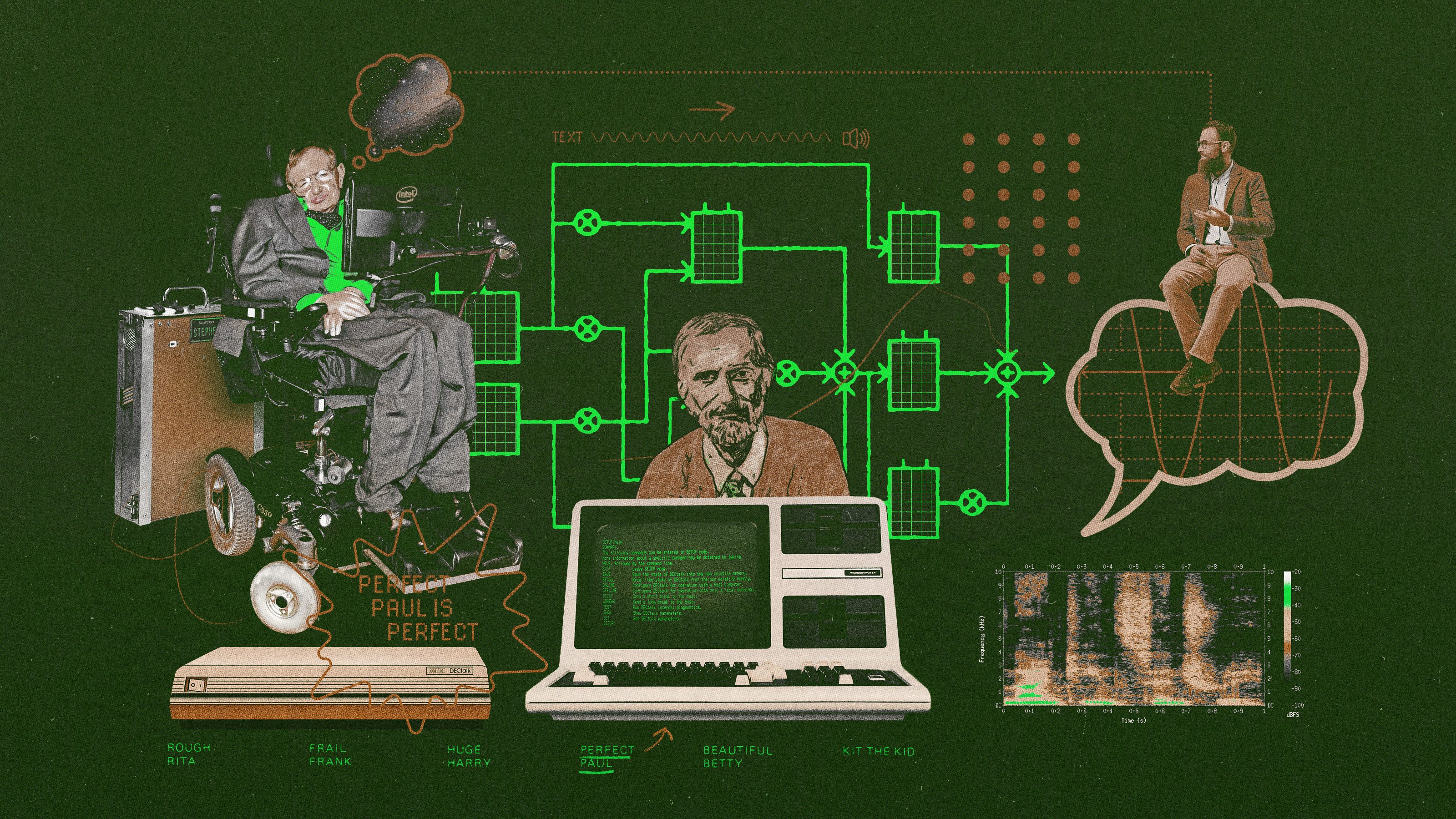

Image source: medley/Shutterstock/Big Think

Our lives are beginning to be, um, “populated” by virtual assistants like Amazon’s Alexa, Google Assistant, Apple’s Siri, and Microsoft’s Cortana. For us adults, they’re handy, if occasionally flawed, helpers to whom we say “thank you” when they successfully complete a task, provided it didn’t take too many frustrating, failed attempts. This is a quaint impulse left over from traditional inter-human exchanges. Kids, though, are growing up with these things, and they may be learning a very different way of communicating. It’s okay if the lack of niceties (“please,” “thank you,” and the like) is confined to conversations with automatons, but since neural pathways are so susceptible to training, we have to wonder whether these habits will give rise to colder, less civil communication between people. Parents like Hunter Walk, writing on Medium, are wondering just what kind of little monsters we’re creating.

Neuroscientists generally agree that when we repeat an action, we build a neural pathway for doing so; the more we repeat it, the more fixed the pathway becomes. This is why a repeated mistake gets harder and harder to correct: we’ve in effect taught the brain to make it.

So what happens when kids get used to not saying “please” and “thank you,” and to generally disregarding the feelings of the people they speak with?

Of course, it’s not as if an intelligent assistant cares how you talk to it. When Rebecca of the hilarious Mommyproof blog professed her love to Alexa, she got a few responses, including “I cannot answer that question,” and “Aw. That’s nice.”

I told Siri I loved her three times and got these responses:

1. You hardly know me.

2. I value you. [I think I’ve been friend-zoned.]

3. Oh, stop.

Neither one says “I love you back.” At least they don’t lie. But they also present a model that’s pretty unaffectionate, meaning there are no virtual hugs to support little kids’ emotional needs.

And that’s worrisome in its own way, since the borderline between alive and not can be unclear to little children. Peter Kahn, a developmental psychologist at the University of Washington, studies human-robot interaction. He told Judith Shulevitz, writing for The New Republic, that even though kids understand that robots aren’t human, they still see virtual personalities as being sort of alive. Kahn says, “we’re creating a new category of being,” a “personified non-animal semi-conscious half-agent.” A child interacting with one of Kahn’s robots told him, “He’s like, he’s half living, half not.”

That nebulous status also threatens to turn a virtual assistant into something kids can practice bullying on. One parent, Avi Greengart, told Quartz, “I’ve found my kids pushing the virtual assistant further than they would push a human. [Alexa] never says ‘That was rude’ or ‘I’m tired of you asking me the same question over and over again.’”

Virtual assistants do teach good diction, which is nice, but that’s about it. They also serve up lots of information, at least some of which is appropriate. But we’re just at the dawn of interacting with computers by voice, so there’s still much to learn about what we’re doing and about the long-term effects our virtual assistants will have.

Hm, Captain Picard never said “please” either: “Tea. Earl Grey. Hot.”