Fake News Is an Unpleasant Symptom of a Bigger Problem

Watch out, fake news sites: Google and Facebook are cutting your purse strings.

After a story with fake 2016 election results became the #1 Google search result, the company has finally decided to take action against fake news sites. According to Reuters, Google says that from now on it “will restrict ad serving on pages that misrepresent, misstate, or conceal information about the publisher, the publisher’s content, or the primary purpose of the web property.” Meanwhile, “Facebook updated its advertising policies to spell out that its ban on deceptive and misleading content applies to fake news,” Reuters reports.

Facebook has a big problem with fake news sites, as The Guardian highlights. Fake news sites thrive on views, and Facebook is their main source of those views. As the owner of one site told the paper, “If your goal is straight page views [sic] then Facebook is the best investment of your time. It does drive more ‘clicks’ than posting on other platforms with an equal amount of followers.” And drive clicks it does: sites like these trade normal journalistic standards for virality. “The ability to write a clickbaity headline, toss in some user-generated video found on YouTube, and dash off a 400-word post in 15 to 30 minutes is a skill they don’t teach in journalism school,” one site owner told The Guardian. That format, while lacking in journalistic integrity, is ideal for viral sharing. Sites investigated by The Guardian averaged about 700,000 visitors a day through Facebook alone. By leveraging the platform and sharing their stories with other like-minded Facebook pages, they easily reach 7 or 8 million visitors per day, every one of whom generates ad income by landing on the site. Another site owner told The Guardian that those views “generate income of between $10,000 and $40,000 a month from banks of ads” alone.

So Facebook’s inability to differentiate between informative and misleading content allows misinformation to spread. But Facebook’s approach to misleading content is particularly problematic. After backlash earlier this year over how employees curating Trending Topics filtered out conservative news sites, Facebook has been loath to use any kind of content-filtering tools for fear of appearing biased, according to The Verge: “Facebook had developed a tool to identify fake news on its platform, but chose not to deploy it for fear it would disproportionately affect conservative websites and cause more right-wing backlash.”

By waiting in fear rather than searching for a better solution, Facebook allowed a large volume of misleading information about the 2016 presidential election and its candidates to spread unchecked. For a platform that 63% of its users consider their primary news source, according to Nieman Lab, that’s irresponsible. “Given the viral aspects of fake news, social networks and search engines were gamed by partisan bad actors intending to influence the outcome of the race,” as The Verge puts it.

Why does this matter? Because sharing on Facebook helped make this the first Google search result for the 2016 presidential election results:

Credit: Twitter

These numbers are wrong. At the time of writing, Clinton had won 47.68% of the popular vote with 62,414,338 votes; Trump had won 46.79% with 61,252,488 votes, according to CNN, The New York Times, Fox News, Politico, the BBC, and every other news site that reported on this election. No legitimate news organization in the country reported the numbers above, yet they are the first Google search result. I found them on Twitter, and despite hundreds of replies pointing out that they were wrong, people are still sharing and believing them.

The Washington Post made a concerted effort to track these numbers down. It reports that the false statistics came from a site called 70news.com, which got them from a tweet, which got them from a site called USA Supreme. The Post did more digging and found:

The source behind the “USA Supreme” website isn’t clear. It looks an awful lot like Prntly, a made-up news website we looked at earlier this year. Founded by a former convict named Alex Portelli, Prntly is part of the broad diaspora of websites that takes news about American politics, frames it in a pro-Trump way (often at the expense of accuracy) and then peppers the page with ads.

The Post continues, reporting that Portelli “denied involvement in USA Supreme, suggesting that it was the work of a group of young people in Macedonia.” There may be some truth to that, as The Guardian reports. Meanwhile, 70news.com has added a header to the top of its post-election numbers. The header attributes the false statistics to “twitter posts” and insists that “the popular vote number still need [sic] to be updated in Wikipedia or MSM [mainstream] media – which may take another few days because the liberals are still reeling and recovering from Trump-shock victory.”

That’s wrong: the mainstream media have been updating election results regularly as they come in, as The Post points out. “‘Alex’ is wrong; he’s hesitating to change the numbers,” the paper concludes.

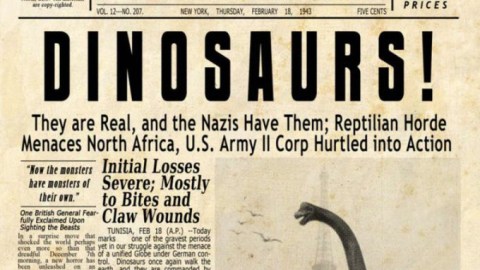

He is: here’s the Wikipedia page. Credit: Wikipedia

While Google and Facebook are taking steps to diminish the spread of this kind of misinformation, they are far from stemming it. As Reuters points out, “Google’s move does not address the issue of fake news or hoaxes appearing in Google search results… Rather, the change is aimed at assuring that publishers on the network are legitimate and eliminating financial incentives that appear to have driven the production of much fake news.”

Facebook’s reluctance to own its role as a major news platform is a problem, too. The issue has sparked heated internal debate about the future of the platform. Right now, according to The Guardian, Facebook uses a combination of algorithms and live screening to weed out content, much like Google. But until both platforms embrace their roles as news distributors, meaningful change is a long way off. In the meantime, we’ll all just have to double-check our Google results.

Columbia professor Tim Wu recently came to the Big Think studio to talk about the ways media companies harvest our attention and resell it to advertisers. There’s a gaping disparity between the way social media sites and media companies define “audience engagement” and the way good journalists do: to the former it means clicks that can be monetized; to the latter it means stoking deep thought that will gestate and be reborn as comments, emails, and conversation. As a recent study shows, 59% of links shared on Twitter are never even clicked. Reading headlines is a national pastime; reading articles is a rarity. And the worse of the two is the one that gets incentivized.

Fake news is just an unpleasant symptom of a larger problem that we really don’t know how to fix.