“Stereotyping” has become a dirty word, but as psychologist Sam Gosling explains, we all do it—and need to.

Question: Can stereotypes be helpful?

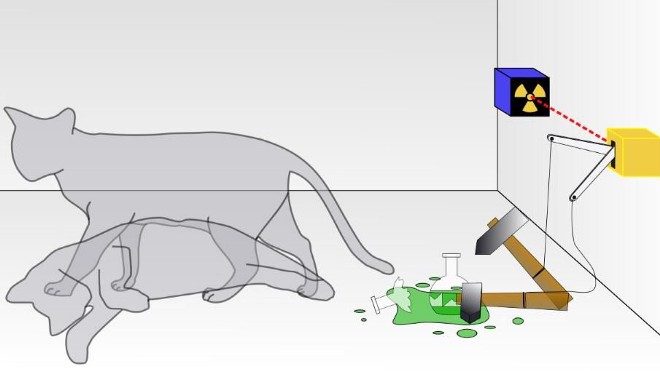

Sam Gosling: Yeah. Well, we have to use stereotypes because we don't have the time to treat every instance as though it's a completely new event. It's much more efficient to treat it as a member of a broader class of events about which we already have information. I've never sat on this chair before, and I didn't test it. So how did I know that it would be able to hold me and wouldn't just melt? There are all kinds of things I didn't know about this particular chair, but I made, you know, an evil, oppressive stereotype (yes, I'm using that phrase: evil, oppressive stereotype), and I just treated this chair like any other chair. And it turns out that was a pretty good stereotype. Assuming that this chair would be comfortable and that I could sit on it served as a pretty good guide to my behavior. Not only that, it took me a microsecond. I didn't have to check the chair beforehand.

So where stereotypes have a kernel of truth to them, they are useful. Of course, where we get into trouble is when we use stereotypes that don't have a kernel of truth to them, and that's often very hard to know. Some of our stereotypes turn out to have some validity and some don't, and we can't always know which are which. And even where a stereotype does have a kernel of truth, it may not be true for the right reasons. All kinds of horrible processes could have led to that stereotype being valid. So I'm not denying that some of these stereotypes, even if they're true, are indeed oppressive. Some really are. But from an information-processing standpoint, it's nonetheless useful to use them.

The other thing I want to say about stereotypes is that it makes sense to use them, but only as a first guess. It's also important to be able to update that guess with real information as you get it. That's what we find when people look at other people's spaces: they do use stereotypes when they go in. They make generalizations; they say, “Oh, okay, this space clearly belongs to a female, so I'm going to activate some of my stereotypes about differences between males and females.” That's a good place to start, but what's really important is that you're quick to abandon that first guess if other information comes along. That's the danger of stereotypes: we use them, then stick too closely to them, and we're unwilling to revise our impressions on the basis of new information. The other danger is that we assume a stereotype exists for some good reason, when there may be no good reason for its existence.

Recorded on November 6, 2009

Interviewed by Austin Allen