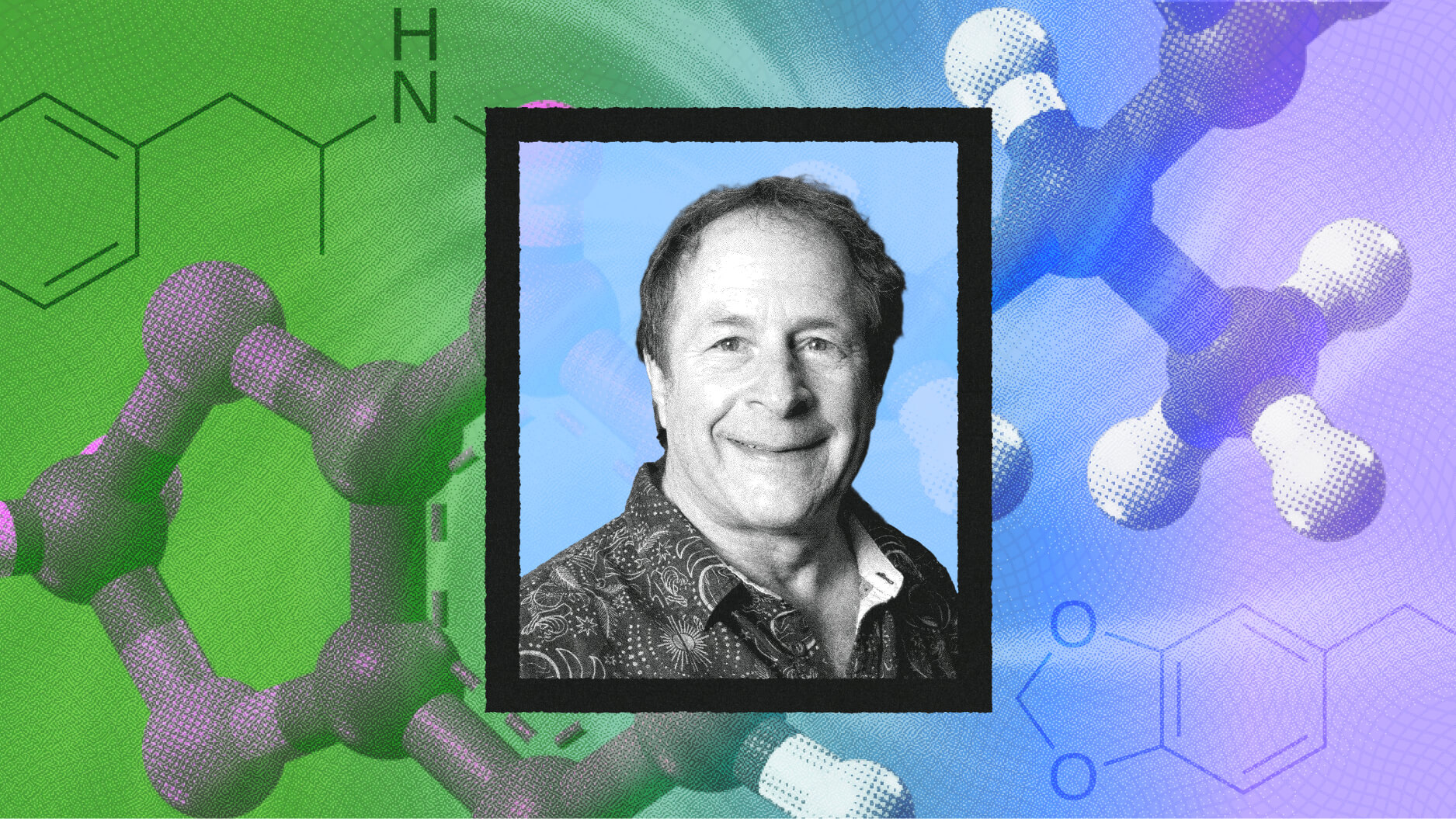

A conversation with neuroscientist Tony Zador.

Question: What is attention on a neurological level?

Tony Zador: Yeah, the way I think about attention is really as a problem of routing signals. We actually know a lot about how signals come in through your sensory apparatus, through your ears and your eyes. We know an awful lot about how the retina works and how the cochlea works. We understand how the signals travel up and eventually percolate up to the cortex; in the case of sound, they percolate up to the auditory cortex. We know a fair amount about how they look once they get there, and after that we kind of lose track of them, and we can’t really pick up the signal again until it’s coming out the other end. We know a lot about movement. We understand an awful lot about what happens when a nerve impulse gets to a muscle and how muscles contract. That’s been pretty well understood for 50 years or more.

So where we really lose track of the signals is after they enter the early parts of the sensory cortex, and we pick them up again, sort of, on the other side. Attention is one of the ways in which signals can find their destination, if you like. Think about it in terms of a really specific problem. Imagine that I ask you to raise your right hand when I say "go." Okay, so I say "go," and you raise your right hand. "Go," and you raise your right hand. Now let’s change it: I say "go," and you raise your left hand. "Go," and you raise your left hand. Somehow the same signal coming in through your ear is producing different activity at the other end. The signal on the sensory side had to be routed either to the muscles of your right hand or to the muscles of your left hand.
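As a toy illustration of this routing problem (not a model of any real circuit), the sketch below sends the same input, "go", to a different output depending on the instruction currently in force. `Rule` and `route` are hypothetical names introduced only for this example.

```python
from enum import Enum
from typing import Optional


class Rule(Enum):
    """Hypothetical task rule: the instruction the listener currently holds."""
    RAISE_RIGHT = "right hand"
    RAISE_LEFT = "left hand"


def route(stimulus: str, rule: Rule) -> Optional[str]:
    """Send one sensory input to a motor output chosen by the current rule.

    The stimulus carries no information about which hand to move;
    the destination is set entirely by the context (the rule).
    """
    if stimulus != "go":
        return None  # inputs the task does not care about are not routed anywhere
    return rule.value


print(route("go", Rule.RAISE_RIGHT))  # prints: right hand
print(route("go", Rule.RAISE_LEFT))   # prints: left hand
```

The point of the design is that the identical input produces different outputs, so the routing decision must live in the context, not in the stimulus.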

Attention is a special case of that general routing problem, which the brain faces all the time. When you attend to, let’s say, sounds rather than your visual input, or when you attend to one particular auditory input out of many, you’re selecting some subset of the inputs (in this case, those coming into your ears), subjecting them to further processing, routing them downstream, and doing stuff with them. How that routing happens: that’s the aspect of attention that my lab focuses on.

So the basic setup for the problem that I’m interested in is this: imagine that you’re at a cocktail party. There are a bunch of conversations going on, and you’re talking to someone, but there is all this distracting stuff going on on the sidelines. You can make a choice. You can focus on the person you’re talking to and ignore the rest. Or, as often happens, you find that the conversation you’re engaged in is not that interesting ("uh-huh, uh-huh, oh really, yeah, summer"), and you start focusing in on the conversation to your right. Your ability to focus in on that conversation while ignoring the others, and understanding how we do that, is the challenge of my research.

That problem actually has two separate aspects. One aspect is really a problem of computation, and it’s a challenge for basically any computer. We have a whole bunch of different sounds from a bunch of different sources, and they are superimposed, added together, at the level of the ears. Separating out the different threads of the conversation is typically pretty effortless for us, but it’s actually a surprisingly difficult task. Maybe 10 years ago, computers were already getting pretty good at speech recognition: in controlled situations and quiet rooms they were pretty good at recognizing even random speakers. But when people actually started deploying these systems in real-world settings, where there is traffic noise and whatnot, the algorithms broke down completely. That was sort of surprising at first, because this is the aspect that we as humans seem to have hardly any trouble with. Taking apart the different components of an auditory scene is what we’ve evolved for hundreds of millions of years to do really well. So that is one aspect of attention: one that happens almost before we’re even aware of it, where the auditory scene is broken down into its components.

The other aspect, the one we’re more consciously aware of, is where, out of the many components of this auditory scene, we select one and focus in on it. At this hypothetical cocktail party, we have the choice of focusing on the conversation we’re engaged in, or on this one, or on that one.

Question: How does sound travel from the ear to the brain?

Tony Zador: We actually know a lot about the early stages of auditory processing. Sound waves are propagated into a structure in your ear called the cochlea. Within that structure there are cells that are exquisitely sensitive to minute changes in pressure, and they are sensitive to those changes at different frequencies. So what your cochlea does is act as what is called a spectral analyzer: some cells are sensitive to low-frequency sounds, others to middle frequencies, and others to high frequencies, and each frequency band is coded separately along a set of nerve fibers. The signals then pass through a number of stages in your auditory system before they get to your cortex. What is interesting is that these early stages of processing a sound are, as you might imagine, very different from the stages of processing a visual scene. The stages I just described are designed for processing physical vibrations in the range, for a human, of 20 hertz to 20 kilohertz. Our eyes, by contrast, transduce not sound vibrations but light. The structure of the retina is also well understood: there are photoreceptors that pick up photons and transmit those signals. But what is interesting is that once those signals get processed, or if you like preprocessed, they end up in structures that look remarkably similar. They arrive in a structure called the thalamus: one part of the thalamus receives input from the auditory system, and another part receives input from the visual system, from the retina. From the thalamus the signals go to the cortex, and within the cortex they look very similar.
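The cochlea’s role as a spectral analyzer can be loosely illustrated with a discrete Fourier transform: a sound made of two superimposed tones is split into separate frequency "channels," each reporting how much energy the input carries at its own frequency. This is a mathematical analogy under simplifying assumptions (pure tones, one exact DFT bin per channel), not a model of cochlear mechanics; the function names and frequencies are illustrative choices.

```python
import math


def tone_mixture(freqs_hz, sample_rate, n_samples):
    """Sum of unit-amplitude sine waves: a toy pressure wave arriving at the ear."""
    return [
        sum(math.sin(2 * math.pi * f * t / sample_rate) for f in freqs_hz)
        for t in range(n_samples)
    ]


def channel_energies(signal, sample_rate, center_freqs_hz):
    """Energy at each center frequency, computed as a single DFT bin per channel.

    Each channel loosely stands in for one frequency-tuned place along the
    cochlea; frequencies should fit a whole number of cycles in the window.
    """
    n = len(signal)
    energies = {}
    for f in center_freqs_hz:
        w = 2 * math.pi * f / sample_rate
        re = sum(x * math.cos(w * t) for t, x in enumerate(signal))
        im = -sum(x * math.sin(w * t) for t, x in enumerate(signal))
        energies[f] = (re * re + im * im) / n
    return energies


# Two simultaneous tones (500 Hz and 2 kHz): 0.1 s sampled at 8 kHz.
sound = tone_mixture([500, 2_000], sample_rate=8_000, n_samples=800)
for f, e in channel_energies(sound, 8_000, [250, 500, 1_000, 2_000, 3_000]).items():
    print(f"{f:>5} Hz channel: {e:8.1f}")
```

The 500 Hz and 2 kHz channels each report an energy of about 200 (n/4 for a unit sine over 800 samples), while the other channels stay near zero: the superimposed input has been separated by frequency.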

So what seems to be the case is that there is a preprocessor on the auditory side, on the visual side, and actually in all your sensory modalities, that’s highly specialized for the kind of sensory input it handles, but that then converts the input into a sort of standard form that gets passed up to the cortex. So what we believe is that the mechanisms of auditory attention are probably not fundamentally different from the mechanisms of any other kind of attention, including visual attention.

Question: How do you study attention?

Tony Zador: There are a couple of choices. Ultimately what I’d like to understand is how attention works in a human, because I’m a human, but for doing experiments humans are in many ways not the ideal preparation; they’re not the ideal subjects. The reason, of course, is that we don’t have access to what is going on inside the human brain at the level of resolution one might like, and furthermore we don’t have the means of manipulating that activity within a human in a way that lets us probe what is really going on. So although the human has been, and remains, a really useful preparation for studying attention, if we want a finer-grained understanding we have to go to so-called non-human models of attention, and there are several. The dominant one is the primate model, the monkey, and the reason for studying monkeys, of course, is that they are fairly similar to humans in a lot of ways: their brains seem similar, and they’re evolutionarily quite close. But in practice there are also limitations with monkeys, so for my research we’ve actually moved to rodents, and there are a couple of advantages to studying attention in rodents.

The first advantage is that we can scale up and study dozens, potentially even hundreds, of rodents in parallel in a way that you can’t really do with monkeys. In practice, the way people work with monkeys is that they train a monkey for a year or two and then perform experiments on that monkey for many years, because monkeys are incredibly valuable. The information you can get from a monkey is really useful, but you can’t really do a sequence of experimental manipulations the way you can in other kinds of preparations. So with rodents, we focus on tasks that we can train rats and mice to perform. Our training time is typically a few weeks, and then we can explore what happens in their brains after they’ve learned to perform an attention task. On top of that, we can manipulate neural activity within a rodent’s brain at a level of precision that at this point we really can’t achieve in a monkey, and certainly not in a human. In humans we study neural activity in patches consisting of hundreds of thousands of neurons. In monkeys we can study single neurons, one at a time, but we can’t really manipulate whole parts of the circuit. In rodents we can now target subsets of neurons based on how they’re wired up, and that is what we’re able to do in the rodent preparation.

We’ve designed a special behavioral box so that we can train rodents to perform attentional tasks in parallel. The whole thing is computer-controlled. The starting point for all these tasks is a rat that has been trained to stick its nose into the center port of a three-port box. What you see is a view looking down on the box: the rat sticks its nose into the center port. That breaks an LED beam, which signals to a computer that, ah, the rat has entered the center port, and now we can program the computer to deliver whatever kind of stimuli we want: auditory, visual, or even olfactory (in fact, one of my colleagues uses it to present olfactory stimuli), but in our case the stimuli are auditory. In this example, the rat breaks the center beam, and that triggers the computer to present either a low-frequency sound or a high-frequency sound. When the computer presents the low-frequency sound, the rat goes to one of the side ports and gets a reward, a small amount of water. If he breaks the beam and the other sound is presented, he goes to the other port and gets his reward. As you can see, the rat really understands what he is supposed to do. He sticks his nose in and goes one way, sticks his nose in and goes the other way. He is going as fast as he can to pick up his rewards. Now, what you actually hear on this video is that the low-frequency and high-frequency sounds are both masked by some white noise. That is to make the task more challenging for the rat. The other thing is that the hearing range of a rat is shifted from that of a human: humans hear from about 20 hertz to about 20 kilohertz, while rats hear from about 1 kilohertz up into what is for us the ultrasonic, about 70 kilohertz. So the low-frequency sound for the rat is something near the upper end of what we hear, a few kilohertz (I think it’s five kilohertz), and the high-frequency sound in this case is beyond what we can hear. So what you actually hear is either white noise alone or white noise plus the low-frequency sound, and that is what you see.
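The trial logic of this two-port sound discrimination can be summarized as a small simulation. Only the roughly 5 kHz low tone comes from the interview; the high-tone frequency, which side port pays out for which tone, and all names here are placeholders, and the white-noise masking is left out. The "rat" is simply a function from the presented tone to a port.

```python
import random
from dataclasses import dataclass

# Placeholders: the interview mentions ~5 kHz for the low tone; the high tone
# (above human hearing) and the tone-to-port assignment are assumed here.
LOW_TONE_HZ = 5_000
HIGH_TONE_HZ = 30_000
REWARD_PORT = {LOW_TONE_HZ: "left", HIGH_TONE_HZ: "right"}


@dataclass
class Trial:
    tone_hz: int
    choice: str
    rewarded: bool


def run_trial(choose_port, rng: random.Random) -> Trial:
    """One trial, starting when the rat's nose breaks the center-port beam.

    choose_port stands in for the rat: it maps the presented tone
    to "left" or "right".
    """
    tone = rng.choice([LOW_TONE_HZ, HIGH_TONE_HZ])  # computer picks the stimulus
    choice = choose_port(tone)                       # rat runs to a side port
    rewarded = choice == REWARD_PORT[tone]           # water only at the correct port
    return Trial(tone, choice, rewarded)


def percent_correct(trials) -> float:
    return 100 * sum(t.rewarded for t in trials) / len(trials)


def trained_rat(tone: int) -> str:
    return REWARD_PORT[tone]  # has learned the tone-to-port rule


def naive_rat(tone: int) -> str:
    return random.choice(["left", "right"])  # ignores the sound entirely


rng = random.Random(0)
print(percent_correct([run_trial(trained_rat, rng) for _ in range(200)]))  # 100.0
print(percent_correct([run_trial(naive_rat, rng) for _ in range(200)]))
```

The trained chooser is always rewarded, while the naive chooser should land somewhere around chance; the gap between the two is what shows that the sound is guiding behavior.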

So how do we use this to study attention? This task is the starting point for all our tasks; it just demonstrates that the rat can take sound stimuli and use them to guide his behavior left and right. We have more complicated tasks in which the rat attends to a particular aspect of the sound stimulus. One of the studies we’ve done recently concerns the ability of a rat to focus in on particular moments in time. In other words, we train the rat to guide his temporal expectation of when a target occurs. In the other example, what you see is that the rat sticks his nose in, he is presented with a series of distractor tones, and then he goes either left or right depending on whether a target sound, a warble, is high frequency or low frequency. What makes it an attentional task is that we’ve trained the rat to expect the target either early in the trial, after one or two of the distractors, or late in the trial, after about ten or fifteen of the distractors, that is, after about a second or a second and a half in the center port. In that way we can guide the moment at which he expects the target to occur, and the idea is that he ramps up his attention for the target sound. What we’ve found is that when he ramps up his attention in this way, he responds to the target faster than if the target comes unexpectedly. That is something we find in humans as well; in fact, one of the hallmarks of attention is an improvement in performance, as measured by either speed or accuracy. The other thing we found is that there are neurons in the auditory cortex whose activity is specifically enhanced when he expects the target, compared with when the same target is presented at an unexpected moment. So we think we are beginning to see the neural correlates of the enhanced performance we’ve observed.
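The timing manipulation can be sketched as a trial generator. Several assumptions here are not from the interview: distractors arriving roughly every 100 ms (inferred from "ten or fifteen" distractors taking "about a second, second and a half"), late targets following 10 to 15 distractors, and 10% of trials delivering the target at the unexpected time. The hypothetical `expectation_effect` helper only shows how the reaction-time comparison would be computed from recorded data; it produces no data itself.

```python
import random
from dataclasses import dataclass
from statistics import mean

# Assumed parameters (illustrative, not reported values).
DISTRACTOR_INTERVAL_S = 0.1        # ~100 ms per distractor tone
EARLY_COUNTS = (1, 2)              # target after one or two distractors
LATE_COUNTS = tuple(range(10, 16)) # target after ten to fifteen distractors
P_UNEXPECTED = 0.1                 # fraction of trials with a surprise timing


@dataclass
class Trial:
    block: str          # "early" or "late": when the rat has learned to expect the target
    n_distractors: int  # distractor tones played before the target warble
    expected: bool      # False if the target arrives at the other block's time

    @property
    def target_onset_s(self) -> float:
        return self.n_distractors * DISTRACTOR_INTERVAL_S


def make_trial(block: str, rng: random.Random) -> Trial:
    """Draw one trial; occasionally place the target at the unexpected time."""
    expected = rng.random() >= P_UNEXPECTED
    timing = block if expected else ("late" if block == "early" else "early")
    counts = EARLY_COUNTS if timing == "early" else LATE_COUNTS
    return Trial(block, rng.choice(counts), expected)


def expectation_effect(trials, reaction_times_s) -> float:
    """Mean RT on unexpected trials minus mean RT on expected trials.

    A positive value means responses were faster when the target was expected.
    """
    exp = [rt for t, rt in zip(trials, reaction_times_s) if t.expected]
    unexp = [rt for t, rt in zip(trials, reaction_times_s) if not t.expected]
    return mean(unexp) - mean(exp)


rng = random.Random(0)
for t in [make_trial("early", rng) for _ in range(5)]:
    print(t.block, t.n_distractors, t.expected, f"{t.target_onset_s:.1f} s")
```

Keeping the same target sound and varying only its timing relative to the learned expectation is what lets a speed difference, or a difference in cortical responses, be attributed to expectation rather than to the stimulus.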

Question: How could research on attention help treat autism?

Tony Zador: Yeah, so ultimately what we’re interested in understanding are the neural circuits underlying attention. Autism, we think, is in large part a disorder of neural circuits. The cause of the disruption is partly genetic and partly environmental, but we think that the manifestation of those genetic and environmental causes is a disruption of neural circuits. In particular, there is some reason to believe that it is, at least in part, a disruption of long-range neural circuits between the front of the brain and the back of the brain. And those are the kinds of neural pathways that we think might be important in guiding attention.

Now, one thing that pretty much anyone who has worked with autistic kids has found is that in many of them there is a disruption of auditory processing, and especially of auditory attention. So one of the ongoing projects in my lab is to take mouse models of autism that other people have developed: mice in which genes that we think cause autism when disrupted in humans have been disrupted. We then have a mouse whose neural development is perhaps perturbed in the same way it would be perturbed in a human with autism, and we can ask what happens to its neural circuits and how that disruption of neural circuits affects auditory attention. These are ongoing studies in my lab right now. We don’t have final results yet, because these mice have only very recently become available, but we’re very optimistic that by understanding how autism affects these long-range connections, and how those long-range connections in turn affect attention, we’ll gain some insight into what is going on in humans with autism.

Recorded August 20, 2010

Interviewed by Max Miller