Mechanized minds: AI’s hidden impact on human thought

- The intense focus on what machines can or cannot do has led us to sidestep an equally pressing question: How might the widespread use of AI transform human thought? Will it become more machinelike?

- The philosopher Jiddu Krishnamurti warned that as machines replicate our cognitive abilities, humanity risks losing touch with deeper, non-mechanical forms of consciousness.

- The real question isn’t whether AI will become conscious, but whether humans can cultivate intelligence beyond the predictable, machinelike patterns of thought.

Since the 1950s, discussions about AI have largely revolved around a big, tantalizing question: What can machines do, and where might they hit a wall? Will they ever truly think, understand, or maybe even become conscious? Could they reach the so-called “heights of human intelligence”? And then there’s that shadowy question looming in the background: Would they turn on us if they could, becoming some kind of rival species? This fear has fueled imaginations for years. The philosopher Nick Bostrom, for example, envisions a future where machine superintelligence could control our destiny, much as human power now holds sway over the survival of gorillas.

With the rise of generative AI in 2022, the prospect of an artificial mind feels suddenly close to home. As historian Yuval Noah Harari notes, generative AI has “hacked the operating system of our civilization” — language. What if, in this new world, AI starts to generate not just words but beliefs, myths, and maybe even a new kind of culture that could completely shift the course of human history? On the other hand, most philosophers of AI have been quick to rain on this parade, pointing out that while AI can put on a great show imitating and simulating human minds (what the famed philosopher John Searle dubbed “weak AI”), it simply doesn’t — and can’t — have a mind of its own (“strong AI”).

However, this intense focus on what machines can or cannot do has led us to sidestep an equally pressing question. While we’re busy wondering whether machines will ever become conscious, we rarely stop to ask: What happens to us? How does it change us when we realize that even today’s mind-mimicking “weak AI” can already take over cognitive tasks and abilities we once thought were exclusively human?

This kind of close encounter happened to philosopher Sven Nyholm. One day, while experimenting with ChatGPT, he typed in, “What would Martin Heidegger think about the ethics of AI?” To his surprise, the software quickly produced a “fairly impressive short essay on the topic.” Nyholm candidly admitted, with a hint of amusement, that “ChatGPT did a better job than at least some — maybe even many — of the students in my classes.” He even confessed that the AI’s response might have been better than what he could come up with on the spot.

This anecdote, published in 2023, came at a very early stage in the evolution of large language models (LLMs). In just a year or two, we may find it nearly impossible to tell the difference between a paper written by a university professor and one crafted by a skillful, mindless AI. If machines can rapidly weave together complex ideas from diverse schools of thought to uncover conceptual connections, will this type of academic activity still be deemed intelligent, and will the knowledge we boast of having, our proud capacity to activate the brain’s net of associations and comparisons, retain any significance?

This is just one glimpse of how Promethean AI is snatching the fire from humanity’s gods, pointing toward a philosophical, psychological, and existential shake-up we’re all too happy to overlook. But would this crisis only hit us if a “strong AI” reigned supreme? Not likely. Even today’s so-called weak AIs — which can do statistically what we do semantically, achieving the same results without real intelligence or self-awareness — are already stirring the pot. Imagine a human painter insisting they felt every brushstroke deeply: Does it matter if software can produce something equally stirring without a single ounce of feeling?

A challenge to human thought

Fortunately, in the past few years, some philosophers have begun to sense the looming earthquake of this crisis of meaning. Discussions about how AI might influence our ability to live the good life and experience personal fulfillment are just beginning to take baby steps. AI ethics has expanded beyond simply figuring out how to use these technologies in moral or valuable ways. Yet, even these emerging conversations often miss the bigger picture, focusing mostly on whether AI will render meaningful human activities, like work, obsolete, or whether it might bring new avenues of meaning, such as virtual realities, into existence. But there’s a deeper, unasked question that lingers in the background, one introduced by the unconventional thinker Jiddu Krishnamurti in the early 1980s: “If the machine can take over everything man can do, and do it still better than us, then what is a human being, what are you?”

When Krishnamurti (1895–1986) asked this question, he was an 85-year-old sage who’d spent nearly 60 years probing the mysteries of the mind, consciousness, and the need for a psychological revolution. He wasn’t your typical philosopher; born in India, Krishnamurti carved his own path as an uncompromising thinker. He turned his back on organized religion and ideological systems, believing true freedom could only come from within. For him, the key to a meaningful life lay in self-inquiry and a kind of education that would spark independent thinking and deep self-awareness. Krishnamurti’s philosophy wasn’t about following a set of beliefs — it was about each person’s fearless dive into life’s big, unsettling questions.

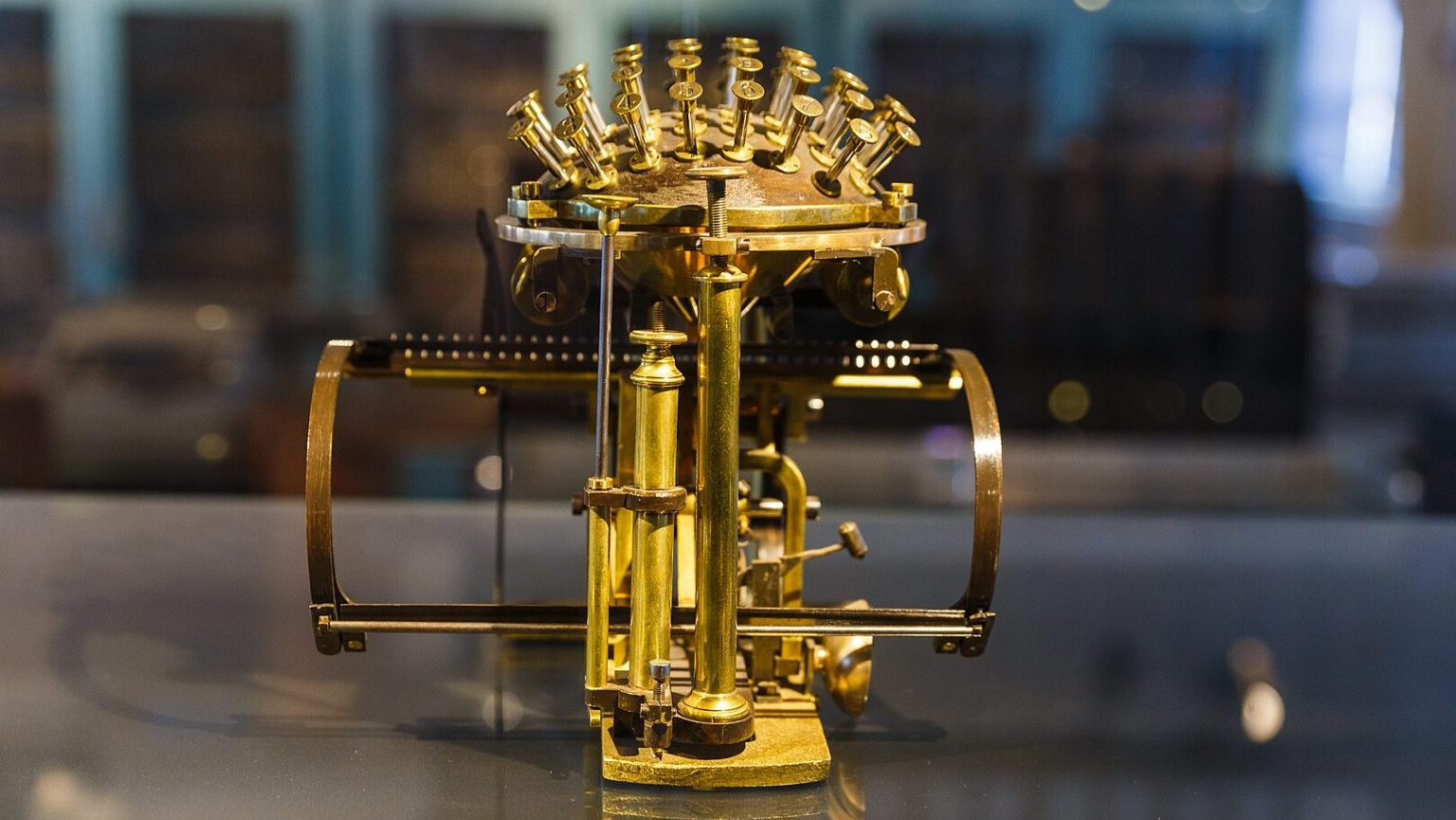

The year 1980 was a turning point for the philosophy of artificial intelligence, even while AI itself was still finding its feet. Philosopher John Searle introduced his now-famous Chinese Room thought experiment, arguing that machines could produce impressive results without a shred of genuine understanding. Around this time, Krishnamurti, too, encountered the world of AI through conversations with computer scientists. But what struck him wasn’t the technology’s marvels; it was the disturbing challenge it posed to the human mind — the idea that machines might one day take over its unique processes and faculties. This question got under his skin and became one of his deepest concerns in his final decade. He felt a powerful need to bring this issue to his audiences, seeing in it an urgent crisis that demanded thoughtful attention.

Krishnamurti, ever the visionary, laid out a series of bold predictions — almost prophetic warnings — about a future where humans might drift into obsolescence. With his characteristic intensity, he sketched a vivid scene of a not-so-distant world — “in about ten, fifteen years” — where AI would eclipse human intelligence entirely, reducing us to a society of idle zombies, fixated on leisure and entertainment. Machines, he predicted, would dictate how we live, diagnose us better than any doctor, translate books, compose symphonies to rival Beethoven, create groundbreaking philosophies, and even conjure new gurus and gods for us to follow.

While this vision hasn’t fully come to pass, and human culture remains alive and kicking 44 years later, recent leaps in machine learning — especially with deep learning — echo some of Krishnamurti’s projections. Large language models now spin out humanlike text, essays, poems, and even art and music, while AI ventures into drug design and scientific discovery. In many ways, the world he foresaw is beginning to sound a little too familiar.

The mechanized mind

Krishnamurti’s apocalyptic vision has surprising echoes in Yuval Noah Harari’s idea of AI as a culture-creator. But there’s one big difference: Krishnamurti wasn’t as caught up in social worries like disinformation. His life’s mission was all about unmasking the mind’s mechanical habits. To him, the idea that machines could mimic human thought wasn’t just intriguing — it was a revelation with deep psychological and spiritual punch. If our minds can be recreated by a machine, then maybe our thinking is more robotic than we’d like to admit. And that’s a reality that shakes us right down to our human core.

It’s not that Krishnamurti saw the mind as anything close to a computer. While a computational functionalist might argue that building a mind is as simple as building a machine, Krishnamurti believed our minds are so much more. But he worried we were selling ourselves short, letting our minds get stuck in mechanical routines like memory and knowledge-crunching. A mind that only works this way, he warned, would be easily copied, even replaced by a machine. So, the real question isn’t whether AI will develop humanlike minds — it’s whether we’re slipping into machinelike minds ourselves. “The computers are challenging you,” he said, “and you have to meet the challenge.” To him, meeting it meant doing everything we could to make our minds different from artificial intelligence — a call to rise above the routines that risk turning us into reflections of our own machines.

Krishnamurti didn’t see intelligent machines as some strange, rival species ready to replace us. To him, machines were just an extension of the human mind — a brain built in our own image, with human and artificial thought as mirror reflections. When you think about it, AI developers aren’t pulling ideas out of thin air; they’re crafting AI based on assumptions about human thought, intelligence, and creativity. So, when we look into this artificial mirror and find our own thinking patterns staring back, it’s a wake-up call: Many of the abilities we once thought needed consciousness, intelligence, or creativity can actually be handed off to machines. Consciousness itself doesn’t set us apart if we reduce the mind to computation. In truth, the line between brains and computers gets blurrier by the day.

In a way, Krishnamurti turns Alan Turing’s famous “imitation game” on its head. In his 1950 paper, Turing proposed a thought experiment to see whether a machine could mimic human verbal behavior by responding meaningfully to whatever is asked. Picture it: a woman and a computer, each hidden away in separate rooms, while a human judge, unaware of who’s where, poses questions via email (or, in Turing’s day, teletype). If the judge can’t do better than a coin toss when guessing who’s human, the computer has passed the test. But Krishnamurti’s interest isn’t in whether machines can talk like humans; he’s far more concerned with humans talking like machines. In other words, if machines could indeed pass the Turing test, Krishnamurti saw this not as proof of machine intelligence but as a reflection of the mechanical nature of human thought itself.

We like to imagine our thinking is, at least sometimes, intelligent and creative. But Krishnamurti didn’t see thought in this flattering light. He argued that our vulnerability to imitation stems from our heavy reliance on thinking itself. To him, thinking was the mind’s computational habit: a mechanical cycle that “starts from experience, which becomes knowledge stored up in the cells of the brain as memory; then from memory, there is thought.” This chain reaction of experience, memory, and knowledge gets reinforced through repetition: We act and react based on this inner stockpile, learn from those actions and reactions, and gather more experience, memory, and knowledge. It’s a purely mechanical loop, driven by repetition. In Krishnamurti’s view, thinking boils down to “memory responses,” a kind of inner programming that’s strikingly similar to how computers work. Computers, after all, are brain-like warehouses of information, learning and self-correcting as they go. They run this cycle without truly “thinking” and could eventually outperform us at it.

So, can we at least take comfort in knowing our real, physical experiences are one-of-a-kind? After all, no ultra-smart machine can slip into human form, fall in love, or look up at the stars and sigh, “What a marvelous night!” But if we think our embodied consciousness gives us a leg up, Krishnamurti would quickly burst that bubble. He argued that even our experiences are clouded by reactions rooted in memory. Picture this: As I admire a beautiful evening star, my mind immediately jumps to familiar images, comparing and labeling the moment rather than letting me experience it as something new. Thought creates symbols and mental snapshots — an Instagram-perfect moment, perhaps — and gets trapped, seeing the present only through yesterday’s lens.

This “image-making,” as Krishnamurti called it, pops up in all corners of life, turning our reactions into routines. It’s not just our political or religious convictions that loop us back to old patterns; it’s there in our cravings for food, sex, and entertainment, too. Take something like pornography — people may be drawn to it because of pleasurable images from past experiences, yet every exposure just adds to the mental library, feeding a cycle of conditioned responses. So, Krishnamurti cautions us against finding comfort in the sensory limitations of machines because, in truth, the mechanical mind is everywhere, quietly draining the depth from human experience.

Krishnamurti warns that our ability to truly experience life will only fade further. As smart machines and robots take over more of our thinking, the brain risks becoming lazy, unstimulated, and, frankly, bored. In a world where work and struggle are things of the past, our experiences might dwindle to nothing more than a search for entertainment and pleasure — a cycle of reacting to one shiny distraction after another. When we hit that point, Krishnamurti says, we’ll be faced with two paths: We either surrender to nonstop amusement and slip into a zombielike existence, or we decide to keep our minds sharp by waking up to a higher consciousness.

Krishnamurti’s alarm bell

Krishnamurti’s advice for facing the challenge of AI is simple but profound: Resist the lure of the entertained mind and keep your mental gears turning by diving into the “vast recesses of one’s being.” The optimists among us might think that the brain would naturally leap to higher pursuits once freed from routine. But Krishnamurti is less hopeful. He doubts that a brain conditioned to mechanical thought would suddenly spring to life without some serious effort. That’s why he insists we actively engage our minds, a practice that sets us apart from machines, and explore activities and capacities that go beyond mere thinking. For Krishnamurti, mind and mechanical thought are worlds apart. And when he talks about exercising the brain, he’s not referring to crossword puzzles or sudoku. He’s pointing to a human mind with an immense, even infinite capacity — one that remains unknown as long as it’s bogged down by knowledge, specialization, and material concerns.

This is Krishnamurti’s alarm bell: In an AI-ruled world, any human ability we neglect will start to shrivel up. Plenty of philosophers have made bold arguments to preserve the mind’s special status, unmatched consciousness, and natural intelligence. But Krishnamurti wasn’t interested in viewing consciousness as some fixed, unshakable trait. Instead, he saw the rise of AI as a challenge to view consciousness as a skill — a potential we rarely exercise. For him, the real task isn’t to protect the mind as it is, but to stretch it, to cultivate certain hidden faculties. Consciousness, he insisted, is not just a given; it’s a possibility waiting to be fulfilled.

This shift flips the usual debate on its head: Instead of asking whether machines will ever become conscious, we might ask whether humans can become conscious enough to outgrow the “artificial intelligence” both inside them and in the machines around them. Krishnamurti also questioned whether thought, in its inherently mechanical nature, could ever produce real intelligence. After all, if our thinking operates like a machine, can it truly be called intelligent? For Krishnamurti, AI demands a fresh look at intelligence itself. If we agree that true intelligence can’t be mechanical, then maybe it’s time to define intelligence as something beyond what any computer could imitate or simulate.

According to Krishnamurti, the ultimate response to super-intelligent machines is the cultivation of a genuinely intelligent mind — a mind guided by non-thought intelligence. He envisions this mind as one that isn’t rooted in memory. While most of us engage with the world through layers of past experiences and stored-up knowledge, the intelligent mind uses knowledge only “when necessary” and, for the most part, doesn’t rely on the familiar. It places little value on the mechanical processes of memory — yesterday’s thoughts or tomorrow’s plans — and strives to stay as open and uncluttered as possible. Beyond essential practical knowledge, this mind releases the day’s weight each night, clearing the slate “as if dying at the end of the day.” The result is a mind that is spontaneous and alive. For Krishnamurti, this non-mechanical individual would see themselves as “something that is changing all the time,” discovering a fresh, new self with each dawn.

The first crucial step toward cultivating a truly intelligent mind, and moving away from the automated thinking of machines, is to engage in what philosophers call “second-order cognition” or reflective self-awareness, recognizing the mind’s programmed patterns. Fortunately, the mind and mechanical thought aren’t the same, which means we have the ability to notice when our minds are caught in cycles of experience–memory–knowledge. Though it may feel uncomfortable, Krishnamurti sees this recognition as essential: Understanding the machinelike quality of thought, he says, is the “very source of intelligence,” the spark of a unique, deeper awareness.

Krishnamurti doesn’t offer a full set of answers, but what he does suggest is intriguing enough to get us asking the right questions. For him, the question itself is more powerful than any answer. He believed that by simply contemplating what true intelligence really is, we begin to awaken it. And this awakening, he says, is what ultimately allows us to distinguish our own minds from the artificial intelligence that surrounds us.