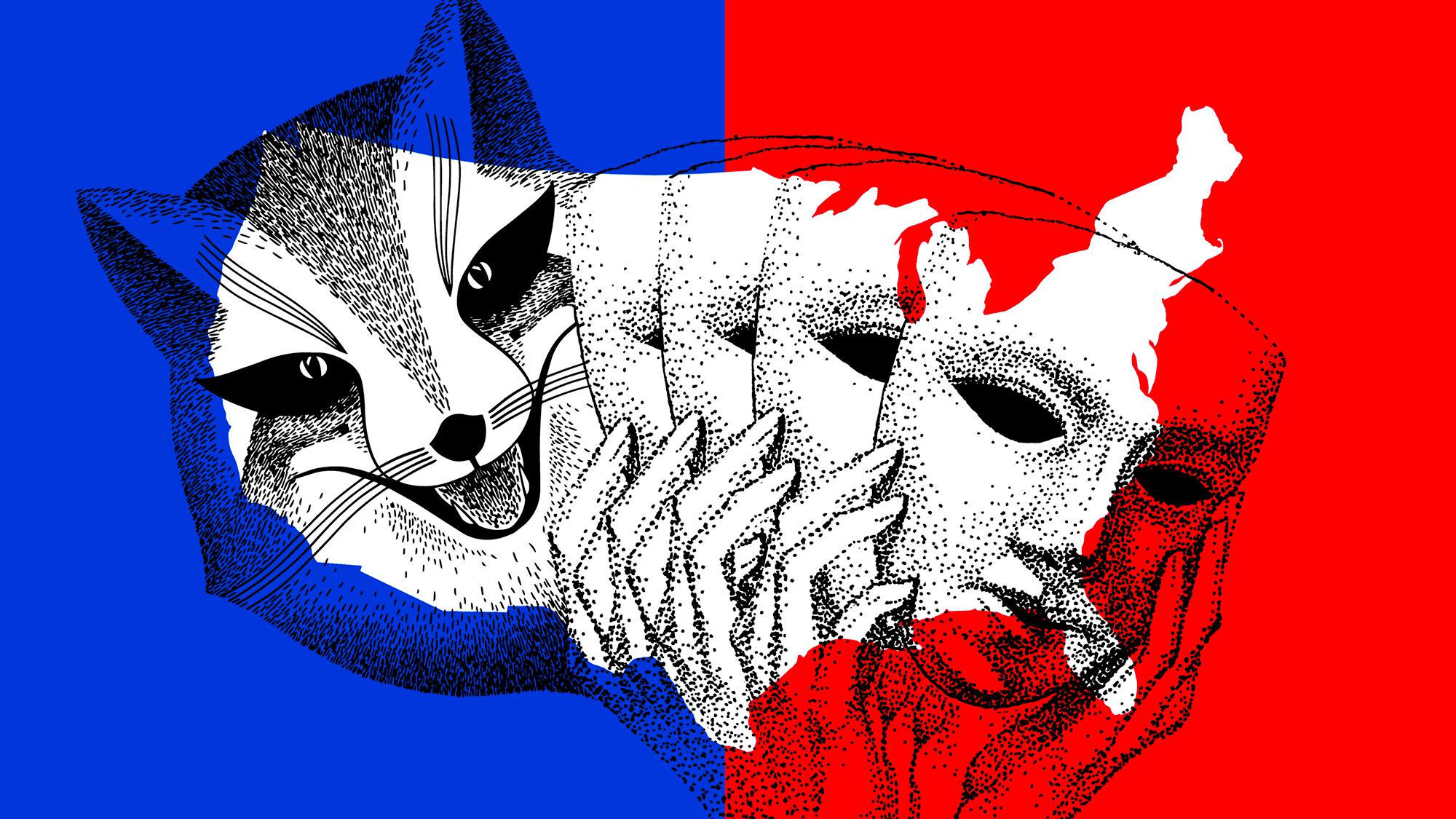

Scientific certainty survival kit: How to push back against skeptics who exploit uncertainty for political gain

The mathematician Kurt Gödel was obsessed by the fear that he would die by poisoning. He refused to eat a meal unless it was prepared by his wife, the only person he trusted. When she fell ill and was sent to hospital, Gödel died of starvation.

His death is sad, but also ironic: The man who discovered that even logical systems are incomplete — that some truths are unprovable — died because he demanded complete proof that his food was safe. He demanded more of his lasagne than he did of logic.

“Don’t eat unless you are 100 per cent certain your food is safe” is a principle that will kill a person as certainly as any poison. So, in the face of uncertainty about our food we take precautions and then we eat — knowing there remains the slimmest chance an unknown enemy has laced our meal with arsenic.

The example of Gödel teaches us a lesson: sometimes the demand for absolute certainty can be dangerous and even deadly. Despite this, demands for absolute or near certainty are a common way for those with a political agenda to undermine science and to delay action. Through our combined experience in science, philosophy and cultural theory, we are acquainted with these attempts to undermine science. We want to help readers figure out how to evaluate their merits or lack thereof.

A brief history of certainty

Scientists have amassed abundant evidence that smoking causes cancer, that the climate is changing because of humans and that vaccines are safe and effective. But scientists have not proven these results definitively, nor will they ever do so.

Oncology, climate science and epidemiology are not branches of pure mathematics, defined by absolute certainty. Yet it has become something of an industry to disparage the scientific results because they fail to provide certainty equal to 2+2=4.

Some science skeptics say that findings about smoking, global warming and vaccines lack certainty and are therefore unreliable. “What if the science is wrong?” they ask.

This concern can be valid; scientists themselves worry about it. But carried to excess, such criticism often serves political agendas by persuading people to lose trust in science and avoid taking action.

Over 2,000 years ago Aristotle wrote that “it is the mark of an educated person to look for precision in each class of things just so far as the nature of the subject admits.” Scientists have agreed for centuries that it is inappropriate to seek absolute certainty from the empirical sciences.

For example, one of the fathers of modern science, Francis Bacon, wrote in 1620 that his “Novum Organum,” a new method or logic for studying and understanding natural phenomena, would chart a middle path between the excess of dogmatic certainty and the excess of skeptical doubt. This middle path is marked by increasing degrees of probability achieved by careful observation, skilfully executed tests and the collection of evidence.

To demand perfect certainty from scientists now is to be 400 years behind in one’s reading on scientific methodology.

A certainty survival kit

It can be difficult to distinguish between the calls of sincere scientists for more research to reach greater certainty, on the one hand, and the politically motivated criticisms of science skeptics, on the other. But there are some ways to tell the difference: First, we highlight some common tactics employed by science skeptics and, second, we provide questions readers might ask when they encounter doubt about scientific certainty.

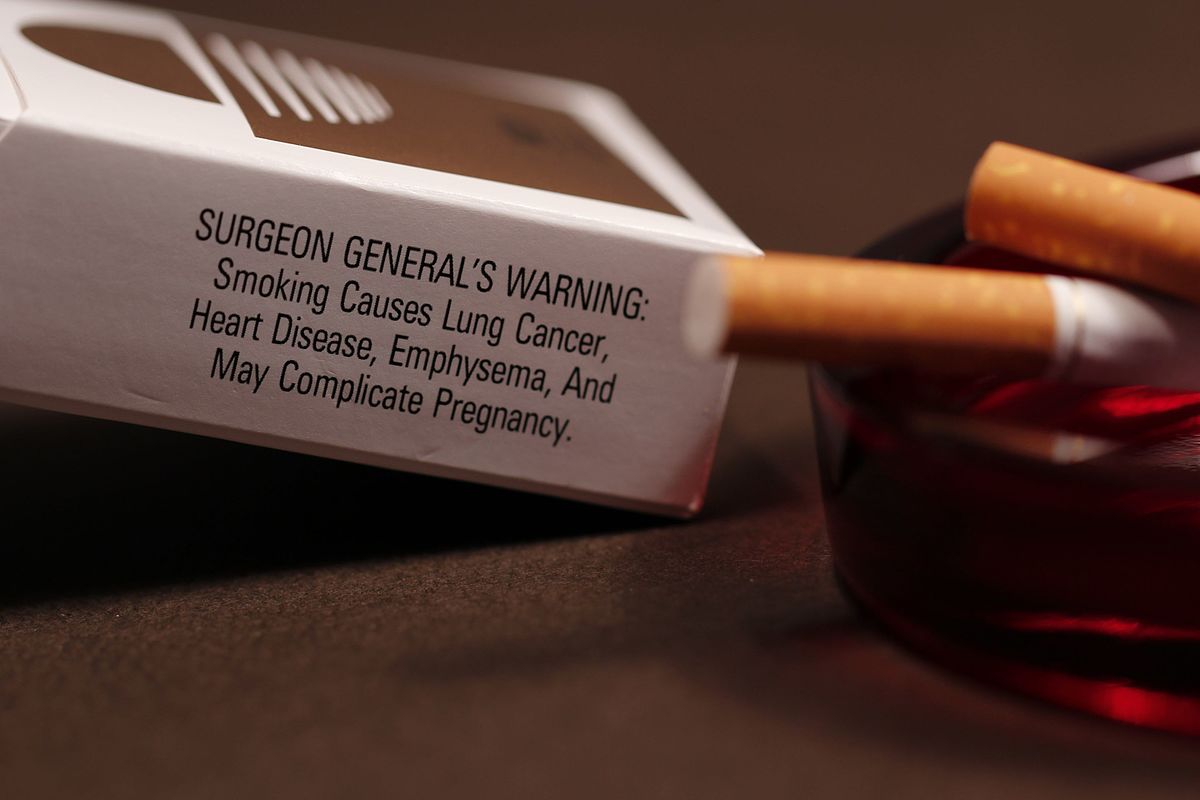

One common tactic is the old “correlation does not equal causation” chestnut. The tobacco industry used it to challenge the link between smoking and cancer in the 1950s and ’60s.

Smoking is merely correlated with cancer, the tobacco industry and its representatives argued; it does not necessarily cause cancer. But these critics left out the facts that the correlation is very strong, that smoking precedes cancer and that other potential causes are unable to account for the correlation.

In fact, the science linking smoking and lung cancer is now quite clear given the decades of research that produced volumes of supporting evidence. This tactic continues to be a mainstay of many science skeptics even though scientists have well-tested abilities to separate simple correlation from cause and effect relationships.

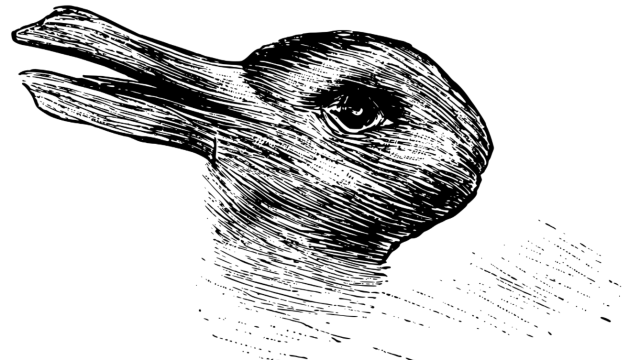

Another tactic argues that science is unable to prove anything positive, that science only tests and ultimately falsifies theories, conjectures and hypotheses. And so, skeptics say, the real work of science is not to establish truths definitively, but to refute falsehoods definitively. If this were true, scientific claims would always be “underdetermined”; that is, whatever evidence is available may not be sufficient to determine whether we believe something to be true.

On this view, for example, science could never prove true the claim that humans are warming the planet. But while science may fall short of complete proof, scientists nevertheless amass such great evidence that they render their conclusions the most rational among the alternatives.

Science has moved past this criticism of underdetermination, which rests on an outdated philosophy of science made popular by Karl Popper early last century, according to which science merely falsifies, but never proves. Larry Laudan, a philosopher of science, wrote an influential 1990 essay, “Demystifying underdetermination,” that shows that this objection to scientific methodology is sloppy and exaggerated.

Scientists can conclude that one explanation is more rational than competing claims, even if they cannot prove their conclusions through demonstration. Extensive and varied lines of evidence can collectively lead to positive conclusions and allow us to know with a high level of certainty that humans are indeed warming the planet.

Scientists can be the target too

Another way to drum up uncertainty about what we know is through attacks on scientists. Personal attacks on public health officials during the ongoing pandemic are a prime example. These attacks are often framed more broadly to implicate scientists as untrustworthy, profit-seeking or politically motivated.

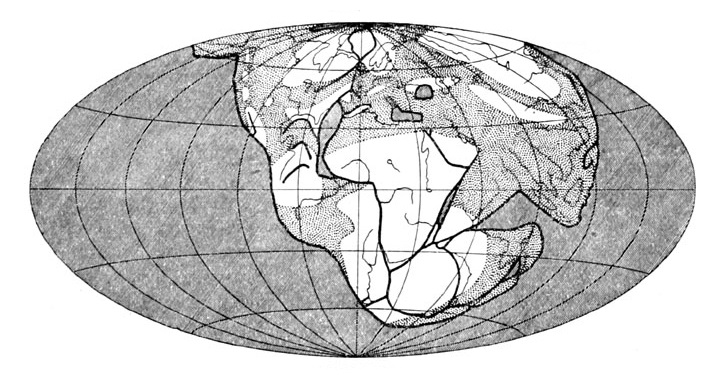

For example, critics sometimes point out that consensus among scientists is no guarantee of truth; in other words, scientists are sometimes wrong. One well-known example involves continental drift, the idea proposed by geophysicist Alfred Wegener, which the scientific community largely dismissed for several decades. This consensus rapidly shifted in the 1960s as evidence mounted in support of continental drift and the theory of plate tectonics took shape.

While scientists may use flawed data, suffer from a lack of data or sometimes misinterpret the data that they have, the scientific approach allows for the reconsideration and rethinking of what is known when new evidence arises. And while highlighting the occasional scientific mistake can create sensational headlines and reduce trust in scientists, the reality is that science is transparent about its mistakes and generally self-correcting when these issues arise. This is a feature of science, not a bug.

Being mindful about certainty

When reading critiques that inflate the uncertainty of science, we suggest asking the following questions to determine whether the critique is being made in the interest of advancing science or protecting public health, or whether it is being made by someone with a hidden agenda:

- Who is making the argument? What are their credentials?

- Whose interests are served by the argument?

- Is the critique of science selective or focused only on science that runs against the interests represented by the speaker?

- Does the argument involve any self-critique?

- Is the speaker doubting the existence of the problem? Or asking for delay in action until certainty is obtained? Who stands to benefit from this delay?

- Does the speaker require a high level of certainty on the one hand, but not on the other? For instance, if the argument is that the safety of a vaccine is not sufficiently certain, what makes the argument against its safety sufficient?

- Has the argument made clear how much uncertainty there is? Has the speaker specified a threshold at which point they would feel certain enough to act?

A friend of ours recently encountered a vaccine skeptic who articulated their problem this way: “I don’t know what’s in it.” In fact, we do know what is in vaccines, as much as we can know for certain what is in anything else we put in our bodies. The same question can be fruitfully asked of any argument we put in our minds: “Am I sure I know what’s in it?”

This article is republished from The Conversation under a Creative Commons license.